In this article, I'm going to walk you through how to set up a simple event notification workflow in AWS using Terraform. It covers the Terraform resource definitions, the relationships among the different components, and some small but critical configurations that don't show up in the diagram below. It focuses on the blockers I hit during the setup and how to address them.

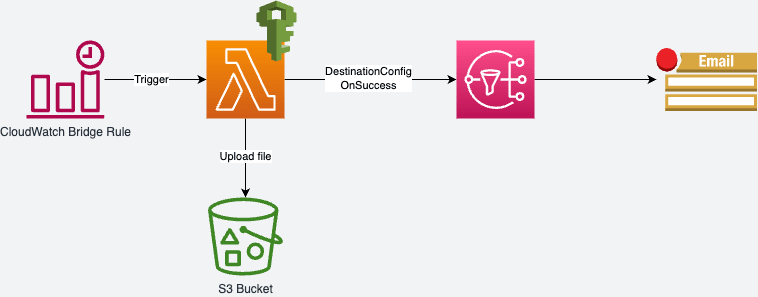

The architecture diagram contains the following components:

- A CloudWatch Events (EventBridge) rule that triggers the downstream Lambda function on a schedule.

- A Lambda function that performs the core processing and computing task.

- (Optional) An S3 bucket that stores the output of the Lambda function.

- An SNS topic that receives a message when the Lambda function executes successfully.

- An email subscription on the SNS topic that delivers the message as an email notification.

The workflow fits many common real-world scenarios. For example, suppose you have a small task that needs to run periodically and produces an Excel file. You want to be notified as soon as the task completes and to get the output file easily. This workflow is a good fit for that need.

The AWS Well-Architected Framework recommends evaluating and choosing AWS services according to your business requirements and scenarios, and some keywords can help you decide. For example, "periodically" means you need a cron-like schedule that triggers your task automatically, which is exactly what an Amazon EventBridge rule provides. Considering resource utilization and cost, Lambda suits a small periodic task better than services such as EC2 or AWS Batch, because you pay only for the compute time the function uses and there are no instances to manage.

To demonstrate the workflow, I implemented the following scenario: an AWS Lambda function extracts the AWS services available in specific regions from an official AWS API, saves the result to an Excel file, and uploads the file to an S3 bucket. Finally, an email notification containing a pre-signed URL for the Excel file is sent to subscribers, who can use that URL to download the file.

You can find the source code at https://github.com/camillehe1992/extract-aws-services-by-region

Prerequisites

- An AWS account - You need an AWS account with permissions to deploy all the resources in the workflow.

- Terraform - Install Terraform on the machine where you run the Terraform CLI. I recommend https://github.com/tfutils/tfenv to manage your Terraform versions.

- AWS CLI - Install the AWS CLI on the same machine and set up AWS credentials for deployment.

Components

1. Lambda Function

We start with the Lambda function, as it's the core component of the workflow and interacts with all the others.

```hcl
# Create and upload Lambda function archive
data "archive_file" "lambda_extractor" {
  type        = "zip"
  source_dir  = "${path.module}/build/${var.application_name}"
  output_path = "${path.module}/build/${var.application_name}.zip"
}

resource "aws_s3_object" "lambda_extractor" {
  bucket = aws_s3_bucket.lambda_bucket.id
  key    = "lambda-function-zip-files/${var.application_name}.zip"
  source = data.archive_file.lambda_extractor.output_path
  etag   = filemd5(data.archive_file.lambda_extractor.output_path)
}

# Lambda function
resource "aws_lambda_function" "extractor" {
  function_name    = var.application_name
  s3_bucket        = aws_s3_bucket.lambda_bucket.id
  s3_key           = aws_s3_object.lambda_extractor.key
  runtime          = "python3.9"
  timeout          = 60
  handler          = "main.lambda_handler"
  source_code_hash = data.archive_file.lambda_extractor.output_base64sha256
  role             = aws_iam_role.lambda_exec.arn

  environment {
    variables = {
      ENV                      = var.environment
      APPLICATION              = var.application_name
      AWS_SERVICES_API_URL     = var.aws_services_api_url
      XLSX_FILE_S3_BUKCET_NAME = aws_s3_bucket.lambda_bucket.id
    }
  }
}
```

The function has several dependencies. During deployment, the archive_file data source archives the function source code from the given source directory and writes a zip file to the given output path. The aws_s3_object resource then uploads the zip file to the given bucket and key, and the Lambda function retrieves its source code package from s3_bucket and s3_key. The function also needs an execution role to interact with other AWS services. In most situations, Terraform infers the dependencies between resources from their references, so you don't need to worry about ordering. However, you can still declare a dependency explicitly using the Terraform meta-argument depends_on.
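The function refers to aws_s3_bucket.lambda_bucket and aws_iam_role.lambda_exec, which aren't shown above. A minimal sketch of both follows. The resource addresses match the references in the function, but everything else (the bucket_prefix naming, the role name, and the assumption that the function needs s3:PutObject and s3:GetObject to upload the file and pre-sign a download URL) is illustrative and not necessarily what the repo does. The SNS permission is covered separately in the SNS section.

```hcl
# Hypothetical bucket for the deployment package and the generated Excel file
resource "aws_s3_bucket" "lambda_bucket" {
  bucket_prefix = "${var.application_name}-" # assumption: AWS appends a unique suffix
  force_destroy = true                        # lets `terraform destroy` remove a non-empty bucket
}

# Trust policy that allows the Lambda service to assume the execution role
data "aws_iam_policy_document" "lambda_assume_role" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "lambda_exec" {
  name               = "${var.application_name}-exec" # hypothetical role name
  assume_role_policy = data.aws_iam_policy_document.lambda_assume_role.json
}

# Basic execution permissions: write function logs to CloudWatch Logs
resource "aws_iam_role_policy_attachment" "lambda_logs" {
  role       = aws_iam_role.lambda_exec.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

# Assumption: the function uploads the Excel file and pre-signs a GET URL for it
resource "aws_iam_role_policy" "lambda_s3" {
  name = "${var.application_name}-s3"
  role = aws_iam_role.lambda_exec.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = ["s3:PutObject", "s3:GetObject"]
      Resource = "${aws_s3_bucket.lambda_bucket.arn}/*"
    }]
  })
}
```

If you ever need to force ordering, for example to make sure the logging policy is attached before the function is created, you could add depends_on = [aws_iam_role_policy_attachment.lambda_logs] to aws_lambda_function.extractor.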

2. EventBridge Rule

EventBridge, formerly called Amazon CloudWatch Events, is a serverless service that uses events to connect application components together, making it easier to build scalable event-driven applications. In the demo, we use an Amazon EventBridge rule to send events to a target, which here is the Lambda function. Note that the Terraform resources still use the older aws_cloudwatch_event_* names.

```hcl
# Grant CloudWatch Events permission to invoke Lambda function
resource "aws_lambda_permission" "permission" {
  function_name = aws_lambda_function.extractor.function_name
  action        = "lambda:InvokeFunction"
  statement_id  = "AllowCloudWatchEventsInvoke"
  principal     = "events.amazonaws.com"
  source_arn    = aws_cloudwatch_event_rule.rule.arn
}

# Define the rule and cron expression
resource "aws_cloudwatch_event_rule" "rule" {
  name                = "${var.application_name}-trigger-lambda"
  schedule_expression = var.cron_expression
}

# Add Lambda function as rule target
resource "aws_cloudwatch_event_target" "lambda_target" {
  rule      = aws_cloudwatch_event_rule.rule.name
  target_id = "SendToLambda"
  arn       = aws_lambda_function.extractor.arn
  input     = "{}"
}
```

Don't forget to grant CloudWatch Events permission to invoke the Lambda function by creating a resource-based policy with aws_lambda_permission. Otherwise, your Lambda function won't be invoked, even if the rule's monitoring metrics in the AWS console show no failed invocations. So if the rule doesn't trigger your Lambda function, check this permission first.
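The rule reads its schedule from var.cron_expression, whose declaration isn't shown. A possible declaration is sketched below; the default value is an assumption chosen for illustration (every day at 08:00 UTC). EventBridge schedule expressions use either a rate(...) form or a six-field cron(...) form in which one of day-of-month or day-of-week must be ?.

```hcl
variable "cron_expression" {
  description = "EventBridge schedule expression that triggers the Lambda function"
  type        = string
  # Hypothetical default: 08:00 UTC every day.
  # Fields: minutes hours day-of-month month day-of-week year
  default = "cron(0 8 * * ? *)"
}
```

A fixed interval such as rate(1 day) works as well if you don't need a specific time of day.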

3. SNS Topic

Last but not least, we configure an SNS topic as the on-success destination for asynchronous invocations, so that a record is sent to the topic whenever the function completes successfully:

```hcl
# Lambda function event configuration
resource "aws_lambda_function_event_invoke_config" "trigger_topic" {
  function_name = aws_lambda_function.extractor.function_name

  destination_config {
    on_success {
      destination = aws_sns_topic.notification.arn
    }
  }
}
```
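The destination refers to aws_sns_topic.notification, and the email subscription from the architecture diagram isn't shown either. A minimal sketch of both, assuming a hypothetical topic name derived from var.application_name and the notification_email_address variable that the deployment steps ask you to set:

```hcl
# Topic used as the on-success destination (hypothetical name)
resource "aws_sns_topic" "notification" {
  name = "${var.application_name}-notification"
}

# Email address that receives the notifications
variable "notification_email_address" {
  description = "Email address subscribed to the notification topic"
  type        = string
}

# Email subscription; it stays pending until the recipient confirms it
resource "aws_sns_topic_subscription" "email" {
  topic_arn = aws_sns_topic.notification.arn
  protocol  = "email"
  endpoint  = var.notification_email_address
}
```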

Don't forget to grant the Lambda function's execution role the permissions required by the destination service. In the demo, I attached the AWS-managed policy AmazonSNSFullAccess directly, which doesn't follow the principle of least privilege.

As a best practice, grant the Lambda execution role only sns:Publish on the destination topic.
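A least-privilege alternative to AmazonSNSFullAccess could look like the sketch below. It allows only sns:Publish on the notification topic, and it assumes the execution role is aws_iam_role.lambda_exec (as referenced by the function) and the topic is aws_sns_topic.notification; the policy name is hypothetical.

```hcl
# Allow the execution role to publish only to the notification topic
data "aws_iam_policy_document" "publish_to_topic" {
  statement {
    effect    = "Allow"
    actions   = ["sns:Publish"]
    resources = [aws_sns_topic.notification.arn]
  }
}

resource "aws_iam_role_policy" "publish_to_topic" {
  name   = "${var.application_name}-sns-publish"
  role   = aws_iam_role.lambda_exec.id
  policy = data.aws_iam_policy_document.publish_to_topic.json
}
```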

With this configuration, you don't need to publish a message to the SNS topic from the function code using an SDK. Lambda sends an invocation record containing the function's output to the topic whenever the function successfully processes an asynchronous invocation.

When you invoke a function, you can choose to invoke it synchronously or asynchronously. With synchronous invocation, you wait for the function to process the event and return a response. With asynchronous invocation, Lambda queues the event for processing and returns a response immediately. For asynchronous invocation, Lambda handles retries and can send invocation records to a destination.
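Because destinations apply only to asynchronous invocations, aws_lambda_function_event_invoke_config is also where you can tune Lambda's asynchronous retry behavior. The sketch below is a variant of the resource shown earlier, meant to replace it rather than be added alongside it; the retry and event-age values are illustrative assumptions.

```hcl
resource "aws_lambda_function_event_invoke_config" "trigger_topic" {
  function_name = aws_lambda_function.extractor.function_name

  # Illustrative: retry a failed asynchronous invocation once (allowed 0-2, default 2)
  maximum_retry_attempts = 1
  # Illustrative: drop events that wait longer than one hour (allowed 60-21600 seconds)
  maximum_event_age_in_seconds = 3600

  destination_config {
    on_success {
      destination = aws_sns_topic.notification.arn
    }
  }
}
```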

Deploy Terraform

Before deployment, you must configure AWS credentials on your local machine by following the official tutorial https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-files.html. In my demo, I created an IAM user with suitable permissions, created an access key and secret key (AKSK) under Security Credentials, and set up a named profile service.tsp-cicd-runner with those credentials.

You can run aws configure to quickly set and view your credentials. It creates a default profile in the ~/.aws/credentials file. Run aws sts get-caller-identity to verify the configuration.

Before deploying, adjust the configuration as needed:

- Change the profile service.tsp-cicd-runner in the code to your own named profile or default.

- Update the Terraform backend configuration in main.tf.

- Set the variable notification_email_address in variables.tf to your email address.
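For reference, a backend and provider configuration that uses a named profile might look like the sketch below. The state bucket, key, and region are hypothetical placeholders; only the profile name comes from this article.

```hcl
terraform {
  # Hypothetical S3 backend; replace bucket, key and region with your own values
  backend "s3" {
    bucket  = "my-terraform-state-bucket"
    key     = "extract-aws-services-by-region/terraform.tfstate"
    region  = "us-east-1"
    profile = "service.tsp-cicd-runner" # your named profile, or "default"
  }
}

provider "aws" {
  region  = "us-east-1"               # assumption: deployment region
  profile = "service.tsp-cicd-runner" # same named profile as the backend
}
```

Backend blocks can't reference Terraform variables, which is why the profile has to be written literally in main.tf (or passed with terraform init -backend-config).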

Local Deploy

Run the commands below to deploy the workflow to AWS from your local machine.

```shell
make init
make plan
make apply
```

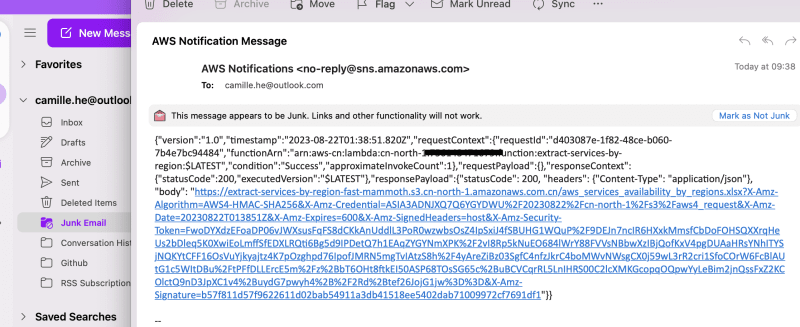

After applying, you should receive an email from AWS Notification asking you to confirm the email subscription. Confirm it by clicking the link in the email; after that, you will receive messages from the SNS topic. The email may land in your junk folder, depending on your mail filter rules.

Deploy via GitHub Actions

The repo includes two GitHub workflows, one to deploy and one to destroy the AWS resources, both of which can be triggered manually from GitHub Actions. Fork the repo and set up the workflows as you need.

Invoke Lambda

Once Terraform creates the function, invoke it by running the command below. You will get a 202 response and, a few seconds later, an email with an S3 pre-signed URL. Click the URL to download the file from the S3 bucket soon, because the URL expires after 10 minutes, as preconfigured.

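```shell
# --cli-binary-format lets AWS CLI v2 accept the raw JSON payload
aws lambda invoke \
  --function-name "$(terraform output -raw function_name)" \
  --invocation-type Event \
  --cli-binary-format raw-in-base64-out \
  --payload '{}' \
  response.json
```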
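The command relies on a Terraform output named function_name. If your configuration doesn't already declare one, a minimal sketch would be:

```hcl
# Exposes the function name for `terraform output -raw function_name`
output "function_name" {
  value = aws_lambda_function.extractor.function_name
}
```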

Summary

A few things I want to highlight:

- Don't forget to grant CloudWatch Events permission to invoke the Lambda function by adding a resource-based policy.

- Don't forget to grant the Lambda function the additional permissions required by the destination service.

- As a best practice, always follow the principle of least privilege.

- Invoke the Lambda function asynchronously so that it triggers the destination service.

That's all.

I always welcome comments and suggestions on the content and grammar.

Thanks for reading!
