Let's talk about CPUs and their inner workings.

A CPU, or central processing unit, is the brain of your computer. It is the core hub that executes the instructions of the programs running on your device: it performs arithmetic and logic operations, controls the flow of instructions, and directs the input and output operations those instructions call for. The rules governing its design fall under CPU architecture, which describes the functionality, organization, and implementation of its internal systems. This covers instruction set design, microarchitecture design, and logic design.

Wheels, Levers, and Cogs

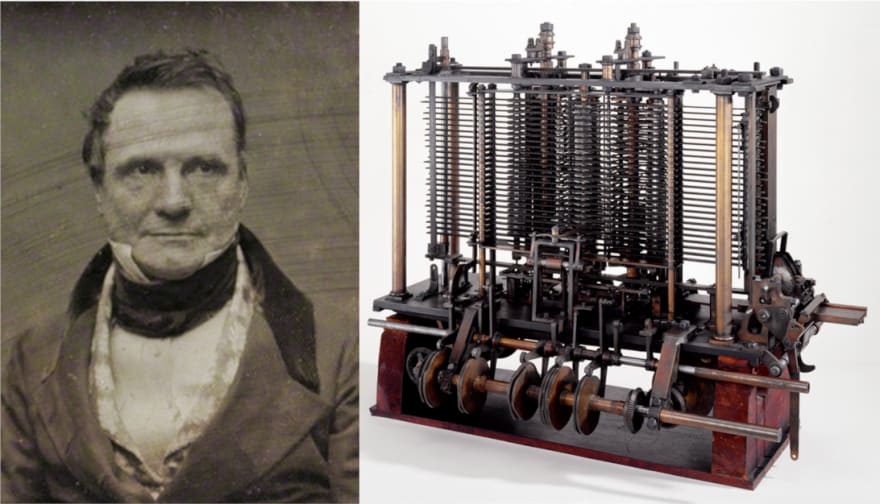

Long before the AMD "Big Red" vs. Intel "Big Blue" wars, a notable early exploration of computing machines came from the work of Charles Babbage. A British mathematician and mechanical engineer, Babbage originated the idea of a programmable digital computer, and the principal ideas of all modern computers can be found in his proposed Analytical Engine. Although the Analytical Engine was never fully built, owing to disputes over its design and a lack of government funding, it outlined an arithmetic logic unit (which Babbage called the "mill") along with control flow in the form of conditional branching and loops. These features made the design Turing-complete, meaning that, given enough memory and time, it could in principle carry out any computation that any other computer can.

Modern, Defined

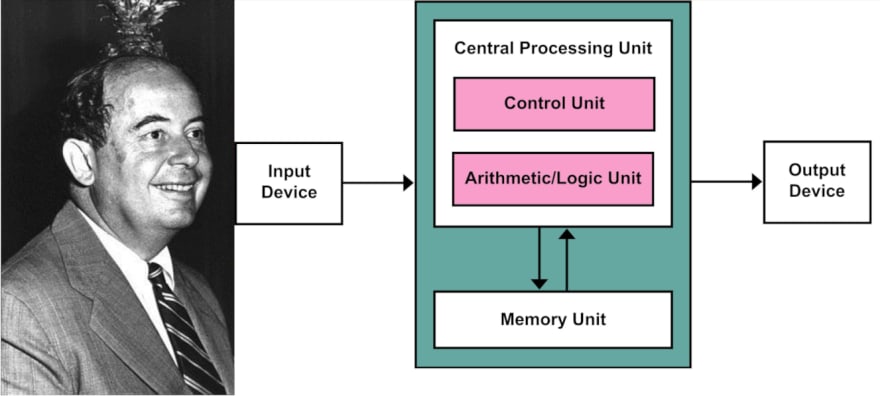

While CPU architecture has changed and improved drastically over the years, it was John von Neumann, the Hungarian-American mathematician, computer scientist, and engineer, who first laid out a real set of requirements for it, in his 1945 "First Draft of a Report on the EDVAC." The following basic requirements are still found in modern computer designs:

1. A processing unit that contains an arithmetic logic unit (ALU)

2. Processor registers that give the processing unit quick access to the data it is currently working on (these are separate from main memory such as RAM and ROM)

3. A control unit that contains an instruction register and program counter

4. Memory that stores data and instructions

5. A location for external mass storage of data

6. Input and Output mechanisms
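To make this list more concrete, here is a minimal sketch of a toy stored-program machine in Python. The instruction set (`LOAD`, `ADD`, `SUB`, `STORE`, `JNZ`, `HALT`), the opcode numbers, and the `opcode * 100 + address` encoding are all hypothetical, invented for illustration; no real CPU works exactly this way, and mass storage and I/O are left out. It shows a program counter and instruction register (control unit), an accumulator acting as the single register the ALU works on, and one memory that holds both the instructions and the data.

```python
# Hypothetical opcodes for a toy machine; each instruction word is opcode * 100 + address.
HALT, LOAD, ADD, SUB, STORE, JNZ = range(6)


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Fetch-decode-execute loop over a single memory that holds both code and data."""
    pc = 0   # program counter (control unit): address of the next instruction
    acc = 0  # accumulator: the one processor register the ALU works on
    for _ in range(max_steps):
        ir = memory[pc]                    # fetch: copy the word into the instruction register
        opcode, address = divmod(ir, 100)  # decode: split it into opcode and operand address
        pc += 1
        # execute
        if opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:                # ALU: addition
            acc += memory[address]
        elif opcode == SUB:                # ALU: subtraction
            acc -= memory[address]
        elif opcode == STORE:
            memory[address] = acc
        elif opcode == JNZ:                # conditional branch: jump only if acc != 0
            if acc != 0:
                pc = address
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Multiply 6 * 7 by repeated addition. Addresses 0-7 are code, 9-12 are data.
program = [
    111,  # 0: LOAD 11   acc = result
    209,  # 1: ADD 9     acc += a
    411,  # 2: STORE 11  result = acc
    110,  # 3: LOAD 10   acc = counter
    312,  # 4: SUB 12    acc -= 1
    410,  # 5: STORE 10  counter = acc
    500,  # 6: JNZ 0     loop back while counter != 0
    0,    # 7: HALT
    0,    # 8: (unused)
    6,    # 9: a
    7,    # 10: counter (starts at b)
    0,    # 11: result
    1,    # 12: the constant 1
]
result = run(list(program))  # run on a copy so the original program stays intact
print(result[11])  # 42
```

The `JNZ` instruction is a conditional branch; jumping backward with it produces the loop that repeats the addition seven times, the same kind of control flow described for the Analytical Engine.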

Because instructions are stored in the same memory as data, a von Neumann machine can treat instructions as data. This capability is what makes assemblers, compilers, and other automated programming tools possible: they are the "programs that write programs." It is also what lets code written in high-level languages such as Java, JavaScript (running on Node.js), Swift, and C++ be translated into machine instructions, and in some cases generated and loaded at runtime, rather than being wired into the hardware.
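A minimal sketch of a "program that writes programs" is a tiny assembler. The Python below turns human-readable mnemonics into the numeric words a machine would load into memory. The mnemonics, opcode numbers, `;` comment syntax, and `opcode * 100 + address` encoding are hypothetical, chosen only for illustration. Its output is an ordinary list of integers, which is the point: once instructions are just numbers, one program can produce or edit another.

```python
# Hypothetical toy instruction set: each instruction word is opcode * 100 + address.
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "SUB": 3, "STORE": 4, "JNZ": 5}


def assemble(source: str) -> list[int]:
    """Translate lines like 'ADD 5' (or bare numbers, for data) into integer words."""
    words = []
    for line in source.splitlines():
        line = line.split(";")[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        if mnemonic in OPCODES:
            address = int(parts[1]) if len(parts) > 1 else 0
            if not 0 <= address < 100:
                raise ValueError(f"address out of range: {line!r}")
            words.append(OPCODES[mnemonic] * 100 + address)
        else:
            words.append(int(mnemonic))    # a bare number is stored as data
    return words


source = """
LOAD 4   ; acc = memory[4]
ADD 5    ; acc += memory[5]
STORE 6  ; memory[6] = acc
HALT
2        ; data
3        ; data
0        ; the result goes here
"""
machine_code = assemble(source)
print(machine_code)  # [104, 205, 406, 0, 2, 3, 0]

# The output is plain data, so another program can rewrite it: turn ADD 5 into SUB 5.
machine_code[1] = OPCODES["SUB"] * 100 + 5
```

Real assemblers also handle labels, multiple addressing modes, and binary encodings, but the core idea is the same: text goes in, and numbers that the CPU treats as instructions come out.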

What does this mean today?

Today, CPU architecture design has fairly straightforward goals: performance, power efficiency, and cost.

Although CPUs still follow the same fundamental operations as their predecessors, additional structures provide more capability in a smaller and faster package. A few notable structures and concepts we enjoy today are parallelism, memory management units, CPU caches, voltage regulation, and wider integer sizes. Parallelism, through multiple cores and techniques such as hyper-threading, lets the CPU run multiple tasks at the same time; caches give faster access to frequently used data; memory management units and wider (for example, 64-bit) integers let the CPU address far more memory; and voltage regulation gives the CPU extra juice at critical times to handle processor-intensive tasks.
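One way to see why a cache speeds up access to frequently used data is a toy simulation. The Python sketch below models a direct-mapped cache, one of the simplest cache organizations, in which every memory block can live in exactly one cache line. The class name and the line count and block size are hypothetical and far smaller than in any real CPU, and the model only counts hits and misses rather than timing.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block maps to exactly one cache line."""

    def __init__(self, num_lines: int = 4, block_size: int = 16) -> None:
        self.num_lines = num_lines      # hypothetical: real caches have far more lines
        self.block_size = block_size    # hypothetical line size in bytes
        self.tags = [None] * num_lines  # None marks an empty (invalid) line
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss (which loads the block)."""
        block = address // self.block_size  # which memory block holds this byte
        index = block % self.num_lines      # the one line this block may occupy
        tag = block // self.num_lines       # tells apart blocks sharing that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag              # miss: fetch from main memory and keep it
        self.misses += 1
        return False


cache = DirectMappedCache()
for _ in range(2):           # read the same 64 bytes twice
    for address in range(64):
        cache.access(address)
print(cache.hits, cache.misses)  # 124 4
```

Only the first touch of each 16-byte block goes to main memory; every later access to that block, including the whole second pass, is served from the cache.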

While Big Red and Big Blue may fight for the top of the hill, they each contain the same elements that give us the speed and capability we enjoy today.

Sources and Additional Reading:

Charles Babbage

John von Neumann

von Neumann Architecture

Fundamentals of Processor Design
