Kubernetes autoscaling has become a critical component for managing workloads efficiently. Recent studies report that around 40% of organizations use Horizontal Pod Autoscalers (HPAs), while fewer than 1% use Vertical Pod Autoscalers (VPAs). The gap suggests that teams favor autoscaling tools that are simple to adopt.

HPAs and VPAs scale pods, but the nodes those pods run on need autoscaling too. For node autoscaling, Karpenter and Cluster Autoscaler are often compared.

Karpenter scales faster by calling cloud provider APIs directly. It also helps cut cloud costs through just-in-time provisioning and automatic cleanup of underutilized nodes.

On the other hand, Cluster Autoscaler provides a dependable solution with broad compatibility across Kubernetes environments.

Choosing between these tools depends on your workload needs, scaling speed, and overall Kubernetes autoscaling strategy.

Key Takeaways

- Karpenter scales faster by calling cloud APIs directly, which makes it well suited to workloads whose demand changes quickly.

- Cluster Autoscaler is dependable and relatively simple to set up. It adds nodes when pods cannot be scheduled and removes underutilized ones, scaling within the node groups you define.

- Karpenter saves money by using spot instances and adding nodes as needed. You only pay for what you use.

- Cluster Autoscaler helps optimize resource usage by resizing clusters based on workload demands, reducing unnecessary resource consumption and lowering cloud costs.

Karpenter: Features, Strengths, and Limitations

Key Features of Karpenter

Karpenter takes a modern approach to Kubernetes autoscaling by focusing on workload-specific optimizations. Because it is zone-aware, it removes the need for multiple autoscaling groups, which simplifies cluster management. It allocates nodes based on workload requirements, supporting both cost efficiency and high availability.

Karpenter supports a wide variety of node configurations, matching instance types to the specific needs of your pods as demand changes. Its setup is also more straightforward than the node-group configuration that Cluster Autoscaler requires.

It also takes volume node affinity into account, so stateful pods land on nodes in the zone where their persistent volumes live. Its bin-packing fits pending pods onto as few nodes as practical, which lowers compute costs.
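To make the bin-packing idea concrete, here is a minimal first-fit-decreasing sketch in Python. It is not Karpenter's scheduler: it assumes a single node shape and models pods only by their CPU and memory requests, whereas Karpenter also weighs many instance types, prices, and scheduling constraints. The `Pod`, `Node`, and `first_fit_decreasing` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    cpu: float     # requested vCPU
    memory: float  # requested GiB


@dataclass
class Node:
    cpu: float     # allocatable vCPU
    memory: float  # allocatable GiB
    pods: list = field(default_factory=list)

    def fits(self, pod: Pod) -> bool:
        """True if the pod's requests fit in this node's remaining capacity."""
        used_cpu = sum(p.cpu for p in self.pods)
        used_memory = sum(p.memory for p in self.pods)
        return used_cpu + pod.cpu <= self.cpu and used_memory + pod.memory <= self.memory


def first_fit_decreasing(pods, node_cpu, node_memory):
    """Pack pods onto identical nodes, opening a new node only when none fits."""
    nodes = []
    # Placing the largest pods first lets smaller pods fill the leftover gaps.
    for pod in sorted(pods, key=lambda p: (p.cpu, p.memory), reverse=True):
        if pod.cpu > node_cpu or pod.memory > node_memory:
            raise ValueError(f"{pod.name} does not fit on an empty node")
        target = next((n for n in nodes if n.fits(pod)), None)
        if target is None:
            target = Node(node_cpu, node_memory)
            nodes.append(target)
        target.pods.append(pod)
    return nodes


pods = [Pod("a", 2, 4), Pod("b", 1, 2), Pod("c", 3, 2), Pod("d", 1, 1), Pod("e", 1, 3)]
nodes = first_fit_decreasing(pods, node_cpu=4, node_memory=8)
print(len(nodes))  # 2
```

The five pods request 8 vCPU in total, so two 4-vCPU nodes is the minimum; the heuristic reaches it here, though first-fit decreasing is not guaranteed to be optimal in general.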

Strengths of Karpenter

Karpenter excels at automating node provisioning and termination, reducing manual overhead. It tackles underutilization through consolidation, removing or replacing nodes whose pods can run elsewhere, even when overall load is low. It also detects node creation failures quickly, which helps prevent performance bottlenecks during high-demand periods.
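Consolidation, Karpenter's mechanism for removing underutilized nodes, comes down to asking whether a node's pods could run on the rest of the cluster. The Python sketch below checks only CPU and memory requests with a greedy fit; Karpenter's real consolidation also considers replacement nodes, Pod Disruption Budgets, and disruption settings. The `can_remove` helper and the cluster layout are hypothetical.

```python
def can_remove(node_name, nodes):
    """Return True if every pod on node_name fits into spare capacity on other nodes.

    nodes maps a node name to {"cpu": capacity, "memory": capacity,
    "pods": [(cpu_request, memory_request), ...]}.
    """
    # Remaining (cpu, memory) headroom on every other node.
    spare = {}
    for name, node in nodes.items():
        if name == node_name:
            continue
        spare[name] = [
            node["cpu"] - sum(cpu for cpu, _ in node["pods"]),
            node["memory"] - sum(mem for _, mem in node["pods"]),
        ]

    # Greedily place the largest pods first, consuming headroom as we go.
    for cpu, mem in sorted(nodes[node_name]["pods"], reverse=True):
        target = next((s for s in spare.values() if s[0] >= cpu and s[1] >= mem), None)
        if target is None:
            return False
        target[0] -= cpu
        target[1] -= mem
    return True


cluster = {
    "node-1": {"cpu": 4, "memory": 8, "pods": [(1, 2)]},
    "node-2": {"cpu": 4, "memory": 8, "pods": [(2, 2)]},
    "node-3": {"cpu": 4, "memory": 8, "pods": [(3, 1)]},
}
print(can_remove("node-1", cluster))  # True
```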

One of its standout strengths is its effective use of EC2 Spot instances. When a NodePool allows both capacity types, Karpenter favors Spot and falls back to On-Demand if Spot capacity is unavailable. With interruption handling enabled, it also reacts to Spot interruption notices by draining and replacing affected nodes. Together, these behaviors can significantly reduce Kubernetes costs without compromising stability.
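The Spot-first, On-Demand-fallback behavior can be pictured with the Python sketch below. It is a simplification: offerings are plain dicts with hypothetical instance names and prices, and "cheapest available" stands in for Karpenter's real selection logic, which is more involved. Only the capacity-type values `spot` and `on-demand` mirror what Karpenter's `karpenter.sh/capacity-type` label uses.

```python
def choose_offering(offerings, allowed_capacity_types):
    """Pick the cheapest available offering, trying Spot before On-Demand.

    offerings: list of dicts with "instance_type", "capacity_type", "price",
    and "available" keys. Returns None if nothing allowed is available.
    """
    for capacity_type in ("spot", "on-demand"):
        if capacity_type not in allowed_capacity_types:
            continue
        candidates = [
            o for o in offerings
            if o["capacity_type"] == capacity_type and o["available"]
        ]
        if candidates:
            return min(candidates, key=lambda o: o["price"])
    return None


# Hypothetical offerings: Spot capacity for "type-a" has run out.
offerings = [
    {"instance_type": "type-a", "capacity_type": "spot", "price": 0.03, "available": False},
    {"instance_type": "type-a", "capacity_type": "on-demand", "price": 0.10, "available": True},
    {"instance_type": "type-b", "capacity_type": "on-demand", "price": 0.12, "available": True},
]
choice = choose_offering(offerings, {"spot", "on-demand"})
print(choice["instance_type"], choice["capacity_type"])  # type-a on-demand
```

If only `spot` were allowed, the function would return `None`, mirroring a pod that stays pending until Spot capacity returns.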

Its customization options, such as NodePools and NodeClasses tailored to workload needs, give you finer control over scaling behavior.

Limitations of Karpenter

Despite its advantages, Karpenter has some limitations. For instance, it can run into runaway scaling when new worker nodes stay in a NotReady state because of problems such as buggy AMIs or misconfigured route tables. This can trigger repeated scaling attempts that never resolve the underlying issue.
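One way to catch this early is to flag nodes that have stayed NotReady longer than expected. The Python sketch below works on plain dicts; in a real cluster you would read each Node's `Ready` condition and `metadata.creationTimestamp`. The 15-minute timeout and the `stuck_not_ready` helper are hypothetical choices, not Karpenter settings.

```python
from datetime import datetime, timedelta, timezone


def stuck_not_ready(nodes, now, timeout=timedelta(minutes=15)):
    """Return names of nodes that are NotReady and older than timeout.

    nodes: list of dicts with "name", "ready" (bool), and "created"
    (timezone-aware datetime) keys.
    """
    return [
        n["name"]
        for n in nodes
        if not n["ready"] and now - n["created"] > timeout
    ]


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
nodes = [
    {"name": "node-a", "ready": True, "created": now - timedelta(hours=2)},
    {"name": "node-b", "ready": False, "created": now - timedelta(minutes=40)},
    {"name": "node-c", "ready": False, "created": now - timedelta(minutes=3)},
]
print(stuck_not_ready(nodes, now))  # ['node-b']
```

A list that keeps growing points to a systemic problem, such as a bad AMI or route table, and is worth alerting on.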

Additionally, Karpenter does not provide a built-in way to set a hard ceiling on cluster cost, a safeguard teams often get from the maximum size of EC2 Auto Scaling groups used with Cluster Autoscaler. Instead, it provisions capacity dynamically from the resource requests, such as CPU and memory, of pods waiting to be scheduled.
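The closest guardrail Karpenter offers is a NodePool's `limits`, which caps the total resources, such as CPU and memory, that the NodePool may provision. The Python sketch below models that check with plain dicts; the `within_limits` helper is hypothetical, and the amounts (vCPU and GiB here) simplify the Kubernetes resource quantities Karpenter actually compares.

```python
def within_limits(current, candidate, limits):
    """Return True if launching candidate keeps every limited resource at or under its cap.

    Each argument maps a resource name to an amount. Resources absent from
    limits are treated as unlimited.
    """
    return all(
        current.get(resource, 0) + candidate.get(resource, 0) <= cap
        for resource, cap in limits.items()
    )


limits = {"cpu": 100, "memory": 400}   # caps for the whole NodePool
current = {"cpu": 90, "memory": 360}   # already provisioned by this NodePool
print(within_limits(current, {"cpu": 8, "memory": 32}, limits))   # True
print(within_limits(current, {"cpu": 16, "memory": 64}, limits))  # False
```

Because the cap is on resources rather than dollars, you still have to translate a budget into CPU and memory yourself.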

These limitations highlight the importance of careful monitoring and configuration when using Karpenter. It offers advanced features, but you need to address these challenges to get the most out of it.

Cluster Autoscaler: Features, Strengths, and Limitations

Key Features of Cluster Autoscaler

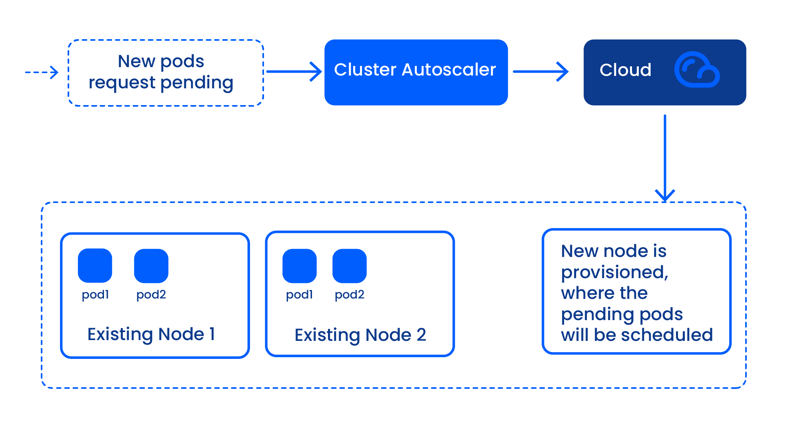

Cluster Autoscaler offers several features that make it a reliable choice for Kubernetes autoscaling. It automatically adjusts the number of worker nodes in your cluster based on workload demands, so resources are used efficiently and applications remain available. By removing nodes that are no longer needed, it helps avoid over-provisioning and reduces costs.

Cluster Autoscaler integrates closely with Kubernetes and with cloud provider APIs. It supports multiple node groups, giving you flexibility in managing resources, and it respects Kubernetes Pod Disruption Budgets (PDBs) when removing nodes. It adds nodes when pods cannot be scheduled due to insufficient resources and removes underutilized nodes when their pods can be rescheduled elsewhere.
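The two triggers can be sketched in Python. Scale-up starts from pods the scheduler has marked unschedulable; scale-down considers nodes whose requested CPU and memory both fall below a utilization threshold, here 0.5, which matches the documented default of Cluster Autoscaler's `--scale-down-utilization-threshold` flag. The helpers and data shapes are hypothetical, and the real tool also checks that pods can be rescheduled, honors PDBs, and waits for a node to stay unneeded for a while before removing it.

```python
SCALE_DOWN_UTILIZATION_THRESHOLD = 0.5


def needs_scale_up(pods):
    """True if any pod is pending because the scheduler found no room for it."""
    return any(
        p["phase"] == "Pending" and p.get("reason") == "Unschedulable" for p in pods
    )


def utilization(node):
    """Requested share of allocatable capacity, taking the larger of CPU and memory."""
    cpu = sum(p["cpu"] for p in node["pods"]) / node["allocatable_cpu"]
    memory = sum(p["memory"] for p in node["pods"]) / node["allocatable_memory"]
    return max(cpu, memory)


def scale_down_candidates(nodes, threshold=SCALE_DOWN_UTILIZATION_THRESHOLD):
    """Names of nodes whose CPU and memory requests are both below the threshold."""
    return [n["name"] for n in nodes if utilization(n) < threshold]


nodes = [
    {"name": "busy", "allocatable_cpu": 4, "allocatable_memory": 8,
     "pods": [{"cpu": 1, "memory": 6}]},  # memory at 75%: kept
    {"name": "idle", "allocatable_cpu": 4, "allocatable_memory": 8,
     "pods": [{"cpu": 1, "memory": 2}]},  # both at 25%: candidate
]
print(scale_down_candidates(nodes))  # ['idle']
print(needs_scale_up([{"phase": "Pending", "reason": "Unschedulable"}]))  # True
```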

Strengths of Cluster Autoscaler

Cluster Autoscaler excels at dynamically adjusting cluster size based on workload needs to ensure optimal resource utilization and availability. The tool launches new nodes based on predefined configurations (e.g., CPU or memory specifications) in existing node groups, which is useful for meeting diverse workload requirements. New nodes are automatically registered with the Kubernetes control plane, making them ready for pod assignment.

It also keeps monitoring workloads after each scaling event, adding or removing nodes as demand shifts, which optimizes both performance and cost. Its compatibility with cloud-based Kubernetes environments makes it a dependable choice for many organizations, though its support for on-premises setups is limited.

Limitations of Cluster Autoscaler

Despite its strengths, Cluster Autoscaler has some limitations. It primarily supports cloud-based clusters, which restricts its use in on-premises environments. Scaling operations can take several minutes, which may delay responses to sudden workload changes. It also cannot remove certain nodes, such as those running pods with local storage or pods that cannot be relocated, even if they are underutilized.

Cluster Autoscaler bases scaling decisions on resource requests rather than actual usage. If pods request more than they use, it adds nodes that sit partly idle; if requests understate real usage, nodes can be overloaded while Cluster Autoscaler sees no reason to scale. Administrators must also choose the instance types for each node group, which adds complexity to resource optimization. Finally, balancing performance and cost efficiency can be challenging with varying workloads and unpredictable demand patterns.
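A quick calculation shows how far requests and usage can diverge. Assume, hypothetically, 20 replicas that each request 2 vCPU but use about 0.5 vCPU, running on 8-vCPU nodes; the sketch ignores memory and per-node overhead, and `nodes_needed` is an illustrative helper.

```python
import math


def nodes_needed(cpu_per_pod, replicas, node_cpu):
    """Minimum node count for a given per-pod CPU figure, ignoring memory and overhead."""
    return math.ceil(cpu_per_pod * replicas / node_cpu)


by_requests = nodes_needed(2.0, 20, 8)  # 40 vCPU requested
by_usage = nodes_needed(0.5, 20, 8)     # 10 vCPU actually used
print(by_requests, by_usage)  # 5 2
```

Cluster Autoscaler sizes the cluster from the first number, so about 30 vCPU sits reserved but idle until the requests are tuned.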

Karpenter vs Cluster Autoscaler: A Detailed Comparison

TL;DR

| Feature | Karpenter | Cluster Autoscaler |
|---|---|---|
| Speed of Scaling | Rapid scaling via direct cloud provider API calls; typically responds in seconds | Slower, because it scales through predefined node groups |
| Flexibility & Customization | NodePools, NodeClasses, and workload-specific optimizations | Limited customization; relies on predefined node groups |
| Cost-Efficiency | Just-in-time provisioning, heavy Spot usage, and Spot interruption handling | Scales only within fixed node-group shapes, which can lead to over-provisioning and higher costs |
| Kubernetes Compatibility | AWS and Azure, with GCP support in progress; a wide range of instance types | Broad compatibility with major Kubernetes distributions |
| Ease of Use & Configuration | Easy setup, automatic node provisioning, customization options | Simple setup with node groups, but less dynamic scaling |

Speed of Scaling

When it comes to scaling speed, Karpenter outpaces Cluster Autoscaler significantly. Cluster Autoscaler relies on predefined node groups and scaling policies, which can delay scaling responses. This approach often results in moderate scaling speeds, making it less ideal for workloads requiring immediate resource adjustments.

Karpenter, on the other hand, is designed for rapid scaling. It calls cloud provider APIs directly, bypassing the slower Auto Scaling Group (ASG) mechanism, so it can react within seconds when pods become unschedulable. On AWS, for example, it requests capacity straight from the EC2 API, reducing latency for applications that need resources immediately. When workloads spike suddenly, this keeps pods from waiting long for new capacity.

Benchmarks generally show Karpenter scaling faster. Its direct approach to provisioning makes it a significant advance over Cluster Autoscaler, especially for dynamic and unpredictable workloads.

Flexibility and Customization

Karpenter offers more flexibility and customization than Cluster Autoscaler. With Karpenter, you can define provisioning rules tailored to specific workload profiles, optimizing both cost and performance. Rather than scaling on live CPU or memory usage metrics, Karpenter sizes new capacity from the CPU and memory requests and scheduling constraints of pending pods, so nodes match what workloads actually ask for.

Additionally, Karpenter introduces NodePools and NodeClasses, which provide fine-grained control over infrastructure provisioning. These features let you specify instance types, availability zones, and even integrate custom scripts. This level of customization ensures that your workload runs on the most suitable infrastructure. In contrast, Cluster Autoscaler relies on predefined node groups, which can lead to inefficiencies like over-provisioning.

Karpenter also excels in workload-specific optimizations. For example, it allows you to target availability zones based on workload placement preferences or leverage spot instances for cost-sensitive tasks. This adaptability makes Karpenter a more responsive and efficient choice for Kubernetes autoscaling.
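NodePool requirements act as filters over the instance catalog. The Python sketch below applies requirement sets keyed by well-known labels (`topology.kubernetes.io/zone`, `karpenter.sh/capacity-type`, `kubernetes.io/arch`) to a hypothetical catalog; the instance names, zones, and prices are invented for illustration, and the `matching_types` helper is not part of Karpenter.

```python
def matching_types(catalog, requirements, pod_cpu, pod_memory):
    """Return catalog entries that satisfy every requirement and fit the pod, cheapest first.

    requirements maps a label key to the set of allowed values.
    """
    def meets_requirements(entry):
        return all(entry[key] in allowed for key, allowed in requirements.items())

    fits = [
        entry for entry in catalog
        if meets_requirements(entry)
        and entry["cpu"] >= pod_cpu
        and entry["memory"] >= pod_memory
    ]
    return sorted(fits, key=lambda entry: entry["price"])


# Hypothetical catalog entries; names, zones, and prices are illustrative only.
catalog = [
    {"name": "type-small", "topology.kubernetes.io/zone": "zone-a",
     "karpenter.sh/capacity-type": "spot", "kubernetes.io/arch": "amd64",
     "cpu": 2, "memory": 4, "price": 0.02},
    {"name": "type-medium", "topology.kubernetes.io/zone": "zone-b",
     "karpenter.sh/capacity-type": "spot", "kubernetes.io/arch": "arm64",
     "cpu": 4, "memory": 16, "price": 0.04},
    {"name": "type-large", "topology.kubernetes.io/zone": "zone-a",
     "karpenter.sh/capacity-type": "on-demand", "kubernetes.io/arch": "amd64",
     "cpu": 8, "memory": 32, "price": 0.30},
]
requirements = {
    "topology.kubernetes.io/zone": {"zone-a", "zone-b"},
    "karpenter.sh/capacity-type": {"spot"},
}
print([e["name"] for e in matching_types(catalog, requirements, 3, 8)])  # ['type-medium']
```

Widening the capacity-type set to include `on-demand` would add `type-large` as a more expensive fallback.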

Cost-Efficiency

Karpenter's cost-saving measures set it apart from Cluster Autoscaler. It eliminates the need for pre-created node groups, dynamically provisioning nodes of varying sizes as needed. This reduces management overhead and prevents unnecessary resource allocation. Karpenter also runs scheduling simulations to avoid extra node creation, ensuring optimal resource utilization.

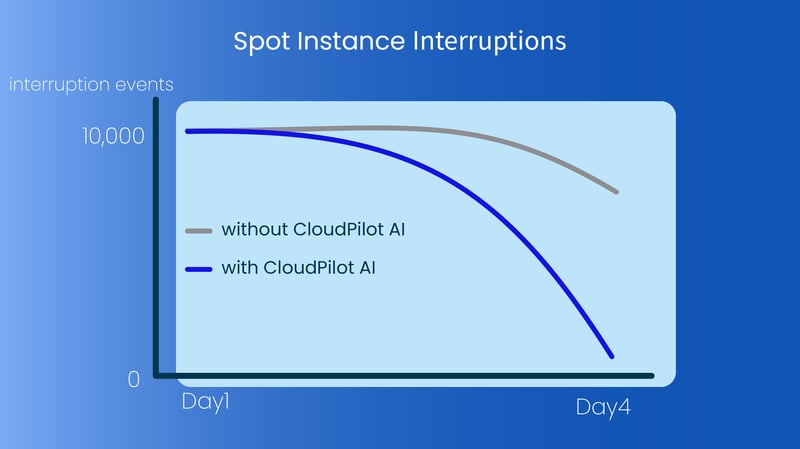

One of Karpenter's standout features is its ability to maximize Spot instance usage. It watches for AWS Spot interruption notices, which arrive with the default two-minute warning, and drains and replaces affected nodes before they are reclaimed; CloudPilot AI can extend this with interruption predictions up to 120 minutes in advance. This proactive approach helps keep workloads stable while costs stay low. When a NodePool allows both Spot and On-Demand capacity, Karpenter prioritizes Spot for cost efficiency and uses On-Demand when Spot capacity is unavailable.
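The drain-and-replace reaction can be sketched as a small state change. The Python below assumes the interruption notice has already been received (on AWS, Karpenter consumes these events from an SQS interruption queue) and models the cluster as plain dicts; the `Cluster` class and `handle_interruption` function are hypothetical, and real draining also respects Pod Disruption Budgets and graceful termination.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    nodes: dict                                  # node name -> list of pod names
    cordoned: set = field(default_factory=set)   # nodes closed to new pods
    pending: list = field(default_factory=list)  # pods waiting for placement


def handle_interruption(cluster, node_name):
    """React to a Spot interruption notice for node_name.

    Cordons the node so nothing new lands on it, moves its pods back to
    pending so the provisioner can place them (launching a replacement
    node if needed), and returns the pods that were moved.
    """
    if node_name not in cluster.nodes:
        return []
    cluster.cordoned.add(node_name)
    evicted = cluster.nodes[node_name]
    cluster.nodes[node_name] = []
    cluster.pending.extend(evicted)
    return evicted


cluster = Cluster(nodes={"spot-1": ["web-1", "web-2"], "spot-2": ["web-3"]})
print(handle_interruption(cluster, "spot-1"))  # ['web-1', 'web-2']
```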

Cluster Autoscaler, while reliable, often leads to higher costs because it can only add nodes of the shapes defined in its node groups. It also bases scaling decisions on resource requests rather than actual usage, which can result in over-provisioning.

In contrast, Karpenter's dynamic provisioning and intelligent node selection minimize waste and optimize performance, making it the more cost-effective choice for large-scale Kubernetes clusters.

If you're looking for a tool that balances performance and cost, Karpenter clearly outshines Cluster Autoscaler. Its ability to dynamically adjust resources ensures you only pay for what you need, without compromising on application performance.

Compatibility with Kubernetes Ecosystem

When choosing between Karpenter and Cluster Autoscaler, you need to consider how well each tool integrates with the Kubernetes ecosystem.

Cluster Autoscaler has been around for years and supports a wide range of Kubernetes distributions. It works seamlessly with major cloud providers like AWS, Azure, and Google Cloud. If you use managed Kubernetes services such as EKS, AKS, or GKE, Cluster Autoscaler is a dependable choice. Its compatibility with Kubernetes-native features, like Pod Disruption Budgets and taints and tolerations, ensures smooth operation in most environments.

Karpenter, on the other hand, takes a modern approach to compatibility. It interacts directly with cloud provider APIs, bypassing traditional mechanisms like Auto Scaling Groups, which makes it highly adaptable to dynamic workloads. Karpenter supports multiple cloud providers, including AWS and Azure, with GCP support in progress (contributions welcome), and matches workloads to a wide range of instance types.

While Cluster Autoscaler excels in traditional setups, Karpenter shines in environments requiring flexibility and advanced optimizations. Your choice depends on whether you prioritize broad compatibility or cutting-edge features.

Ease of Use and Configuration

Ease of use plays a critical role in selecting an autoscaling tool. Cluster Autoscaler offers a straightforward setup process. You configure it by defining node groups and scaling policies. It integrates well with Kubernetes, making it easy to manage for users familiar with the ecosystem. However, its reliance on predefined node groups can make scaling less dynamic. You may need to spend extra time fine-tuning configurations to avoid over-provisioning.

Karpenter simplifies configuration by removing the need for multiple autoscaling groups. It automatically provisions nodes based on workload demands, reducing manual setup.

With CloudPilot AI, powered by Karpenter, deployment takes just five minutes, and node selection is fully automated, saving you time and delivering optimal performance without complex configuration.

Furthermore, Karpenter allows for customization of scaling policies using NodePools and NodeClasses, giving you greater control over your infrastructure.

If you prefer a tool that works out of the box with minimal configuration, Karpenter is the better choice. However, if you value a more traditional approach with predictable scaling behavior, Cluster Autoscaler remains a reliable option.

Choosing the Right Tool for Your Kubernetes Cluster

When to Choose Karpenter

Karpenter excels in dynamic and unpredictable environments. Its ability to react in seconds to scaling demands makes it ideal for workloads with sudden spikes. Karpenter provisions nodes dynamically without relying on predefined instance types. This flexibility reduces resource waste and ensures your workload runs on the most suitable infrastructure.

If cost optimization is your priority, Karpenter is a great choice. It makes efficient use of Spot instances and limits disruption by responding to AWS's standard two-minute Spot interruption notice.

Karpenter's advanced features, such as real-time rightsizing and bin-packing, make it a powerful tool for modern Kubernetes clusters. If you value speed, flexibility, and cost efficiency, Karpenter is the better choice.

When to Choose Cluster Autoscaler

Cluster Autoscaler works best in environments where you need precise control over node scaling and placement. It supports a wide range of cloud providers and even self-hosted Kubernetes setups, making it a versatile choice.

If your workloads require stable and predictable scaling, this tool ensures efficient resource utilization. For example, it automatically adjusts cluster size by adding nodes when pods cannot be scheduled and removing underutilized nodes. This balance between resource usage and availability makes it ideal for traditional setups.

You should consider Cluster Autoscaler if your workloads often run close to capacity or if you need to manage pending pods due to insufficient resources. It performs well in such scenarios by launching new nodes and reallocating workloads efficiently. To maximize its effectiveness, you can:

- Use node taints and pod tolerations to ensure proper pod scheduling.

- Monitor the cluster regularly to identify performance issues.

- Optimize node sizes for cost-effectiveness.

- Use multiple availability zones to enhance reliability.

Cluster Autoscaler’s compatibility with various Kubernetes distributions and its granular control make it a dependable choice for many organizations.

Let CloudPilot AI elevate Karpenter for you

CloudPilot AI doesn’t just enhance Karpenter’s capabilities; it maximizes them. With spot instance interruption forecasts up to 120 minutes ahead, far exceeding the default 2-minute notice, you gain the foresight needed to avoid unexpected disruptions. Real-time analysis of price fluctuations and interruption rates supports smarter, more data-driven decisions for managing your cloud resources.

CloudPilot AI operates on the industry’s only “success-based” pricing model, meaning you only pay for results. By leveraging our unique combination of spot instance automation and intelligent node selection, we can help you cut cloud costs by up to 80%, eliminating waste and staying within budget. This allows you to scale rapidly, save substantially, and maintain high performance, all without compromise.

Whether you’re a DevOps engineer, SRE, or FinOps professional, CloudPilot AI integrates seamlessly with Karpenter to offer unparalleled control over your cloud costs. By combining the power of both tools, you can optimize smarter, not harder, keeping your cloud operations as efficient, cost-effective, and reliable as possible. Let CloudPilot AI take your Karpenter experience to the next level and help you make the most of your cloud resources.

Book a demo to see the savings for yourself!
