TL;DR

In this article, we present a script (ingest-static-pv.sh) for the static provisioning of PersistentVolumes with the Dell CSI drivers for PowerMax, PowerStore, PowerScale, PowerFlex, and Unity.

The premise

As part of an OpenShift migration project from one cluster to a new one, we wanted to ease the transition by loading the existing persistent storage in the new cluster.

- TL;DR
- The premise
- Concepts for static provisioning
- ingest-static-pv.sh: step by step with PowerMax
- Conclusion

Concepts for static provisioning

Before we dive into the implementation, let us review some Kubernetes concepts that back the static provisioning.

PersistentVolume static provisioning

Static provisioning, as opposed to dynamic provisioning, is the action of creating PersistentVolumes upfront so that they are ready to be consumed later by a PersistentVolumeClaim.

reclaimPolicy

Each StorageClass has a reclaimPolicy that tells Kubernetes what to do with a volume once it is released from its usage. All Dell drivers support the Retain and Delete policies.

If set to Delete, the Dell drivers will remove the volume and its related objects (exports, quotas, etc.) on the backend array. If set to Retain, only the Kubernetes objects (PersistentVolume, VolumeAttachment) will be deleted; it is then up to the storage administrator to clean up the array or not.

The reclaimPolicy is inherited by the PersistentVolume under the attribute persistentVolumeReclaimPolicy. It is possible to change the persistentVolumeReclaimPolicy at any point in time with kubectl edit pv [my_pv] or with a command like:

kubectl patch pv [my_pv] -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

Provisioner

The provisioner specifies the driver to be used for volume provisioning. The defaults for the Dell drivers are:

    NAME                   PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    isilon (default)       csi-isilon.dellemc.com       Delete          Immediate              true                   15d
    powermax (default)     csi-powermax.dellemc.com     Delete          Immediate              true                   46d
    powermax-xfs           csi-powermax.dellemc.com     Delete          Immediate              true                   46d
    powerstore (default)   csi-powerstore.dellemc.com   Delete          WaitForFirstConsumer   true                   22h
    powerstore-nfs         csi-powerstore.dellemc.com   Delete          WaitForFirstConsumer   true                   22h
    powerstore-xfs         csi-powerstore.dellemc.com   Delete          WaitForFirstConsumer   true                   22h
    unity (default)        csi-unity.dellemc.com        Delete          Immediate              true                   20d
    unity-iscsi            csi-unity.dellemc.com        Delete          Immediate              true                   20d
    unity-nfs              csi-unity.dellemc.com        Delete          Immediate              true                   20d
    vxflexos (default)     csi-vxflexos.dellemc.com     Delete          WaitForFirstConsumer   true                   29d
    vxflexos-xfs           csi-vxflexos.dellemc.com     Delete          WaitForFirstConsumer   true                   29d

The csi-powerstore and csi-powermax drivers allow you to customize the provisioner name. This enables you to run multiple instances of the driver in the same cluster and, therefore, to connect to different arrays or to create more granular StorageClasses.

To do so, edit the variable driverName for PowerStore or the variable customDriverName for PowerMax during the installation.

volumeHandle

The volumeHandle is the unique identifier of the volume created on the storage backend and returned by the CSI driver during the volume creation. Any call by the CSI drivers on a volume will reference that unique id.

What follows is probably the most important piece of this article. For each Dell driver, we list how the volumeHandle is constructed and how to load an existing volume as a PV.

PowerMax

The PowerMax volumeHandle consists of six dash-separated parts, in this order:

csi-<Cluster Prefix>-<Volume Prefix>-<Volume Name>-<Symmetrix ID>-<Symmetrix Vol ID>

- csi- is a hardcoded value.

- Cluster Prefix is the value given during the installation of the driver in the variable named clusterPrefix.

- Volume Prefix is the value given during the installation of the driver in the variable named volumeNamePrefix.

- Volume Name is a random UUID given by the controller sidecar.

- Symmetrix ID is the twelve-character PowerMax identifier.

- Symmetrix Vol ID is the LUN identifier.

The volumeHandle can be found in Unisphere for PowerMax under Storage > Volumes. Highlighting the volume will populate the window on the left showing this information.

The construction of the volumeHandle is given here, and you can check that your volumeHandle is valid on rubular or directly with STORAGECLASS=powermax VOLUMEHANDLE=help ./ingest-static-pv.sh.
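As a rough local check, the layout described above can also be matched with a regular expression. The sketch below is an assumption built only from this section and its example (alphanumeric prefixes and name, a 12-digit Symmetrix ID, a 5-character hexadecimal Symmetrix Vol ID). It is not the expression used by the driver or by ingest-static-pv.sh, and the function name is hypothetical.

```shell
# Hypothetical helper: checks a PowerMax volumeHandle against the layout
# described above. The pattern is an assumption, not the driver's own regex.
is_powermax_handle() {
  printf '%s\n' "$1" | grep -Eq '^csi-[A-Za-z0-9]+-[A-Za-z0-9]+-[A-Za-z0-9]+-[0-9]{12}-[0-9A-Fa-f]{5}$'
}

handle="csi-fdg-pmax-9e954fcdfa-000197900704-0017C"
if is_powermax_handle "$handle"; then
  vol_id=${handle##*-}     # last field: Symmetrix Vol ID
  rest=${handle%-*}        # drop the last field
  symm_id=${rest##*-}      # new last field: Symmetrix ID
  echo "Symmetrix ID: $symm_id, Symmetrix Vol ID: $vol_id"
else
  echo "not a PowerMax volumeHandle: $handle" >&2
fi
```

The two identifiers are read from the right-hand side because they are the only fields with a fixed shape; the prefixes depend on how the driver was installed.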

PowerStore

The PowerStore volumeHandle is simply the ID of the volume or of the NFS share. For example:

volumeHandle: 880fb26c-9a94-4565-9e6e-c0bf2b029ecc

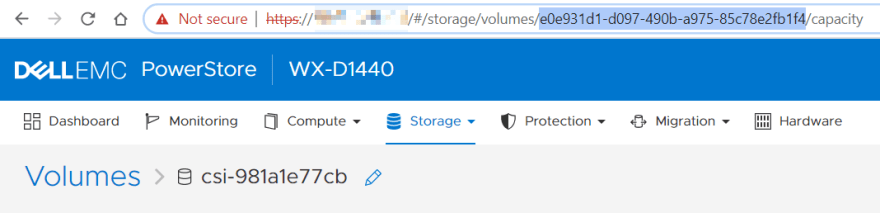

The easiest to get the identifier, is to connect to the WebUI and copy the UUID from the URL like here:

You can check that your volumeHandle is a valid UUID on rubular or directly with STORAGECLASS=powerstore VOLUMEHANDLE=help ./ingest-static-pv.sh.
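The same check can be done locally with grep. This is a minimal sketch that only verifies the 8-4-4-4-12 hexadecimal shape of the example above; the function name is hypothetical, and the check says nothing about whether the volume actually exists on the array.

```shell
# Hypothetical helper: true when the argument has the 8-4-4-4-12 hexadecimal
# layout of a UUID, which is the shape of the PowerStore example above.
is_uuid() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$'
}

if is_uuid "880fb26c-9a94-4565-9e6e-c0bf2b029ecc"; then
  echo "looks like a valid PowerStore volumeHandle"
fi
```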

PowerScale

The PowerScale/Isilon volumeHandle is the volume name, the Export ID and the Access Zone separated by =_=_=. For example:

volumeHandle: PowerScaleStaticVolTest=_=_=176=_=_=System

This information can be found in the PowerScale OneFS GUI under Protocols > Unix sharing (NFS) > NFS Exports.

The construction of the volumeHandle is given here, and you can check that your volumeHandle is valid on rubular or directly with STORAGECLASS=isilon VOLUMEHANDLE=help ./ingest-static-pv.sh.
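To illustrate the three fields, the example handle above can be split on the =_=_= separator with plain shell parameter expansion. The variable names are illustrative only.

```shell
# Split a PowerScale volumeHandle into its three fields.
handle="PowerScaleStaticVolTest=_=_=176=_=_=System"
sep="=_=_="

volume_name=${handle%%"$sep"*}   # everything before the first separator
rest=${handle#*"$sep"}           # everything after the first separator
export_id=${rest%%"$sep"*}       # middle field
access_zone=${rest#*"$sep"}      # last field

echo "volume=$volume_name export_id=$export_id access_zone=$access_zone"
```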

PowerFlex

The PowerFlex/VxFlexOS volumeHandle is simply the volume's ID. For example:

volumeHandle: ecdbd5bd0000000a

The volume’s ID can be found in the VxFlex OS GUI under Frontend > Volumes. Click on the volume, then on the Show property sheet icon. The ID is listed under Identity > ID. Alternatively, you can list it with scli --query_all_volumes.

The volume ID is missing from the 3.5 Web UI.

Unity

The Unity volumeHandle is the volume name or filesystem name, the protocol (iSCSI, FC or NFS), the array ID, and the CLI ID, separated by dashes (-). For example:

volumeHandle: csiunity-fde5df688a-iSCSI-fnm00000000000-sv_16

volumeHandle: csiunity-46d0385efd-FC-fnm00000000000-sv_938

volumeHandle: csiunity-a7bb9ee130-NFS-fnm00000000000-fs_5

This information can be found in Unisphere for Unity, under Storage then Block or File. The CLI ID may not be visible by default; to display it, click Customize your view > Columns and check the CLI ID check box.

The construction of the volumeHandle is given here, and you can check that your volumeHandle is valid on rubular or directly with:

STORAGECLASS=unity VOLUMEHANDLE=help ./ingest-static-pv.sh
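Going by the examples above, a Unity handle carries four dash-separated fields: the name, the protocol, an array identifier such as fnm00000000000, and the CLI ID. Because the name can itself contain dashes, a simple way to take a handle apart is to read it from the right. This is only a sketch under that assumption; the variable names are illustrative.

```shell
# Split a Unity volumeHandle from the right, because the volume name may
# itself contain dashes (csiunity-fde5df688a in the examples above).
handle="csiunity-fde5df688a-iSCSI-fnm00000000000-sv_16"

cli_id=${handle##*-}      # last field: CLI ID
rest=${handle%-*}
array_id=${rest##*-}      # assumed to be the array identifier
rest=${rest%-*}
protocol=${rest##*-}      # iSCSI, FC or NFS
volume_name=${rest%-*}    # everything left of the protocol

case "$protocol" in
  iSCSI|FC|NFS) echo "name=$volume_name protocol=$protocol array=$array_id cli_id=$cli_id" ;;
  *) echo "unexpected protocol: $protocol" >&2 ;;
esac
```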

ingest-static-pv.sh: step by step with PowerMax

The ingest-static-pv.sh script (available on https://github.com/coulof/dell-csi-static-pv) eases the loading of an existing volume as a PV, or as both a PV and a PVC. It is designed to take only the minimal set of fields required by the provisioner.

The example below will go through every step to statically load a PowerMax LUN in Kubernetes.

Get the volume details

For the first step, connect to Unisphere and get the Volume Identifier (here csi-fdg-pmax-9e954fcdfa), the Symmetrix ID (000197900704), the Symmetrix Vol ID (0017C), and the Capacity (8GB), all of which are mandatory to provision the PV.

Confirm the Provisioner and StorageClass

You can validate the provisioner for your driver with kubectl get sc -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner

    NAME           PROVISIONER
    powermax       csi-powermax.dellemc.com
    powermax-xfs   csi-powermax.dellemc.com

In this case, we will use the default one given by the StorageClass name.

Prepare the volumeHandle value

As mentioned in the chapter above, the construction of volumeHandle is the key piece to map a Kubernetes PersistentVolume to its actual volume in the backend storage array.

To get help on the volumeHandle for the storage system you need to load, you can run STORAGECLASS=powermax VOLUMEHANDLE=help ./ingest-static-pv.sh or refer to the PowerMax section of this article.

For the help on all the parameters, run ./ingest-static-pv.sh without any environment variable.

Using the values found in Unisphere above, we set VOLUMEHANDLE to csi-fdg-pmax-9e954fcdfa-000197900704-0017C.
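In shell terms, the handle is simply the three Unisphere values joined with dashes. The sketch below uses illustrative variable names (only VOLUMEHANDLE and PVNAME are variables the script reads). It also derives the PV name used later in this walkthrough by stripping csi-<Cluster Prefix>- from the identifier. That naming is a convenience of this example, not a requirement.

```shell
# Values read from Unisphere in the previous step.
VOLUME_IDENTIFIER="csi-fdg-pmax-9e954fcdfa"
SYMMETRIX_ID="000197900704"
SYMMETRIX_VOL_ID="0017C"

# Join the three values to build the volumeHandle.
VOLUMEHANDLE="${VOLUME_IDENTIFIER}-${SYMMETRIX_ID}-${SYMMETRIX_VOL_ID}"

# Strip the shortest prefix matching csi-*- (here csi-fdg-) to get a PV name.
PVNAME=${VOLUME_IDENTIFIER#csi-*-}

echo "VOLUMEHANDLE=$VOLUMEHANDLE"
echo "PVNAME=$PVNAME"
```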

Tip: if you need to create a volume from scratch and want to build a PV name with a UUID-based suffix, you can use the following command, which prints the last ten characters of a generated UUID:

UUID=$(uuidgen) && echo ${UUID: -10}

Dry-run

By default, the script only prints the definitions on stdout.

In the example below, we decided to create both the PV and the PVC (cf. PVCNAME variable) in the default namespace.

To do so we execute:

STORAGECLASS=powermax VOLUMEHANDLE=csi-fdg-pmax-9e954fcdfa-000197900704-0017C PVNAME=pmax-9e954fcdfa SIZE=8 PVCNAME=testpvc ./ingest-static-pv.sh

which results in:

    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pmax-9e954fcdfa
    spec:
      capacity:
        storage: 8Gi
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: powermax
      volumeMode: Filesystem
      csi:
        driver: csi-powermax.dellemc.com
        volumeHandle: csi-fdg-pmax-9e954fcdfa-000197900704-0017C
        fsType: ext4
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: testpvc
      namespace: default
    spec:
      volumeName: pmax-9e954fcdfa
      storageClassName: powermax
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 8Gi

If you run the script without PVCNAME, only the PersistentVolume definition will be displayed.

And run it for real!

To load the definitions into your Kubernetes cluster, add DRYRUN=false to the variables passed to the script.

Map the PVC to a Pod

Now that the PVC is Bound to the PV, we can use it in a Pod.
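As an illustration, a minimal Pod consuming the claim could look like the manifest written by the snippet below. The names testpod-script and testpvc come from this walkthrough; the busybox image, the container name, and the /data mount path are placeholders chosen for the sketch.

```shell
# Write a minimal Pod manifest that mounts the PVC created above.
# Assumptions: the busybox image, the container name and the /data mount
# path are placeholders, not values taken from the original setup.
cat > testpod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: testpod-script
  namespace: default
spec:
  containers:
    - name: test
      image: busybox
      command: ["sleep", "3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: testpvc
EOF

echo "Manifest written to testpod.yaml; apply it with: kubectl apply -f testpod.yaml"
```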

If the CSI driver successfully attaches the volume for the Pod, kubectl get volumeattachments displays a VolumeAttachment object:

    NAME                                                                   ATTACHER                   PV                NODE                    ATTACHED   AGE
    csi-39d511b28dc4490d47ede7f573d7616ae05addda6db9f2a8b6aecf7649a00722   csi-powermax.dellemc.com   pmax-9e954fcdfa   lsisfty94.lss.emc.com   true       82s

And the events attached to the Pod will show a SuccessfulAttachVolume message like below:

    Events:
      Type    Reason                  Age   From                     Message
      ----    ------                  ----  ----                     -------
      Normal  Scheduled               28s   default-scheduler        Successfully assigned default/testpod-script to lsisfty94.lss.emc.com
      Normal  SuccessfulAttachVolume  18s   attachdetach-controller  AttachVolume.Attach succeeded for volume "pmax-9e954fcdfa"

Conclusion

The static provisioning proved to be very useful for migration projects.

The ingest-static-pv.sh script is planned to be used in a couple of projects: one for a migration from an existing OpenShift cluster to a new one, and the other for a migration of an OpenShift storage backend from Pure Storage to PowerMax.

Feel free to try it and to open tickets on the repo.
