A common hurdle I see among developers is deciding on a testing strategy for the system they are working on.

Thankfully, finding a good testing strategy involves the entire team, not just developers, as it is everyone's responsibility to make sure the system works as intended.

Kick It Off

Gather everyone who has a stake in your technology for a meeting. Its purpose is to discover and understand the risks involved in your technology operations.

Get everyone to list features of your technology on Post-it notes. The more diverse the people present, the more features you will discover, as different features are more relevant to different areas of the business.

Once you have a list of features, draw the following chart on a whiteboard.

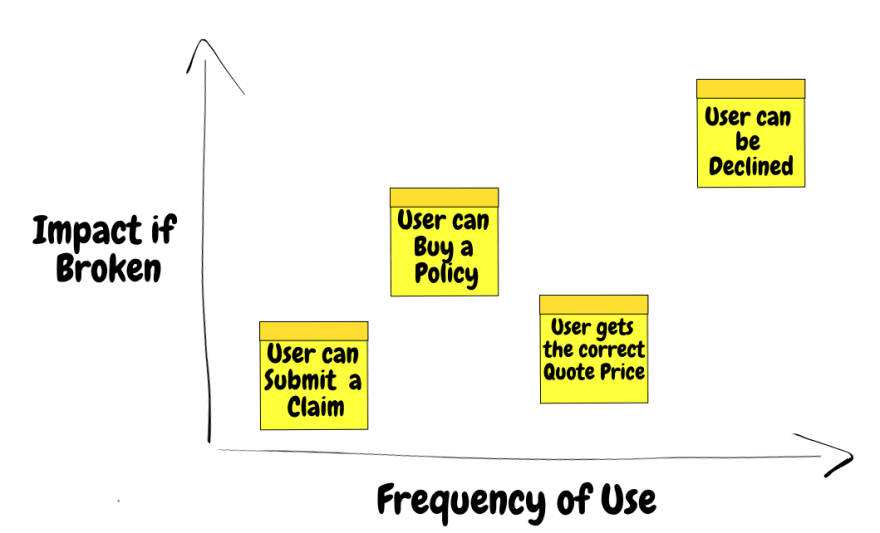

Get everyone to place the features where they think they belong. Since I have some experience in insurance, I will use that as an example.

Here I have four features:

- User can submit a claim: Users rarely submit claims and are able to call us if there is an issue with the online system.

- User can buy a policy: We need this to make money, but a user will only ever do it once and it is not the end of the world if it malfunctions.

- User can be declined: Every user will interact with this feature before buying a policy, and a single malfunction of this feature can be catastrophic to the business.

- User gets the correct price: Users need to get quotes that are reasonably priced, but this feature is about getting an accurate price, which doesn't carry as much risk as a decline.

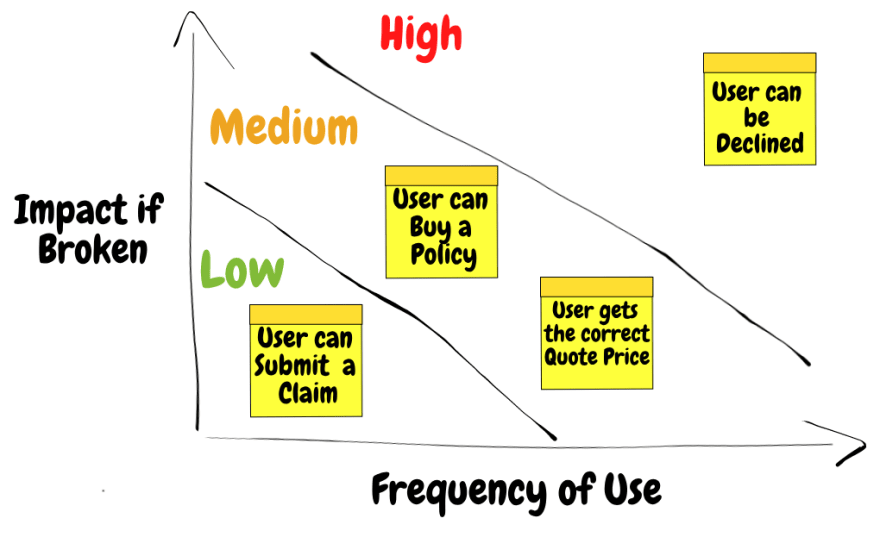

Once the features are understood by everyone, draw two or more lines from top left to bottom right. These divide the chart into zones that define how much risk the business faces if something goes wrong with a feature.

Once everyone has agreed on where features sit within the zones, conclude the meeting.
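If it helps to record the outcome of the meeting, the zones can be captured in a few lines of code. This is only a minimal sketch: it assumes the two axes of the chart are how often a feature is used and how costly a malfunction is, it models each diagonal line as "usage + impact = constant", and the `Feature` class, the 1 to 5 scores, and the cut-off values are all hypothetical rather than taken from the chart.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    usage: int   # assumed axis: how often users hit the feature, 1 (rare) to 5 (constant)
    impact: int  # assumed axis: cost of a malfunction, 1 (minor) to 5 (catastrophic)


# Each diagonal line is modelled as "usage + impact = constant".
# The cut-off values below are hypothetical; agree on your own in the meeting.
ZONE_LINES = [(4, "low"), (7, "medium")]


def risk_zone(feature: Feature) -> str:
    """Return the zone a feature falls in, given the diagonal lines."""
    score = feature.usage + feature.impact
    for upper_bound, zone in ZONE_LINES:
        if score <= upper_bound:
            return zone
    return "high"


# Illustrative scores only; real placements come from the people in the room.
features = [
    Feature("User can submit a claim", usage=1, impact=2),
    Feature("User can buy a policy", usage=2, impact=3),
    Feature("User gets the correct price", usage=4, impact=3),
    Feature("User can be declined", usage=5, impact=5),
]

zones = {f.name: risk_zone(f) for f in features}
```

Keeping the cut-offs in one list makes it cheap to add a line (and therefore a zone) if the team later decides two zones are not enough.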

Develop and Communicate the Technical Implementation

Gather all technical staff and develop a rough guide to testing different parts of the system based on the risk (risk-based testing). Following on from the previous example you may end up with something like this:

Feel free to have additional rows like trivial and critical to better classify the varying risks in your business.

In the example we have extra monitoring for success on the declines to let us know that the service is functioning as expected. We also manually test the service on pre-production before each release to be 100% sure it is working.

Once the technical team has decided on a rough testing strategy, they can present it back to the rest of the team. Developers should take on board any feedback and adapt.
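A guide like this can also live next to the code so it is easy to check against. The sketch below is hypothetical: the zone names and most of the practices are assumptions for illustration, and only the two extra steps for the highest-risk zone (success monitoring and a manual pre-production check) come from the decline example.

```python
from __future__ import annotations

# Hypothetical guide. Only the last two "high" entries follow the decline
# example in the text; everything else is an assumption for illustration.
TESTING_GUIDE = {
    "low": ["unit tests"],
    "medium": ["unit tests", "integration tests"],
    "high": [
        "unit tests",
        "integration tests",
        "success monitoring in production",
        "manual check on pre-production before each release",
    ],
}


def practices_for(zone: str) -> list[str]:
    """Look up the agreed practices for a risk zone, failing loudly on unknown zones."""
    try:
        return TESTING_GUIDE[zone]
    except KeyError:
        raise ValueError(f"no testing guide for zone {zone!r}") from None


def missing_practices(zone: str, in_place: set[str]) -> list[str]:
    """List the practices a feature in this zone still lacks."""
    return [p for p in practices_for(zone) if p not in in_place]
```

For instance, `missing_practices("high", {"unit tests", "integration tests"})` would point out that the monitoring and the manual pre-production check are still to be done.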

Learn and Improve

It is highly unlikely the strategy is perfect and it is important to keep improving.

A couple of ideas to improve your testing strategy are:

- Whenever there is a bug in production, assess its impact and, if it's significant, adjust your testing strategy to prevent it from happening again.

- Set metrics to measure the outcome of your testing strategy. An interesting metric I've heard of is escaped defects, which are issues that were not identified before reaching customers in production. If you are able to measure something like that accurately and weight it by the impact of each defect, it can provide insight into how your testing is performing.
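One possible way to weight escaped defects by impact is sketched below. The `Defect` class, the weights in `IMPACT_WEIGHTS`, and the sample defects are all made up for illustration; the idea is simply to compare the weighted defects that reached customers with all weighted defects found.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical impact weights; pick values that reflect your own business.
IMPACT_WEIGHTS = {"low": 1, "medium": 3, "high": 10}


@dataclass(frozen=True)
class Defect:
    summary: str
    impact: str                # one of the keys in IMPACT_WEIGHTS
    found_in_production: bool  # True if it reached customers before being caught


def weighted_escape_rate(defects: list[Defect]) -> float:
    """Share of the total impact-weighted defects that escaped to production."""
    total = sum(IMPACT_WEIGHTS[d.impact] for d in defects)
    if total == 0:
        return 0.0
    escaped = sum(IMPACT_WEIGHTS[d.impact] for d in defects if d.found_in_production)
    return escaped / total


# Made-up sample data, not real defects.
defects = [
    Defect("Typo on claim form", "low", found_in_production=True),
    Defect("Quote rounding error", "medium", found_in_production=False),
    Defect("Decline rule skipped", "high", found_in_production=False),
    Defect("Policy email not sent", "medium", found_in_production=True),
]

rate = weighted_escape_rate(defects)
```

Tracking this number release over release matters more than its absolute value: a rising rate suggests the strategy is letting more of the costly problems through.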

Thanks for reading.

Inspired by:

https://www.youtube.com/watch?v=RuGFSSUYGXQ

https://www.youtube.com/watch?v=rSY-zqDfc_s

Reviewed by: @nhnhatquan, @WickyNilliams

Top comments (5)

I very much should look into doing this. I can't get all stakeholders, but I can get a number of them. Right now many believe all features follow the high path, and we don't agree on what is high. We have a dedicated QA, so complete testing is obviously possible, and with automation we can even do it quickly 🤥.

I'm also fighting against bad testing, basically the equivalent of testing the compiler. We don't need to retest that 1=1 in every environment.

Yeah, it is not up to just the developers to decide what needs the most tests. Pragmatic testing means having the minimum number of tests you need to reach the confidence required for the business risk. Bad tests cost you time in maintenance; it is better to not have them imo. If you have a QA, you can use them to do stuff like accessibility and performance auditing instead of writing a lot of automated tests that slow down development.

Yes, I have seen a lot of ineffective tests as well. I am going to write about what makes a good unit test next :).

Yeah, I'm being directed toward leading QA, but I'm questioned at every step for making changes. Still, I have achieved enough that my push to make tests stable and reliable is working out.

One of the challenges in fighting the bad tests is that for so long their creator emphasized the limited time needed to maintain them, which, when compared to the same expectations of manually running the test, is completely true.

For tests like 0=0 I think you could make a case to remove them, but automation tests are more useful. I think it's worth keeping them in until you find a way to test the same stuff with integration or unit tests. If you have automation tests covering low-risk stuff, I think you could weigh the time it costs to run the tests against the value they provide and maybe trim them back.

Right, it can be hard to get to the bottom of why they are useful. We have the equivalent unit test, so it's not like I'm advocating for it to not be tested.

Lots of educating I need to do, like explaining declarative languages, their risks, and why the unit tests do cover the declarations.