https://deepsource.io/blog/tailscale-at-deepsource/

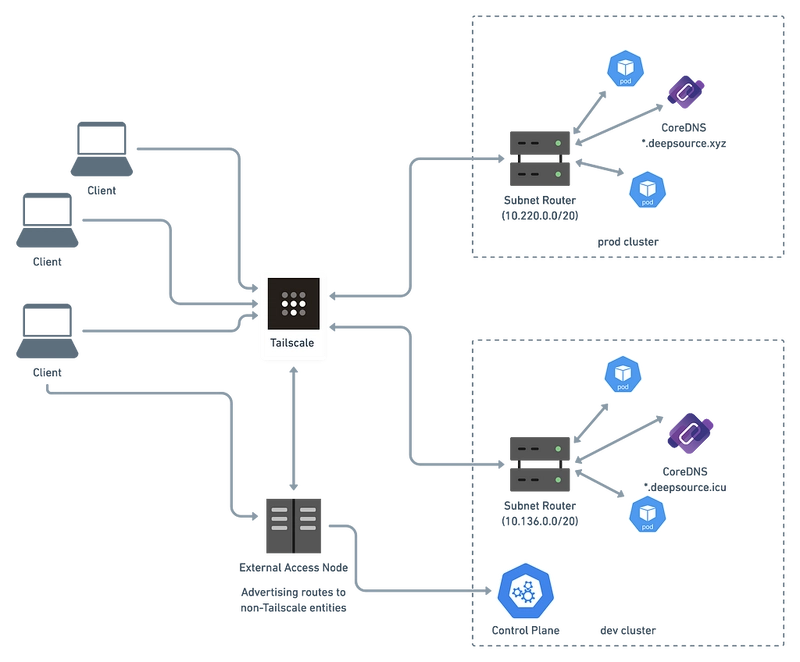

As we saw previously, Tailscale is a VPN service that makes the devices and applications you own reachable from anywhere in the world, securely and with little effort.

Accessing Red Hat CodeReady Containers in Azure from Tailscale …

There are many ways to run Tailscale inside a Kubernetes cluster; here I will focus on the subnet-router mode …

Subnet routers and traffic relay nodes

Launching a “High Memory” Ubuntu 22.04 LTS instance on Linode:

High Memory Instances | Linode

and, as in this previous article, using LXD as the base for a Kubernetes cluster with k3s:

- Building applications directly in Kubernetes with Acorn …

- LXD: five easy pieces | Ubuntu

- MicroK8s - MicroK8s in LXD | MicroK8s

root@localhost:~# snap install lxd --channel=5.9/stable

2022-12-25T13:40:38Z INFO Waiting for automatic snapd restart...

lxd (5.9/stable) 5.9-76c110d from Canonical✓ installed

root@localhost:~# lxd init --minimal

root@localhost:~# lxc profile show default > lxd-profile-default.yaml

root@localhost:~# vim lxd-profile-default.yaml

root@localhost:~# sed -ri "s'@@SSHPUB@@'$(cat ~/.ssh/id_rsa.pub)'" lxd-profile-default.yaml

root@localhost:~# lxc profile edit default < lxd-profile-default.yaml

root@localhost:~# lxc profile create microk8s

Profile microk8s created

root@localhost:~# wget https://raw.githubusercontent.com/ubuntu/microk8s/master/tests/lxc/microk8s.profile -O microk8s.profile

Saving to: ‘microk8s.profile’

microk8s.profile 100%[=============================================================================================>] 816 --.-KB/s in 0s

root@localhost:~# cat microk8s.profile | lxc profile edit microk8s

root@localhost:~# for i in {1..3}; do lxc launch -p default -p microk8s ubuntu:22.04 k3s$i; done

Creating k3s1

Starting k3s1

Creating k3s2

Starting k3s2

Creating k3s3

Starting k3s3

root@localhost:~# lxc ls

+------+---------+----------------------+-----------------------------------------------+-----------+-----------+

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

+------+---------+----------------------+-----------------------------------------------+-----------+-----------+

| k3s1 | RUNNING | 10.137.67.183 (eth0) | fd42:bb74:6eb3:8eb6:216:3eff:fe0d:bda8 (eth0) | CONTAINER | 0 |

+------+---------+----------------------+-----------------------------------------------+-----------+-----------+

| k3s2 | RUNNING | 10.137.67.177 (eth0) | fd42:bb74:6eb3:8eb6:216:3eff:fe2e:fdcf (eth0) | CONTAINER | 0 |

+------+---------+----------------------+-----------------------------------------------+-----------+-----------+

| k3s3 | RUNNING | 10.137.67.222 (eth0) | fd42:bb74:6eb3:8eb6:216:3eff:fe9b:c0d2 (eth0) | CONTAINER | 0 |

+------+---------+----------------------+-----------------------------------------------+-----------+-----------+
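The `sed` step in the transcript above replaces an `@@SSHPUB@@` placeholder in the exported profile with the host's public SSH key. It uses `'` as the `sed` delimiter, presumably because base64 key material can contain `/`. The edits made in `vim` are not shown, so the template line, the dummy key and the `render_profile` helper below are assumptions for illustration only. A minimal sketch of the same substitution:

```shell
# Replace the @@SSHPUB@@ placeholder in a template with a public key.
# $1: template text (hypothetical; the real profile is not shown in the article)
# $2: public key
render_profile() {
  # "'" is the sed delimiter, so "/" in the key needs no escaping.
  # This would still break on a key containing "'", "&" or "\".
  printf '%s\n' "$1" | sed "s'@@SSHPUB@@'$2'"
}

# Dummy key with "/" and "+" characters, as found in real base64 key material.
render_profile '  - @@SSHPUB@@' 'ssh-rsa AAAA/BBBB+cccc root@localhost'
```

With a conventional `/` delimiter the same expression would fail as soon as the key contained a slash, which is why the alternative delimiter is a sensible choice here.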

Using k3sup to create the k3s cluster on top of these LXC instances:

- OpenStack on LXD with Juju and k3sup on phoenixNAP …

- GitHub - alexellis/k3sup: bootstrap K3s over SSH in < 60s 🚀

root@localhost:~# curl -sLS https://get.k3sup.dev | sh

x86_64

Downloading package https://github.com/alexellis/k3sup/releases/download/0.12.12/k3sup as /tmp/k3sup

Download complete.

Running with sufficient permissions to attempt to move k3sup to /usr/local/bin

New version of k3sup installed to /usr/local/bin

_ _____

| | _| ___/___ _ _ _ __

| |/ / |_ \/ __| | | | '_ \

| < ___) \__ \ |_| | |_) |

|_|\_\ ____/|___ /\ __,_| .__ /

|_|

bootstrap K3s over SSH in < 60s 🚀

🐳 k3sup needs your support: https://github.com/sponsors/alexellis

Version: 0.12.12

Git Commit: 02c7a775b9914b9dcf3b90fa7935eb347b7979e7

================================================================

alexellis's work on k3sup needs your support

https://github.com/sponsors/alexellis

================================================================

root@localhost:~# k3sup --help

Usage:

k3sup [flags]

k3sup [command]

Examples:

# Install k3s on a server with embedded etcd

k3sup install \

--cluster \

--host $SERVER_1 \

--user $SERVER_1_USER \

--k3s-channel stable

# Join a second server

k3sup join \

--server \

--host $SERVER_2 \

--user $SERVER_2_USER \

--server-host $SERVER_1 \

--server-user $SERVER_1_USER \

--k3s-channel stable

# Join an agent to the cluster

k3sup join \

--host $SERVER_1 \

--user $SERVER_1_USER \

--k3s-channel stable

🐳 k3sup needs your support: https://github.com/sponsors/alexellis

Available Commands:

completion Generate the autocompletion script for the specified shell

help Help about any command

install Install k3s on a server via SSH

join Install the k3s agent on a remote host and join it to an existing server

ready Check if a cluster is ready using kubectl.

update Print update instructions

version Print the version

Flags:

-h, --help help for k3sup

Use "k3sup [command] --help" for more information about a command.

root@localhost:~# export IP=10.137.67.183

root@localhost:~# k3sup install --ip $IP --user ubuntu

Running: k3sup install

2022/12/25 14:30:07 10.137.67.183

Public IP: 10.137.67.183

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

Result: [INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

[INFO] systemd: Starting k3s

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

Saving file to: /root/kubeconfig

# Test your cluster with:

export KUBECONFIG=/root/kubeconfig

kubectl config use-context default

kubectl get node -o wide

🐳 k3sup needs your support: https://github.com/sponsors/alexellis

root@localhost:~# export AGENT1_IP=10.137.67.177

root@localhost:~# k3sup join --ip $AGENT1_IP --server-ip $IP --user ubuntu

Running: k3sup join

Server IP: 10.137.67.183

K107122269d6d9c54733f9c835439e668a98b6b0a9c5b9cc7ce7272b0cc9b294b09::server:ccc6e02aac3498cabc1b8ab7b3f334c1

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

Logs: Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

Output: [INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

[INFO] systemd: Starting k3s-agent

🐳 k3sup needs your support: https://github.com/sponsors/alexellis

root@localhost:~# export AGENT2_IP=10.137.67.222

root@localhost:~# k3sup join --ip $AGENT2_IP --server-ip $IP --user ubuntu

Running: k3sup join

Server IP: 10.137.67.183

K107122269d6d9c54733f9c835439e668a98b6b0a9c5b9cc7ce7272b0cc9b294b09::server:ccc6e02aac3498cabc1b8ab7b3f334c1

[INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

[INFO] systemd: Starting k3s-agent

Logs: Created symlink /etc/systemd/system/multi-user.target.wants/k3s-agent.service → /etc/systemd/system/k3s-agent.service.

Output: [INFO] Finding release for channel stable

[INFO] Using v1.25.4+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.25.4+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-agent-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s-agent.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s-agent.service

[INFO] systemd: Enabling k3s-agent unit

[INFO] systemd: Starting k3s-agent

🐳 k3sup needs your support: https://github.com/sponsors/alexellis
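The two `k3sup join` invocations above differ only in the agent address, so they could be generated in a loop. The sketch below only prints the commands (a dry run, nothing is executed). It uses only the flags that appear in the transcript, and the function name `join_commands` is made up for illustration.

```shell
# Print one "k3sup join" command per agent IP (dry run).
# $1: server IP, $2: SSH user, remaining arguments: agent IPs.
join_commands() {
  server_ip=$1
  user=$2
  shift 2
  for agent_ip in "$@"; do
    echo "k3sup join --ip $agent_ip --server-ip $server_ip --user $user"
  done
}

# Addresses taken from the "lxc ls" output above.
join_commands 10.137.67.183 ubuntu 10.137.67.177 10.137.67.222
```

Piping the output to `sh` would run the joins for real, one agent after the other.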

The k3s cluster is up and running:

root@localhost:~# mkdir .kube

root@localhost:~# cp kubeconfig .kube/config

root@localhost:~# kubectl cluster-info

Kubernetes control plane is running at https://10.137.67.183:6443

CoreDNS is running at https://10.137.67.183:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://10.137.67.183:6443/api/v1/namespaces/kube-system/services/https:metrics-server:https/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

root@localhost:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k3s1 Ready control-plane,master 5m30s v1.25.4+k3s1 10.137.67.183 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

k3s2 Ready <none> 3m19s v1.25.4+k3s1 10.137.67.177 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

k3s3 Ready <none> 2m41s v1.25.4+k3s1 10.137.67.222 <none> Ubuntu 22.04.1 LTS 5.15.0-47-generic containerd://1.6.8-k3s1

root@localhost:~# kubectl get po,svc -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-79f67d76f8-zg7wg 1/1 Running 0 5m20s

kube-system pod/coredns-597584b69b-xzpsb 1/1 Running 0 5m20s

kube-system pod/helm-install-traefik-crd-gpxp2 0/1 Completed 0 5m20s

kube-system pod/helm-install-traefik-8tkzk 0/1 Completed 1 5m20s

kube-system pod/svclb-traefik-7833f573-cl5nj 2/2 Running 0 5m4s

kube-system pod/traefik-bb69b68cd-59mwp 1/1 Running 0 5m4s

kube-system pod/metrics-server-5c8978b444-sxhgg 1/1 Running 0 5m20s

kube-system pod/svclb-traefik-7833f573-xdp5t 2/2 Running 0 3m14s

kube-system pod/svclb-traefik-7833f573-5nhph 2/2 Running 0 2m37s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 5m36s

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 5m32s

kube-system service/metrics-server ClusterIP 10.43.129.55 <none> 443/TCP 5m31s

kube-system service/traefik LoadBalancer 10.43.253.230 10.137.67.177,10.137.67.183,10.137.67.222,fd42:bb74:6eb3:8eb6:216:3eff:fe0d:bda8,fd42:bb74:6eb3:8eb6:216:3eff:fe2e:fdcf,fd42:bb74:6eb3:8eb6:216:3eff:fe9b:c0d2 80:30133/TCP,443:30501/TCP 5m4s

I will build on this GitHub repository:

tailscale/docs/k8s at main · tailscale/tailscale

These are the default address ranges in k3s:

K3s Server Configuration | K3s

root@localhost:~# git clone https://github.com/tailscale/tailscale

Cloning into 'tailscale'...

root@localhost:~# cd tailscale/docs/k8s/

root@localhost:~/tailscale/docs/k8s# tree .

.

├── Makefile

├── proxy.yaml

├── README.md

├── rolebinding.yaml

├── role.yaml

├── sa.yaml

├── sidecar.yaml

├── subnet.yaml

└── userspace-sidecar.yaml

0 directories, 9 files

Creating a secret that holds the Tailscale auth key, along with the role-based access control (RBAC) objects that allow the Tailscale Pod to read and write that secret:

root@localhost:~/tailscale/docs/k8s# cat secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: tailscale-auth
stringData:
  TS_AUTH_KEY: tskey-auth-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

root@localhost:~/tailscale/docs/k8s# kubectl apply -f secret.yaml

secret/tailscale-auth created

root@localhost:~/tailscale/docs/k8s# export SA_NAME=tailscale

root@localhost:~/tailscale/docs/k8s# export TS_KUBE_SECRET=tailscale-auth

root@localhost:~/tailscale/docs/k8s# make rbac

role.rbac.authorization.k8s.io/tailscale created

rolebinding.rbac.authorization.k8s.io/tailscale created

serviceaccount/tailscale created

The repository does indeed ship a Makefile for these actions:

root@localhost:~/tailscale/docs/k8s# cat Makefile

# Copyright (c) 2022 Tailscale Inc & AUTHORS All rights reserved.
# Use of this source code is governed by a BSD-style
# license that can be found in the LICENSE file.

TS_ROUTES ?= ""
SA_NAME ?= tailscale
TS_KUBE_SECRET ?= tailscale

rbac:
	@sed -e "s;{{TS_KUBE_SECRET}};$(TS_KUBE_SECRET);g" role.yaml | kubectl apply -f -
	@sed -e "s;{{SA_NAME}};$(SA_NAME);g" rolebinding.yaml | kubectl apply -f -
	@sed -e "s;{{SA_NAME}};$(SA_NAME);g" sa.yaml | kubectl apply -f -

sidecar:
	@kubectl delete -f sidecar.yaml --ignore-not-found --grace-period=0
	@sed -e "s;{{TS_KUBE_SECRET}};$(TS_KUBE_SECRET);g" sidecar.yaml | sed -e "s;{{SA_NAME}};$(SA_NAME);g" | kubectl create -f-

userspace-sidecar:
	@kubectl delete -f userspace-sidecar.yaml --ignore-not-found --grace-period=0
	@sed -e "s;{{TS_KUBE_SECRET}};$(TS_KUBE_SECRET);g" userspace-sidecar.yaml | sed -e "s;{{SA_NAME}};$(SA_NAME);g" | kubectl create -f-

proxy:
	kubectl delete -f proxy.yaml --ignore-not-found --grace-period=0
	sed -e "s;{{TS_KUBE_SECRET}};$(TS_KUBE_SECRET);g" proxy.yaml | sed -e "s;{{SA_NAME}};$(SA_NAME);g" | sed -e "s;{{TS_DEST_IP}};$(TS_DEST_IP);g" | kubectl create -f-

subnet-router:
	@kubectl delete -f subnet.yaml --ignore-not-found --grace-period=0
	@sed -e "s;{{TS_KUBE_SECRET}};$(TS_KUBE_SECRET);g" subnet.yaml | sed -e "s;{{SA_NAME}};$(SA_NAME);g" | sed -e "s;{{TS_ROUTES}};$(TS_ROUTES);g" | kubectl create -f-
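Each Makefile target is a small templating pipeline: `sed` replaces `{{NAME}}` placeholders with the value of the matching variable, and the result is piped to `kubectl`. The `;` delimiter matters because `TS_ROUTES` holds CIDRs, which contain `/`. Here is a sketch of the same rendering step in plain shell. It runs against a made-up one-line template rather than the real `subnet.yaml` and does not call `kubectl`; the `render` helper is hypothetical.

```shell
# Render {{TS_KUBE_SECRET}}, {{SA_NAME}} and {{TS_ROUTES}} placeholders read
# from stdin, mirroring the sed chain of the "subnet-router" target.
render() {
  sed -e "s;{{TS_KUBE_SECRET}};${TS_KUBE_SECRET};g" \
    | sed -e "s;{{SA_NAME}};${SA_NAME};g" \
    | sed -e "s;{{TS_ROUTES}};${TS_ROUTES};g"
}

# Values used in this article.
TS_KUBE_SECRET=tailscale-auth
SA_NAME=tailscale
TS_ROUTES=10.43.0.0/16,10.42.0.0/16

# Hypothetical template line, not taken from subnet.yaml.
echo 'secret={{TS_KUBE_SECRET}} sa={{SA_NAME}} routes={{TS_ROUTES}}' | render
```

Rendering to standard output first is also a convenient way to inspect a manifest before it is sent to the cluster.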

Using the default address ranges to deploy the subnet router with Tailscale:

root@localhost:~/tailscale/docs/k8s# SERVICE_CIDR=10.43.0.0/16

root@localhost:~/tailscale/docs/k8s# POD_CIDR=10.42.0.0/16

root@localhost:~/tailscale/docs/k8s# export TS_ROUTES=$SERVICE_CIDR,$POD_CIDR

root@localhost:~/tailscale/docs/k8s# make subnet-router

pod/subnet-router created

And it appears in the Tailscale admin console:

root@localhost:~# kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

subnet-router 1/1 Running 0 4m34s 10.42.2.3 k3s3 <none> <none>

root@localhost:~# kubectl logs subnet-router

boot: 2022/12/25 14:55:54 Starting tailscaled

boot: 2022/12/25 14:55:54 Waiting for tailscaled socket

2022/12/25 14:55:54 logtail started

2022/12/25 14:55:54 Program starting: v1.34.1-t331d553a5, Go 1.19.2-ts3fd24dee31: []string{"tailscaled", "--socket=/tmp/tailscaled.sock", "--state=kube:tailscale-auth", "--statedir=/tmp", "--tun=userspace-networking"}

with the routes to the address ranges configured in the Tailscale Pod being advertised:

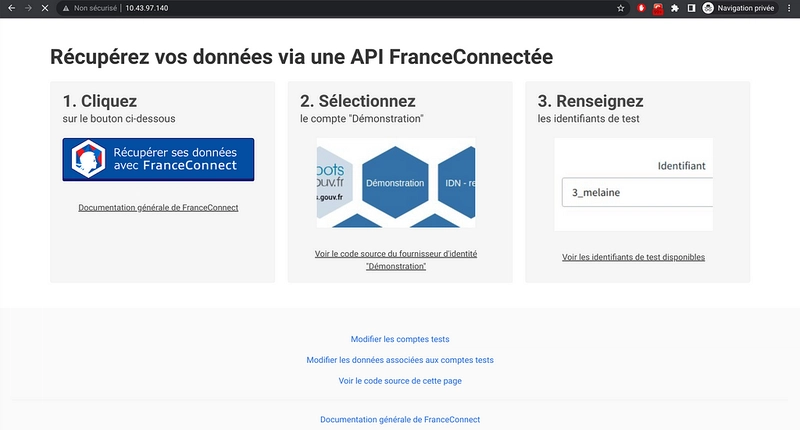

Testing with the perennial FranceConnect demonstrator and this YAML manifest:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fcdemo3
  labels:
    app: fcdemo3
spec:
  replicas: 4
  selector:
    matchLabels:
      app: fcdemo3
  template:
    metadata:
      labels:
        app: fcdemo3
    spec:
      containers:
      - name: fcdemo3
        image: mcas/franceconnect-demo3:latest
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: fcdemo-service
spec:
  type: ClusterIP
  selector:
    app: fcdemo3
  ports:
  - protocol: TCP
    port: 80
    targetPort: 3000

No NodePort, Ingress or LoadBalancer here. Just plain ClusterIP mode …

root@localhost:~# kubectl apply -f fcdemo.yaml

deployment.apps/fcdemo3 created

service/fcdemo-service created

root@localhost:~# kubectl get po,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/subnet-router 1/1 Running 0 10m 10.42.2.3 k3s3 <none> <none>

pod/fcdemo3-785559847c-w5d2r 1/1 Running 0 37s 10.42.1.3 k3s2 <none> <none>

pod/fcdemo3-785559847c-l856z 1/1 Running 0 37s 10.42.1.4 k3s2 <none> <none>

pod/fcdemo3-785559847c-zd26q 1/1 Running 0 37s 10.42.2.4 k3s3 <none> <none>

pod/fcdemo3-785559847c-2t2wp 1/1 Running 0 37s 10.42.0.9 k3s1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 36m <none>

service/fcdemo-service ClusterIP 10.43.97.140 <none> 80/TCP 37s app=fcdemo3

Since Tailscale gives me direct access to the Service and Pod address ranges of this k3s cluster, I can confirm that the FranceConnect demo portal is reachable:

I can also reach it directly with the Pod's IP address:

Then, from another Ubuntu 22.04 LTS instance on DigitalOcean that is connected to Tailscale, I can use a proxy to reach the FC portal:

root@proxy:~# curl -fsSL https://tailscale.com/install.sh | sh

Installing Tailscale for ubuntu jammy, using method apt

+ mkdir -p --mode=0755 /usr/share/keyrings

+ tee /usr/share/keyrings/tailscale-archive-keyring.gpg

+ curl -fsSL https://pkgs.tailscale.com/stable/ubuntu/jammy.noarmor.gpg

+ tee /etc/apt/sources.list.d/tailscale.list

+ curl -fsSL https://pkgs.tailscale.com/stable/ubuntu/jammy.tailscale-keyring.list

# Tailscale packages for ubuntu jammy

deb [signed-by=/usr/share/keyrings/tailscale-archive-keyring.gpg] https://pkgs.tailscale.com/stable/ubuntu jammy main

+ apt-get update

+ apt-get install -y tailscale

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

The following NEW packages will be installed:

tailscale

0 upgraded, 1 newly installed, 0 to remove and 70 not upgraded.

Need to get 22.0 MB of archives.

After this operation, 40.9 MB of additional disk space will be used.

Get:1 https://pkgs.tailscale.com/stable/ubuntu jammy/main amd64 tailscale amd64 1.34.1 [22.0 MB]

Fetched 22.0 MB in 3s (7602 kB/s)

Selecting previously unselected package tailscale.

(Reading database ... 64443 files and directories currently installed.)

Preparing to unpack .../tailscale_1.34.1_amd64.deb ...

Unpacking tailscale (1.34.1) ...

Setting up tailscale (1.34.1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/tailscaled.service → /lib/systemd/system/tailscaled.service.

NEEDRESTART-VER: 3.5

NEEDRESTART-KCUR: 5.15.0-50-generic

NEEDRESTART-KEXP: 5.15.0-50-generic

NEEDRESTART-KSTA: 1

+ [false = true]

+ set +x

Installation complete! Log in to start using Tailscale by running:

tailscale up

root@proxy:~# tailscale up --accept-routes --authkey tskey-auth-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

root@proxy:~# ip a | grep tailscale

4: tailscale0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1280 qdisc fq_codel state UNKNOWN group default qlen 500

inet 100.64.32.96/32 scope global tailscale0

Installing Rust and Cargo:

rustup.rs - The Rust toolchain installer

root@proxy:~# curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

for this package:

crates.io: Rust Package Registry

root@proxy:~# cargo install reverseproxy

Updating crates.io index

Installing reverseproxy v0.1.0

Compiling autocfg v1.1.0

Compiling proc-macro2 v1.0.49

Compiling libc v0.2.139

Compiling quote v1.0.23

Compiling unicode-ident v1.0.6

Compiling syn v1.0.107

Compiling cfg-if v1.0.0

Compiling memchr v2.5.0

Compiling version_check v0.9.4

Compiling futures-core v0.3.25

Compiling slab v0.4.7

Compiling futures-channel v0.3.25

Compiling futures-task v0.3.25

Compiling log v0.4.17

Compiling proc-macro-error-attr v1.0.4

Compiling lock_api v0.4.9

Compiling futures-sink v0.3.25

Compiling futures-util v0.3.25

Compiling pin-project-lite v0.2.9

Compiling parking_lot_core v0.9.5

Compiling proc-macro-error v1.0.4

Compiling futures-macro v0.3.25

Compiling futures-io v0.3.25

Compiling scopeguard v1.1.0

Compiling smallvec v1.10.0

Compiling pin-utils v0.1.0

Compiling getrandom v0.2.8

Compiling indexmap v1.9.2

Compiling tokio v1.23.0

Compiling thiserror v1.0.38

Compiling rand_core v0.6.4

Compiling parking_lot v0.12.1

Compiling mio v0.8.5

Compiling aho-corasick v0.7.20

Compiling thiserror-impl v1.0.38

Compiling tokio-macros v1.8.2

Compiling atty v0.2.14

Compiling num_cpus v1.15.0

Compiling signal-hook-registry v1.4.0

Compiling socket2 v0.4.7

Compiling hashbrown v0.12.3

Compiling bytes v1.3.0

Compiling regex-syntax v0.6.28

Compiling os_str_bytes v6.4.1

Compiling heck v0.4.0

Compiling termcolor v1.1.3

Compiling ppv-lite86 v0.2.17

Compiling anyhow v1.0.68

Compiling rand_chacha v0.3.1

Compiling clap_derive v3.2.18

Compiling clap_lex v0.2.4

Compiling regex v1.7.0

Compiling futures-executor v0.3.25

Compiling bitflags v1.3.2

Compiling humantime v2.1.0

Compiling either v1.8.0

Compiling strsim v0.10.0

Compiling textwrap v0.16.0

Compiling once_cell v1.16.0

Compiling clap v3.2.23

Compiling tokio-socks v0.5.1

Compiling env_logger v0.9.3

Compiling futures v0.3.25

Compiling rand v0.8.5

Compiling reverseproxy v0.1.0

Finished release [optimized] target(s) in 2m 32s

Installing /root/.cargo/bin/reverseproxy

Installed package `reverseproxy v0.1.0` (executable `reverseproxy`)

root@proxy:~# reverseproxy --help

reverseproxy 0.1.0

Yuki Kishimoto <yukikishimoto@proton.me>

TCP Reverse Proxy written in Rust

USAGE:

reverseproxy [OPTIONS] <LOCAL_ADDR> <FORWARD_ADDR>

ARGS:

<LOCAL_ADDR> Local address and port (ex. 127.0.0.1:8080)

<FORWARD_ADDR> Address and port to forward traffic (ex. torhiddenservice.onion:80)

OPTIONS:

-h, --help Print help information

--socks5-proxy <SOCKS5_PROXY> Socks5 proxy (ex. 127.0.0.1:9050)

-V, --version Print version information

The advertised routes let me reach the Pod and Service address ranges of the k3s cluster from this DigitalOcean instance via Tailscale:

root@proxy:~# ping -c 4 10.42.2.3

PING 10.42.2.3 (10.42.2.3) 56(84) bytes of data.

64 bytes from 10.42.2.3: icmp_seq=1 ttl=64 time=87.8 ms

64 bytes from 10.42.2.3: icmp_seq=2 ttl=64 time=20.2 ms

64 bytes from 10.42.2.3: icmp_seq=3 ttl=64 time=24.7 ms

64 bytes from 10.42.2.3: icmp_seq=4 ttl=64 time=19.9 ms

--- 10.42.2.3 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3005ms

rtt min/avg/max/mdev = 19.898/38.132/87.793/28.734 ms

root@proxy:~# ping -c 4 10.42.2.4

PING 10.42.2.4 (10.42.2.4) 56(84) bytes of data.

64 bytes from 10.42.2.4: icmp_seq=1 ttl=64 time=20.7 ms

64 bytes from 10.42.2.4: icmp_seq=2 ttl=64 time=20.4 ms

64 bytes from 10.42.2.4: icmp_seq=3 ttl=64 time=19.9 ms

64 bytes from 10.42.2.4: icmp_seq=4 ttl=64 time=20.5 ms

--- 10.42.2.4 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3006ms

rtt min/avg/max/mdev = 19.907/20.380/20.726/0.301 ms
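The pings succeed because both Pod addresses fall inside the `10.42.0.0/16` route advertised by the subnet router; likewise, the Service address `10.43.97.140` falls inside `10.43.0.0/16`. As a quick way to check which addresses the advertised routes cover, here is a small IPv4-only sketch in shell arithmetic. The helper names `ip_to_int` and `cidr_contains` are illustrative, and input validation is omitted.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit status 0) if IPv4 address $2 lies inside CIDR $1.
cidr_contains() {
  net=${1%/*}
  bits=${1#*/}
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$2") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
}

# Pod, Service and LXD bridge addresses taken from the outputs above.
for ip in 10.42.2.3 10.42.2.4 10.43.97.140 10.137.67.183; do
  if cidr_contains 10.42.0.0/16 "$ip" || cidr_contains 10.43.0.0/16 "$ip"; then
    echo "$ip: covered by the advertised routes"
  else
    echo "$ip: not covered"
  fi
done
```

The last address belongs to the LXD bridge network of the nodes, which is not part of `TS_ROUTES`, so it is reported as not covered.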

Exposing the FC demonstrator locally via reverseproxy:

root@proxy:~# reverseproxy 0.0.0.0:80 10.43.97.140:80

[2022-12-25T16:29:00Z INFO reverseproxy::tcp] Listening on 0.0.0.0:80

Exposing the Pod via reverseproxy as well:

root@proxy:~# nohup reverseproxy 127.0.0.1:3000 10.42.1.3:3000 &

[1] 4554

root@proxy:~# cat nohup.out

[2022-12-25T16:31:14Z INFO reverseproxy::tcp] Listening on 127.0.0.1:3000

root@proxy:~# curl http://localhost:3000

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, user-scalable=no, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0">

<meta http-equiv="X-UA-Compatible" content="ie=edge">

<link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/bulma/0.7.1/css/bulma.min.css" integrity="sha256-zIG416V1ynj3Wgju/scU80KAEWOsO5rRLfVyRDuOv7Q=" crossorigin="anonymous" />

<link rel="stylesheet" href="https://unpkg.com/bulma-tooltip@2.0.2/dist/css/bulma-tooltip.min.css" />

<title>Démonstrateur Fournisseur de Service</title>

</head>

<link rel="stylesheet" href="css/highlight.min.css">

<script src="js/highlight.min.js"></script>

<script>hljs.initHighlightingOnLoad();</script>

</body>

</html>

Using Tailscale Funnel, a feature that lets you route traffic from the wider Internet to one or more of your Tailscale nodes. You can think of it as publicly sharing a node so that anyone can reach it, even without running Tailscale themselves.

Tailscale Funnel is currently in alpha.

root@proxy:~# tailscale serve --help

USAGE

serve [flags] <mount-point> {proxy|path|text} <arg>

serve [flags] <sub-command> [sub-flags] <args>

***ALPHA; all of this is subject to change***

The 'tailscale serve' set of commands allows you to serve

content and local servers from your Tailscale node to

your tailnet.

You can also choose to enable the Tailscale Funnel with:

'tailscale serve funnel on'. Funnel allows you to publish

a 'tailscale serve' server publicly, open to the entire

internet. See https://tailscale.com/funnel.

EXAMPLES

- To proxy requests to a web server at 127.0.0.1:3000:

$ tailscale serve / proxy 3000

- To serve a single file or a directory of files:

$ tailscale serve / path /home/alice/blog/index.html

$ tailscale serve /images/ path /home/alice/blog/images

- To serve simple static text:

$ tailscale serve / text "Hello, world!"

SUBCOMMANDS

status show current serve status

tcp add or remove a TCP port forward

funnel turn Tailscale Funnel on or off

FLAGS

--remove, --remove=false

remove an existing serve config (default false)

--serve-port uint

port to serve on (443, 8443 or 10000) (default 443)

root@proxy:~# tailscale serve / proxy 3000

root@proxy:~# tailscale serve funnel on

root@proxy:~# tailscale serve status

https://proxy.shark-lizard.ts.net (Funnel on)

|-- / proxy http://127.0.0.1:3000

I then browse to the URL provided:

Other modes of using Tailscale exist. Running it as a sidecar, for example, lets you expose a Kubernetes pod directly on Tailscale. This is particularly useful if you do not want to expose a service on the public internet. This method provides bidirectional connectivity between the pod and the other devices on the tailnet. You can use ACLs to control the traffic flow.

You can also run the sidecar in userspace networking mode. The obvious benefit is that Tailscale needs fewer permissions to run. The downside is that for outbound connectivity from the pod to the tailnet, you will need to use either the SOCKS5 proxy or the HTTP proxy.
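In userspace mode no `tailscale0` interface is created inside the pod, so outbound connections to the tailnet have to be pointed at the proxy explicitly. A minimal sketch follows, assuming `tailscaled` in the sidecar was started with a SOCKS5 listener on `localhost:1055` (for example through its `--socks5-server` flag). The listener address and the `TS_PROXY_ADDR` variable name are assumptions, not values from this article.

```shell
# Assumed SOCKS5 listener address of the userspace tailscaled (not from the article).
TS_PROXY_ADDR=${TS_PROXY_ADDR:-localhost:1055}

# curl and many other clients honour ALL_PROXY for outbound connections.
export ALL_PROXY="socks5://${TS_PROXY_ADDR}/"
echo "ALL_PROXY=$ALL_PROXY"

# A request to a tailnet peer would then go through the proxy, for instance:
#   curl http://<tailnet-address-of-a-peer>/
# (left commented out because it needs a running proxy and a real peer address)
```

Applications that ignore proxy environment variables would need their own proxy setting, which is the practical cost of the reduced permissions.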

To be continued!
