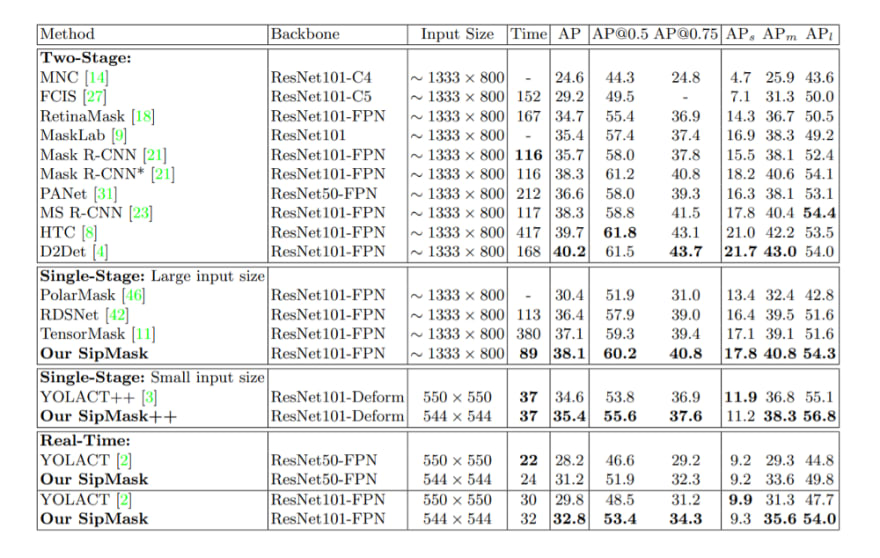

SipMask is a one-stage neural network for instance segmentation of objects in images. On the COCO test-dev dataset, it outperforms previous state-of-the-art one-stage approaches. Compared to TensorMask, SipMask achieves a 1% AP gain while producing predictions four times faster. It also outperforms YOLACT by 3% AP.

More about the model

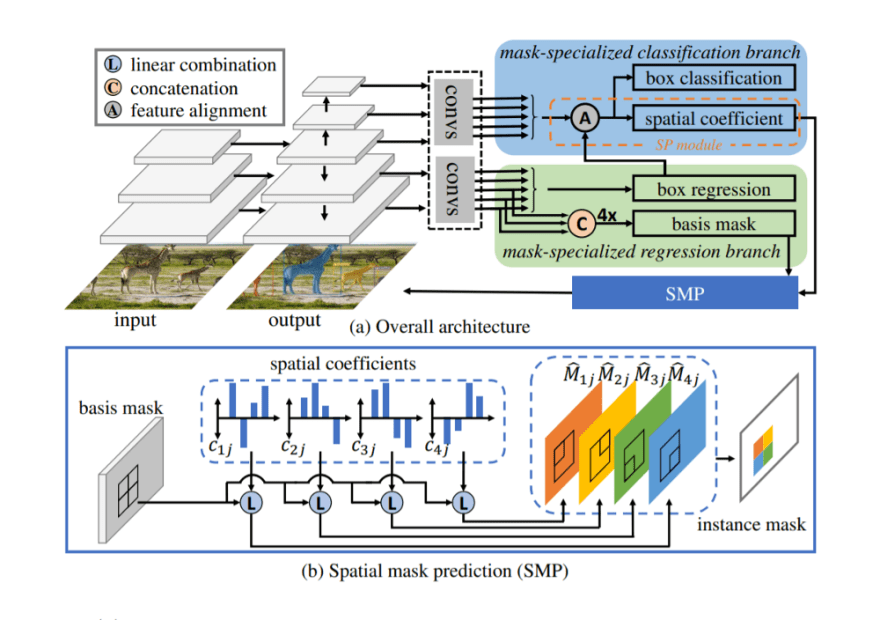

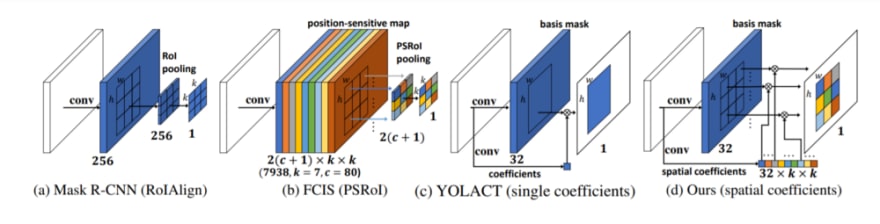

A key feature of the architecture is a new spatial preservation (SP) module, a feature pooling mechanism designed for one-stage instance segmentation. Its purpose is to preserve spatial information about each object.
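According to the SipMask paper, the SP module preserves spatial information by splitting each detected bounding box into a k × k grid of sub-regions and handling mask prediction separately for each one. The sketch below shows only that partition step. It assumes boxes are given as `(x1, y1, x2, y2)` in pixel coordinates and uses k = 2 as an illustrative default; the function name `split_box` is hypothetical and not taken from the SipMask code.

```python
def split_box(box, k=2):
    """Split a box (x1, y1, x2, y2) into k*k equal sub-boxes, in row-major order.

    Hypothetical helper illustrating the SP module's sub-region idea;
    not the official SipMask implementation.
    """
    x1, y1, x2, y2 = box
    w = (x2 - x1) / k  # width of one sub-region
    h = (y2 - y1) / k  # height of one sub-region
    sub_boxes = []
    for i in range(k):      # row index (top to bottom)
        for j in range(k):  # column index (left to right)
            sub_boxes.append(
                (x1 + j * w, y1 + i * h, x1 + (j + 1) * w, y1 + (i + 1) * h)
            )
    return sub_boxes


# A 10x20 box split into 2x2 quadrants:
print(split_box((0, 0, 10, 20)))
# → [(0.0, 0.0, 5.0, 10.0), (5.0, 0.0, 10.0, 10.0), (0.0, 10.0, 5.0, 20.0), (5.0, 10.0, 10.0, 20.0)]
```

Keeping the sub-regions separate means that, for example, the top-left quadrant of an object can be described by different coefficients than the bottom-right one, rather than one set of coefficients for the whole box.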

The model is built on the FCOS architecture. To adapt it to instance segmentation, the two standard branches, classification and regression, are replaced with mask-specialized classification and regression branches. The classification branch predicts class scores and assigns spatial coefficients to sub-regions of each object's bounding box. The SP module then uses these coefficients to predict the individual instance masks.
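To make the role of the spatial coefficients more concrete, here is a minimal NumPy sketch of how per-sub-region coefficients might be combined into one instance mask. It assumes a YOLACT-style setup with a set of `m` basis masks shared across the image, one coefficient vector per sub-region, and a sigmoid applied to each linear combination before it is cropped to its sub-region. The names `assemble_mask` and `sigmoid`, the array layouts, and k = 2 are all illustrative assumptions, not the SipMask code.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def assemble_mask(basis, coeffs, box, k=2):
    """Build one instance mask from shared basis masks and per-sub-region coefficients.

    Hypothetical sketch, not the official SipMask implementation.
    basis:  array of shape (m, H, W), basis masks shared by all instances
    coeffs: array of shape (k*k, m), one coefficient vector per sub-region (row-major)
    box:    integer pixel box (x1, y1, x2, y2), with x2 and y2 exclusive
    """
    _, H, W = basis.shape
    mask = np.zeros((H, W), dtype=float)  # pixels outside the box stay 0
    x1, y1, x2, y2 = box
    # Sub-region boundaries along each axis (k + 1 edges per axis).
    xs = np.linspace(x1, x2, k + 1).round().astype(int)
    ys = np.linspace(y1, y2, k + 1).round().astype(int)
    for i in range(k):      # sub-region row
        for j in range(k):  # sub-region column
            c = coeffs[i * k + j]
            # Linear combination of the basis masks with this sub-region's coefficients.
            combined = np.tensordot(c, basis, axes=1)  # shape (H, W)
            ya, yb = ys[i], ys[i + 1]
            xa, xb = xs[j], xs[j + 1]
            # Keep only the part of the combined map that falls in this sub-region.
            mask[ya:yb, xa:xb] = sigmoid(combined[ya:yb, xa:xb])
    return mask


# Usage: 3 constant basis masks on an 8x8 image; only the top-left sub-region
# gets positive coefficients, so it scores above 0.5 while the rest of the box is 0.5.
coeffs = np.zeros((4, 3))
coeffs[0] = [1.0, 1.0, 1.0]
mask = assemble_mask(np.ones((3, 8, 8)), coeffs, (2, 2, 6, 6))
```

Because each sub-region uses its own coefficients, different parts of the same object can draw on different combinations of basis masks, which is one way to read the "spatial preservation" described above.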

Testing the model

The researchers evaluated the model on the COCO test-dev dataset. Compared with state-of-the-art one-stage instance segmentation approaches, SipMask produces more accurate predictions.

The project's source code is available on GitHub.

