You learn the most about a tool or program by helping users with their problems and questions. I experienced this when I started with Nagios/Icinga more than 10 years ago. Today I am trying to help the GitLab community, and I learn a lot myself along the way. Often I just have an idea but have never tackled the code or environment myself.

Today I found a question on the GitLab forums about first steps with CI/CD. It involved building source code for an ARM Cortex hardware architecture:

to build a small cortex-m4 firmware

The question was about a specific build step that failed because make was not found, and about whether building an image with Docker-in-Docker really is the correct way of doing it.

I had never done that before for ARM Cortex builds. The question also included a link to the project repository and its .gitlab-ci.yml with all the details, and it showed the build error right in front of me.

Guess what I did next? Right, the power of open source and collaboration - I forked the repository into my account!

Analyse the first make error

The Docker-in-Docker approach is used to build a new image providing the cortex-m4 hardware specific build tools. Among the container build steps there was one which executed a test.sh script which ran make.

Ok, then let's just see how to install make into the docker:git image. Wait, which OS is that?

    $ docker run -ti docker:git sh
    / # cat /etc/os-release
    NAME="Alpine Linux"
    ID=alpine
    VERSION_ID=3.11.6
    PRETTY_NAME="Alpine Linux v3.11"
    HOME_URL="https://alpinelinux.org/"
    BUG_REPORT_URL="https://bugs.alpinelinux.org/"
    / # apk add make
    OK: 26 MiB in 26 packages

The CI build runs fine now and the script does something. But does it really compile the binaries? The script is executed in the outer Alpine Docker container, not inside the newly created build image.
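Before changing the pipeline, it can help to check which tools the current container really provides. The following is a minimal sketch and not part of the original project: require_tools is a hypothetical helper name, and which tools to check for is an assumption that depends on the project.

```shell
# Hypothetical helper: report which of the given tools are on the PATH.
# Returns 0 if all tools were found, 1 if at least one is missing.
require_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Called as the first line of a job script, for example with make as its argument, it would stop the job early with a readable message instead of failing somewhere in the middle of the build.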

Build the image, then compile inside the image

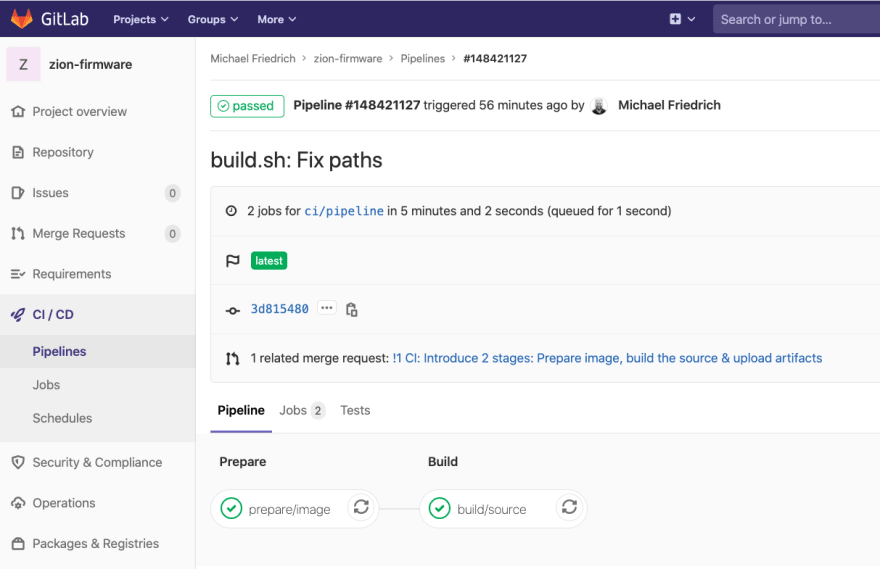

Then I remembered the two stages for GitLab CI/CD and the container registry:

- First, create a new build image with your tool chain. Push that into the container registry.

- Second, define a new job which uses this image to spawn a container and compile the source code from the repository

To better visualize this, let's create two stages as well:

    stages:
      - prepare
      - build

Tip

Use the Web IDE for syntax highlighting and direct CI/CD feedback in the upper right corner.

To prepare this environment, define these variables: DOCKER_DRIVER is read by the CI/CD Runner environment in the background, while the IMAGE_TAG allows a shorter variable reference for the GitLab registry URL to the container image.

    variables:
      DOCKER_DRIVER: overlay2
      IMAGE_TAG: $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_SLUG
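To see what IMAGE_TAG expands to, the sketch below approximates the value locally. GitLab computes CI_COMMIT_REF_SLUG itself; ref_slug and image_tag are hypothetical helpers that mimic the documented rules (lowercase, everything except 0-9 and a-z replaced with -, shortened to 63 characters, no leading or trailing -), and the registry path in the usage note is a made-up example.

```shell
# Hypothetical helper: approximate GitLab's CI_COMMIT_REF_SLUG for a ref name.
ref_slug() {
  printf '%s\n' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed 's/[^a-z0-9]/-/g' \
    | cut -c1-63 \
    | sed -e 's/^-*//' -e 's/-*$//'
}

# Hypothetical helper: combine a registry image path and a ref name
# the same way the IMAGE_TAG variable does.
# $1: registry image path, $2: branch or tag name
image_tag() {
  printf '%s:%s\n' "$1" "$(ref_slug "$2")"
}
```

For example, image_tag registry.example.com/group/project feature/ARM_Build prints registry.example.com/group/project:feature-arm-build, so every branch gets its own image tag in the registry.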

Build and push the Docker image

The next step is to define the build image. Ensure that the Dockerfile is located in the root of the repository, since the build command uses the current directory as its build context.

Wait, there was Docker-in-Docker? Yes, the CI/CD runners use the Docker executor, so every job on its own is run inside a container.

- A container does not run any services by default.

- You want to build a new Docker image inside this job container.

- There is no Docker daemon answering docker login at first.

The trick here is to run a Docker daemon as a service container next to the job container that builds the image, so that the docker client in the job has a daemon to talk to. This happens in the background and is only visible through the settings image: docker:git and services: docker:dind.

Things to note:

- The job name prepare/image can be freely defined and is just an example.

- The image is defined in the job scope and uses the DinD docker:git image.

- The job belongs to the prepare stage.

- Before any script is run, the login into the container registry is persisted once.

- The docker:dind service must be running so that the job can communicate with a Docker daemon.

- The script builds the Docker image and tags it accordingly. If this step succeeds, it pushes the newly tagged image to the authenticated container registry.

    # Build the docker image first
    prepare/image:
      image: docker:git
      stage: prepare
      before_script:
        - docker login -u "$CI_REGISTRY_USER" -p "$CI_JOB_TOKEN" $CI_REGISTRY
      services:
        - docker:dind
      script:
        - docker build --pull -t "$IMAGE_TAG" .
        - docker push "$IMAGE_TAG"
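Passing the token with -p works, but Docker warns about passwords on the command line. As a hedged alternative, and not what the original pipeline uses, docker login also accepts the secret on standard input via --password-stdin. registry_login is a hypothetical wrapper name; the variables are the same predefined CI/CD variables as in the job definition.

```shell
# Hypothetical wrapper around docker login, e.g. for use in before_script.
# Feeds the job token via stdin so it is not passed as a command-line argument.
registry_login() {
  printf '%s' "$CI_JOB_TOKEN" \
    | docker login -u "$CI_REGISTRY_USER" --password-stdin "$CI_REGISTRY"
}
```

The function relies on CI_JOB_TOKEN, CI_REGISTRY_USER and CI_REGISTRY being set, which is the case inside a GitLab CI/CD job.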

Compile the source code

The reason for creating the Docker image is the different target architecture and the specific cross-compilation requirements for ARM Cortex. A similar approach is needed when building binaries for Raspberry Pis, for example with QEMU.

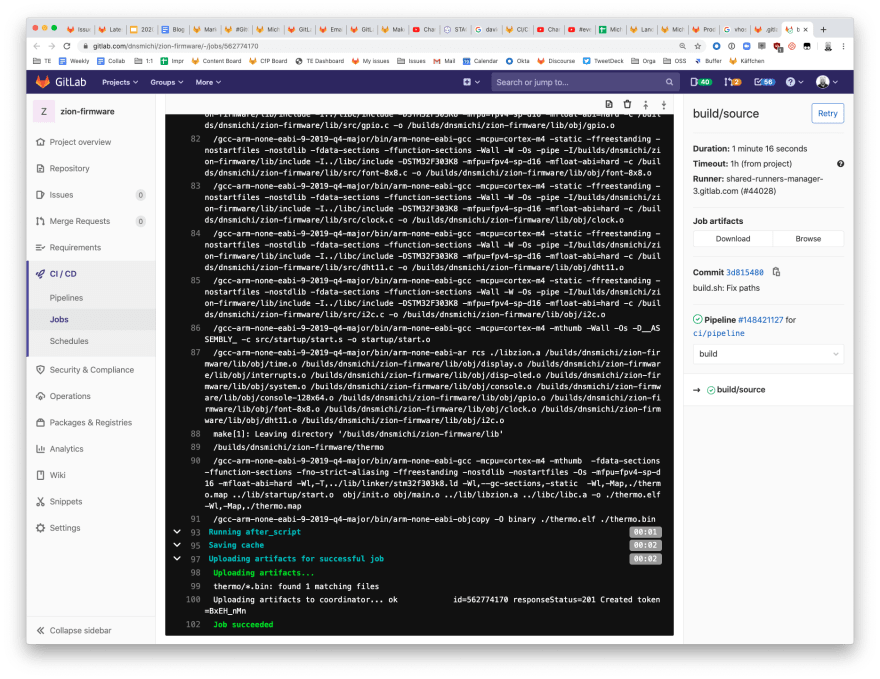

Things to note:

- Use the previously tagged image.

- Assign the job to the build stage.

- Run the compilation steps in a script provided in the same Git repository. It is located in scripts/build.sh.

- The binaries need to be stored somewhere. The easiest way is to define them as artifacts and store them on the GitLab server in the job.

    # Build the source code based on the newly created Docker image
    build/source:
      image: $IMAGE_TAG
      stage: build
      script:
        - ./scripts/build.sh
      artifacts:
        paths:
          - "thermo/*.bin"
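The earlier doubt, whether the job really compiles anything, can be checked automatically. As a sketch only: check_artifacts is a hypothetical helper that could run at the end of the build script and fail the job when no .bin files exist in the given directory. The thermo directory comes from the artifacts path in the job; everything else is an assumption.

```shell
# Hypothetical helper: fail if the given directory contains no .bin files.
check_artifacts() {
  dir="$1"
  # Expand the glob; if nothing matches, the pattern stays literal.
  set -- "$dir"/*.bin
  if [ ! -e "$1" ]; then
    echo "no .bin files found in $dir" >&2
    return 1
  fi
  # List what would be picked up as artifacts.
  for file in "$@"; do
    echo "artifact: $file"
  done
}
```

Calling check_artifacts thermo after the compilation step returns a non-zero exit code when nothing was built, which marks the job as failed.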

Conclusion

I didn't achieve this in one shot, so don't be afraid of a failed build followed by another Git commit that attempts to fix it. The hardest challenge is to understand someone else's code and scripts and to learn iteratively.

Open source definitely helps as we can collaborate and work on the different challenges. You can see my attempt in this merge request.

More questions on GitLab CI/CD first steps? Hop onto the forum and maybe we'll write another dev.to post from our findings!
