My name is Enze Zhou. I'm an e-commerce manager who is currently seeking a position in cloud computing. I was introduced to AWS by a friend, Heather, who worked for IBM. I mean, of course I had heard of AWS and some other cloud jargon before that, but Heather gave me an insider's view of how cloud services are transforming the business world. After that inspiring chat, I decided to dive deep into the cloud. Over the next couple of months, I finished two AWS courses on Udemy to gain a solid theoretical understanding and prepare for the certs. I then registered for two exams and earned the AWS Solutions Architect Associate and AWS Developer Associate certifications.

The day after my AWS Developer Associate exam, I was thinking about what to do next. I skimmed through YouTube channels to see if I could find some advice. That was when I found out about the Cloud Resume Challenge, a comprehensive hands-on project that requires participants to design and implement a cloud-based infrastructure to host a personal resume website. It seemed perfect for me!

The challenge began

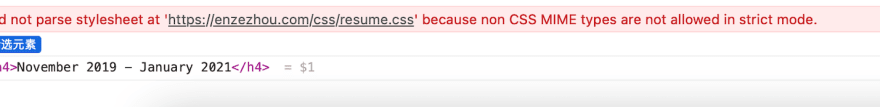

The first step was to create the website front-end. Since I was already quite familiar with the console (I played with it a lot while preparing for the certs), I decided to configure my S3 bucket, CloudFront distribution and Route 53 using the AWS CLI instead. Here came my first setback... After all the necessary setup, I could reach the HTML file through my domain, but the CSS was not applied to the page. I was confused… the HTML file and the CSS files were stored in the same S3 bucket. If index.html was accessible, the CSS files should have been too. To find out what was causing the issue, I opened Safari's Web Inspector and got the following error message: failed to parse CSS files because non CSS MIME types are not allowed.

This happened because I didn't explicitly set the ‘content-type’ of my CSS files to ‘text/css’ in my upload command, so they were stored as generic binary files by default and the browser refused to use them as stylesheets. I ran a copy command with the correct content type and the metadata-directive option set to REPLACE, which overwrites the stored metadata. And it worked!
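The content-type fix above can also be built into the upload step so it doesn't happen again. Below is a minimal Python sketch, assuming uploads go through boto3 rather than the CLI the post used; the helper names are hypothetical. It uses the standard-library mimetypes module to guess the type from the file extension and falls back to a generic binary type (assumed here to be binary/octet-stream, which is what S3 typically shows for untyped objects).

```python
import mimetypes

# Assumed fallback: the generic binary type S3 typically reports for untyped objects.
DEFAULT_CONTENT_TYPE = "binary/octet-stream"


def content_type_for(path: str) -> str:
    """Guess a MIME type from the file extension, e.g. 'style.css' -> 'text/css'."""
    guessed, _encoding = mimetypes.guess_type(path)
    return guessed or DEFAULT_CONTENT_TYPE


def upload_extra_args(path: str) -> dict:
    """Build the ExtraArgs dict for boto3's S3 upload_file so the object gets the right type."""
    return {"ContentType": content_type_for(path)}
```

For example, boto3.client("s3").upload_file("style.css", "my-resume-bucket", "style.css", ExtraArgs=upload_extra_args("style.css")) would store the file as text/css (the bucket name is a placeholder). For objects already in the bucket, the CLI equivalent is a self-copy such as aws s3 cp s3://my-resume-bucket/style.css s3://my-resume-bucket/style.css --content-type text/css --metadata-directive REPLACE.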

Feeling confident!

Setting up the back-end (API, DynamoDB table and Lambda function) was easy! I had a couple of small problems when writing the Lambda code to read from and write to the DynamoDB table (e.g., using Python lists and dicts to filter DynamoDB item attributes). The Python boto3 documentation really helped me find the solutions. I love clear documentation!
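To make the back-end step more concrete, here is a minimal sketch of a visit-counter Lambda handler in Python with boto3. The table name, key and attribute names are hypothetical (the post doesn't give them), and the atomic ADD update is one reasonable design, not necessarily the author's code. A small in-memory FakeTable is included so the counting logic can be exercised without AWS.

```python
import json
from decimal import Decimal

# Hypothetical names: the post does not say what the table, key, or attribute are called.
TABLE_NAME = "visitor-count"
COUNTER_KEY = {"id": "resume"}


def increment_visits(table) -> int:
    """Atomically add 1 to the 'visits' attribute and return the new value."""
    response = table.update_item(
        Key=COUNTER_KEY,
        UpdateExpression="ADD visits :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3's resource API returns numbers as Decimal, which json.dumps cannot serialize.
    return int(response["Attributes"]["visits"])


def lambda_handler(event, context):
    # Imported here so the counting logic can be tested locally without boto3 installed.
    import boto3

    table = boto3.resource("dynamodb").Table(TABLE_NAME)
    count = increment_visits(table)
    return {"statusCode": 200, "body": json.dumps({"count": count})}


class FakeTable:
    """Tiny in-memory stand-in for a DynamoDB Table; only mimics the ADD update above."""

    def __init__(self):
        self.items = {}

    def update_item(self, Key, UpdateExpression, ExpressionAttributeValues, ReturnValues):
        item = self.items.setdefault(tuple(sorted(Key.items())), {})
        item["visits"] = item.get("visits", Decimal(0)) + Decimal(ExpressionAttributeValues[":inc"])
        return {"Attributes": {"visits": item["visits"]}}
```

Using ADD means DynamoDB creates the attribute (and the item) on the first visit and increments it atomically afterwards, so two simultaneous visitors can't overwrite each other's count.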

Also, when I created my HTTP API, the route was not configured correctly. I received a {"message":"Not Found"} error when I tested the API using the invoke URL. To rule out problems with my Lambda function and IAM roles, I created a REST API as well, and it worked just fine. I carefully compared the stages, route settings and resource-based permissions of the two APIs, and then found and fixed the route issue.
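A Not Found response from an HTTP API typically means the request didn't match any route key (method plus path) on the stage that was called. The post doesn't say what the misconfiguration was, so the hypothetical helpers below only illustrate two common causes: the stage segment in the invoke URL (the $default stage has none, named stages do) and the method and path in the route key. The route check is deliberately simplified and ignores path variables such as {proxy+}.

```python
def invoke_url(api_id: str, region: str, stage: str, path: str) -> str:
    """Build an HTTP API invoke URL for a given stage and route path."""
    base = f"https://{api_id}.execute-api.{region}.amazonaws.com"
    # The $default stage is served from the base URL; named stages add a path segment.
    prefix = "" if stage == "$default" else f"/{stage}"
    return f"{base}{prefix}/{path.lstrip('/')}"


def matches_route(route_key: str, method: str, path: str) -> bool:
    """Simplified route-key check: 'GET /count' matches only GET requests to /count."""
    if route_key == "$default":
        return True  # the catch-all route matches every request
    route_method, route_path = route_key.split(" ", 1)
    return route_method in ("ANY", method.upper()) and route_path == path
```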

Solving the CORS error

After I finished my JavaScript code, which calls the API to invoke a Lambda function that increments the page visit count, I tested the site locally before pushing a commit for GitHub Actions to deploy. My site didn't show the visit count at all. I tested my Lambda function and JavaScript separately, and they worked just fine. I realized the problem was missing CORS headers, so I added an Access-Control-Allow-Origin header to my Lambda function's response. I set the allowed origin to “*” for local testing. After testing, I changed the header to allow only my website's domain to access responses from Lambda and pushed the updated code to GitHub.
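The CORS fix described above comes down to returning the header from the function. Here is a minimal sketch of a Lambda proxy-style response builder in Python, assuming a hypothetical ALLOWED_ORIGIN environment variable so the same code can use "*" locally and the site's domain in production.

```python
import json
import os

# Hypothetical environment variable; "*" is only meant for local testing, as in the post.
ALLOWED_ORIGIN = os.environ.get("ALLOWED_ORIGIN", "*")


def cors_response(status_code: int, payload: dict, allowed_origin: str = ALLOWED_ORIGIN) -> dict:
    """Wrap a JSON payload in a response that carries the CORS header the browser checks."""
    return {
        "statusCode": status_code,
        "headers": {
            "Content-Type": "application/json",
            "Access-Control-Allow-Origin": allowed_origin,
        },
        "body": json.dumps(payload),
    }
```

The origin must match exactly what the browser sends, e.g. https://www.enzezhou.com with no trailing slash. HTTP APIs also offer a built-in CORS configuration, which is an alternative to setting the header in the function.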

On to the REAL good stuff: Infrastructure as Code

My Terraform code visualized, powered by Terraform Visual. All of this infrastructure was deployed with terraform apply!

IaC speeds up the deployment, iteration and recovery of cloud services. For my cloud resume project, I chose Terraform as the IaC tool because it is a widely used multi-cloud tool with detailed documentation. Terraform let me manage my infrastructure as code effectively and made testing and deployment consistent and repeatable.

The pain but also the fun: troubleshooting

Troubleshooting the Terraform code was the most difficult part of migrating to IaC. Getting the code to pass terraform validate took me a while. I was new to HCL (HashiCorp Configuration Language), so I took several online tutorials to learn the syntax. Then came the terraform plan and apply issues, like DynamoDB schema mismatches, missing attributes, permission errors and Lambda function errors. Some of these problems were self-explanatory: the error message clearly pointed to the fix. Others were confusing, and I had to dig through the Terraform and AWS documentation and Google for potential solutions. After a couple of hours of troubleshooting, I was really excited to see the IaC code run successfully and create the AWS infrastructure for me!

Go beyond: making the site more manageable

CI/CD pipeline (Front-end & Back-end)

I automated the deployment of my cloud site by creating two GitHub repositories and using GitHub Actions to automatically deploy updates to the front-end and back-end. This makes infrastructure and content management much quicker and more consistent. When I have a content update for my website or need to change my infrastructure configuration, all I need to do is change the code and run git push in my terminal. GitHub Actions and terraform apply take care of the rest: testing the code, updating my S3 bucket, invalidating the CloudFront cache, and making changes to the relevant AWS components.
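The post doesn't show how the pipeline invalidates the CloudFront cache. As one hypothetical way to do it from a Python deploy step, the sketch below builds the InvalidationBatch argument that boto3's CloudFront create_invalidation call expects, normalizing paths to start with "/" and removing duplicates.

```python
import time


def build_invalidation_batch(paths, caller_reference=None) -> dict:
    """Build the InvalidationBatch argument for boto3's CloudFront create_invalidation."""
    # CloudFront paths must start with "/", e.g. "/index.html" or "/*".
    normalized = [p if p.startswith("/") else f"/{p}" for p in paths]
    # Drop duplicates while keeping the original order.
    items = list(dict.fromkeys(normalized))
    return {
        "Paths": {"Quantity": len(items), "Items": items},
        # CallerReference must be unique per request; a timestamp is a common choice.
        "CallerReference": caller_reference or str(time.time()),
    }
```

A deploy script could then call boto3.client("cloudfront").create_invalidation(DistributionId="EXAMPLEID", InvalidationBatch=build_invalidation_batch(["/*"])), where the distribution ID is a placeholder. Invalidating "/*" is the simplest option; listing only the changed files is more targeted.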

Metrics and Monitoring

To make sure I get notified promptly about any anomaly on my website, I created CloudWatch alarms on API and Lambda metrics and integrated them with Slack. Three alarms, on API latency, Lambda function errors and spikes in Lambda invocations, monitor the functionality and traffic of my site. A real-time Slack notification is sent as soon as any alarm threshold is breached.
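The three alarms described above map naturally onto the parameters of CloudWatch's put_metric_alarm call. Here is a hypothetical Python sketch: the alarm names, thresholds and periods are placeholders rather than the site's real settings, and it assumes the alarms notify an SNS topic that is wired to Slack, since the post doesn't say how the Slack integration was built. Because the site's infrastructure lives in Terraform, the same settings would translate to aws_cloudwatch_metric_alarm resources.

```python
def alarm_definitions(function_name: str, api_id: str, sns_topic_arn: str) -> list:
    """Keyword arguments for boto3 CloudWatch put_metric_alarm, one dict per alarm.

    Thresholds, periods and names are illustrative placeholders.
    """
    common = {
        "EvaluationPeriods": 1,
        "Period": 300,  # seconds
        "AlarmActions": [sns_topic_arn],  # assumed SNS topic that forwards to Slack
        "TreatMissingData": "notBreaching",
    }
    return [
        {
            **common,
            "AlarmName": "api-latency-high",
            "Namespace": "AWS/ApiGateway",
            "MetricName": "Latency",
            "Dimensions": [{"Name": "ApiId", "Value": api_id}],  # HTTP APIs use ApiId
            "Statistic": "Average",
            "Threshold": 1000.0,  # milliseconds
            "ComparisonOperator": "GreaterThanThreshold",
        },
        {
            **common,
            "AlarmName": "lambda-errors",
            "Namespace": "AWS/Lambda",
            "MetricName": "Errors",
            "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
            "Statistic": "Sum",
            "Threshold": 1.0,
            "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        },
        {
            **common,
            "AlarmName": "lambda-invocation-spike",
            "Namespace": "AWS/Lambda",
            "MetricName": "Invocations",
            "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
            "Statistic": "Sum",
            "Threshold": 500.0,  # invocations per period
            "ComparisonOperator": "GreaterThanThreshold",
        },
    ]
```

With boto3, each definition would be applied with cloudwatch.put_metric_alarm(**alarm), where cloudwatch = boto3.client("cloudwatch").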

Some extra thoughts...

"The devil is in the details" is an idiom my last boss always used. I mention it because I felt the same way while doing the Cloud Resume Challenge. IAM permissions, database schemas, API routes, CORS, GitHub Actions, terraform validate... there were many places where my cloud site could go wrong. If I had to name only one solution for all of them, I would say: think through every detail, like following up on clues to catch the culprit. A clearly drawn diagram helped me visualize the code flow, and on top of that, I checked all the relevant code in detail to troubleshoot the problems I encountered.

Conclusion

The Cloud Resume Challenge has been a fun and valuable learning experience for me. Within three weeks, I learned how to design and implement a cloud web application using AWS services and IaC, configure CI/CD pipelines, and monitor the system. I am confident these skills will be useful in my future career, hopefully as a cloud engineer :). I plan to keep learning and exploring the world of cloud technology.

If you are interested, please check out my site/resume at: https://www.enzezhou.com/
