There are many articles trying to explain how to improve Selenium tests within the code. They show best practices and are very relevant.

On the other hand, it is hard to find pages with tips to improve the execution against Selenium Grid.

This document aims to cover some simple practices that can result in faster execution of Selenium tests using Selenium Grid.

TL;DR

- Define a maximum time to run each spec in your test suite and implement them with that constraint in mind

- Sort the specs inside your suite of tests

- Measure the overall time that each spec takes to be executed

- Run the longest specs first

- Cancel subsequent tests of a spec when a test case inside the spec fails

Implement automated tests with a time constraint

Specs should be small - period.

Small specs make better use of Selenium Grid, since they are distributed more evenly across the available nodes.

Every case must be evaluated individually, but I try to keep 5 minutes as a time limit. In the test suite that I work with, if a spec takes more than 5 minutes to run, I usually put some effort into splitting it into 2 (or more) smaller specs.
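As a minimal sketch of how that limit could be checked, the snippet below flags specs that run longer than 5 minutes. The spec names and durations are hypothetical; in practice they would come from the report of your CI server or test library.

```python
from datetime import timedelta

# Assumed limit, taken from the 5-minute guideline above.
MAX_SPEC_DURATION = timedelta(minutes=5)


def specs_over_limit(durations, limit=MAX_SPEC_DURATION):
    """Return the names of specs that exceed the limit, slowest first."""
    over = [(name, d) for name, d in durations.items() if d > limit]
    over.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in over]


# Hypothetical durations, e.g. copied from a CI report.
example_durations = {
    "LoginSpec": timedelta(minutes=1, seconds=40),
    "CheckoutSpec": timedelta(minutes=7, seconds=5),
    "SearchSpec": timedelta(minutes=5, seconds=30),
}

print(specs_over_limit(example_durations))  # ['CheckoutSpec', 'SearchSpec']
```

The specs in the returned list are the candidates for splitting.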

Sort the execution of the test suite

Selenium tests are commonly run against a CI Server (Jenkins, TeamCity, etc), but even when run locally they provide a report with the list of specs executed and the duration of each spec.

Usually, the order in which tests are run is arbitrary and managed by the test library (JUnit, TestNG, etc) used with Selenium.

Saving the duration data from these reports can be useful for sorting the tests.

Measure and set the overall time for each spec

Every time a spec is run, it takes a different amount of time to execute. Page load times and other factors make each run slightly longer or shorter than the previous one.

However, by looking at the history of test executions, it's possible to find an average time. This average time is useful for sorting the specs before they are run.

The average time could be set in a property inside the spec, or be looked up in a database, if the results are saved there.
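A rough sketch of that calculation, assuming the history is available as a list of `(spec name, duration in seconds)` pairs. That format and the sample values are assumptions for illustration, not something a particular tool produces.

```python
from collections import defaultdict
from statistics import mean


def average_durations(history):
    """Compute the average duration per spec.

    history: iterable of (spec_name, seconds) pairs, one per past execution.
    """
    by_spec = defaultdict(list)
    for name, seconds in history:
        by_spec[name].append(seconds)
    return {name: mean(values) for name, values in by_spec.items()}


# Hypothetical history of past runs.
example_history = [
    ("LoginSpec", 100),
    ("LoginSpec", 110),
    ("CheckoutSpec", 300),
    ("LoginSpec", 90),
    ("CheckoutSpec", 320),
]

averages = average_durations(example_history)
print(averages["LoginSpec"], averages["CheckoutSpec"])  # 100 310
```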

A possible approach to sort the specs is to define a category based on the average time the spec runs:

- Very Short (executed in less than 30 seconds)

- Short (executed between 30 seconds and 1:30 minutes)

- Medium (executed between 1:30 minutes and 3:00 minutes)

- Long (executed between 3:00 minutes and 5 minutes)

- Very Long (executed in more than 5:00 minutes)

If every spec has a constant containing the information above, it is easy to order the list of specs in a test suite using that criterion.

The drawback is that it needs to be updated if a spec is changed (e.g. split), but other approaches can be considered (e.g. saving every test result in the database and calculating each spec average time before every test run).
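The categories above could be sketched as follows. The enum name, the spec names and the handling of boundary values are assumptions: the ranges in the list share their end points, so here a value on a boundary goes to the longer category, except for exactly 5 minutes, which stays in Long.

```python
from enum import IntEnum


class DurationCategory(IntEnum):
    VERY_SHORT = 1
    SHORT = 2
    MEDIUM = 3
    LONG = 4
    VERY_LONG = 5


def categorize(avg_seconds):
    """Map an average duration in seconds to one of the categories above."""
    if avg_seconds < 30:
        return DurationCategory.VERY_SHORT
    if avg_seconds < 90:
        return DurationCategory.SHORT
    if avg_seconds < 180:
        return DurationCategory.MEDIUM
    if avg_seconds <= 300:
        return DurationCategory.LONG
    return DurationCategory.VERY_LONG


# Hypothetical specs with their average duration in seconds.
example_specs = {
    "LoginSpec": 25,
    "SearchSpec": 100,
    "CheckoutSpec": 310,
    "ProfileSpec": 200,
}

# Order the suite by category, longest category first.
ordered = sorted(
    example_specs,
    key=lambda name: categorize(example_specs[name]),
    reverse=True,
)
print(ordered)  # ['CheckoutSpec', 'ProfileSpec', 'SearchSpec', 'LoginSpec']
```

Using an `IntEnum` keeps the categories comparable, so the sort needs no extra lookup table.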

Run the longest specs first

This is my favourite trick. It must be combined with the previous steps, and it makes the test distribution between the Selenium nodes better, reducing the overall time that tests take to run and also reducing idleness of Selenium nodes.

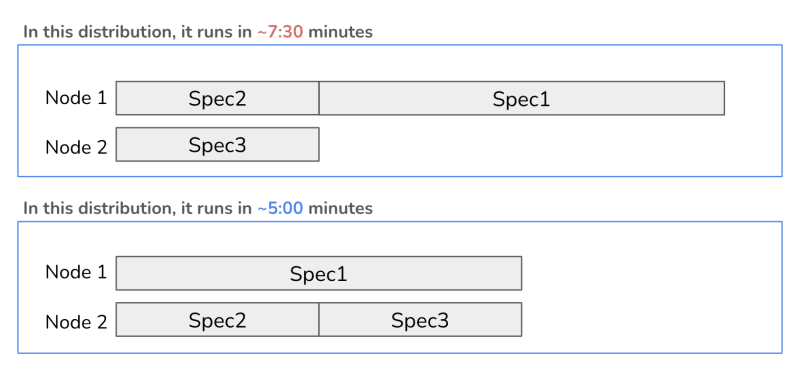

As an example, let's say we have just 2 Selenium nodes available to run 3 different specs with the following average execution times:

- Spec1: runs in 5 minutes

- Spec2: runs in 2:30 minutes

- Spec3: runs in 2:30 minutes

As seen in the image above, if we don't run the longest spec first, the overall execution may take longer because the specs are not distributed optimally. Apart from the longer time to run the tests, it shows that Node 2 will be idle for some time. In a scenario with hundreds of specs, this can make a big difference in the overall execution time, and when tuned it can mean a better use of the resources available to the Selenium Grid.
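The example can be reproduced with a small simulation. This is a simplified model, not how Selenium Grid is implemented: it assumes that whichever node becomes free first takes the next spec in the queue, and the "arbitrary" order shown is just one possible unfavourable order.

```python
import heapq


def total_time(durations, node_count):
    """Simulate nodes that each take the next queued spec as soon as they are free.

    Returns the time at which the last node finishes.
    """
    free_at = [0.0] * node_count  # time at which each node becomes free
    heapq.heapify(free_at)
    for duration in durations:
        start = heapq.heappop(free_at)  # earliest available node
        heapq.heappush(free_at, start + duration)
    return max(free_at)


# Average durations in minutes, as in the example above.
specs = {"Spec1": 5.0, "Spec2": 2.5, "Spec3": 2.5}

arbitrary_order = [specs["Spec2"], specs["Spec3"], specs["Spec1"]]
longest_first = sorted(specs.values(), reverse=True)

print(total_time(arbitrary_order, 2))  # 7.5
print(total_time(longest_first, 2))    # 5.0
```

In the arbitrary order, Spec1 only starts after 2:30 minutes, so one node sits idle for 5 minutes while the other finishes at 7:30. With the longest spec first, both nodes finish at 5 minutes.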

Cancel subsequent tests of a spec after a failure

Selenium tests are different from unit tests. In many cases, a test depends on the previous tests having run successfully.

As an example:

1. log in to the application

1.1. ensure the page title is as expected

1.2. ensure the values are loaded correctly

2. click on the button "New"

2.1. ensure the page title is as expected

3. complete the form

4. click on the button "Save"

4.1. ensure the success message is displayed

For the example above, if test 2.1 fails, what's the benefit of trying the next ones, which depend on it? They may just add a lot of invalid failures that are not related to the root cause of the problem, adding unnecessary error logs to the results.

Just as an example, in ScalaTest this can be done easily by mixing the trait `CancelAfterFailure` into the specs.
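The idea itself is independent of the test library. The sketch below illustrates it in plain Python; it is not how ScalaTest implements the trait, and the step names and the `run_steps` helper are hypothetical.

```python
def run_steps(steps):
    """Run (name, callable) steps in order.

    After the first failing step, the remaining steps are not executed
    and are reported as cancelled instead of failed.
    """
    results = {}
    failed = False
    for name, step in steps:
        if failed:
            results[name] = "cancelled"
            continue
        try:
            step()
            results[name] = "passed"
        except AssertionError:
            results[name] = "failed"
            failed = True
    return results


def ok():
    """Placeholder for a step that succeeds."""


def wrong_title():
    """Placeholder for a check that fails."""
    raise AssertionError("unexpected page title")


# Steps modelled on the example above; test 2.1 fails.
example_steps = [
    ("1. log in", ok),
    ("1.1 page title", ok),
    ("2. click New", ok),
    ("2.1 page title", wrong_title),
    ("3. complete the form", ok),
    ("4. click Save", ok),
]

results = run_steps(example_steps)
print(results["2.1 page title"], results["4. click Save"])  # failed cancelled
```

Only one failure is reported, which points directly at the root cause.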

Final words

The simple changes listed above can save some time and optimise the execution of Selenium tests.

Some of the topics above need a custom implementation, such as ordering the specs so that the longest ones run first. However, none of them should require a complicated implementation.

Hopefully this is useful to somebody else.
