We've been running weekly developer lunch & learn sessions over video. I've been downloading each video from Zoom, doing some basic trimming, uploading it using Fission, and embedding the IPFS link in our Discourse forum. This works pretty well – Discourse recognizes the video file extension and embeds a video player in the post automatically. I had read a bit about HTTP Live Streaming (HLS) and wanted to experiment.

Originally developed by Apple, and now widely supported, HLS doesn't need any special server-side support. A bit more in this freeCodeCamp article:

The most important feature of HLS is its ability to adapt the bitrate of the video to the actual speed of the connection. This optimizes the quality of the experience.

HLS videos are encoded in different renditions at different resolutions and bitrates. This is usually referred to as the bitrate ladder. When a connection gets slower, the protocol automatically adjusts the requested bitrate to the bandwidth available.

– freeCodeCamp: HLS Video Streaming: What it is, and When to Use it
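The selection idea in the quote can be sketched roughly as follows. This is only an illustration of "pick the best rendition that fits the bandwidth", not the algorithm hls.js or any other player actually uses; the ladder values, the `pickRendition` name, and the 0.8 safety margin are all assumptions made up for the example.

```javascript
// Hypothetical bitrate ladder: names and numbers are illustrative only.
const ladder = [
  { name: '360p', bitrate: 800000 },
  { name: '720p', bitrate: 2800000 },
  { name: '1080p', bitrate: 5000000 },
];

// Pick the highest rendition whose bitrate fits within a safety margin
// of the measured bandwidth; fall back to the lowest rendition otherwise.
function pickRendition(renditions, bandwidthBps, safety = 0.8) {
  const sorted = [...renditions].sort((a, b) => a.bitrate - b.bitrate);
  const budget = bandwidthBps * safety;
  let choice = sorted[0];
  for (const rendition of sorted) {
    if (rendition.bitrate <= budget) choice = rendition;
  }
  return choice;
}

console.log(pickRendition(ladder, 4000000).name); // 720p
console.log(pickRendition(ladder, 500000).name);  // 360p
```

A ladder needs one encode per rendition. A single ffmpeg run that writes one playlist, like the command used later in this post, produces one rendition, so there is nothing for the player to switch between in that case.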

There's an example in the JavaScript implementation of IPFS about using HLS with js-ipfs. The README explains:

The fact that HLS content is just "a bunch of files" makes it a good choice for IPFS (another protocol that works this way is MPEG-DASH, which could certainly be a good choice as well). Furthermore, the hls.js library enables straightforward integration with the HTML5 video element.

"Just a bunch of files" is pretty much how we're thinking about Fission. Fission and any standard IPFS gateway serve up files over HTTP and/or natively with IPFS, without plugins, in any browser. It's really great to get back to what is basically the 2020 equivalent of "upload files to the server".

I took the latest video file of Joel talking about Ceramic Network and ran the ffmpeg command from the js-ipfs example. On macOS, brew install ffmpeg will get you the program.

ffmpeg -i ../YOURVIDEO.mp4 -profile:v baseline -level 3.0 -start_number 0 -hls_time 5 -hls_list_size 0 -f hls master.m3u8

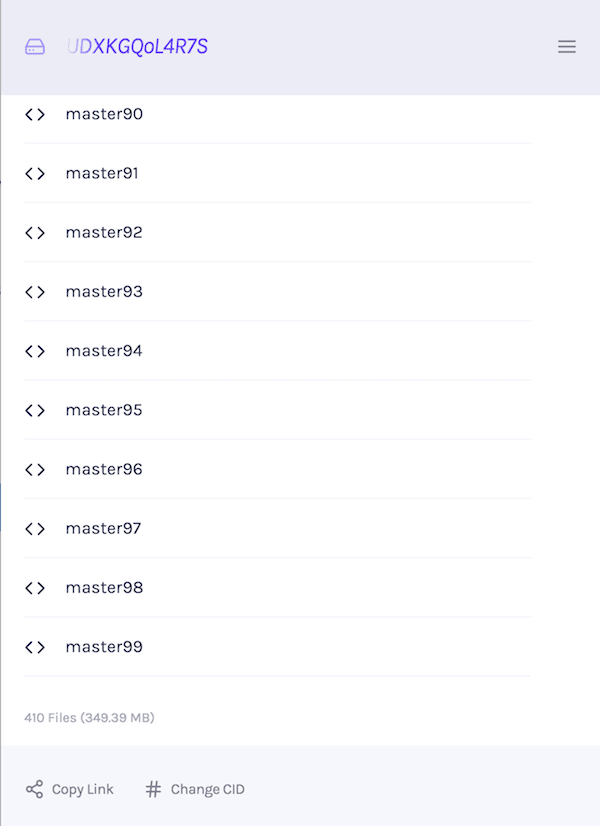

On my desktop iMac, ffmpeg's progress output showed encoding running at 4x to 5x speed. The video is about an hour long, so it took about 20 minutes to complete. The output is 400+ files, which I uploaded with fission up and then noted down the hash of the folder.
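To make the "bunch of files" concrete: the .m3u8 playlist is a plain text file that lists the .ts segments in order, each preceded by an `#EXTINF` line giving its duration. A minimal sketch of reading one is below. The sample playlist, its segment names, and its durations are invented for illustration, and `listSegments` is a hypothetical helper, not part of ffmpeg, hls.js, or js-ipfs.

```javascript
// Sample media playlist in the general shape an HLS encoder writes.
// Segment names and durations are made up for this example.
const samplePlaylist = [
  '#EXTM3U',
  '#EXT-X-VERSION:3',
  '#EXT-X-TARGETDURATION:9',
  '#EXT-X-MEDIA-SEQUENCE:0',
  '#EXTINF:8.341667,',
  'master0.ts',
  '#EXTINF:8.341667,',
  'master1.ts',
  '#EXTINF:4.170833,',
  'master2.ts',
  '#EXT-X-ENDLIST',
].join('\n');

// Return the segment files a playlist refers to, with their durations.
function listSegments(playlistText) {
  const segments = [];
  let pendingDuration = null;
  for (const rawLine of playlistText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.startsWith('#EXTINF:')) {
      // "#EXTINF:<seconds>,<optional title>"; parseFloat stops at the comma.
      pendingDuration = parseFloat(line.slice('#EXTINF:'.length));
    } else if (line !== '' && !line.startsWith('#')) {
      // Any non-empty line that is not a tag or comment is a segment URI.
      segments.push({ uri: line, duration: pendingDuration });
      pendingDuration = null;
    }
  }
  return segments;
}

const segments = listSegments(samplePlaylist);
const total = segments.reduce((sum, s) => sum + s.duration, 0);
console.log(segments.length, 'segments,', total.toFixed(1), 'seconds');
// 3 segments, 20.9 seconds
```

A player only needs to fetch the playlist and then the segments it names, which is why a folder of static files on IPFS is enough.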

Then I went back and edited the streaming.js file and index.html. The streaming.js file just needs the hash of the folder that contains the HLS-encoded video, which for this video is QmYGs1ksGX3eMiGvxNuvRT6PD7zPKZpHyiUDXKGQoL4R7S. Feel free to use this to experiment with! I kept the master.m3u8 file name, so I didn't need to change that.

One change I made was to also include the IPFS script from a CDN in the index.html file (the js-ipfs example assumes you're running things locally and working with js-ipfs from there). Just add this line:

<script src="https://cdn.jsdelivr.net/npm/ipfs/dist/index.min.js"></script>

You can browse all the files directly using Fission Drive, including the source video that was encoded. Visiting the index page will load the player and HLS stream the video.
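Because the encoded output is plain files, the same folder can in principle also be played straight from an HTTP gateway, without running a js-ipfs node in the page. The sketch below shows that variant; it is not what the js-ipfs example does. The `https://ipfs.io` gateway and the `gatewayUrl` and `attachStream` helper names are assumptions for illustration, and whether the content loads depends on it still being available on the network. The hls.js calls used (`Hls.isSupported()`, `loadSource`, `attachMedia`) are part of its public API.

```javascript
// Assumption: a public HTTP gateway. Any IPFS gateway that serves
// /ipfs/<hash>/<path> should behave the same way.
const GATEWAY = 'https://ipfs.io';

// Build the HTTP URL of a file inside an IPFS folder.
function gatewayUrl(folderHash, fileName, gateway = GATEWAY) {
  return `${gateway}/ipfs/${folderHash}/${encodeURIComponent(fileName)}`;
}

// Attach an HLS stream to a <video> element. `Hls` is the hls.js
// constructor, passed in explicitly so the function has no hidden globals.
function attachStream(video, Hls, url) {
  if (Hls && Hls.isSupported()) {
    const hls = new Hls();
    hls.loadSource(url);
    hls.attachMedia(video);
    return 'hls.js';
  }
  if (video.canPlayType('application/vnd.apple.mpegurl')) {
    // Safari can play HLS natively, without hls.js.
    video.src = url;
    return 'native';
  }
  return 'unsupported';
}

const url = gatewayUrl('QmYGs1ksGX3eMiGvxNuvRT6PD7zPKZpHyiUDXKGQoL4R7S', 'master.m3u8');
console.log(url);

// Only runs in a page that has loaded hls.js and contains <video id="video">.
if (typeof document !== 'undefined' && typeof Hls !== 'undefined') {
  attachStream(document.getElementById('video'), Hls, url);
}
```

The trade-off is that a gateway reintroduces a single HTTP server in the path, whereas the js-ipfs approach fetches blocks from peers directly.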

Example HLS Video

Here's the source and video embedded via Codepen – hit the HTML button to view source. Note the Fission gateway link to the published version of the streaming.js file, also remotely included:

The video isn't very high quality to begin with, and adding width/height to the video element could constrain it to different sizes.

More about Joel and Ceramic Network on the forum event page »

Conclusion

This is a very manual process, and there are lots of dedicated video encoding services. It was a good experiment to see that anything that is a "bunch of files" can just work over the distributed IPFS network, and can be easily published and hosted on Fission.

To see what the user experience is actually like, the next step would be to compare a large, high-quality HLS-encoded video against simply embedding the original, testing across different devices and network speeds (and with or without nearby peers holding a copy of the video).

This also leads to some interesting thoughts about distributed encoding. Once an original video is encoded and added to the network by one person, it never needs to be encoded again. From some brief research, ffmpeg is not deterministic, which led to this thread on Mastodon. This means that different people encoding a video could get different output files, which means different hashes in IPFS.

However, the original video file is unique, so adding metadata to the original source video file for discovery of the unique, permanent hash of an HLS-encoded version could work.
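One way to picture that discovery step is a lookup from the source video's hash to the folder hash of an encode that already exists, checked before anyone encodes again. The sketch below is purely hypothetical: where such metadata would live and how it would be trusted is not settled here, an in-memory Map stands in for it, and `SOURCE_VIDEO_HASH` is a placeholder rather than a real hash.

```javascript
// Hypothetical index: source video hash -> hash of an HLS-encoded folder.
const hlsIndex = new Map();

// Record an encode, keeping the first one registered so that everyone
// converges on a single hash even if later encodes produce different bytes.
function registerEncode(index, sourceHash, hlsFolderHash) {
  if (!index.has(sourceHash)) index.set(sourceHash, hlsFolderHash);
  return index.get(sourceHash);
}

// Look up an existing encode; null means the video still needs encoding.
function findEncode(index, sourceHash) {
  return index.has(sourceHash) ? index.get(sourceHash) : null;
}

// Placeholder source hash; the folder hash is the one from this post.
registerEncode(hlsIndex, 'SOURCE_VIDEO_HASH', 'QmYGs1ksGX3eMiGvxNuvRT6PD7zPKZpHyiUDXKGQoL4R7S');

console.log(findEncode(hlsIndex, 'SOURCE_VIDEO_HASH'));
console.log(findEncode(hlsIndex, 'SOME_OTHER_VIDEO_HASH')); // null
```

Keeping the first registered encode is just one possible policy; it sidesteps non-deterministic encoder output by never needing two encodes to match.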

We're currently doing some work on how to do this for images, so that different sizes are automatically available and cached in the network.

Fission is an app & web hosting platform that implements a web native file system powered by IPFS. Build and run locally, fission up to host everywhere. Read our guide to get started and sign up right from the command line.
