Observability means being able to infer the internal state of your system from its external outputs. For all but the simplest applications, it’s widely accepted that software observability requires a combination of metrics, traces and events (e.g. logs). As for the last of these, a growing chorus of voices strongly advocates structuring log events upfront. Why? To pick a few reasons: without structure you find yourself dealing with unwieldy text indexes, fragile and hard-to-maintain regexes, and reactive searches. You’re also less able to understand patterns like multi-line events (e.g. stack traces), or to correlate events with metrics (e.g. by transaction ID).

There is another reason structured events are extremely helpful: they enable anomaly detection that really works. And good anomaly detection surfaces “unknown unknowns”, a key goal of observability. Think about it: if you know which metrics to monitor, you can probably get by with human-defined thresholds. (Note: if you don’t know all the metrics to monitor upfront, smart anomaly detection can help. More on this in an upcoming blog.) And if you know which specific events are diagnostic (such as specific errors), you can track them using well-defined alerts or signatures, which, by the way, is much easier to do with structured events. But there is almost always a much larger set of conditions you don’t know in advance, and good anomaly detection (i.e. high signal-to-noise, with few false positives) can really help here.

There are approaches that attempt anomaly detection on unstructured events. But in practice this is very hard to do, or at least hard to do well enough to rely on day to day. There are two basic problems without structure: lack of context, and an explosion in cardinality.

Here’s an example of lack of context. Say your anomaly detection tracks counts of keywords such as “FAIL” and “SUCCESS”. The former is bad, the latter good. But it’s not unusual to see messages of the type: “XYZ task did not complete successfully”, quite the opposite of the expected meaning. So just matching keywords would prove unreliable.
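To make the pitfall concrete, here is a minimal sketch of a naive keyword labeller. The keyword lists, the `naive_label` function and the sample messages are all hypothetical, made up purely for illustration.

```python
# Hypothetical keyword lists, chosen only for illustration.
BAD_KEYWORDS = ("fail",)
GOOD_KEYWORDS = ("success",)


def naive_label(message: str) -> str:
    """Label a log message using substring keyword matches alone."""
    text = message.lower()
    if any(keyword in text for keyword in BAD_KEYWORDS):
        return "bad"
    if any(keyword in text for keyword in GOOD_KEYWORDS):
        return "good"
    return "unknown"


messages = [
    "Backup FAILED on volume 3",
    "Login SUCCESS for user alice",
    "XYZ task did not complete successfully",
]

labels = [naive_label(m) for m in messages]
# The third message is labelled "good" because "successfully" contains
# "success", even though the message reports a failure.
```

The matcher has no notion of the negation in the third message, so a count of “good” events built on it would quietly include a failure.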

It’s even trickier with common words such as “at”. In the right context this keyword is extremely diagnostic, for example when it is the first word of lines 2 through N of a multi-line stack trace:

Exception in thread "main" java.lang.NullPointerException

at com.example.myproject.Book.getTitle(Book.java:16)

at com.example.myproject.Author.getBookTitles(Author.java:25)

at com.example.myproject.Bootstrap.main(Bootstrap.java:14)

With an approach that understands event structures, the structure:

`at <STRING>(<STRING>:<INTEGER>)`

would be uniquely understood, and its location in a multi-line log sequence would be diagnostically useful – for example identifying a Java exception. But without structure, the keyword “at” is far too common, and a simplistic attempt based on keyword matches alone would generate so much noise as to be completely useless. So, lack of context is one reason keyword matches make a weak foundation for useful anomaly detection.
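As a rough illustration of matching on structure rather than on a keyword, the sketch below hand-writes a regular expression for the stack frame pattern and attaches matching lines to the event that precedes them. This is an assumption-laden stand-in: the regex, `parse_frame` and `group_stack_traces` are hypothetical names, and a hand-written pattern is not the same thing as learning event structures automatically.

```python
import re

# Hand-written approximation of: at <STRING>(<STRING>:<INTEGER>)
FRAME = re.compile(
    r"^\s*at (?P<method>[\w.$<>]+)\((?P<file>[\w.$]+):(?P<line>\d+)\)$"
)


def parse_frame(line):
    """Return the typed fields of a stack frame line, or None if no match."""
    m = FRAME.match(line)
    if m is None:
        return None
    return {"method": m["method"], "file": m["file"], "line": int(m["line"])}


def group_stack_traces(lines):
    """Attach each frame line to the nearest preceding non-frame line."""
    events = []
    for line in lines:
        frame = parse_frame(line)
        if frame is not None and events:
            events[-1]["frames"].append(frame)
        else:
            events.append({"header": line, "frames": []})
    return events


log = [
    'Exception in thread "main" java.lang.NullPointerException',
    "    at com.example.myproject.Book.getTitle(Book.java:16)",
    "    at com.example.myproject.Author.getBookTitles(Author.java:25)",
    "    at com.example.myproject.Bootstrap.main(Bootstrap.java:14)",
    "at startup the cache was empty",  # begins with "at" but is not a frame
]

events = group_stack_traces(log)
```

Here the three frame lines collapse into a single multi-line event with typed fields, while the last line, which also begins with “at”, is left alone because it does not fit the structure.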

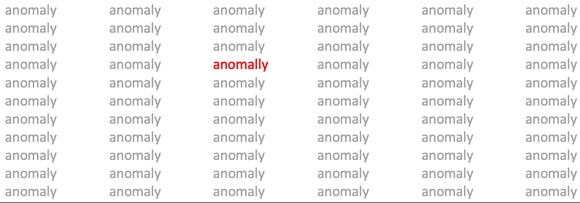

The other issue is cardinality. As discussed in an earlier blog, our software uses machine learning to automatically distil tens of millions of unstructured log lines down to a much smaller set of perfectly structured event types (with typed variables tracked in associated columns). For example, our entire Atlassian suite has an “event dictionary” of just over 1,000 unique event types. As a result, it’s easy for us to learn the normal frequency, periodicity and severity of every single event type, and highlight anomalous patterns in any one of them. This is a very effective way of finding the unknown unknowns. If, on the other hand, we were trying to detect anomalies in arbitrary keyword combinations or clusters, the cardinality would be orders of magnitude higher, making it impractical to do this reliably, at least within practical time and resource constraints.
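To show why a small event dictionary makes this tractable, here is a minimal sketch that keeps a per-event-type count for each time window and flags types whose current count is far from their history. It uses a plain z-score as a stand-in and is not the machine learning described above; the `find_anomalies` function, the threshold of 3.0 and the sample event types are all assumptions made for illustration.

```python
from collections import Counter
from statistics import mean, pstdev


def find_anomalies(history, current, threshold=3.0):
    """Flag event types whose count in `current` deviates from the baseline.

    history: non-empty list of Counters, one per past time window
    current: Counter of event-type counts for the window under test
    """
    anomalies = {}
    event_types = set(current).union(*history)
    for etype in event_types:
        past = [window.get(etype, 0) for window in history]
        baseline = mean(past)
        # Floor the spread at 1.0 so a perfectly steady (or never seen)
        # event type does not cause a division by zero.
        spread = max(pstdev(past), 1.0)
        score = abs(current.get(etype, 0) - baseline) / spread
        if score >= threshold:
            anomalies[etype] = round(score, 2)
    return anomalies


# Hypothetical counts per event type for three past windows.
history = [
    Counter({"login_ok": 100, "db_timeout": 1}),
    Counter({"login_ok": 104, "db_timeout": 0}),
    Counter({"login_ok": 96, "db_timeout": 2}),
]
current = Counter({"login_ok": 101, "db_timeout": 40, "disk_full": 5})

anomalies = find_anomalies(history, current)
# Flags "db_timeout" (a sudden spike) and "disk_full" (never seen before),
# while "login_ok" stays within its normal range.
```

With around a thousand event types, keeping one small baseline per type like this is cheap. Doing the same for every keyword combination would mean tracking orders of magnitude more series.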

Does this work in practice? You bet! In real-world testing across hundreds of data sets, we found our approach to be effective at surfacing useful anomalies. For one thing, it doesn’t generate much noise: only about 1 in 50,000 events were flagged in relatively terse logs, and 1 in millions of events for chattier ones. And these datasets all contained real issues.

Second, the diagnostic “signal” value of these anomalies is high: over half the root causes eventually tagged by a human as a fault signature had already been highlighted as an anomaly. And a high number of the rest happened just before an anomaly, meaning the anomaly had picked out identifiable symptoms of a real problem, saving time in triage and root cause analysis.

We are an early stage startup and about to start beta. Please connect with us [at] if our approach interests you.

Note: This article was posted with permission of the author: Ajay Singh.
