This blog post shows how to plug a custom AI service into your own solution in under 10 minutes, and how to learn flow-based development with Node-RED along the way.

To make it happen, you will learn about Watson Visual Recognition (a seasoned, commercial, industry-grade AI service available on IBM Cloud), Watson Studio for fast machine learning, and Node-RED, the JavaScript-based graphical flow tool you will use to call the newly created Visual Recognition classifier online.

The why

I am a full-stack developer, always looking for great technologies to use in modern systems. Ever since I founded a robotics startup, I have been amazed by AI-powered visual recognition services for drones and robots. In this blog post I share how easy it now is to make AI do work for you and your systems, robots, or drones. Ten years ago I tried to use a swarm of autonomous robots to detect armed intruders, and back then the task seemed impossible to tackle without huge financial resources. Today, a solution can be delivered after a day-long hackathon. _Man, I wish I had known then what I am about to share with you now ;)_

In addition, the United Nations, the Red Cross, and IBM, among other organizations, support the CallForCode.org hackathon to help people affected by disasters. Drones equipped with image recognition could improve the well-being of those affected. I will show how to detect houses damaged by wildfires, floods, and similar disasters. So why not take up this call, contribute to the cause, and help others!

Using Watson Visual Recognition in Node-Red

Watson Visual Recognition is a trained AI service that uses pretrained classifiers to tell you what it sees in a picture. It can detect colors, people, faces, some objects, foods, and more. You are really going to like it.
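To make "tell you what it sees" concrete, here is a minimal sketch that turns a classify result into a ranked list of labels. It assumes the result follows the Visual Recognition v3 JSON shape (an `images` array, each with `classifiers`, each with `classes` holding `class` and `score`); the sample response and the `topClasses` helper are hand-written for illustration, not real service output.

```javascript
// Sketch: extract labels and scores from a Visual Recognition classify result.
// ASSUMPTION: v3-style shape { images: [ { classifiers: [ { name, classes: [ { class, score } ] } ] } ] }.
function topClasses(result, minScore = 0.5) {
  const labels = [];
  for (const image of result.images || []) {
    for (const classifier of image.classifiers || []) {
      for (const c of classifier.classes || []) {
        // Keep only labels the service is reasonably confident about.
        if (c.score >= minScore) {
          labels.push({ label: c.class, score: c.score, classifier: classifier.name });
        }
      }
    }
  }
  // Highest-confidence labels first.
  return labels.sort((a, b) => b.score - a.score);
}

// Hand-written sample response (hypothetical values, not real service output).
const sample = {
  images: [
    {
      classifiers: [
        {
          classifier_id: "default",
          name: "default",
          classes: [
            { class: "house", score: 0.82 },
            { class: "orange color", score: 0.91 },
            { class: "tree", score: 0.31 },
          ],
        },
      ],
    },
  ],
};

console.log(topClasses(sample));
```

Lowering `minScore` trades precision for recall; for a drone feed you would probably tune it per class.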

Node-RED is an open source programming tool for wiring together hardware devices, APIs, and online services in new and interesting ways. It provides a browser-based editor that makes it easy to wire together flows using the wide range of nodes in the palette, which can be deployed to its runtime in a single click (as described on the nodered.org site).

So let's start with Watson Visual Recognition. When I last checked, on May 30, 2019, you could call the service free of charge about a thousand times a month with an IBM Cloud Lite account. You can get a free Lite account (you only need to provide your email) through my personalized link (if you use it, I will know that this blog was helpful): https://ibm.biz/Bd2CUa
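With roughly a thousand free calls a month (about 33 a day), a drone that streams frames can burn through the quota quickly. Below is a minimal sketch of a Node-RED function-node body that stops forwarding images once a monthly budget is used up. The `MONTHLY_LIMIT` value is illustrative, and the counter lives in the node's in-memory `context`, so it is an assumption that a reset on restart is acceptable. The body is wrapped in a hypothetical `quotaGuard` function so it can be tested outside Node-RED.

```javascript
// Sketch of a Node-RED function-node body that caps monthly Visual Recognition calls.
// ASSUMPTIONS: MONTHLY_LIMIT is illustrative; the counter is kept in node context,
// which is in-memory by default and resets when Node-RED restarts.
// Wrapped in a function (with an injectable `now`) so it can run outside Node-RED.
function quotaGuard(msg, context, now = new Date()) {
  const MONTHLY_LIMIT = 1000; // hypothetical budget; check your plan's current quota
  const month = `${now.getUTCFullYear()}-${now.getUTCMonth() + 1}`;

  // Reset the counter when a new month starts.
  if (context.get("month") !== month) {
    context.set("month", month);
    context.set("calls", 0);
  }

  const calls = context.get("calls");
  if (calls >= MONTHLY_LIMIT) {
    return null; // returning null stops the flow, so no API call is made
  }
  context.set("calls", calls + 1);
  return msg; // pass the message on to the Visual Recognition node
}
```

Inside Node-RED you would paste only the body, replace the `now` parameter with `const now = new Date();`, and wire the node just before the Visual Recognition node.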

A side note: you can easily prepare a working proof-of-concept prototype for investors using only the IBM Cloud Lite account.

After you sign up, log in, open the Catalog, select the Watson Visual Recognition service from the AI category, and create it. Then create the Node-RED Starter service as well. Both services work in the free tier!

When the Node-RED service is provisioned, connect it to the Watson Visual Recognition service you created earlier. You need to restage the Node-RED service after connecting them. Once the service is running, you can create your first flows by following this Node-RED lab: https://github.com/watson-developer-cloud/node-red-labs/tree/master/basic_examples/visual_recognition
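Why the restage? On Cloud Foundry, connecting (binding) a service injects its credentials into the app's `VCAP_SERVICES` environment variable, and the app only sees them after it is restaged. The Watson nodes in Node-RED pick these credentials up for you; the sketch below just shows what that lookup amounts to. The service label `watson_vision_combined` and the `apikey`/`url` credential fields are assumptions from memory, so compare them with your own `VCAP_SERVICES`; `findServiceCredentials` is a hypothetical helper.

```javascript
// Sketch: find bound-service credentials in Cloud Foundry's VCAP_SERVICES.
// ASSUMPTION: Visual Recognition is listed under a label starting with
// "watson_vision_combined" and its credentials include `apikey` and `url`.
function findServiceCredentials(vcapJson, labelPrefix) {
  const services = JSON.parse(vcapJson || "{}");
  for (const [label, instances] of Object.entries(services)) {
    if (label.startsWith(labelPrefix) && instances.length > 0) {
      return instances[0].credentials; // first bound instance of that service
    }
  }
  return null; // not bound, or the app has not been restaged yet
}

const creds = findServiceCredentials(process.env.VCAP_SERVICES, "watson_vision_combined");
console.log(creds ? "Visual Recognition is bound" : "Visual Recognition is not bound yet");
```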

A small hint: at the bottom of the Node-RED lab page there is a link to the full JSON of the required Node-RED flow. Copy the flow's JSON, import it into the flow editor from the clipboard, and you will have the service running in about a minute! Do not forget to click the "Deploy" button to install new or changed flows.

Your service will look like this:

Now you can try your test service in your web browser through this simple web application; just append /reco to your Node-RED app's URL, for example: http://my-node-red-app.mybluemix.net/reco

Customizing the AI service with the custom classifier

If you want to add something new by creating a custom classifier, you need to provide thousands of pictures as positive examples and, as I was told, about half as many "negative" pictures, i.e. pictures that do not show the chosen class.

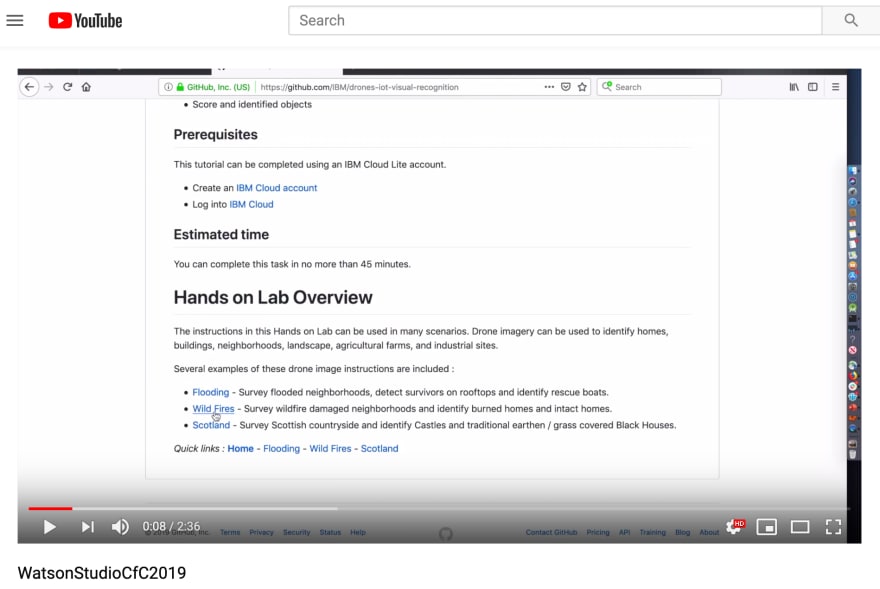

A very basic example will start to work with just a dozen pictures. A perfect example is provided by John Walicki, who trained a custom classifier to detect burnt houses in pictures taken by drones. You can follow his labs to build such a drone classifier; the labs on creating classifiers that detect houses destroyed by wildfires or flooded are on GitHub: https://github.com/IBM/drones-iot-visual-recognition
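Before zipping your pictures, a quick sanity check helps. This sketch applies the rule of thumb from earlier (about half as many negatives as positives) plus a minimum number of images per zip. The minimum of 10 per zip is what I recall from the v3 documentation and is passed as a parameter because it is an assumption; `checkTrainingSet` is a hypothetical helper.

```javascript
// Sketch: sanity-check planned training-set sizes before zipping them.
// ASSUMPTIONS: minPerZip = 10 images per zip (verify for your plan), and
// roughly half as many negative images as positive ones (rule of thumb).
function checkTrainingSet(positiveCount, negativeCount, minPerZip = 10) {
  const problems = [];
  if (positiveCount < minPerZip) {
    problems.push(`need at least ${minPerZip} positive images, have ${positiveCount}`);
  }
  if (negativeCount < minPerZip) {
    problems.push(`need at least ${minPerZip} negative images, have ${negativeCount}`);
  }
  // Rule of thumb: about half as many negatives as positives.
  const suggestedNegatives = Math.ceil(positiveCount / 2);
  if (negativeCount < suggestedNegatives) {
    problems.push(`consider about ${suggestedNegatives} negative images, have ${negativeCount}`);
  }
  return { ok: problems.length === 0, suggestedNegatives, problems };
}

console.log(checkTrainingSet(12, 10));    // a dozen positives: enough to start experimenting
console.log(checkTrainingSet(2000, 500)); // too few negatives for a larger set
```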

I created a short video showing how it is done step by step (note that the video is sped up 2 to 3 times): https://youtu.be/kW7cjuWuPS0

To train Watson Visual Recognition, you will use IBM Watson Studio. To recap, training the Visual Recognition service requires providing individual pictures or grouping them into zip files: one with the positive picture set and one with the negative picture set. In the free tier you can create two custom classifiers like this.
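Watson Studio handles the upload for you, but if you ever want to script the same training step, it maps onto a single multipart request. This is a sketch under assumptions: the v3 "create a classifier" endpoint (`POST {url}/v3/classifiers?version=2018-03-19`), a field named `<class>_positive_examples` per class zip, a shared `negative_examples` zip, and basic auth with the user name `apikey`. It needs Node.js 18+ for the global `fetch`, `FormData`, and `Blob`. The helper names, class names, file names, and environment variables are hypothetical.

```javascript
// Sketch: script classifier training instead of using the Watson Studio UI.
// ASSUMPTIONS: Visual Recognition v3 "create a classifier" request with fields
// "name", "<class>_positive_examples" (one zip per class) and "negative_examples";
// basic auth user "apikey". Requires Node.js 18+ (global fetch, FormData, Blob).
function buildClassifierForm(name, positiveZips, negativeZip) {
  const form = new FormData();
  form.append("name", name);
  for (const [className, zipBytes] of Object.entries(positiveZips)) {
    const blob = new Blob([zipBytes], { type: "application/zip" });
    form.append(`${className}_positive_examples`, blob, `${className}.zip`);
  }
  if (negativeZip) {
    const blob = new Blob([negativeZip], { type: "application/zip" });
    form.append("negative_examples", blob, "negative.zip");
  }
  return form;
}

async function createClassifier(serviceUrl, apikey, form) {
  const auth = Buffer.from(`apikey:${apikey}`).toString("base64");
  const res = await fetch(`${serviceUrl}/v3/classifiers?version=2018-03-19`, {
    method: "POST",
    headers: { Authorization: `Basic ${auth}` },
    body: form, // fetch sets the multipart boundary header itself
  });
  if (!res.ok) throw new Error(`Create classifier failed: HTTP ${res.status}`);
  return res.json();
}

// Example (not run here; file names and env variables are hypothetical):
// const fs = require("fs");
// const form = buildClassifierForm("burnt_houses",
//   { burnt: fs.readFileSync("burnt.zip") }, fs.readFileSync("negative.zip"));
// createClassifier(process.env.VR_URL, process.env.VR_APIKEY, form).then(console.log);
```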

Now, to see your custom classifier in action, expand your existing Node-RED flow with an additional function node and fill it with the code from step 3 of this repository, which also has more details: https://github.com/blumareks/ai-visrec-under-10min
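For a feel of what such a function node does, here is a hypothetical sketch; it is not the code from step 3 of the repository. It assumes the Visual Recognition node is configured to use your custom classifier and places the v3 classify response in `msg.result`, and that the classifier is named `burnt_houses` (a made-up name). It keeps the custom classifier's classes above a threshold and writes a readable summary to `msg.payload`. The body is wrapped in a function so it can be tested outside Node-RED.

```javascript
// Hypothetical function-node body: summarize the custom classifier's verdict.
// ASSUMPTIONS: msg.result holds a v3-style classify response, and
// CLASSIFIER_NAME matches the name you gave your classifier in Watson Studio.
function customClassifierNode(msg) {
  const CLASSIFIER_NAME = "burnt_houses"; // hypothetical classifier name
  const THRESHOLD = 0.5; // hypothetical confidence cut-off

  const images = (msg.result && msg.result.images) || [];
  // Look only at the first image and at our custom classifier.
  const classifier = images.length
    ? (images[0].classifiers || []).find((c) => c.name === CLASSIFIER_NAME)
    : undefined;
  const hits = classifier
    ? (classifier.classes || []).filter((c) => c.score >= THRESHOLD)
    : [];

  msg.payload = hits.length
    ? hits.map((c) => `${c.class} (${c.score.toFixed(2)})`).join(", ")
    : `No match from ${CLASSIFIER_NAME}`;
  return msg;
}
```

In Node-RED, paste only the body and wire the node between the Visual Recognition node and the node that renders the answer.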

Summary

With a custom classifier, you can equip your drone or software with the ability to detect survivors and victims of disasters. The power of Watson Visual Recognition can be used for good, in the CallForCode.org hackathon and beyond.

What solution are you going to implement with it? Let me know!

Follow me on Twitter @blumareks
