From cloud to edge

You may be familiar with various queue-like products including AWS SQS, Apache Kafka, and Redis. These technologies are at home in the datacenter where they're used to reliably and quickly hold and send events for processing. In the datacenter, the consumers of the queue are often able to scale based on the queue size to process a backlog of events more quickly. AWS Lambda, for example, will spawn new instances of the lambda to handle the events to avoid the queue getting too big.

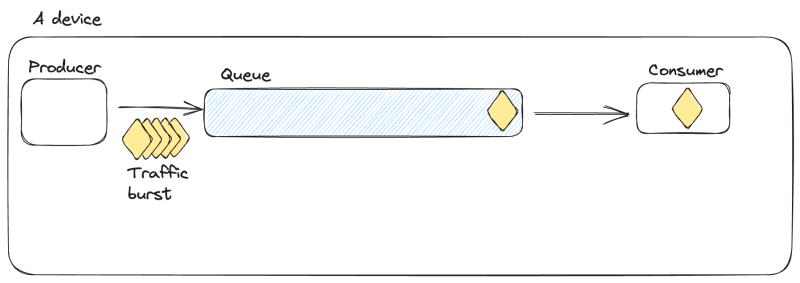

The world outside the datacenter is quite different though. Queue consumers cannot simply autoscale to handle increased load because the physical hardware is limited.

Making a queue bigger does not increase your system's transaction rate unless you can scale the processing resources based on the queue size. When processing resources are not scalable, such as within a single physical device, then increasing any queue size will not help that device process transactions any more quickly than with a smaller queue.

Where queues don't help

Something that I've seen several times when working with customers using AWS IoT Greengrass is that they'll see a log that says something to the effect of "queue is full, dropping this input" and their first instinct is to just make the queue bigger. Making the queue bigger may avoid the error, but only for so long if the underlying cause of the queue filling up is not addressed. If your system has a relatively constant transaction rate (measured in transactions per second (TPS)), then the queue will always fill up and overflow if the TPS going into the queue is higher than the TPS going out of the queue. If the queue capacity is enormous then it may take quite a long time to overflow, but ultimately it will overflow because TPS in > TPS out.

Let's now make this more concrete. If we have a lambda function running on an AWS IoT Greengrass device, then that lambda will pick up events from a queue and process them.

Let's say that the lambda can complete work at a rate of 1 TPS. If new events are added to this lambda's queue at less than or equal to 1 TPS then everything will be fine. If work comes in at 10 TPS though, then the queue is going to overflow.

Assume that the lambda has a queue capacity of 100 events. Events are added to the queue at 10 TPS while the lambda removes them at 1 TPS, so the queue grows by 9 events per second and starts overflowing after about 11 seconds (100 capacity / (10 TPS in - 1 TPS out) = 11.1 s). We could make the capacity bigger, but that only extends the time to overflow; it does not prevent the overflow from happening. Fundamentally, the lambda is unable to keep up with the amount of work because 1 TPS is less than 10 TPS.
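To see how little a bigger queue buys you, here is a minimal Python sketch of the calculation above. The function name `seconds_until_overflow` is made up for illustration, and it assumes the queue starts empty and both rates are perfectly constant.

```python
import math


def seconds_until_overflow(capacity, tps_in, tps_out):
    """Seconds until a queue that starts empty overflows.

    Returns math.inf when the consumer keeps up (tps_in <= tps_out).
    """
    net_rate = tps_in - tps_out  # events added to the backlog per second
    if net_rate <= 0:
        return math.inf
    return capacity / net_rate


# Same rates as the example: 10 TPS in, 1 TPS out, with growing capacities.
for capacity in (100, 1_000, 10_000):
    seconds = seconds_until_overflow(capacity, tps_in=10, tps_out=1)
    print(f"capacity {capacity}: overflows after about {seconds:.1f} s")
```

Multiplying the capacity by 100 only multiplies the time to overflow by 100; it never makes it infinite unless the input rate drops to the output rate or below.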

Now maybe you're thinking that lambdas should scale and fix the problem: "we just need 10 lambdas working at 1 TPS each and the problem is solved". Yes, it is technically correct that if you can perfectly scale to 10 instances then the problem would be solved for this level of load, but you need to remember that AWS IoT Greengrass and these lambdas are running on a single physical device. That single device only has so much compute power, so perhaps you can scale to 5 TPS with 5 or 6 lambda instances, but you then hit a brick wall because of the hardware limits.

So what can be done at this point? Perhaps the lambda can be optimized to process more quickly, but let's just say that it is as good as it gets. If the lambda cannot be optimized, then the only options are to accept that the queue will overflow and drop events, or to find a way to slow down the inputs to the queue.

What good are queues then?

You may now think that queues are good for nothing, but of course queues exist for a reason. You just need to understand which problems they can and cannot help with.

If the consumer of the queue can scale up the compute resources, such as AWS Lambda (lambda in the cloud, not on AWS IoT Greengrass) with AWS SQS, then a queue certainly makes sense and will help to process the events quickly.

On a single device, queues can help with bursty traffic. If your traffic is steady like in the example above, then queues won't help you. On the other hand, if you sometimes have 10 TPS and other times have 0 TPS input, then a queue (and even a large queue) can make sense.

Going back to the example from above, our lambda can process at 1 TPS. Let's say that our input is now very bursty where we'll get 10 TPS for 20 seconds and then 0 TPS for 200 seconds. This means that the queue would receive 200 events during the 20 second period. The lambda works through about 20 of them while the burst is still arriving, so the backlog peaks at roughly 180 events and then drains to 0 during the 200 second quiet period, since no data is coming in and data is flowing out at 1 TPS. If the queue size was 100 like in the earlier example, then the queue would have overflowed and we'd lose roughly 80 events even though in theory the lambda could have eventually processed them if the queue were large enough. So in this case, making the queue capacity at least 200 is reasonable and should minimize any overflow events.
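The bursty case can be checked with a small second-by-second simulation. This is only a sketch: `simulate` is a hypothetical helper, and it assumes that within each second all arrivals are enqueued first (anything that does not fit is dropped) and the consumer then removes up to `tps_out` events. A different ordering shifts the counts by an event or so.

```python
def simulate(capacity, tps_out, phases):
    """Simulate a bounded queue one second at a time.

    phases is a list of (tps_in, seconds) pairs applied in order.
    Returns (peak_depth, dropped, final_depth).
    """
    depth = peak = dropped = 0
    for tps_in, seconds in phases:
        for _ in range(seconds):
            # Enqueue this second's arrivals, dropping what does not fit.
            accepted = min(tps_in, capacity - depth)
            dropped += tps_in - accepted
            depth += accepted
            peak = max(peak, depth)
            # The consumer then processes up to tps_out events.
            depth -= min(tps_out, depth)
    return peak, dropped, depth


# 10 TPS for 20 seconds, then 0 TPS for 200 seconds, consumer at 1 TPS.
burst = [(10, 20), (0, 200)]
for capacity in (100, 200):
    peak, dropped, remaining = simulate(capacity, tps_out=1, phases=burst)
    print(f"capacity {capacity}: peak {peak}, dropped {dropped}, left over {remaining}")
```

With a capacity of 100 the simulation drops events during the burst, while a capacity of 200 absorbs the whole burst and the queue is empty again before the quiet period ends.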

To summarize, if average TPS input > average TPS output then the queue is going to overflow eventually and it does not matter how big you make the queue. The only options are to 1. increase the output TPS, 2. decrease the input TPS, or 3. accept that you will drop events. When your input TPS is relatively constant, keep the queue size small which will be more memory efficient and will show errors due to overflow sooner than a larger queue. Finding problems like this early on can then encourage you to understand the traffic pattern and processing transaction rate so you can then choose one of the 3 options for dealing with overflows.

Application to Greengrass

Lambda

In this post I used lambda as an example, so how about some specific recommendations for configuring a lambda's max queue size?

For a pinned lambda which will not scale based on load, start with a queue size of 10 or less. If you're able to calculate the expected incoming TPS and traffic pattern (steady or bursty) then you can change the queue size based on that data. I would not recommend going beyond perhaps 100-500. If your queue is still overflowing at those sizes then you probably need to find another solution instead of just increasing the size.

For on-demand lambdas which do scale based on load I'd recommend that you start with a queue size of 2x the number of worker lambdas that you want to have. This way effectively each worker has its own mini-queue of 2 items. Again, the same recommendations from above apply here too if you understand your traffic pattern and can calculate the optimal queue size.

Stream Manager

Stream Manager is a Greengrass component which accepts data locally and then (optionally) exports it to various cloud services. It is effectively a queue, connecting the local device to cloud services, where those cloud services are the consumer of the queue. Since it is a queue, the exact same logic applies to it. If data is written faster than the data is exported to the cloud, then eventually the queue is going to overflow and in this use case, some data would be removed from the queue before being exported. It is very important to understand how quickly data is coming into a stream and how quickly it can be exported based on the cloud service limits and your internet connection.
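To make the "data removed before being exported" behaviour concrete, here is a toy model in plain Python. It does not use the Stream Manager SDK or reflect its internals; it only assumes a fixed-size buffer that discards its oldest entry when a new one arrives while it is full, which `collections.deque(maxlen=...)` does. The function name `run` and the tick-based rates are invented for illustration.

```python
from collections import deque


def run(capacity, write_per_tick, export_per_tick, ticks):
    """Write and export items for a number of ticks.

    Returns (exported_ids, lost_count), where lost items were overwritten
    in the buffer before the exporter got to them.
    """
    buffer = deque(maxlen=capacity)  # a full deque drops its oldest item on append
    exported = []
    next_id = 0
    for _ in range(ticks):
        for _ in range(write_per_tick):  # local producer
            buffer.append(next_id)
            next_id += 1
        for _ in range(min(export_per_tick, len(buffer))):  # "cloud" exporter
            exported.append(buffer.popleft())
    lost = next_id - len(exported) - len(buffer)
    return exported, lost


exported, lost = run(capacity=5, write_per_tick=3, export_per_tick=1, ticks=4)
print("exported:", exported, "lost:", lost)
```

Once the writer outpaces the exporter, gaps appear in the exported IDs: those are the items that were overwritten while waiting in the buffer.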

MQTT Publish to IoT Core

When publishing from Greengrass to AWS IoT Core, all MQTT messages are queued in what's called the "spooler". This spooler may either store messages in memory or on disk depending on your configuration. The spooler is a queue with a configurable, limited size, so the same logic that applies to all queues applies to the spooler. AWS IoT Core limits each connection to a maximum of 100 TPS publishing, so if you're attempting to publish faster than 100 TPS through Greengrass, the spooler will inevitably fill up and reject some messages. To resolve this, you'd need to publish more slowly.
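One way to publish more slowly is to pace the publisher itself. Below is a minimal sketch of a fixed-interval throttle in Python. The `Throttle` class and the `publish` function are hypothetical placeholders, not part of any Greengrass SDK, and the 50 TPS target is just an arbitrary value chosen to stay under the 100 TPS limit.

```python
import time


class Throttle:
    """Spaces out calls so that at most max_tps of them start per second."""

    def __init__(self, max_tps, clock=time.monotonic, sleep=time.sleep):
        self._interval = 1.0 / max_tps
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = clock()

    def wait(self):
        """Block until the next call is allowed, then reserve the next slot."""
        delay = self._next_allowed - self._clock()
        if delay > 0:
            self._sleep(delay)
        self._next_allowed = max(self._clock(), self._next_allowed) + self._interval


def publish(message):
    """Hypothetical placeholder for whatever call actually sends the MQTT message."""


throttle = Throttle(max_tps=50)  # assumed target, below the 100 TPS limit
for i in range(5):
    throttle.wait()
    publish(f"reading {i}")
```

Throttling only moves the backlog to the producer. If the data is generated faster than the throttle allows on average, the producer still has to drop, batch, or downsample it.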

Further reading

For some deeper understanding of queuing see the following resources.
