Intro

Real-time multiplayer WebXR experiences are a really exciting prospect for the future of the internet. As the technology develops, we are beginning to see how they could be used, with the idea of the Metaverse being one example.

Having recently posted about how to create WebXR experiences that work across devices, I wanted to go a step further and explain the practical steps involved in connecting the users who are on these devices together.

I've made a gif of my partner (wearing an Oculus Quest 2), an example user (using a desktop browser on a MacBook Pro) and myself (wearing a HoloLens 2) interacting with a 3D model in the shared XR space (see below).

As a side note, I'm writing a library called Wrapper.js that will hopefully make creating collaborative WebXR experiences like the one above easier.

Overview

There is a lot to understand about how this web app is architected and the technologies used to build it, so this section breaks that information down.

To make the best use of this information, it's important to understand three key points: the concept, the tech stack and the app flow.

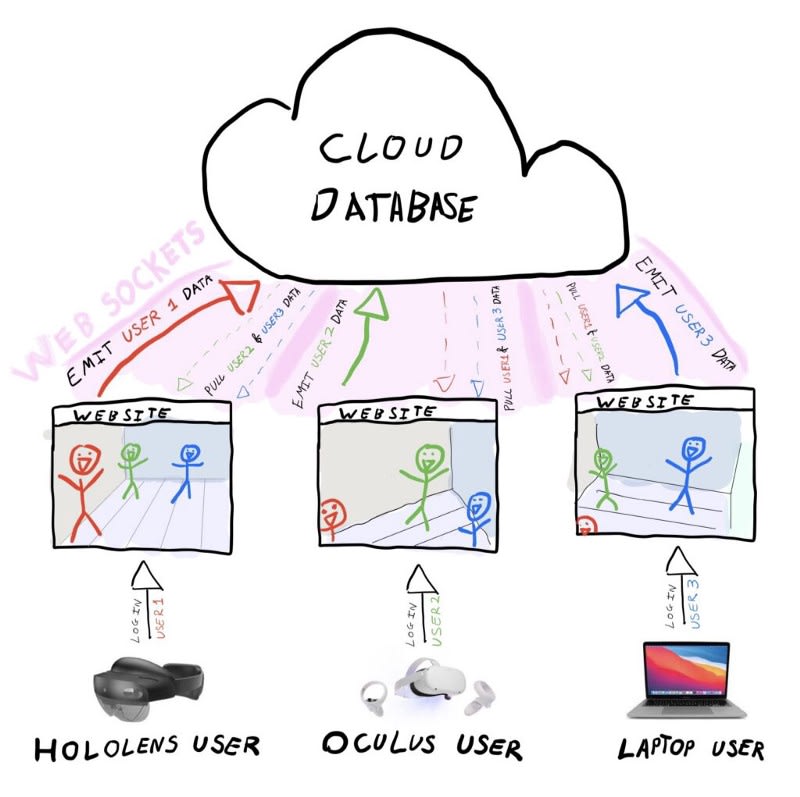

The Concept

This example works by creating an application that can render on different devices, giving each device a unique identifier (e.g. through user login), storing that device's positional data in a database in real time, and then rendering every user's data in WebGL.

The image below shows how this concept works across a Mixed Reality Headset (HoloLens 2), a Virtual Reality Headset (Oculus Quest 2) and a Laptop (MacBook Pro).

At a high level, this works as follows:

- A user visits the website on their device and logs in using their credentials. The site then renders appropriately for their device (thanks to the WebXR API)

- As they move around in the environment, their movement co-ordinates are sent in real time to a database and stored against their username

- They can see other users moving around the environment, because those users' movement data is rendered into the 3D scene

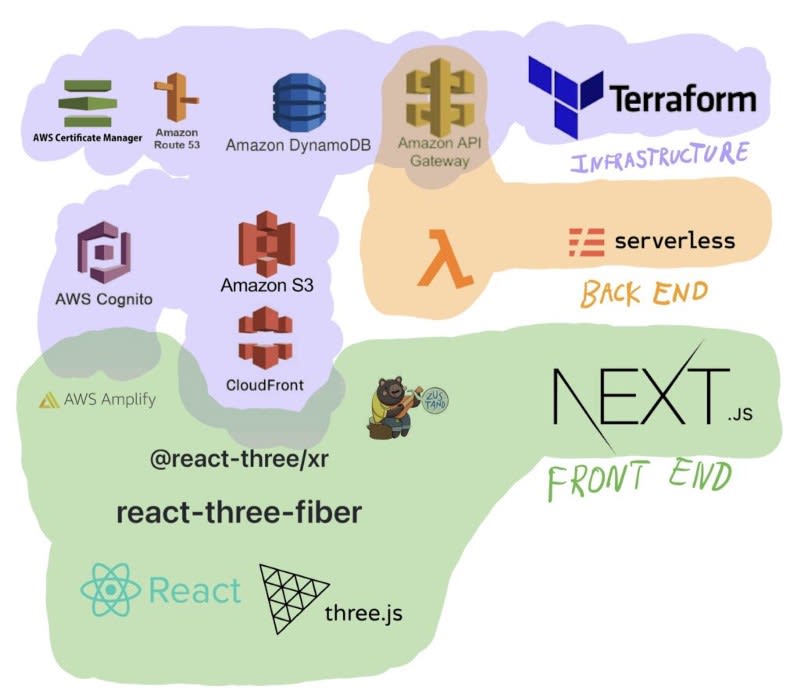
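To make the concept a little more concrete, here is a minimal TypeScript sketch of the data each device might share, plus the rule that each client renders everyone except itself. The `PlayerState` shape and the `othersToRender` helper are hypothetical names chosen for illustration; the original project's data model may differ.

```typescript
// Hypothetical shape of one user's shared state (illustrative only,
// not the original project's schema).
interface PlayerState {
  userId: string; // unique identifier obtained from the user's login
  position: [number, number, number]; // x, y, z co-ordinates in the 3D scene
}

// Each client renders every other user; its own view is already driven
// locally by the headset or laptop camera.
function othersToRender(
  players: Record<string, PlayerState>,
  selfId: string,
): PlayerState[] {
  return Object.values(players).filter((player) => player.userId !== selfId);
}

// Usage: the HoloLens user ("me") only renders the other two users.
const players: Record<string, PlayerState> = {
  me: { userId: "me", position: [0, 1.6, 0] },
  quest: { userId: "quest", position: [1, 1.6, -2] },
  laptop: { userId: "laptop", position: [-1, 1.2, -1] },
};
console.log(othersToRender(players, "me").map((p) => p.userId)); // [ 'quest', 'laptop' ]
```

Keying the records by the login identifier means a user who keeps moving simply overwrites their own entry rather than creating new ones.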

The Tech Stack

As this was originally written for Wrapper.js, it uses Terraform, Serverless Framework and Next.js.

The diagram below shows how these fit together, and the sections that follow explain each of the three categories in more detail:

Terraform

An Infrastructure as Code tool that manages all of the cloud infrastructure apart from the Back End logic:

- Amazon API Gateway (both HTTP and Websocket implementations), to which the Lambda functions from Serverless Framework are deployed

- DynamoDB, used to store the data that the Front End submits and retrieves via HTTP and Websocket requests to the API Gateway

- Amazon S3, for hosting the static Front End files exported by Next.js

- CloudFront, the Content Delivery Network (CDN) that serves your content from edge locations and lets you access it through a custom domain name

- Route 53, the DNS service whose records point your domain name at your CloudFront CDN and API Gateway

- AWS Certificate Manager, for generating SSL certificates for your domain name

- AWS Cognito, a service for managing user login information, used for federated identity to authorise Front End entry to the web app and Back End access to data

Serverless Framework

An Infrastructure as Code framework used to manage the local development and deployment of the Lambda functions that contain the Back End logic:

- Lambda functions, which are deployed to the API Gateways (created in Terraform) and execute Back End logic written in Node.js
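As an illustration of the kind of Back End logic these Lambda functions hold, here is a minimal TypeScript sketch of the HTTP handler that returns a user's profile image URL (Step 3 of the app flow). It assumes an HTTP API route protected by a Cognito JWT authorizer, which passes the verified token claims to the Lambda at `requestContext.authorizer.jwt.claims`. `ProfileLookup` stands in for a DynamoDB read and, like `makeGetProfileHandler`, is a hypothetical name.

```typescript
// The slice of an API Gateway HTTP API (payload format 2.0) event this sketch
// reads, assuming a Cognito JWT authorizer is attached to the route.
interface HttpApiEvent {
  requestContext: {
    authorizer?: {
      jwt?: { claims?: Record<string, string | number | boolean> };
    };
  };
}

interface HttpResult {
  statusCode: number;
  headers?: Record<string, string>;
  body: string;
}

// Hypothetical data access function; on AWS this would typically wrap a
// DynamoDB read keyed by the user's id.
type ProfileLookup = (userId: string) => Promise<{ imageUrl: string } | undefined>;

// Injecting the lookup keeps the handler testable without AWS credentials.
function makeGetProfileHandler(lookup: ProfileLookup) {
  return async (event: HttpApiEvent): Promise<HttpResult> => {
    // `sub` is the unique, stable user id that Cognito puts in its tokens.
    const userId = event.requestContext.authorizer?.jwt?.claims?.sub;
    if (typeof userId !== "string") {
      return { statusCode: 401, body: JSON.stringify({ message: "Unauthorised" }) };
    }
    const profile = await lookup(userId);
    if (!profile) {
      return { statusCode: 404, body: JSON.stringify({ message: "Profile not found" }) };
    }
    return {
      statusCode: 200,
      headers: { "content-type": "application/json" },
      body: JSON.stringify({ imageUrl: profile.imageUrl }),
    };
  };
}

// Usage with an in-memory stand-in for the database.
const handler = makeGetProfileHandler(async (id) =>
  id === "user-123" ? { imageUrl: "https://example.com/user-123.png" } : undefined,
);
```

In a deployed function, `handler` would be exported from the module that the Serverless Framework configuration points at, with `lookup` backed by the real DynamoDB table.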

Next.js

A React.js framework that structures all of the Front End 2D and 3D logic into an easy-to-navigate component architecture:

- AWS Amplify, a Front End library that provides out-of-the-box components and helper functions that make user logins simple and secure

- Zustand, a library that makes it easy to manage global state across your application

- React-Three-XR, a library that lets your React-Three-Fiber application use the WebXR API

- React-Three-Fiber, a library that makes integrating Three.js into React components simple, quick and efficient

- Three.js, a library for creating 3D content in the browser using WebGL

- React.js, a library for splitting your JavaScript into components

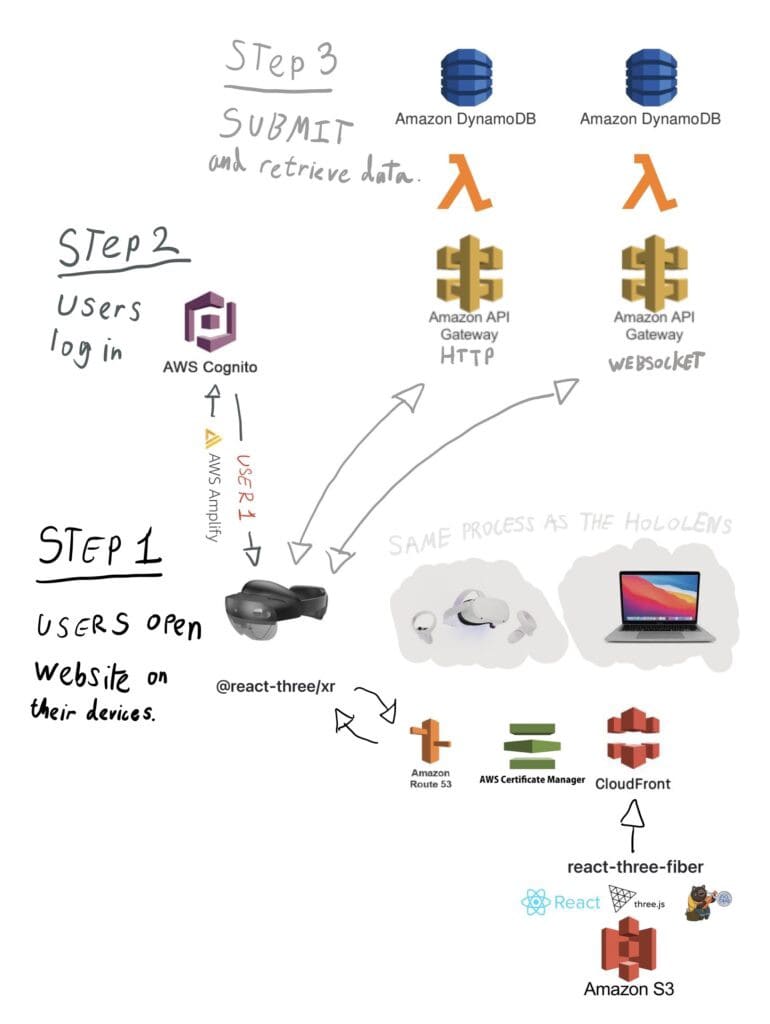
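On the Front End, Zustand would be a natural place to keep every user's latest position. The sketch below shows only the state-update logic, written as a plain TypeScript function so it runs without any dependencies; `PlayersSlice` and `applyPlayerUpdates` are hypothetical names. Inside a Zustand store it could be called from the store's `set` function, e.g. `set((state) => applyPlayerUpdates(state, updates))`.

```typescript
interface PlayerState {
  userId: string;
  position: [number, number, number];
}

// One entry per user, keyed by their unique id, so repeated updates from
// the same user overwrite that entry instead of adding duplicates.
interface PlayersSlice {
  players: Record<string, PlayerState>;
}

// Returns a new `players` object rather than mutating the old one, so any
// component that selects `players` sees a new reference and re-renders.
function applyPlayerUpdates(state: PlayersSlice, updates: PlayerState[]): PlayersSlice {
  const players = { ...state.players };
  for (const update of updates) {
    players[update.userId] = update;
  }
  return { players };
}

// Usage: alice moves and bob joins.
const before: PlayersSlice = { players: { alice: { userId: "alice", position: [0, 0, 0] } } };
const after = applyPlayerUpdates(before, [
  { userId: "alice", position: [1, 0, 0] },
  { userId: "bob", position: [2, 0, -1] },
]);
console.log(Object.keys(after.players)); // [ 'alice', 'bob' ]
```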

The App Flow

If you were to map out when these technologies get used within this example, it would look something like the below diagram.

If you start at the bottom of the diagram, you can see that all the Front End files are exported statically and hosted on the S3 bucket.

These files are then distributed through the CloudFront CDN, served with an SSL certificate from AWS Certificate Manager and given a custom domain name with Amazon Route 53.

At this point, Step 1 begins:

- The user opens the website on their device (e.g. the HoloLens 2) by visiting the domain name

- React-Three-XR then renders the website according to the device's capabilities (in this case, those of a mixed reality headset)
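React-Three-XR takes care of this for you, but it can help to see the underlying WebXR Device API check. The sketch below asks `isSessionSupported()` which immersive session modes the device offers. Preferring AR over VR, and falling back to a regular non-immersive view, is an assumption made for illustration, and `pickSessionMode` is a hypothetical helper rather than part of React-Three-XR.

```typescript
type SessionMode = "immersive-ar" | "immersive-vr" | "inline";

// The one method of the WebXR `XRSystem` (`navigator.xr`) this sketch uses.
interface XRSystemLike {
  isSessionSupported(mode: SessionMode): Promise<boolean>;
}

// Prefer mixed reality, then virtual reality; otherwise render a normal
// (non-immersive) 3D view, as in a laptop browser.
async function pickSessionMode(xr: XRSystemLike | undefined): Promise<SessionMode> {
  if (!xr) return "inline"; // the browser has no WebXR support at all
  if (await xr.isSessionSupported("immersive-ar")) return "immersive-ar";
  if (await xr.isSessionSupported("immersive-vr")) return "immersive-vr";
  return "inline";
}
```

In a browser this would be called with `navigator.xr`, which is `undefined` where WebXR is unavailable (TypeScript types for it come from the `@types/webxr` package).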

Next up is Step 2:

- The user logs in to their AWS Cognito account using the AWS Amplify library on the Front End

- Having logged in, the website now has a unique identifier for the person that is using that device
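The unique identifier typically comes from the `sub` claim of the Cognito tokens issued at login. Amplify already exposes the decoded claims (for example via `fetchAuthSession()` in Amplify v6), so you would not normally decode a token yourself; the sketch below, with the hypothetical helpers `decodeJwtPayload` and `userIdFromIdToken`, only shows where that identifier lives inside the JWT. It does not verify the token's signature, so it is only suitable for client-side use, with the Back End verifying tokens independently.

```typescript
// Reads the payload of a JWT (e.g. a Cognito ID token) WITHOUT verifying it.
function decodeJwtPayload(token: string): Record<string, unknown> {
  const payload = token.split(".")[1];
  if (!payload) throw new Error("Token is not a JWT");
  // JWTs use base64url; convert to standard base64 and restore the padding atob expects.
  const base64 = payload.replace(/-/g, "+").replace(/_/g, "/");
  const padded = base64 + "=".repeat((4 - (base64.length % 4)) % 4);
  // atob yields one character per byte, which is fine for ASCII claims like `sub`.
  return JSON.parse(atob(padded));
}

// The `sub` claim is Cognito's unique, stable identifier for the user.
function userIdFromIdToken(idToken: string): string {
  const sub = decodeJwtPayload(idToken).sub;
  if (typeof sub !== "string") throw new Error("Token has no `sub` claim");
  return sub;
}
```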

Once the user has logged in and uniquely identified themselves, they are set up nicely for Step 3:

- The user then interacts with two kinds of API: HTTP and Websocket

- The HTTP API is called once to get that user's profile image, which can then be rendered in WebGL

- When the HTTP API is called, it triggers a Lambda function that uses the user's login details to query the database

- The Amazon DynamoDB database stores information linked to each user's login credentials, such as a profile image URL

- The Websocket API is used whenever the Front End needs to update the database, and to send data back to the Front End whenever the database is updated

- As a user moves in the 3D world, their position co-ordinates are submitted to the Websocket API in real time

- When the Websocket API is triggered, a Lambda function takes the submitted data and saves it to the DynamoDB database

- Once the data is saved, all of the stored positional data is returned to the Front End, where the 3D renderer visualises every object that has positional data
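The Websocket half of Step 3 can be sketched as a single Lambda handler that validates a position message, saves it, and sends the full set of positions back out. This minimal TypeScript sketch rests on several assumptions: the message format `{ action, userId, position }` is hypothetical (`action` would match a route selection expression such as `$request.body.action`), and `savePosition`, `loadAllPositions` and `broadcast` are hypothetical injected functions that, on AWS, might wrap DynamoDB writes and reads and API Gateway's `PostToConnection` call. Taking `userId` from the message body keeps the example short; a real deployment would more likely derive it from the authenticated connection.

```typescript
interface PlayerState {
  userId: string;
  position: [number, number, number];
}

// The slice of an API Gateway WebSocket event this sketch reads.
interface WebSocketEvent {
  body?: string | null;
}

// Hypothetical dependencies, injected so the handler runs without AWS.
interface PositionDeps {
  savePosition(state: PlayerState): Promise<void>;
  loadAllPositions(): Promise<PlayerState[]>;
  broadcast(message: string): Promise<void>;
}

// Returns a PlayerState for a well-formed message, or undefined otherwise.
function parsePositionMessage(body: string | null | undefined): PlayerState | undefined {
  if (!body) return undefined;
  let parsed: unknown;
  try {
    parsed = JSON.parse(body);
  } catch {
    return undefined; // not valid JSON
  }
  if (typeof parsed !== "object" || parsed === null) return undefined;
  const { userId, position } = parsed as { userId?: unknown; position?: unknown };
  if (typeof userId !== "string" || !Array.isArray(position) || position.length !== 3) {
    return undefined;
  }
  if (!position.every((n) => typeof n === "number" && Number.isFinite(n))) return undefined;
  return { userId, position: [position[0], position[1], position[2]] };
}

function makeUpdatePositionHandler(deps: PositionDeps) {
  return async (event: WebSocketEvent): Promise<{ statusCode: number }> => {
    const state = parsePositionMessage(event.body);
    if (!state) return { statusCode: 400 };
    await deps.savePosition(state);
    // Send every stored position back out so each client can render all users.
    const players = await deps.loadAllPositions();
    await deps.broadcast(JSON.stringify({ type: "positions", players }));
    return { statusCode: 200 };
  };
}

// Usage with in-memory stand-ins for DynamoDB and the connected clients.
const table = new Map<string, PlayerState>();
const sent: string[] = [];
const handler = makeUpdatePositionHandler({
  savePosition: async (state) => { table.set(state.userId, state); },
  loadAllPositions: async () => Array.from(table.values()),
  broadcast: async (message) => { sent.push(message); },
});
```

Broadcasting the whole set keeps each client's logic simple; with many users, sending only the changed entry would reduce traffic.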

Conclusion

I've tried my best to simplify the concepts in this post, so that the processes behind real-time multiplayer WebXR experiences are easier to digest.

In the next post, I will walk through the actual code used to render multiple users' positions in real time.

In the meantime, I hope you enjoyed this post. Have fun :D
