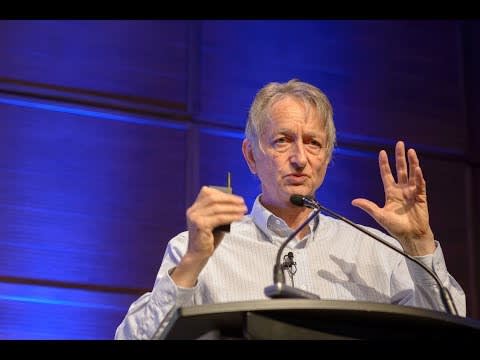

Geoffrey Hinton is referred to by the deep learning community as the "Godfather of AI" and a "deep learning hero". He is one of the masterminds who helped shape modern deep learning methods.

Artificial Intelligence is the hottest trending technology right now, thanks in particular to deep learning methods. Deep learning is part of a broader family of machine learning techniques based on artificial neural networks, and it is behind many recent breakthroughs in working with unstructured data, such as image and text recognition.

There are many heroes in the field of deep learning, and Ian Goodfellow is my favorite among them. Geoffrey Hinton, a cognitive psychologist and computer scientist, stands out in particular: he co-authored the highly cited 1986 paper that popularized the backpropagation algorithm for training multi-layer neural networks, although he and his co-authors were not the first to propose the approach, which had been used earlier in different and indirect forms. Hinton, alongside Yoshua Bengio and Yann LeCun, won the 2018 Turing Award for conceptual breakthroughs in deep learning. He is also a co-author of many other important deep learning papers, including those on Boltzmann machines, capsule neural networks, and dropout.

I'm currently doing the Deep Learning Specialization on Coursera, taught by Andrew Ng. This interview of Hinton by Andrew Ng is very interesting, and I would like to share some of the papers Hinton mentioned in the video.

Papers I found helpful:

- Backpropagation (the paper that popularized it) - Learning representations by back-propagating errors. Rumelhart, Hinton and Williams

- Dropout - Dropout: A Simple Way to Prevent Neural Networks from Overfitting

Other papers he mentioned:

- Boltzmann machines - Boltzmann Machines: Constraint satisfaction networks that learn. Hinton, Sejnowski and Ackley

- Fast learning algorithm - A Fast Learning Algorithm for Deep Belief Nets. Hinton, Osindero and Teh

- ReLU - Rectified Linear Units Improve Restricted Boltzmann Machines. Nair and Hinton

- Recirculation algorithm - Learning representations by recirculation. Hinton and McClelland

- Capsule networks - Dynamic Routing between Capsules. Sabour, Frosst and Hinton

I also found this part of the interview interesting and helpful:

Andrew: If someone wants to break into deep learning, what should they do? What advice would you have for them to get into deep learning?

Hinton: Okay, so my advice is sort of read the literature, but don't read too much of it. So this is advice I got from my adviser, which is very unlike what most people say. Most people say you should spend several years reading the literature and then you should start working on your own ideas. And that may be true for some researchers, but for creative researchers I think what you want to do is read a little bit of the literature. And notice something that you think everybody is doing wrong, I'm contrary in that sense. You look at it and it just doesn't feel right. And then figure out how to do it right. And then when people tell you, that's no good, just keep at it. And I have a very good principle for helping people keep at it, which is either your intuitions are good or they're not. If your intuitions are good, you should follow them and you'll eventually be successful. If your intuitions are not good, it doesn't matter what you do.

Andrew: I see, yeah, that's great, yeah. Let's see, any other advice for people that want to break into AI and deep learning?

Hinton: I think that's basically, read enough so you start developing intuitions. And then, trust your intuitions and go for it, don't be too worried if everybody else says it's nonsense.

Andrew: And I guess there's no way to know if others are right or wrong when they say it's nonsense, but you just have to go for it, and then find out.

Hinton: Right, but there is one thing, which is, if you think it's a really good idea, and other people tell you it's complete nonsense, then you know you're really on to something.

Andrew: I see, and research topics, new grad students should work on capsules and maybe unsupervised learning, any other?

Hinton: One good piece of advice for new grad students is, see if you can find an adviser who has beliefs similar to yours. Because if you work on stuff that your adviser feels deeply about, you'll get a lot of good advice and time from your adviser. If you work on stuff your adviser's not interested in, all you'll get is, you get some advice, but it won't be nearly so useful.

Some other interviews and talks worth watching:

Interview mentioned in the post:

I hope you find this post helpful. If you would like to hear more about my journey in deep learning and data science,
