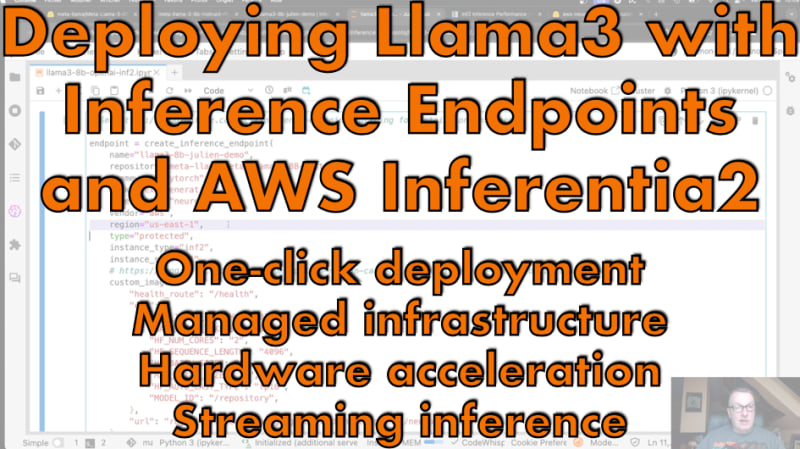

In this video, I walk you through deploying a Meta Llama 3 8B model on the AWS Inferentia2 accelerator with Hugging Face Inference Endpoints, first through the Hugging Face UI, then through the Hugging Face API.

I use the latest version of the Hugging Face Text Generation Inference container and show you how to run streaming inference with the OpenAI client library. I also discuss Inferentia2 benchmarks.
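As a rough idea of what streaming inference with the OpenAI client library can look like, here is a minimal sketch. It is not the exact code from the video. It assumes the endpoint exposes the OpenAI-compatible chat completions route of Text Generation Inference under `/v1/`, and that the endpoint URL and a Hugging Face token are stored in the `ENDPOINT_URL` and `HF_TOKEN` environment variables (both names are hypothetical). The model name `"tgi"` is a placeholder, since the endpoint serves a single model.

```python
import os


def stream_chat(client, prompt, max_tokens=256):
    """Yield text fragments from a streaming chat completion.

    `client` is expected to behave like `openai.OpenAI`: it must expose
    `client.chat.completions.create(...)`.
    """
    stream = client.chat.completions.create(
        model="tgi",  # assumption: placeholder name, the endpoint serves one model
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
        stream=True,  # ask the server to send tokens as they are generated
    )
    for chunk in stream:
        # Some chunks carry no choices or no text (for example the final one).
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:
            yield piece


def main():
    # Imported here so the helper above can be reused without the package.
    from openai import OpenAI  # pip install openai

    client = OpenAI(
        # Assumption: ENDPOINT_URL and HF_TOKEN are hypothetical variable names.
        base_url=os.environ["ENDPOINT_URL"].rstrip("/") + "/v1/",
        api_key=os.environ["HF_TOKEN"],
    )
    for piece in stream_chat(client, "Why are transformers better than LSTMs?"):
        print(piece, end="", flush=True)
    print()
```

Calling `main()` prints the answer token by token as it arrives. Keeping the streaming loop in `stream_chat` separate from client creation makes it easy to point the same code at another OpenAI-compatible endpoint.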
