The integration of vision and language has opened up applications that were out of reach only a few years ago. One of the most exciting developments in this area is the emergence of visual language models (VLMs), which can understand images and answer questions about them in natural language. These models are changing how we process visual content and are making AI-powered tools more intuitive and accessible.

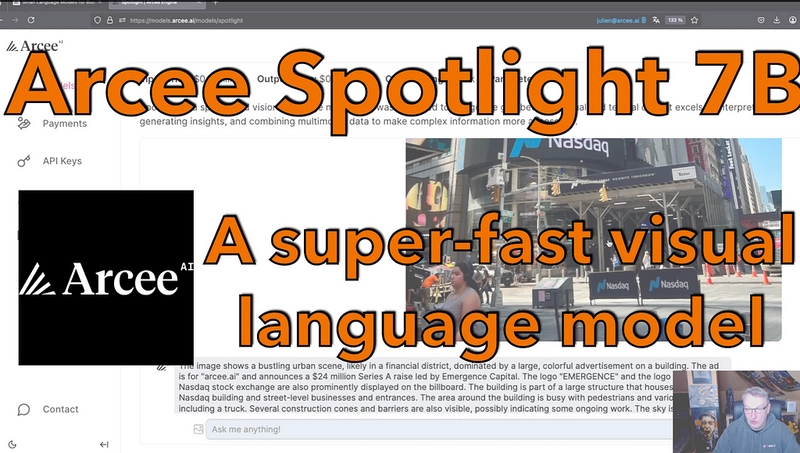

In this blog post, we will explore Spotlight, a new VLM introduced by Arcee. Spotlight is designed for robust and efficient interaction with image content, which makes it useful for a wide range of applications, from content creation to data management. We will go through its features, demonstrate its capabilities, and discuss potential use cases.

What is Spotlight?

Spotlight is a 7-billion-parameter visual language model developed by Arcee. It is based on the Qwen 2.5-VL architecture, which Arcee’s research team has further refined. The model is hosted on Arcee’s inference platform, Model Engine, which is compatible with the OpenAI API, so developers can integrate Spotlight into their existing workflows with little effort.

Key Features of Spotlight

• Parameter Count: 7 billion parameters, making it a lightweight yet powerful model.

• Context Length: 32k tokens, allowing for rich and detailed interactions with images.

• Pricing: $0.10 per million input tokens and $0.40 per million output tokens, making it cost-effective for large-scale applications.

• API Compatibility: Compatible with the OpenAI API, so existing OpenAI client code can be reused.
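To put the pricing in perspective, here is a small back-of-the-envelope sketch. The prices come from the list above; the workload (10,000 images at roughly 1,500 input tokens and 200 output tokens each) is purely hypothetical, since the number of tokens an image consumes depends on its resolution and on the prompt.

```python
# Prices quoted in this post, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 0.10
OUTPUT_USD_PER_MILLION = 0.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for a given token volume."""
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


# Hypothetical workload: 10,000 images, ~1,500 input and ~200 output tokens each.
total = estimate_cost_usd(10_000 * 1_500, 10_000 * 200)
print(f"Estimated cost: ${total:.2f}")  # Estimated cost: $2.30
```

Under these assumed token counts, the input side costs $1.50 and the output side $0.80.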

Demonstrating Spotlight

Using Model Engine

To demonstrate Spotlight, we will first use Arcee’s Model Engine, a platform that hosts various small language models, including Spotlight. Model Engine provides a user-friendly interface and API access, making it easy to test and deploy models.

Let’s start with a simple example. We have an image of the ARCEE advertisement on the NASDAQ building, which announces our $24 million Series A raise led by Emergence Capital. When we give this image to Spotlight, the model generates a detailed and accurate description:

• Scene: A bustling urban scene in a financial district.

• Ad: A large advertisement for ARCEE on a building.

• Text: The ad mentions the $24 million Series A raise.

• Environment: The area is busy with pedestrians and vehicles, including trucks and construction cones.

• Weather: Clear blue sky, suggesting a sunny day.

Spotlight’s ability to accurately pick up text and logos is particularly noteworthy, as this is a challenge for many image models.

Using the OpenAI API

For developers who prefer a programmatic approach, Spotlight can be accessed through Model Engine’s OpenAI-compatible API. Because Model Engine uses the same API structure as OpenAI, existing OpenAI client code works once it points at Model Engine, and switching between models is easy.

To demonstrate this, we will use a Python client to interact with the API. We will pass an image to Spotlight and ask it to provide a caption and context.
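As a rough sketch of the setup, the official `openai` Python package can be pointed at any OpenAI-compatible endpoint through its `base_url` argument. The base URL and model name below are placeholders, not values from this post, and the environment variable names are made up for the example; take the real values from the Model Engine documentation.

```python
import os

# Placeholder values (assumptions): replace them with the base URL and model
# identifier listed in the Model Engine documentation.
BASE_URL = os.environ.get("MODEL_ENGINE_BASE_URL", "https://model-engine.example/v1")
MODEL = os.environ.get("SPOTLIGHT_MODEL", "spotlight")


def client_kwargs(api_key: str, base_url: str = BASE_URL) -> dict:
    """Collect the keyword arguments passed to openai.OpenAI()."""
    return {"api_key": api_key, "base_url": base_url}


def make_client(api_key: str):
    """Create an OpenAI client that talks to Model Engine instead of OpenAI."""
    from openai import OpenAI  # third-party package: pip install openai

    return OpenAI(**client_kwargs(api_key))
```

The import lives inside `make_client` only so that the rest of the snippet can be read and tested without the package installed.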

- Passing the Image via URL:

Prompt: “Where was this picture taken?”

Image: A recognizable image (e.g., a famous landmark).

Output: The model correctly identifies the location and provides context, such as the event and date (e.g., Bastille Day in Paris).

- Passing the Image Inline:

Method: Encode the image in base64 format and pass it inline in the API request.

Use Case: This method is useful when dealing with local images or when you want to avoid HTTP requests.
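Both methods can be sketched with the message format of the OpenAI chat completions API, where an image is an `image_url` content part holding either a regular URL or a base64 data URL. This assumes Model Engine accepts the same shapes; the image URL is a placeholder, and the `ask` helper expects a client created with the `openai` package.

```python
import base64
import mimetypes


def image_url_part(url: str) -> dict:
    """Content part that references an image by URL (or by data URL)."""
    return {"type": "image_url", "image_url": {"url": url}}


def inline_image_part(image_bytes: bytes, filename: str) -> dict:
    """Content part that embeds the image itself as a base64 data URL."""
    mime = mimetypes.guess_type(filename)[0] or "image/jpeg"
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return image_url_part(f"data:{mime};base64,{encoded}")


def build_messages(prompt: str, image_part: dict) -> list:
    """Build one user turn: the text question followed by the image."""
    return [{
        "role": "user",
        "content": [{"type": "text", "text": prompt}, image_part],
    }]


def ask(client, model: str, messages: list) -> str:
    """Send the messages with an openai.OpenAI client and return the reply text."""
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


# By URL. The address is a placeholder; use a publicly reachable image.
by_url = build_messages(
    "Where was this picture taken?",
    image_url_part("https://example.com/landmark.jpg"),
)

# Inline. In practice, read the bytes from a local file, for example
# pathlib.Path("landmark.jpg").read_bytes(); three dummy bytes stand in here.
inline = build_messages(
    "Where was this picture taken?",
    inline_image_part(b"\xff\xd8\xff", "landmark.jpg"),
)
```

Calling `ask(client, model, by_url)` or `ask(client, model, inline)` then sends the request. The inline variant makes the request body larger, but it needs no publicly hosted copy of the image.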

One of the most powerful features of Spotlight is its ability to generate structured metadata from images. This is particularly useful for content management, image search, and data storage. For example, given an image of a famous landmark, Spotlight can generate metadata in JSON format, including:

• Country: France

• City: Paris

• Landmark: Arc de Triomphe

• Short Description: “Air show over the Arc de Triomphe with colorful smoke trails.”

• Detailed Description: A more elaborate description of the scene.

• Themes: “Military parade, fireworks, national holiday.”

• Keywords: “Bastille Day, Paris, Arc de Triomphe, fireworks, military parade.”
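One way to get this kind of output is to ask for JSON explicitly in the prompt and validate the reply before storing it. The sketch below is an illustration, not Arcee's own code: the key names and the prompt wording are assumptions modeled on the fields above, and the sample reply is hand-written from the values in this post rather than captured from the model.

```python
import json

# Illustrative key names, modeled on the fields listed in this post.
METADATA_FIELDS = [
    "country", "city", "landmark", "short_description",
    "detailed_description", "themes", "keywords",
]

METADATA_PROMPT = (
    "Describe this image as a single JSON object with exactly these keys: "
    + ", ".join(METADATA_FIELDS)
    + ". Use lists of strings for themes and keywords. Return only the JSON."
)

FENCE = "`" * 3  # Markdown code-fence marker that models sometimes add


def parse_metadata(reply: str) -> dict:
    """Parse a model reply into a dict, tolerating a surrounding code fence."""
    text = reply.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line, then everything from the closing fence on.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    data = json.loads(text)
    missing = [key for key in METADATA_FIELDS if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data


# Hand-written sample reply built from the example values in this post.
sample_reply = FENCE + "json\n" + json.dumps({
    "country": "France",
    "city": "Paris",
    "landmark": "Arc de Triomphe",
    "short_description": "Air show over the Arc de Triomphe with colorful smoke trails.",
    "detailed_description": "A more elaborate description of the scene.",
    "themes": ["Military parade", "fireworks", "national holiday"],
    "keywords": ["Bastille Day", "Paris", "Arc de Triomphe", "fireworks", "military parade"],
}) + "\n" + FENCE

metadata = parse_metadata(sample_reply)
print(metadata["landmark"])  # Arc de Triomphe
```

Checking for missing keys up front means a malformed reply fails loudly instead of ending up in a search index or database.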

Performance and Efficiency

Spotlight’s relatively small size (7B parameters) keeps inference fast and inexpensive, which makes it suitable for real-time applications and large-scale image processing. Speed is a significant advantage in scenarios where low latency is crucial.

Conclusion

Spotlight represents a significant advancement in the field of visual language models, offering a powerful and efficient tool for interacting with images using natural language. Its ability to generate accurate descriptions, captions, and structured metadata opens up a wide range of applications, from content creation to data management.

If you are interested in exploring Spotlight and other models by Arcee, we encourage you to:

• Learn more about Arcee Orchestra in our launch blog post, “Taking the Stage: Arcee Orchestra”

• Watch more videos on the Arcee AI YouTube channel

• Follow Arcee AI on LinkedIn to stay updated on the latest developments and content.

We look forward to seeing the innovative ways in which you will use Spotlight to enhance your projects and workflows. Keep rocking!
