Following up on my recent “Hugging Face on AWS accelerators” deep dive, this new video zooms in on distributed training with NeuronX Distributed Optimum Neuron and AWS Trainium.

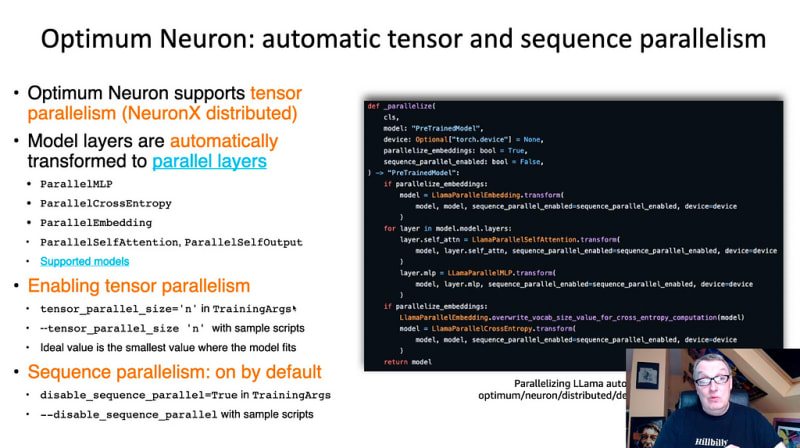

First, we explain the basics and benefits of advanced distributed techniques like tensor parallelism, pipeline parallelism, sequence parallelism, and DeepSpeed ZeRO. Then, we discuss how these techniques are implemented in NeuronX Distributed and Optimum. Finally, we launch an Amazon EC2 Trainium-powered instance and demonstrate these techniques with distributed training runs on the TinyLlama and Llama 2 7B models.

Of course, we share results on training time and cost, which will probably surprise you!

Top comments (0)