CNN - the concept behind recent breakthroughs and developments in deep learning

These are various datasets that I used for applying convolutional neural networks.

- MNIST

- CIFAR-10

- ImageNet

Before going for transfer learning, try to improve your base CNN models.

Table of Contents

- Classify Hand-written Digits on the MNIST Dataset

- Identify Images from the CIFAR-10 Dataset using CNNs

- Categorize Images of the ImageNet Dataset using CNNs

Classify Hand-written Digits

If you're studying deep learning, you have almost certainly seen this picture already. MNIST is often used for practicing image classification algorithms because it is a fairly easy dataset to conquer. I therefore recommend it as your first dataset if you have just forayed into this field.

- MNIST is a well-known dataset in computer vision, composed of images of handwritten digits (0-9). It is split into a training set of 60,000 images and a test set of 10,000 images, and each image is 28x28 pixels.

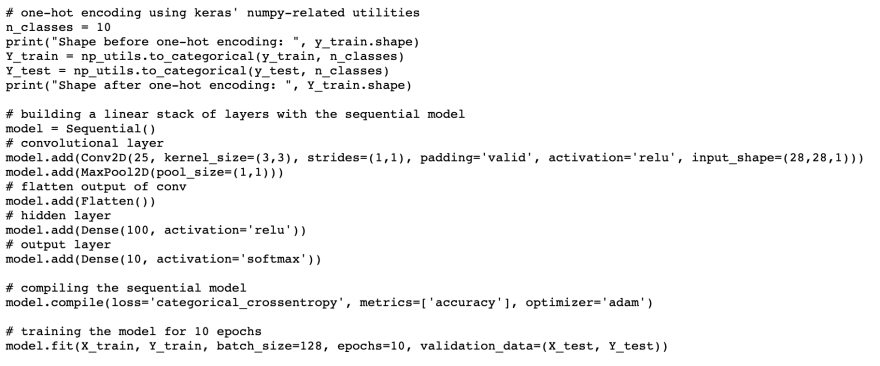

- Before training a CNN model, let's first build a basic Fully Connected Neural Network for the dataset as a baseline.

Fully Connected Neural Network

- Flatten the input image dimensions to 1D (width x height = 28 x 28 = 784 values)

- Normalize the image pixel values (divide by 255)

- One-hot encode the label column (the digit class)

- Build a model architecture (Sequential) with Dense layers


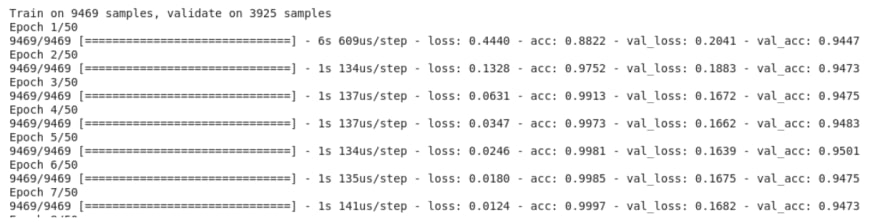
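The preprocessing steps and the Dense architecture listed above can be sketched roughly as follows. This is a minimal sketch, not the exact model from the post: the random arrays stand in for MNIST so that the snippet runs without a download, and the hidden layer width of 100 is an assumption.

```python
import numpy as np
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Sequential
from tensorflow.keras.utils import to_categorical

# Stand-in for MNIST so the sketch runs offline; in practice these arrays
# would come from tensorflow.keras.datasets.mnist.load_data().
rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(64, 28, 28), dtype=np.uint8)
y_train = rng.integers(0, 10, size=(64,))

# 1. Flatten each 28x28 image into a 784-element vector.
x_flat = x_train.reshape(len(x_train), 28 * 28).astype("float32")

# 2. Scale pixel values from [0, 255] to [0, 1].
x_flat /= 255.0

# 3. One-hot encode the digit labels (10 classes).
y_onehot = to_categorical(y_train, num_classes=10)

# 4. A small fully connected model; the width of 100 is an assumed value.
model = Sequential([
    Input(shape=(784,)),
    Dense(100, activation="relu"),
    Dense(10, activation="softmax"),  # one probability per digit
])
```

The softmax output has 10 units to match the one-hot labels, one per digit class.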

Train the model and make predictions
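As a rough illustration of this step, the snippet below compiles a small Dense model, trains it, and turns the predicted probabilities into digit labels. The data is random and only mimics the shape of flattened MNIST, so the accuracy it reports is meaningless; the optimizer, batch size, and epoch count are assumptions rather than the settings used in this post.

```python
import numpy as np
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Sequential

# Random stand-in data with the shape of flattened MNIST; real inputs would
# be the preprocessed images (scaled to [0, 1]) and one-hot labels.
rng = np.random.default_rng(0)
x = rng.random((128, 784), dtype=np.float32)
y = np.eye(10, dtype=np.float32)[rng.integers(0, 10, size=128)]

model = Sequential([
    Input(shape=(784,)),
    Dense(100, activation="relu"),
    Dense(10, activation="softmax"),
])
model.compile(loss="categorical_crossentropy", optimizer="adam",
              metrics=["accuracy"])

# Hold out 20% of the samples to monitor validation metrics while training.
history = model.fit(x, y, batch_size=32, epochs=2,
                    validation_split=0.2, verbose=0)

# predict() returns one probability per class; argmax picks the digit.
probs = model.predict(x[:5], verbose=0)
pred_digits = probs.argmax(axis=1)
```

The per-epoch validation metrics are stored in `history.history`, which is where a figure like the 97% mentioned below would be read from.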

✔️ You can get a good validation accuracy of around 97%. One major advantage of CNNs over fully connected networks is that you do not need to flatten the input images to 1D, because convolutional layers work with image data in 2D. This helps retain the “spatial” properties of images. I additionally added more convolution layers and tuned the hyperparameters, which gave me 98%+ accuracy.
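To make the 2D input concrete, here is a minimal sketch of a CNN that takes MNIST-shaped images without flattening them first. The filter count (25) and Dense width (100) are assumed values, not the ones behind the 98%+ result. Note that Keras convolution layers expect a channel axis, so the `(N, 28, 28)` MNIST arrays would need reshaping to `(N, 28, 28, 1)`.

```python
from tensorflow.keras.layers import Conv2D, Dense, Flatten, Input, MaxPool2D
from tensorflow.keras.models import Sequential

model = Sequential([
    Input(shape=(28, 28, 1)),      # 2D grayscale image plus a channel axis
    Conv2D(25, kernel_size=(3, 3), activation="relu"),
    MaxPool2D(pool_size=(2, 2)),
    Flatten(),                     # flatten only after spatial features exist
    Dense(100, activation="relu"),
    Dense(10, activation="softmax"),
])
model.compile(loss="categorical_crossentropy", optimizer="adam",
              metrics=["accuracy"])
```

Flattening still happens, but only after the convolution and pooling layers have used the 2D neighbourhood structure of the pixels.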

Identify CIFAR-10 Images

Now we will classify the CIFAR-10 images: 32x32-pixel color images, with 50,000 training images and 10,000 test images. These images are taken under varying lighting conditions and at different angles, and since they are color images, there are many variations in the color of similar objects (for example, the color of ocean water). If you use the simple CNN architecture from the MNIST example above, you will get a low validation accuracy of around 60%.

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D, MaxPool2D, Dropout, Flatten, Dense

model = Sequential()

model.add(Conv2D(50, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu', input_shape=(32, 32, 3)))  # first convolutional layer, 32x32 RGB input

model.add(Conv2D(75, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu'))  # second convolutional layer

model.add(MaxPool2D(pool_size=(2,2)))

model.add(Dropout(0.25))

model.add(Conv2D(125, kernel_size=(3,3), strides=(1,1), padding='same', activation='relu'))  # third convolutional layer

model.add(MaxPool2D(pool_size=(2,2)))

model.add(Dropout(0.25))

model.add(Flatten())  # flatten the output of the convolutional layers

model.add(Dense(500, activation='relu'))  # hidden layer

model.add(Dropout(0.4))

model.add(Dense(250, activation='relu'))  # hidden layer

model.add(Dropout(0.3))

model.add(Dense(10, activation='softmax'))  # output layer, one unit per CIFAR-10 class

- I add more 2D convolutional layers for a deeper model

- I also add more filters to distinguish more features

- Dropout for regularization (modified)

- I add more dense layers
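To see how the convolution and pooling stack above reshapes a 32x32x3 image before it reaches the dense layers, the arithmetic can be traced in plain Python. The helper `trace_shapes` is a hypothetical name introduced for illustration. It assumes stride-1 convolutions with `padding='same'` (spatial size unchanged) and 2x2 pooling with the default stride (spatial size halved), matching the layers in the model above.

```python
def trace_shapes(height, width, channels, layers):
    """Return the (height, width, channels) shape after each layer.

    Assumes stride-1 'same' convolutions, which keep height and width,
    and non-overlapping pooling, which divides them (rounding down).
    """
    shapes = []
    for kind, value in layers:
        if kind == "conv":
            channels = value                  # value = number of filters
        elif kind == "pool":
            height, width = height // value, width // value
        shapes.append((height, width, channels))
    return shapes


# Conv and pooling layers of the CIFAR-10 model, in order.
layers = [("conv", 50), ("conv", 75), ("pool", 2), ("conv", 125), ("pool", 2)]
shapes = trace_shapes(32, 32, 3, layers)

h, w, c = shapes[-1]
flattened = h * w * c                         # size of the Flatten output
dense_params = flattened * 500 + 500          # weights + biases of Dense(500)

print(shapes)        # [(32, 32, 50), (32, 32, 75), (16, 16, 75), (16, 16, 125), (8, 8, 125)]
print(flattened)     # 8000
print(dense_params)  # 4000500
```

Dropout layers are left out of the trace because they do not change the shape. Most of the model's parameters sit in the first dense layer, which is one reason dropout is applied around it.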

✔️ In conclusion, you can see that the accuracy increases. CIFAR-100 is also available in Keras, which you can use for further practice.
