StyleGAN2 - Official TensorFlow Implementation

A Style-Based Generator Architecture for Generative Adversarial Networks

Development Environment

I used the deep learning server environment provided by Seoul Women's University.

- 64-bit Python 3.6 installation. I recommend Anaconda3 with numpy 1.14.3 or newer.

- TensorFlow 1.10.0 or newer with GPU support.

- One or more high-end NVIDIA GPUs with at least 11GB of DRAM. NVIDIA recommends a DGX-1 with 8 Tesla V100 GPUs.

- NVIDIA driver 391.35 or newer, CUDA toolkit 9.0 or newer, cuDNN 7.3.1 or newer.
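Before installing anything, it can help to confirm that the machine meets the minimum versions listed above. Here is a minimal Python sketch of such a check. REQUIREMENTS, parse_version and meets_minimum are hypothetical helpers written for this post, not part of the StyleGAN code, and the script does not check the GPU, driver, CUDA or cuDNN versions.

```python
import re
import sys

# Minimum versions copied from the list above (hypothetical helper table).
REQUIREMENTS = {
    "python": (3, 6),
    "numpy": (1, 14, 3),
    "tensorflow": (1, 10, 0),
}


def parse_version(text):
    """Turn '1.14.3' or '1.15.0rc1' into a tuple of ints such as (1, 14, 3)."""
    parts = []
    for piece in text.split(".")[:3]:
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, package):
    """True if the installed version string is >= the listed minimum."""
    return parse_version(installed) >= REQUIREMENTS[package]


if __name__ == "__main__":
    py = "%d.%d" % sys.version_info[:2]
    print("python", py, "ok" if meets_minimum(py, "python") else "too old")
    for name in ("numpy", "tensorflow"):
        try:
            version = __import__(name).__version__
        except Exception as exc:  # missing or broken install
            print(name, "could not be imported:", exc)
            continue
        print(name, version, "ok" if meets_minimum(version, name) else "too old")
```

The check only enforces lower bounds. It cannot tell you whether a much newer release still works; the original StyleGAN code base was written for TensorFlow 1.x.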

Do you think these people are real?

The answer is no. These people were generated by NVIDIA's style-based generator, which allows control over different aspects of the image.

Resources

Material related to the StyleGAN paper is available via the following links:

- Paper: https://arxiv.org/abs/1812.04948

- Video: https://youtu.be/kSLJriaOumA

- Code: https://github.com/NVlabs/stylegan

- FFHQ: https://github.com/NVlabs/ffhq-dataset

Additional material is available on Google Drive:

| Path | Description |
|---|---|
| StyleGAN | Main folder. |
| stylegan-paper.pdf | High-quality version of the paper PDF. |
| stylegan-video.mp4 | High-quality version of the result video. |
| images | Example images produced using the generator. |
| representative-images | High-quality images to be used in articles, blog posts, etc. |
| 100k-generated-images | 100,000 generated images for different amounts of truncation. |
| ffhq-1024x1024 | Generated using the Flickr-Faces-HQ dataset at 1024×1024. |
| bedrooms-256x256 | Generated using the LSUN Bedroom dataset at 256×256. |
| cars-512x384 | Generated using the LSUN Car dataset at 512×384. |
| cats-256x256 | Generated using the LSUN Cat dataset at 256×256. |
| videos | Example videos produced using the generator. |
| high-quality-video-clips | Individual segments of the result video as high-quality MP4. |
| ffhq-dataset | Raw data for the Flickr-Faces-HQ dataset. |
| networks | Pre-trained networks as pickled instances of dnnlib.tflib.Network. |
| stylegan-ffhq-1024x1024.pkl | StyleGAN trained with the Flickr-Faces-HQ dataset at 1024×1024. |
| stylegan-celebahq-1024x1024.pkl | StyleGAN trained with the CelebA-HQ dataset at 1024×1024. |
| stylegan-bedrooms-256x256.pkl | StyleGAN trained with the LSUN Bedroom dataset at 256×256. |
| stylegan-cars-512x384.pkl | StyleGAN trained with the LSUN Car dataset at 512×384. |
| stylegan-cats-256x256.pkl | StyleGAN trained with the LSUN Cat dataset at 256×256. |
| metrics | Auxiliary networks for the quality and disentanglement metrics. |
| inception_v3_features.pkl | Standard Inception-v3 classifier that outputs a raw feature vector. |
| vgg16_zhang_perceptual.pkl | Standard LPIPS metric to estimate perceptual similarity. |
| celebahq-classifier-00-male.pkl | Binary classifier trained to detect a single attribute of CelebA-HQ. |
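The 100k-generated-images folder is organized by amount of truncation. The truncation trick moves each intermediate latent w toward the average latent w_avg by a factor psi, trading variety for more typical, higher-quality samples. Below is a minimal NumPy sketch of that formula. truncate is a hypothetical helper, and the zero vector used as w_avg is only a placeholder; a trained StyleGAN keeps its own running average. The official generator can also restrict truncation to the coarse layers, which this sketch ignores.

```python
import numpy as np


def truncate(w, w_avg, psi):
    """Truncation trick: w' = w_avg + psi * (w - w_avg).

    psi = 1.0 leaves w unchanged; psi = 0.0 maps every sample to w_avg.
    """
    w = np.asarray(w, dtype=np.float64)
    w_avg = np.asarray(w_avg, dtype=np.float64)
    return w_avg + psi * (w - w_avg)


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    w_batch = rng.standard_normal((4, 512))  # 4 stand-in latents of size 512
    w_avg = np.zeros(512)                    # placeholder average latent
    for psi in (1.0, 0.7, 0.5):
        dist = np.linalg.norm(truncate(w_batch, w_avg, psi) - w_avg, axis=1).mean()
        # The distance to w_avg shrinks linearly with psi.
        print("psi=%.1f  mean distance to w_avg: %.2f" % (psi, dist))
```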

Training StyleGAN Networks

Once the datasets are set up, you can train your own StyleGAN networks as follows:

- Edit train.py to specify the dataset and training configuration by uncommenting or editing specific lines.

- Run the training script with python train.py.

- The results are written to a newly created directory results/<ID>-<DESCRIPTION>.

- The training may take several days (or weeks) to complete, depending on the configuration.
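The results/<ID>-<DESCRIPTION> naming in the steps above means every run gets a fresh, numbered folder. As an illustration, here is a hypothetical helper that picks the next folder name: the highest existing numeric prefix plus one. The five-digit zero padding is my assumption, not something stated above.

```python
import os
import re


def next_run_dir(result_dir, description):
    """Return the path result_dir/<ID>-<DESCRIPTION> for a new run.

    Hypothetical helper: ID is one more than the largest numeric prefix among
    existing run directories (0 if there are none), zero-padded to 5 digits.
    The directory itself is not created.
    """
    ids = []
    if os.path.isdir(result_dir):
        for name in os.listdir(result_dir):
            match = re.match(r"(\d+)-", name)
            # Only count directories whose name starts with "<digits>-".
            if match and os.path.isdir(os.path.join(result_dir, name)):
                ids.append(int(match.group(1)))
    run_id = max(ids) + 1 if ids else 0
    return os.path.join(result_dir, "%05d-%s" % (run_id, description))


if __name__ == "__main__":
    print(next_run_dir("results", "my-first-run"))
```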

By default, train.py is configured to train the highest-quality StyleGAN (configuration F in Table 1 of the paper) for the FFHQ dataset at 1024×1024 resolution using 8 GPUs. Note that the authors used 8 GPUs in all of their experiments. Training with fewer GPUs may not produce identical results, so if you wish to compare against their technique, using the same number of GPUs is strongly recommended.

Expected training times for the default configuration using Tesla V100 GPUs:

| GPUs | 1024×1024 | 512×512 | 256×256 |
|---|---|---|---|
| 1 | 41 days 4 hours | 24 days 21 hours | 14 days 22 hours |
| 2 | 21 days 22 hours | 13 days 7 hours | 9 days 5 hours |
| 4 | 11 days 8 hours | 7 days 0 hours | 4 days 21 hours |
| 8 | 6 days 14 hours | 4 days 10 hours | 3 days 8 hours |
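To read the table as scaling numbers, convert each entry to hours and divide the single-GPU time by the multi-GPU time. The short script below does that arithmetic for two of the columns. The values are copied from the table, and to_hours and scaling are just illustrative helpers.

```python
import re

# Values copied from the 1024x1024 and 256x256 columns of the table above.
TIMES = {
    "1024x1024": {1: "41 days 4 hours", 2: "21 days 22 hours",
                  4: "11 days 8 hours", 8: "6 days 14 hours"},
    "256x256": {1: "14 days 22 hours", 2: "9 days 5 hours",
                4: "4 days 21 hours", 8: "3 days 8 hours"},
}


def to_hours(text):
    """Convert a duration such as '41 days 4 hours' into hours."""
    days = re.search(r"(\d+)\s*days?", text)
    hours = re.search(r"(\d+)\s*hours?", text)
    return (int(days.group(1)) if days else 0) * 24 + (int(hours.group(1)) if hours else 0)


def scaling(column):
    """Yield (gpus, speedup vs. 1 GPU, parallel efficiency) for one column."""
    base = to_hours(TIMES[column][1])
    for gpus, text in sorted(TIMES[column].items()):
        speedup = base / to_hours(text)
        yield gpus, speedup, speedup / gpus


if __name__ == "__main__":
    for column in TIMES:
        for gpus, speedup, efficiency in scaling(column):
            print(f"{column} {gpus} GPU(s): {speedup:.2f}x speedup, {efficiency:.0%} efficiency")
```

For 1024×1024, 8 GPUs finish about 6.25x faster than one (roughly 78% efficiency), while at 256×256 the 8-GPU speedup is only about 4.5x (roughly 56%), so the extra GPUs pay off most at high resolution.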

Training takes a long time, so I recommend securing a powerful NVIDIA CUDA GPU environment before you start.

Datasets for training

The training and evaluation scripts operate on datasets stored as multi-resolution TFRecords. Each dataset is represented by a directory containing the same image data in several resolutions to enable efficient streaming. There is a separate *.tfrecords file for each resolution, and if the dataset contains labels, they are stored in a separate file as well. By default, the scripts expect to find the datasets at datasets/<NAME>/<NAME>-<RESOLUTION>.tfrecords. The directory can be changed by editing config.py:

```python
result_dir = 'results'
data_dir = 'datasets'
cache_dir = 'cache'
```
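A quick way to confirm that a dataset directory is complete is to list the per-resolution files it should contain. The sketch below assumes a naming detail not spelled out above: in the dataset_tool.py I have looked at, the <RESOLUTION> part is written as r plus the log2 of the resolution, so a 1024×1024 dataset holds ffhq-r02.tfrecords (4×4) through ffhq-r10.tfrecords (1024×1024). expected_tfrecords and missing_files are hypothetical helpers.

```python
import math
import os


def expected_tfrecords(data_dir, name, max_resolution):
    """List the per-resolution TFRecords files a dataset directory should hold.

    Assumption: files are named <NAME>-rNN.tfrecords, where NN = log2(resolution),
    from 4x4 (r02) up to the full resolution.
    """
    exponent = int(round(math.log2(max_resolution)))
    if 2 ** exponent != max_resolution:
        raise ValueError("resolution must be a power of two")
    return [os.path.join(data_dir, name, "%s-r%02d.tfrecords" % (name, e))
            for e in range(2, exponent + 1)]


def missing_files(data_dir, name, max_resolution):
    """Return the expected files that do not exist on disk."""
    return [path for path in expected_tfrecords(data_dir, name, max_resolution)
            if not os.path.isfile(path)]


if __name__ == "__main__":
    print(missing_files("datasets", "ffhq", 1024))
```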

To obtain the FFHQ dataset (datasets/ffhq), please refer to the Flickr-Faces-HQ repository.

To obtain the CelebA-HQ dataset (datasets/celebahq), please refer to the Progressive GAN repository.

To obtain other datasets, including LSUN, please consult their corresponding project pages. The datasets can be converted to multi-resolution TFRecords using the provided dataset_tool.py:

```
> python dataset_tool.py create_lsun datasets/lsun-bedroom-full ~/lsun/bedroom_lmdb --resolution 256
> python dataset_tool.py create_lsun_wide datasets/lsun-car-512x384 ~/lsun/car_lmdb --width 512 --height 384
> python dataset_tool.py create_lsun datasets/lsun-cat-full ~/lsun/cat_lmdb --resolution 256
> python dataset_tool.py create_cifar10 datasets/cifar10 ~/cifar10
> python dataset_tool.py create_from_images datasets/custom-dataset ~/custom-images
```
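create_from_images expects a folder of images that share one square, power-of-two resolution; dataset_tool.py reads that resolution from the first image and reports an error if it is not square or not a power of two. A hypothetical pre-check like the one below can catch bad inputs before a long conversion. It takes (width, height) pairs, which you could collect with Pillow's Image.open(path).size, and it is stricter than the tool because it checks every image, not only the first.

```python
def check_image_sizes(sizes):
    """Validate (width, height) pairs before running create_from_images.

    Hypothetical pre-check: all images must have the same size, be square,
    and have a power-of-two side length. Returns the common resolution.
    """
    if not sizes:
        raise ValueError("no images found")
    width, height = sizes[0]
    for w, h in sizes:
        if (w, h) != (width, height):
            raise ValueError("all images must have the same size, got %dx%d and %dx%d"
                             % (width, height, w, h))
    if width != height:
        raise ValueError("images must be square, got %dx%d" % (width, height))
    if width <= 0 or width & (width - 1):
        raise ValueError("resolution must be a power of two, got %d" % width)
    return width


if __name__ == "__main__":
    print(check_image_sizes([(256, 256), (256, 256), (256, 256)]))
```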

Evaluating Quality and Disentanglement

The quality and disentanglement metrics used in the paper can be evaluated using run_metrics.py. By default, the script will evaluate the Fréchet Inception Distance (fid50k) for the pre-trained FFHQ generator and write the results into a newly created directory under results. The exact behavior can be changed by uncommenting or editing specific lines in run_metrics.py.
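fid50k compares generated images with real ones through Inception-v3 features (inception_v3_features.pkl in the resources table): fit a Gaussian to each set of feature vectors and compute the Fréchet distance FID = ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). Lower is better. The sketch below implements only that last step on feature arrays you already have; extracting the Inception features is not shown. Common implementations use scipy.linalg.sqrtm for the matrix square root, whereas this one uses an equivalent eigendecomposition so it needs only NumPy.

```python
import numpy as np


def _sqrtm_psd(matrix):
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    eigvals, eigvecs = np.linalg.eigh(matrix)
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative round-off
    return (eigvecs * np.sqrt(eigvals)) @ eigvecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """FID = ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2)).

    Tr((S1 S2)^(1/2)) is computed as Tr((S1^(1/2) S2 S1^(1/2))^(1/2)),
    which has the same eigenvalues but keeps the matrix symmetric.
    """
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    sigma1, sigma2 = np.atleast_2d(sigma1), np.atleast_2d(sigma2)
    root1 = _sqrtm_psd(sigma1)
    middle = root1 @ sigma2 @ root1
    middle = (middle + middle.T) / 2.0  # symmetrize against round-off
    trace_sqrt = np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)


def fid_from_features(feats_real, feats_fake):
    """Fit a Gaussian to each (N, D) feature array and compare them."""
    mu_r, mu_f = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    sig_r = np.cov(feats_real, rowvar=False)
    sig_f = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu_r, sig_r, mu_f, sig_f)


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    real = rng.standard_normal((5000, 16))
    fake = rng.standard_normal((5000, 16)) + 0.5  # shifted stand-in "generated" features
    print("FID between shifted Gaussians:", fid_from_features(real, fake))
```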

Expected evaluation time and results for the pre-trained FFHQ generator using one Tesla V100 GPU:

| Metric | Time | Result | Description |
|---|---|---|---|
| fid50k | 16 min | 4.4159 | Fréchet Inception Distance using 50,000 images. |
| ppl_zfull | 55 min | 664.8854 | Perceptual Path Length for full paths in Z. |
| ppl_wfull | 55 min | 233.3059 | Perceptual Path Length for full paths in W. |
| ppl_zend | 55 min | 666.1057 | Perceptual Path Length for path endpoints in Z. |
| ppl_wend | 55 min | 197.2266 | Perceptual Path Length for path endpoints in W. |
| ls | 10 hours | z: 165.0106, w: 3.7447 | Linear Separability in Z and W. |
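The ppl_* rows measure perceptual path length: take two latents, step a tiny distance epsilon along the interpolation path between them, and measure how much the image changes, divided by epsilon². The z rows interpolate in Z with slerp and the w rows interpolate in W with lerp; "full" samples the position t uniformly in [0, 1], while "end" looks at the path endpoints (this sketch uses t = 0). The code follows that recipe with two stand-ins that are my own assumptions: a toy linear generator instead of StyleGAN, and squared Euclidean distance instead of the LPIPS network (vgg16_zhang_perceptual.pkl) used by the real metric. epsilon = 1e-4 is the value given in the StyleGAN paper.

```python
import numpy as np


def normalize(v):
    """Scale each row to unit length."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def slerp(a, b, t):
    """Spherical interpolation between rows of a and b (used for Z)."""
    a, b = normalize(a), normalize(b)
    d = np.sum(a * b, axis=-1, keepdims=True)
    p = t * np.arccos(np.clip(d, -1.0, 1.0))
    c = normalize(b - d * a)
    return a * np.cos(p) + c * np.sin(p)


def lerp(a, b, t):
    """Linear interpolation between rows of a and b (used for W)."""
    return a + (b - a) * t


def squared_l2(x, y):
    """Stand-in for LPIPS: squared Euclidean distance per sample."""
    return np.sum((x - y) ** 2, axis=-1)


def path_length(generator, v0, v1, interp, distance=squared_l2,
                epsilon=1e-4, sampling="full", rng=None):
    """Monte Carlo estimate of E[d(G(x(t)), G(x(t + eps))) / eps^2]."""
    rng = np.random.RandomState(0) if rng is None else rng
    n = v0.shape[0]
    if sampling == "full":
        t = rng.uniform(0.0, 1.0, size=(n, 1))
    else:  # "end": evaluate at the start of each path
        t = np.zeros((n, 1))
    img_t = generator(interp(v0, v1, t))
    img_t_eps = generator(interp(v0, v1, t + epsilon))
    return float(np.mean(distance(img_t, img_t_eps) / epsilon ** 2))


if __name__ == "__main__":
    rng = np.random.RandomState(1)
    weights = rng.standard_normal((64, 8))  # toy linear "generator": 8-D latent -> 64 "pixels"

    def toy_generator(x):
        return x @ weights.T

    z0, z1 = rng.standard_normal((2, 1000, 8))
    print("toy PPL in Z (slerp):", path_length(toy_generator, z0, z1, slerp))
    print("toy PPL in W (lerp): ", path_length(toy_generator, z0, z1, lerp))
```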

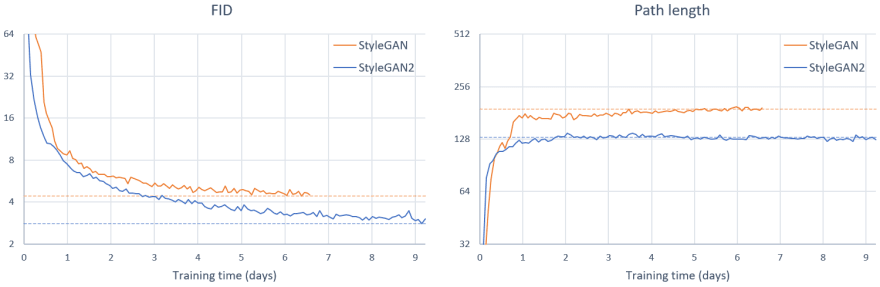

Training curves for FFHQ config F (StyleGAN2) compared to original StyleGAN using 8 GPUs:

Path Length Regularization to Smooth the Latent Space

The style-based GAN architecture (StyleGAN) yields state-of-the-art results in data-driven unconditional generative image modeling. We expose and analyze several of its characteristic artifacts, and propose changes in both model architecture and training methods to address them. In particular, we redesign generator normalization, revisit progressive growing, and regularize the generator to encourage good conditioning in the mapping from latent vectors to images. In addition to improving image quality, this path length regularizer yields the additional benefit that the generator becomes significantly easier to invert. This makes it possible to reliably detect if an image is generated by a particular network. We furthermore visualize how well the generator utilizes its output resolution, and identify a capacity problem, motivating us to train larger models for additional quality improvements. Overall, our improved model redefines the state of the art in unconditional image modeling, both in terms of existing distribution quality metrics as well as perceived image quality.
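The path length regularizer mentioned above penalizes E[(||J_w^T y||₂ − a)²], where J_w is the Jacobian of the generator output with respect to the intermediate latent w, y is a random image with normally distributed pixels, and a is a moving average of the observed lengths. The goal is that a fixed-size step in W changes the image by a fixed amount, whatever the direction. Below is a minimal NumPy sketch of that penalty under a loud simplification: the generator is a toy linear map g(w) = A w, whose Jacobian is exactly A. The 1/sqrt(H·W) scaling of y and the 0.01 decay for a follow my reading of the official StyleGAN2 code, and PathLengthRegularizer is a hypothetical class, not part of that code.

```python
import numpy as np


class PathLengthRegularizer:
    """Sketch of StyleGAN2-style path length regularization.

    Uses a toy linear generator g(w) = A @ w, for which the Jacobian J_w is
    exactly A, so J_w^T y = A^T y. A real network would get J_w^T y via
    autodiff, as the gradient of sum(g(w) * y) with respect to w.
    """

    def __init__(self, decay=0.01):
        self.decay = decay   # EMA rate for the target length a
        self.pl_mean = 0.0   # running estimate of a

    def penalty(self, A, batch, height, width, rng):
        """Return (mean penalty, per-sample lengths) for one minibatch."""
        num_pixels = height * width
        # Random images y with N(0, 1) pixels, scaled by 1/sqrt(H*W) so the
        # typical length does not grow with the output resolution.
        y = rng.standard_normal((batch, num_pixels)) / np.sqrt(num_pixels)
        jt_y = y @ A                            # row i is (A^T y_i)^T
        lengths = np.linalg.norm(jt_y, axis=1)  # ||J_w^T y||_2 per sample
        # Move the target a toward the current batch mean (exponential moving average).
        self.pl_mean += self.decay * (lengths.mean() - self.pl_mean)
        # Penalize each length's deviation from the target a.
        return float(np.mean((lengths - self.pl_mean) ** 2)), lengths


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    A = rng.standard_normal((16 * 16, 32))  # toy generator: 32-D w -> 16x16 image
    reg = PathLengthRegularizer()
    for _ in range(500):
        loss, _ = reg.penalty(A, batch=8, height=16, width=16, rng=rng)
    print("target length a = %.3f, last penalty = %.4f" % (reg.pl_mean, loss))
```

In real training this penalty is added to the generator loss with a weight, and the StyleGAN2 paper evaluates its regularizers lazily, only once every few minibatches, to save compute.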

Conclusion

StyleGAN2 was my capstone design final project. In my next post, I will cover GAN basics and StyleGAN2 as proposed in NVIDIA's paper, focusing mainly on the key metrics and normalization. See you then!

References

https://arxiv.org/abs/1812.04948 (A Style-Based Generator Architecture for Generative Adversarial Networks)

https://arxiv.org/pdf/1912.04958.pdf (Analyzing and Improving the Image Quality of StyleGAN)

https://medium.com/analytics-vidhya/from-gan-basic-to-stylegan2-680add7abe82 (From GAN basic to StyleGAN2)
