📌 Building a Slack Agent with Agentica & OpenAI

1. Introduction

- GitHub Repository Link: https://github.com/wrtnlabs/agentica.slack.bot

The advancement of AI technology has brought significant innovation across various industries and tasks. In particular, integrating AI into workplace tools plays a crucial role in automating everyday tasks efficiently and enhancing human work capabilities. One of the key areas of this transformation is integrating AI into communication tools like Slack to create smarter and more productive work environments.

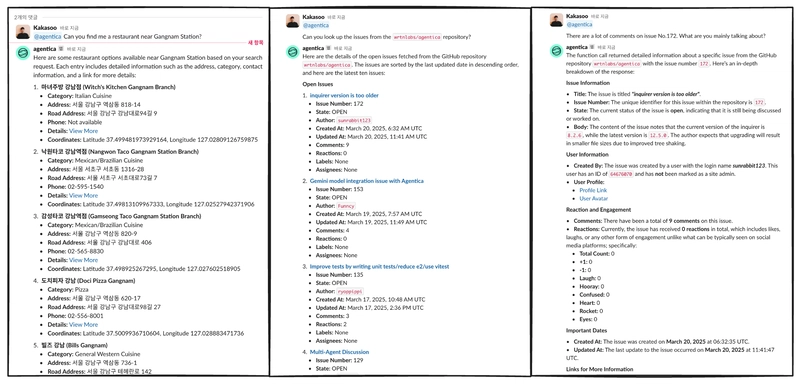

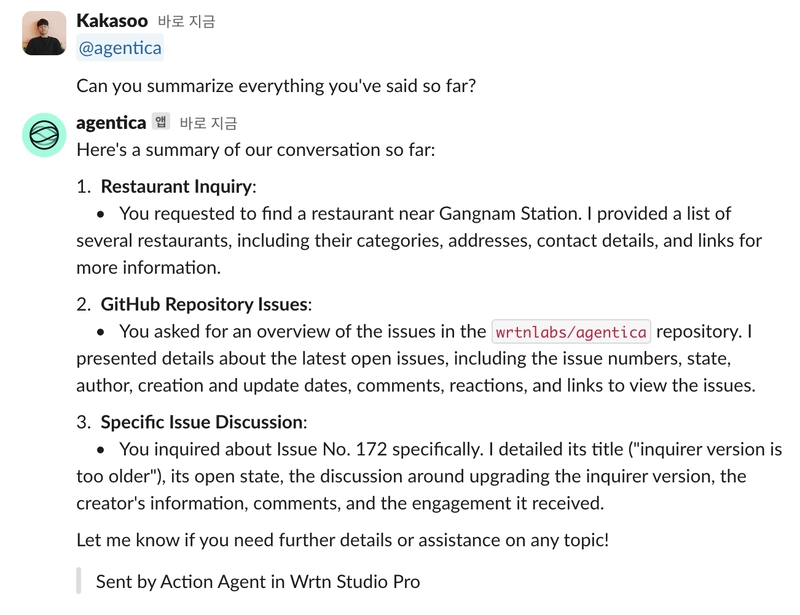

Agentica is a TypeScript-based AI framework that simplifies the process of building and operating conversational bots within platforms like Slack. Leveraging LLMs (Large Language Models), it enables seamless, context-aware interactions based on Slack messages, providing users with a more efficient and natural experience. Notably, Agentica manages conversation history automatically through Slack threads without relying on external databases, allowing the AI bot to maintain continuous and contextually relevant conversations.

This document explores how to build Slack bots using Agentica, offering insights into how AI can be leveraged in the workplace. Additionally, it highlights why AI integration is important for service developers and explores potential future developments.

1.1. What is Agentica?

Agentica is an open-source TypeScript library that simplifies AI development by enabling easy function calling with LLMs (Large Language Models). It allows developers to define AI agents using TypeScript classes or Swagger (OpenAPI) definitions, making AI integration accessible for both frontend and backend developers. By leveraging the TypeScript compiler, Agentica enhances reliability by automatically validating and correcting AI-generated responses. With Agentica, developers can build AI-powered applications that not only generate text but also execute real-world actions, such as sending emails, managing files, and interacting with APIs.

1.1.1. Learn More About Agentica

For a more detailed understanding of Agentica, visit the official tutorial: Agentica Tutorial.

The tutorial provides practical examples, including how to integrate Agentica with file systems and popular services. You don’t need to understand complex AI concepts or write intricate code.

- Frontend Developers: If you know TypeScript and can work with classes, you’re good to go.

- Backend Developers: Simply insert your Swagger definition into the provided code snippets, and Agentica will handle the rest.

1.2. Why Use Agentica for Slack Agents?

1.2.1. Simplified Development of Slack Agents

Using Agentica greatly simplifies the development of Slack agents, as the example code below shows. To integrate with a complex system like Slack, all you need is a TypeScript class (here, `SlackService` from the Slack connector). You pass the class as the type argument to `typia.llm.application<...>()` in the `application` field, and provide an instance of that class in the `execute` field. It's that simple.

```typescript
import { Agentica } from "@agentica/core";
import typia from "typia";
import dotenv from "dotenv";
import { OpenAI } from "openai";
import { SlackService } from "@wrtnlabs/connector-slack";

dotenv.config(); // load OPENAI_API_KEY (and other secrets) from .env

export const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({
      apiKey: process.env.OPENAI_API_KEY!,
    }),
    model: "gpt-4o-mini",
  },
  controllers: [
    {
      name: "Slack Connector",
      protocol: "class",
      // typia generates the function-calling schema from SlackService's types at compile time
      application: typia.llm.application<SlackService, "chatgpt">(),
      // the instance whose methods run when the LLM calls one of those functions
      execute: new SlackService(),
    },
  ],
});

const main = async () => {
  console.log(await agent.conversate("What can you do?"));
};

main();
```

This structure is simple and avoids unnecessary configuration, which makes development much more convenient. This matters especially for systems like Slack that require multiple API integrations, because Agentica makes it easy to combine different APIs. It is made possible by TypeScript's type system: typia reads the class's method signatures at compile time and generates the function-calling schema from them, so you never write that schema by hand.

Additionally, Agentica has built-in history management, so developers don't need to manually manage the history of requests and responses. It automatically tracks this for you, and you can modify or manage the history flexibly, which makes the process smoother.

Thus, Agentica is not only useful for developing Slack agents but also for combining various tools to efficiently manage complex systems. Developers can eliminate unnecessary complexity and focus on core logic, greatly improving productivity.

1.3. Expected Benefits and Key Features

The key benefits of using Agentica to develop Slack agents are as follows:

1.3.1. Simplified Development Process

With Agentica, you don't need to deal directly with the Slack API. By leveraging TypeScript classes and clear type definitions, you can easily integrate Slack services. This significantly speeds up development, as you don't need to manually parse complex responses or handle raw API requests. Prebuilt connectors, such as `@wrtnlabs/connector-slack`, can be found here:

- https://www.npmjs.com/search?q=%40wrtnlabs%2Fconnector

- https://github.com/wrtnlabs/connectors/tree/main/packages

1.3.2. Strong Type System and Inference with TypeScript

TypeScript's type system is a major advantage when using Agentica. With strong type inference, you can handle complex integrations like Slack with confidence. Because typia derives validators from your types, the arguments the LLM produces for a function call are checked against the parameter types before the function runs, which catches malformed calls instead of letting them fail inside your code.

1.3.3. Seamless API Integration

Slack requires multiple APIs to handle different tasks. Agentica allows for smooth integration of multiple APIs, abstracting the complexity of managing various requests and responses. Developers can focus on building the logic of the agent without worrying about how systems interact with each other.

1.3.4. Developer-Friendly Documentation and Tutorials

Agentica provides easy-to-follow documentation and tutorials. With clear examples and step-by-step guides, even developers with limited AI integration experience can build powerful Slack agents without going through a steep learning curve.

2. Understanding the Tech Stack

2.1. How Agentica Simplifies LLM Function Calls

Agentica significantly simplifies making LLM function calls. Traditionally, interacting with an LLM API involves complex authentication, payload handling, and response parsing, but Agentica abstracts all of this complexity. By using Agentica, developers can focus on writing simple TypeScript classes, while the tool handles the backend complexities. With the integration of tools like typia, developers can ensure that function arguments and responses are validated with strong typing, reducing errors and improving code stability during function calls.
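To make the abstraction concrete, here is a hand-written sketch of the plumbing that Agentica and typia take off your hands: parse the arguments the model produced, validate them against the method's parameter type, and only then call the method. Everything here is hypothetical and simplified. The `ToolCall` shape and the `Calculator` class are illustrative only, not OpenAI's wire format or Agentica's internals, and in Agentica the validator is generated by typia from your types rather than written by hand.

```typescript
// Hypothetical, simplified tool-call shape (not OpenAI's wire format).
export interface ToolCall {
  name: string; // function the model chose
  arguments: string; // JSON-encoded arguments produced by the model
}

// An illustrative class exposed to the model.
export class Calculator {
  add(input: { a: number; b: number }): number {
    return input.a + input.b;
  }
}

// Written by hand here; typia would generate an equivalent check from the parameter type.
export function isAddInput(value: unknown): value is { a: number; b: number } {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v["a"] === "number" && typeof v["b"] === "number";
}

export type DispatchResult = { ok: true; value: number } | { ok: false; error: string };

export function dispatch(call: ToolCall, target: Calculator): DispatchResult {
  if (call.name !== "add") return { ok: false, error: `unknown function: ${call.name}` };
  let parsed: unknown;
  try {
    parsed = JSON.parse(call.arguments);
  } catch {
    return { ok: false, error: "arguments are not valid JSON" };
  }
  // Reject malformed arguments instead of letting them reach the method.
  if (!isAddInput(parsed)) return { ok: false, error: "expected { a: number; b: number }" };
  return { ok: true, value: target.add(parsed) };
}
```

Returning a descriptive error instead of throwing is what makes a correction loop possible: the message can be sent back to the model so that it retries with fixed arguments, which is the kind of validation and correction described in section 1.1.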

2.2. Overview of Slack API & Event Subscriptions

The Slack API is a powerful tool for integrating external services with Slack, enabling users to send messages, manage channels, and automate workflows within Slack. It allows developers to build custom applications, bots, and workflows that interact with Slack channels, users, and messages. The API provides a range of endpoints for tasks like posting messages, managing channels, sending files, and handling user interactions.

One key feature of the Slack API is Event Subscriptions. Event subscriptions allow developers to listen for specific events happening in Slack, such as messages being posted in a channel or a user joining a workspace. This real-time event-driven model enables applications to react instantly to changes in Slack, making it ideal for building interactive and responsive bots and integrations.

When subscribing to events, developers need to specify the types of events they want to listen to, such as message events, user events, or channel events. These events are then sent to a specified Request URL where the application can process the events and take appropriate actions, such as sending a response back to Slack or triggering other workflows. Event Subscriptions can greatly enhance the functionality and interactivity of Slack integrations by providing real-time data.
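The two payload shapes a mention bot deals with can be modeled in a few TypeScript types. This sketch covers only the fields used later in this article (Slack sends more), and the `classify` helper is hypothetical: it separates the one-time `url_verification` handshake from `event_callback` deliveries and keeps only `app_mention` events.

```typescript
// Only the fields used in this article are modeled; Slack's payloads contain more.
export interface UrlVerification {
  type: "url_verification";
  challenge: string; // must be echoed back to confirm the Request URL
}

export interface AppMentionEvent {
  type: "app_mention";
  user: string; // ID of the user who mentioned the bot
  text: string; // message text, including the "<@BOT_USER_ID>" mention token
  ts: string; // timestamp that identifies this message
  channel: string;
  thread_ts?: string; // present when the mention was posted inside a thread
}

export interface EventCallback {
  type: "event_callback";
  event_id: string; // unique per event; useful for de-duplication
  event: AppMentionEvent | { type: string };
}

export type SlackPayload = UrlVerification | EventCallback;

export type Action =
  | { kind: "challenge"; challenge: string }
  | { kind: "mention"; eventId: string; event: AppMentionEvent }
  | { kind: "ignore" };

// Hypothetical router: decide what the bot should do with an incoming payload.
export function classify(payload: SlackPayload): Action {
  if (payload.type === "url_verification") {
    return { kind: "challenge", challenge: payload.challenge };
  }
  if (payload.event.type === "app_mention") {
    // the generic `{ type: string }` member cannot be narrowed by the check above, hence the cast
    return { kind: "mention", eventId: payload.event_id, event: payload.event as AppMentionEvent };
  }
  return { kind: "ignore" };
}
```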

3. Setting Up the Slack Bot

3.1. Creating and Configuring a Slack App

To integrate your agent as a Slack bot, the first step is to create a Slack app. Follow these steps:

- Create a Slack App:
  - Visit the Slack API website and click on "Create New App."
  - Choose "From scratch" and enter a name for your app and the workspace where it will be installed.
  - Once the app is created, you'll be directed to your app's settings page.

- Configure Bot Permissions:

  Under "OAuth & Permissions," set the necessary scopes for your bot to interact with Slack. For example, to listen for mentions and respond, the `app_mentions:read` and `chat:write` scopes are required. Additionally, I recommend including the following scopes to give your bot broader access to Slack features:

  - `channels:read`: To view basic information about public channels.
  - `channels:history`: To read messages in public channels.
  - `users.profile:read`: To read users' profile information.
  - `im:read`: To view basic information about direct messages.
  - `groups:read`: To view basic information about private channels.
  - `chat:write`: To send messages.
  - `users:read`: To read user information.
  - `usergroups:read`: To access user group information.
  - `files:read`: To access files in Slack.
  - `team:read`: To read team-level information.

  These scopes give your bot the permissions it needs to interact smoothly within your Slack workspace.

- Set Up Bot Profile:
  - In the "App Home" section, you can customize the bot's display name and avatar. This helps personalize the bot's presence in Slack channels.

- Note your app's credentials:
  - Make sure to record the Bot User OAuth Token from the "OAuth & Permissions" page, as this will be needed for authenticating API requests from your bot.
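Before wiring up events, it can help to fail fast when a credential or scope is missing. The sketch below is a hypothetical startup check, not part of Agentica or the Slack connector. `SLACK_BOT_TOKEN` is an assumed variable name (only `OPENAI_API_KEY` appears in this article's examples), and the scope check relies on the `x-oauth-scopes` header that Slack Web API responses carry, which lists the scopes granted to the token.

```typescript
// Hypothetical startup checks; SLACK_BOT_TOKEN is an assumed variable name.
export interface BotConfig {
  openaiApiKey: string;
  slackBotToken: string;
}

// Reads credentials from an env-like record, e.g. process.env after dotenv.config().
export function loadConfig(env: Record<string, string | undefined>): BotConfig {
  const openaiApiKey = env["OPENAI_API_KEY"];
  const slackBotToken = env["SLACK_BOT_TOKEN"];
  if (!openaiApiKey) throw new Error("OPENAI_API_KEY is not set");
  if (!slackBotToken) throw new Error("SLACK_BOT_TOKEN is not set");
  // Bot User OAuth Tokens start with "xoxb-"; pasting a user token ("xoxp-") is a common mix-up.
  if (!slackBotToken.startsWith("xoxb-")) {
    throw new Error("SLACK_BOT_TOKEN does not look like a Bot User OAuth Token (xoxb-...)");
  }
  return { openaiApiKey, slackBotToken };
}

// The scopes recommended in the "Configure Bot Permissions" step.
export const RECOMMENDED_SCOPES: readonly string[] = [
  "app_mentions:read",
  "chat:write",
  "channels:read",
  "channels:history",
  "users.profile:read",
  "im:read",
  "groups:read",
  "users:read",
  "usergroups:read",
  "files:read",
  "team:read",
];

// `grantedHeader` is the comma-separated value of the x-oauth-scopes response header.
// Returns the required scopes that the token does not have.
export function missingScopes(grantedHeader: string, required: readonly string[] = RECOMMENDED_SCOPES): string[] {
  const granted = new Set(
    grantedHeader
      .split(",")
      .map((s) => s.trim())
      .filter((s) => s.length > 0),
  );
  return required.filter((scope) => !granted.has(scope));
}
```

For example, you could call the Web API's `auth.test` method with the bot token and pass the response's `x-oauth-scopes` header value to `missingScopes`; an empty result means every recommended scope was granted.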

3.2. Enabling Event Subscriptions

Event Subscriptions in Slack allow your bot to react to events such as mentions, messages, or channel activity. To set up event subscriptions for your Slack bot, follow these steps:

- Enable Event Subscriptions:
  - In your Slack app's settings, navigate to the "Event Subscriptions" section.
  - Toggle the switch to enable event subscriptions. This allows your bot to listen for specific events happening in your Slack workspace.

- Provide Request URL:
  - Enter a Request URL. This is the endpoint where Slack sends event data, that is, the location where your server listens for events.
  - If you are testing locally, you can use a service like ngrok to expose your local server to the internet.
  - Ensure that your server can respond to Slack's verification request by returning the `challenge` parameter sent by Slack to confirm your endpoint.

- Subscribe to Events:
  - Under "Subscribe to Bot Events," select events that are relevant to your agent. For instance, you can subscribe to the `app_mention` event, which triggers when your bot is mentioned in a Slack channel.
  - This ensures that your bot receives messages whenever it is mentioned in Slack.


By completing these steps, your bot will be able to listen for Slack events and respond to them in real-time, allowing you to create a fully interactive experience for users.
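For local testing, the Request URL only needs a small HTTP endpoint. Below is a minimal sketch using Node's built-in `node:http` module; that choice is an assumption, and the bot in the repository may use a different server framework. It echoes the `challenge` for `url_verification` requests and acknowledges everything else right away, since Slack expects a 2xx response within three seconds and retries otherwise. `respond` and `startServer` are hypothetical names.

```typescript
import { createServer, type IncomingMessage, type ServerResponse, type Server } from "node:http";

export interface HttpReply {
  status: number;
  contentType: string;
  body: string;
}

// Pure decision: echo the challenge for url_verification, acknowledge everything else.
export function respond(payload: unknown): HttpReply {
  const p = payload as { type?: unknown; challenge?: unknown } | null;
  if (p?.type === "url_verification" && typeof p.challenge === "string") {
    return { status: 200, contentType: "text/plain", body: p.challenge };
  }
  // Acknowledge immediately; slow work (LLM calls) should run after the response is sent.
  return { status: 200, contentType: "text/plain", body: "" };
}

// Minimal server for local testing (expose it with ngrok). Request signature verification is omitted.
export function startServer(port: number): Server {
  const server = createServer((req: IncomingMessage, res: ServerResponse) => {
    let raw = "";
    req.on("data", (chunk) => {
      raw += chunk;
    });
    req.on("end", () => {
      let payload: unknown = null;
      try {
        payload = JSON.parse(raw);
      } catch {
        // not JSON: continue with payload = null
      }
      const reply = respond(payload);
      res.writeHead(reply.status, { "Content-Type": reply.contentType });
      res.end(reply.body);
    });
  });
  server.listen(port);
  return server;
}
```

Keeping the decision in a pure `respond` function makes the handshake easy to test without opening a socket; `startServer` only moves bytes in and out.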

4. Building AI-Powered Slack Features

4.1. Setting Up Agentica in a TypeScript Project

There are multiple ways to set up Agentica in a TypeScript project, depending on your preferences and requirements. Here are two common approaches:

- Using the Agentica CLI:

  Quickly set up a fully functional Slack agent by running the following command:

  ```shell
  npx agentica start slack-agent
  ```

  The CLI guides you through the setup process, including installing the required packages, choosing your package manager, selecting the SLACK controller, and entering your `OPENAI_API_KEY`. Once done, Agentica automatically generates the necessary code, creates a `.env` file, and installs all dependencies.

- Using a Pre-built Repository:

  Alternatively, you can clone the wrtnlabs/agentica.slack.bot repository, configure the environment variables, and get started with a pre-made solution.

4.2. Defining AI Agent Functions with Type Annotations

You can define your AI agent’s functionality by creating TypeScript classes and specifying the input/output types. Here’s a simplified example of defining a file system agent class:

```typescript
import { Agentica } from "@agentica/core";
import dotenv from "dotenv";
import fs from "fs";
import OpenAI from "openai";
import typia from "typia";

dotenv.config();

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

// Each method becomes a function the LLM can call; typia.llm.application below
// turns the parameter and return types into the function-calling schema.
class FsClass {
  // Directory of the running module (CommonJS only).
  async __dirname(): Promise<string> {
    return __dirname;
  }

  async readdirSync(input: { path: string }): Promise<string[]> {
    return fs.readdirSync(input.path);
  }

  async readFileSync(input: { path: string }): Promise<string> {
    return fs.readFileSync(input.path, { encoding: "utf-8" });
  }

  async writeFileSync(input: { file: string; data: string }): Promise<void> {
    fs.writeFileSync(input.file, input.data);
  }
}

export const FileSystemAgent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: openai,
    model: "gpt-4o-mini",
  },
  controllers: [
    {
      name: "File Connector",
      protocol: "class",
      application: typia.llm.application<FsClass, "chatgpt">(),
      execute: new FsClass(),
    },
  ],
});
```

For more detailed examples, including working with the file system module, check out this tutorial.

If you prefer to use a pre-built solution, you can use the Slack Connector provided by the wrtnlabs team.

4.3. Handling Slack Events with AI Responses

The bot's event-handling code in the repository linked above shows how to integrate Agentica with Slack to handle events and generate AI-powered responses using OpenAI's GPT model. The process involves receiving Slack events, particularly when the bot is mentioned, and replying with AI-generated answers. Here's a breakdown of how this integration works:

4.3.1. Handling Slack Interactivity Events

The `handleInteractivity` method handles the event requests that Slack sends to the bot's Request URL. This method:

- Verifies the URL: If the event type is `url_verification`, it returns the `challenge` parameter to complete the Slack app verification process.

- Handles `app_mention` events: The bot only processes events in which it is mentioned (`app_mention`). It checks whether the event is a mention and whether the bot has already responded, to avoid duplicate processing.

- Checks for duplicated events: The method uses the `cacheManager` to store the event and prevent overlapping responses.
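The duplicate check matters because Slack redelivers an event when it does not receive a timely acknowledgement, and a redelivery carries the same `event_id`. The repository's `cacheManager` is not shown here; as a hypothetical stand-in, an in-memory map with a time-to-live is enough to illustrate the idea.

```typescript
// Hypothetical stand-in for the bot's cacheManager: remembers event IDs for a while so that
// redeliveries of the same event are processed only once.
export class SeenEvents {
  private readonly seen = new Map<string, number>(); // event_id -> expiry time (ms)
  private readonly ttlMs: number;

  constructor(ttlMs: number = 10 * 60 * 1000) {
    this.ttlMs = ttlMs;
  }

  // True the first time an event ID is seen within the TTL; false for a duplicate.
  markIfNew(eventId: string, now: number = Date.now()): boolean {
    this.seen.forEach((expiresAt, id) => {
      if (expiresAt <= now) this.seen.delete(id); // evict expired entries
    });
    if (this.seen.has(eventId)) return false;
    this.seen.set(eventId, now + this.ttlMs);
    return true;
  }
}
```

An in-memory map only works within a single process; with several bot instances behind one Request URL, the seen IDs would have to live in a shared store.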

4.3.2. Ensuring the Bot Responds Only to Relevant Mentions

- Bot Mention Validation: The bot checks whether it is being explicitly mentioned by verifying that the event's `text` contains the bot's user ID (`<@bot_id>`).

- Preventing Self-Responses: The bot avoids responding to its own messages by checking whether the event's `user` matches its own user ID.

- Thread Locking: To prevent multiple simultaneous responses in the same thread, a lock mechanism (the `threadLock` map) ensures that only one request is processed at a time for each thread.
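The three checks above can be sketched as small pure functions. The names (`shouldRespond`, `threadKey`, `ThreadLock`) are hypothetical, and this lock simply skips a request when its thread is busy; the actual `threadLock` map may wait or behave differently.

```typescript
// Only the fields needed for these checks.
export interface MentionEvent {
  user: string;
  text: string;
  ts: string;
  thread_ts?: string;
}

// Respond only when explicitly mentioned, and never to the bot's own messages.
export function shouldRespond(event: MentionEvent, botUserId: string): boolean {
  if (event.user === botUserId) return false;
  return event.text.includes(`<@${botUserId}>`);
}

// Replies go to the parent's thread, or start a thread on the message itself.
export function threadKey(event: MentionEvent): string {
  return event.thread_ts ?? event.ts;
}

// Hypothetical per-thread lock: at most one request per thread is in flight.
export class ThreadLock {
  private readonly busy = new Set<string>();

  tryAcquire(key: string): boolean {
    if (this.busy.has(key)) return false; // thread already being handled: skip
    this.busy.add(key);
    return true;
  }

  release(key: string): void {
    this.busy.delete(key);
  }
}
```

The thread key falls back to the message's own `ts` because a mention posted at the top level of a channel starts a new thread, and replying into that thread means posting with the parent message's `ts` as `thread_ts`.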

4.3.3. Processing Thread Replies and Building History

- Retrieve Thread Replies: The bot fetches the conversation history (the replies in the thread) using the `slackService.getReplies` method.

- Excluding the Bot's Own Replies: If the last reply was sent by the bot, it does not send another response.

- Building Conversation History: The bot constructs a conversation history (the `histories` array) from the thread's replies. This history is used to maintain context when generating a response with Agentica.
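A sketch of the history-building step, under stated assumptions: the reply type keeps only a few of the fields Slack returns, and `ChatTurn` is a hypothetical, simplified turn type. The real bot converts replies into Agentica's own history objects, whose exact types are not reproduced here. Returning `null` covers the case where the bot already has the last word in the thread.

```typescript
// Only the fields used here; Slack returns more per message.
export interface SlackReply {
  user?: string; // absent on some system messages
  text: string;
  ts: string;
}

// Hypothetical, simplified turn type; the real bot converts replies into Agentica's history objects.
export interface ChatTurn {
  role: "user" | "assistant";
  text: string;
}

// Returns null when there is nothing new to answer (empty thread, or the bot spoke last).
export function buildHistory(replies: SlackReply[], botUserId: string): ChatTurn[] | null {
  const last = replies[replies.length - 1];
  if (!last || last.user === botUserId) return null;

  const mention = `<@${botUserId}>`;
  return replies.map(
    (reply): ChatTurn => ({
      role: reply.user === botUserId ? "assistant" : "user",
      // drop the mention token so the model sees only the request itself
      text: reply.text.split(mention).join("").trim(),
    }),
  );
}
```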

4.3.4. Generating AI Response

- Creating the Agentica Instance: The bot initializes an `Agentica` instance, passing in the OpenAI API client, the conversation history, and any necessary controllers (like the GitHub and KakaoMap connectors). The `systemPrompt` is defined to guide the AI's behavior during the conversation.

- Generating and Sending the Response: The AI generates a response based on the latest message in the thread. The response is filtered to extract text and sent back to Slack as a reply.
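The "filtered to extract text" step can be illustrated as follows. The `Prompt` type is an assumed, simplified stand-in for the entries returned by `agent.conversate()`; check the `@agentica/core` typings for the exact shape in your version. The helper keeps only non-empty assistant text and ignores records of function calls.

```typescript
// Assumed, simplified shape of what agent.conversate() returns; check the
// @agentica/core typings for the exact types in your version.
export interface TextPrompt {
  type: "text";
  role: "user" | "assistant";
  text: string;
}
export type Prompt = TextPrompt | { type: string };

function isAssistantText(p: Prompt): p is TextPrompt {
  return p.type === "text" && (p as TextPrompt).role === "assistant";
}

// The last non-empty assistant message, or null if the agent produced no text.
export function lastAnswer(prompts: Prompt[]): string | null {
  const texts = prompts
    .filter(isAssistantText)
    .map((p) => p.text.trim())
    .filter((t) => t.length > 0);
  return texts[texts.length - 1] ?? null;
}
```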

4.3.5. Response Handling and Final Steps

- Sending the Final Answer: The bot checks for a valid response (`lastAnswer`) and sends it back to the Slack thread using `slackService.sendReply`.

- Releasing the Thread Lock: After processing, the lock is released, allowing the bot to process future events.
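Putting the final steps together, the important detail is that the lock is released in a `finally` block, so a failed generation or a Slack API error cannot leave a thread locked forever. This is a hypothetical sketch: `generate` and `sendReply` are injected stand-ins for the Agentica call and `slackService.sendReply`, not their real signatures.

```typescript
// Hypothetical stand-ins for the Agentica call and slackService.sendReply.
export interface ReplyDeps {
  generate: (question: string) => Promise<string | null>;
  sendReply: (threadTs: string, text: string) => Promise<void>;
}

const lockedThreads = new Set<string>();

// Returns true if a reply was posted, false if skipped (busy thread or no answer).
export async function answerInThread(threadTs: string, question: string, deps: ReplyDeps): Promise<boolean> {
  if (lockedThreads.has(threadTs)) return false; // another request owns this thread
  lockedThreads.add(threadTs);
  try {
    const answer = await deps.generate(question);
    if (!answer) return false; // no valid lastAnswer: post nothing
    await deps.sendReply(threadTs, answer);
    return true;
  } finally {
    lockedThreads.delete(threadTs); // always release, even when generate/sendReply throws
  }
}
```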

This code implements a Slack bot that listens for mentions, collects conversation history from thread replies, and uses AI (via Agentica) to generate responses. The response is then sent back to Slack, enhancing the interaction with intelligent, context-aware messages.

This structure ensures the bot responds only when appropriate, prevents overlapping responses, and uses AI to provide meaningful answers based on the context of the conversation. It’s an efficient way to integrate AI into Slack workflows while avoiding redundant or conflicting replies.

5. Processing Slack Messages with LLMs

5.1. Managing Slack Conversation History with Agentica

Agentica leverages Slack threads to manage conversation history when handling Slack message events. Instead of storing data separately in a database, Agentica retrieves and uses the content of the thread associated with the event. Each Slack thread serves as a container for the conversation history, and the LLM references this history to generate responses.

When a user interacts with the Slack bot, Agentica fetches the replies within the thread to build the conversation history, which guides the LLM in generating a response. By automatically managing the conversation history in this way, the bot can provide contextually relevant responses without needing an external storage system.
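To show what "the thread is the history" means at the API level, here is a sketch that reads a whole thread directly from the Slack Web API method `conversations.replies`, following pagination cursors. It illustrates the raw API and is not the connector's `getReplies` implementation; it assumes Node 18+ (for the global `fetch`) and a bot token with the `channels:history` scope for public channels.

```typescript
// Only the fields used here; see the conversations.replies reference for the full shape.
export interface RepliesPage {
  ok: boolean;
  error?: string;
  messages?: { user?: string; text: string; ts: string }[];
  response_metadata?: { next_cursor?: string };
}

export function repliesUrl(channel: string, threadTs: string, cursor?: string): string {
  const url = new URL("https://slack.com/api/conversations.replies");
  url.searchParams.set("channel", channel);
  url.searchParams.set("ts", threadTs); // ts of the thread's parent message
  if (cursor) url.searchParams.set("cursor", cursor);
  return url.toString();
}

// Reads every message in a thread, following pagination cursors (Node 18+ for global fetch).
export async function fetchThread(token: string, channel: string, threadTs: string) {
  const messages: NonNullable<RepliesPage["messages"]> = [];
  let cursor: string | undefined;
  do {
    const res = await fetch(repliesUrl(channel, threadTs, cursor), {
      headers: { Authorization: `Bearer ${token}` },
    });
    const page = (await res.json()) as RepliesPage;
    if (!page.ok) throw new Error(`conversations.replies failed: ${page.error ?? "unknown error"}`);
    messages.push(...(page.messages ?? []));
    cursor = page.response_metadata?.next_cursor || undefined; // empty string means no more pages
  } while (cursor);
  return messages;
}
```

Because the thread is re-read on every mention, nothing has to be persisted between events; the trade-off is one extra API call (or more, for long threads) per response.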

5.2. Generating Context-Aware Responses

By utilizing the conversation history within the Slack thread, Agentica ensures that responses reflect the context of the entire conversation. This approach prevents repetitive or contextually irrelevant answers, keeping the dialogue natural and relevant.

Since threads act like a database, users can continue interacting within the same thread, maintaining the flow of the conversation. Additionally, users can call the agent in the middle of a conversation, and the agent will seamlessly generate responses without interrupting the flow. Even when the agent is invoked during a conversation, the context is preserved, allowing for smooth interaction and maintaining the conversation without any issues.

5.3. Invoking the Agent Mid-Conversation

Agentica uses Slack threads as a sort of database to manage the context of conversations. Each Slack thread plays a key role in maintaining the flow of a discussion. When an event is received, Agentica fetches the content of the thread and understands how the conversation has progressed. This allows the conversation to continue naturally, with all interactions in Slack being part of a single cohesive flow.

What's interesting is that users can call the agent at any point, even while having a conversation with another person in Slack. For instance, if a user is chatting with a colleague and then calls the agent, the agent can pick up on the conversation’s context and generate an appropriate response. During this process, there is no need to manually store or manage previous conversations or messages—the Slack thread itself takes care of this. This means that calling the agent in the middle of a conversation doesn't cause any issues.
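Because a thread can mix several people with the bot, it helps to keep track of who said what when the thread is handed to the model. The following sketch is hypothetical, since the article does not say how the bot labels speakers: it renders the thread as a transcript, mapping user IDs to display names, which could be looked up with the `users:read` scope listed earlier.

```typescript
export interface ThreadMessage {
  user: string;
  text: string;
}

// Hypothetical: render a multi-person thread as a transcript so the model can tell who said what.
// `names` maps user IDs to display names; unknown IDs are kept as-is.
export function toTranscript(messages: ThreadMessage[], botUserId: string, names: Record<string, string>): string {
  return messages
    .map((m) => {
      const speaker = m.user === botUserId ? "assistant" : names[m.user] ?? m.user;
      return `${speaker}: ${m.text}`;
    })
    .join("\n");
}
```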

6. Conclusion & Future Directions

6.1. Lessons Learned from Using Agentica for Slack Bots

By integrating AI with a popular business tool like Slack, the service has become much more user-friendly and useful. AI is often said to augment human capabilities, but it does more than that—it also enhances existing services, making them more attractive and integrated. This approach not only improves user experience but also drives customer satisfaction, making products more loved by their users. This is why service developers, not just AI specialists, need to pay more attention to integrating AI into their products. It's not just the domain of AI developers; it’s a broader opportunity that can add tremendous value to any service, making it more compelling and efficient.

6.2. Potential Improvements and New Features

Through the use of Agentica, we’ve learned that relying on Slack messages alone may sometimes limit the ability of LLMs to recall deeper parts of the conversation. When an LLM processes multiple thought steps before delivering a response, but only one message is sent to Slack, the context from those intermediate steps can be lost. This can result in the LLM lacking context in future responses. To address this, we need to enhance memory management by storing and referencing the LLM’s deeper thought processes separately, ensuring that the context remains intact over time.
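One possible shape for that separate memory is sketched below. It is purely hypothetical and not an existing Agentica feature: intermediate steps are recorded per thread (keyed by the thread's `ts`) and rendered back into text that can be added to the model's context on the next mention.

```typescript
// Hypothetical record of an intermediate step the final Slack message does not show.
export interface StepRecord {
  kind: "call" | "result";
  summary: string;
}

export class ThreadMemory {
  private readonly steps = new Map<string, StepRecord[]>();

  record(threadTs: string, step: StepRecord): void {
    const list = this.steps.get(threadTs) ?? [];
    list.push(step);
    this.steps.set(threadTs, list);
  }

  // The last `limit` steps for a thread, as text that could be prepended to the model's context.
  recall(threadTs: string, limit: number = 20): string {
    const list = this.steps.get(threadTs) ?? [];
    return list
      .slice(Math.max(list.length - limit, 0))
      .map((s) => `[${s.kind}] ${s.summary}`)
      .join("\n");
  }
}
```

An in-memory map keeps the sketch short; surviving restarts would require a persistent store, which is exactly the external dependency the thread-based design avoids today.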

Additionally, expanding Agentica’s integration with other applications and improving API support will be important for allowing users to create more versatile and feature-rich bots. This can include handling conversations across different channels and providing more seamless interactions.

6.3. Open-Source Contributions and Next Steps

As an open-source project, Agentica thrives through contributions from the community. Moving forward, we aim to collaborate with users and developers to add more features, improve documentation, and provide examples that help others integrate AI-powered solutions into their own projects. By further refining the response generation logic and supporting integrations with more platforms, Agentica will empower service developers to create more intelligent, context-aware bots. Additionally, by offering real-time feedback and advanced configurations, we will help users create highly customized AI assistants tailored to their specific needs.
