Prerequisite

If you don't know anything about AI SDK, you might want to read this as well.

Introduction

Hello there. Lately, I've been sharing a lot about AI, but I understand that AI can sometimes sound like it's from another world. Words like AI, artificial intelligence, singularity, agent, and MCP carry so much weight that digging into them can feel overwhelming almost immediately. These topics may seem far removed from the daily work of most developers, whether frontend or backend. Still, with all the talk about the job market, you might feel that everyone should at least learn the basics of AI to stay competitive. With that in mind, I want to introduce a recently released library. Interestingly, it was developed by a Korean startup and is called Agentica.

https://github.com/wrtnlabs/agentica

“Interest, questions, feedback, and stars on a library serve as a tremendous source of motivation for open-source developers.”

What is Agentica?

https://github.com/wrtnlabs/agentica

The Agentica library, as shown above, converts TypeScript classes and Swagger documents into LLM Function Calling, providing assistance to those developing multi-agent orchestration and agentic AI. That may sound a bit complicated, but here’s the key takeaway:

- Anyone who knows TypeScript can develop AI. → Frontend developers can now create AI!

- Anyone who can create Swagger can develop AI → Backend developers can now create AI!

Could it really be that simple? Isn’t AI filled with daunting concepts like machine learning, regression analysis, and other hard-to-master terminology? If you think so, consider this: how did frontend development work before React or even jQuery? And how did backend development happen before Spring, NestJS, or Django existed? Those were challenging times, probably even more challenging than we imagine today.

“The reason AI development is challenging right now is that there aren’t yet enough libraries and frameworks to assist with it.”

My conclusion is that AI development is no different from past challenges. The AI ecosystem is still in its infancy, and surprisingly, development is lagging behind theoretical advances. That’s why a variety of libraries are starting to emerge. Personally, I find Agentica very interesting, and before diving into a detailed explanation, let me show you how you can use it.

Function Calling with TypeScript Classes in Agentica

There’s no need to think of “Function Calling” as something complicated. Function Calling simply means that an LLM can call functions during a conversation. In simpler terms, it’s not just a chatbot that talks—it can take action when needed. For example, it could order food, transfer money, or perform other actions that truly help the user.

OpenAI's function calling guide describes it like this:

“Function calling provides a powerful and flexible way for OpenAI models to interface with your code or external services. This guide will explain how to connect the models to your own custom code to fetch data or take action.”
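To make the idea concrete, here is a minimal sketch of what happens on the application side when a model decides to call a function. No LLM or SDK is involved; the names `ToolCall`, `tools`, and `dispatch` are made up for illustration, and the "model output" is simulated by hand.

```typescript
// A hand-rolled sketch of function calling; no LLM or SDK is involved.
// `ToolCall`, `tools`, and `dispatch` are hypothetical names for illustration.

// The shape of a request an LLM might emit when it decides to act.
interface ToolCall {
  name: string;
  arguments: string; // JSON-encoded arguments, as a model would produce them
}

// Functions the application exposes to the model.
const tools: Record<string, (args: any) => string> = {
  orderFood: (args: { menu: string; quantity: number }) =>
    `Ordered ${args.quantity} x ${args.menu}`,
  transferMoney: (args: { to: string; amount: number }) =>
    `Sent ${args.amount} to ${args.to}`,
};

// Look up the requested function and run it with the parsed arguments.
function dispatch(call: ToolCall): string {
  const fn = tools[call.name];
  if (fn === undefined) {
    throw new Error(`Unknown function: ${call.name}`);
  }
  return fn(JSON.parse(call.arguments));
}

// Pretend the model answered "order two pizzas" with this tool call.
const simulated: ToolCall = {
  name: "orderFood",
  arguments: JSON.stringify({ menu: "pizza", quantity: 2 }),
};
console.log(dispatch(simulated)); // Ordered 2 x pizza
```

The model only produces a name and arguments; your code stays in charge of actually running the function. Libraries like Agentica take care of describing the functions to the model and routing the calls.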

Let’s look at a simple code example demonstrating how to use Function Calling.

Example: Creating an AI Agent That Can Use Gmail

You can find a detailed explanation in this tutorial.

import { Agentica } from "@agentica/core";
import typia from "typia";
import dotenv from "dotenv";
import { OpenAI } from "openai";
import { GmailService } from "@wrtnlabs/connector-gmail";

dotenv.config();

export const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({ apiKey: process.env.OPENAI_API_KEY! }), // Insert your OpenAI API key
    model: "gpt-4o-mini", // Model configuration
  },
  controllers: [
    {
      name: "Gmail Connector", // A descriptive name for the class to help the LLM select functions
      protocol: "class",
      application: typia.llm.application<GmailService, "chatgpt">(), // Pass the class
      execute: new GmailService({ ... }), // Pass an instance of the class
    },
  ],
});

const main = async () => {
  console.log(await agent.conversate("What can you do?")); // Now conversation is enabled!
};

main();

Not too long, right? If you look closely, the definition of the agent includes:

- The model property, which determines the provider, in this case chatgpt.

- The vendor property, where you choose the service providing the LLM, in this case OpenAI.

  - If you need an API key, visit this link to generate one and top up with around $5.

- Finally, you define a Controller. By providing a class as shown, you enable function calls through the methods defined in that class.

More on Controllers

export const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({ apiKey: process.env.OPENAI_API_KEY! }), // Insert your OpenAI API key
    model: "gpt-4o-mini", // Model configuration
  },
  controllers: [],
});

In Agentica, a controller represents a set of functions that the LLM can call. When you pass a class, the LLM reads the class information as interpreted by the TypeScript compiler, understanding its public member functions. Since the actual function calls are made via an instance, you must provide one.
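As a rough illustration of why both the class and an instance are needed, here is a small sketch with a made-up `TodoService`. It is not Agentica code: `callableNames` and `invoke` are hypothetical helpers, and listing prototype methods at runtime is only a stand-in for the typed schemas that typia derives at compile time.

```typescript
// Hypothetical stand-in for a controller class; not part of Agentica.
class TodoService {
  private items: string[] = [];

  /** Add a to-do item and return the new item count. */
  public add(input: { title: string }): number {
    this.items.push(input.title);
    return this.items.length;
  }

  /** List every to-do item added so far. */
  public list(): string[] {
    return [...this.items];
  }
}

// Runtime approximation of "which functions can be called": the method
// names on the prototype. This only illustrates the idea; it carries no
// parameter types or descriptions.
function callableNames(instance: object): string[] {
  return Object.getOwnPropertyNames(Object.getPrototypeOf(instance)).filter(
    (name) => name !== "constructor",
  );
}

// Calls go through an instance, which is why the instance must be supplied:
// the instance holds the state (here, the `items` array).
function invoke(instance: object, name: string, args?: unknown): unknown {
  const method = (instance as Record<string, unknown>)[name];
  if (typeof method !== "function") {
    throw new Error(`No such method: ${name}`);
  }
  return method.call(instance, args);
}

const todo = new TodoService();
console.log(callableNames(todo)); // [ 'add', 'list' ]
invoke(todo, "add", { title: "Write blog post" });
console.log(invoke(todo, "list")); // [ 'Write blog post' ]
```

The class tells the LLM what exists; the instance is what actually runs. That split is the same one you see in the `application` and `execute` fields of a controller.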

Install the Gmail connector package with:

npm install @wrtnlabs/connector-gmail

Then define the agent as:

export const agent = new Agentica({
  model: "chatgpt",
  vendor: {
    api: new OpenAI({ apiKey: process.env.OPENAI_API_KEY! }), // Insert your OpenAI API key
    model: "gpt-4o-mini", // Model configuration
  },
  controllers: [
    {
      name: "Gmail Connector", // A descriptive name to help the LLM choose the function
      protocol: "class",
      application: typia.llm.application<GmailService, "chatgpt">(), // Pass the class
      execute: new GmailService({ ... }), // Pass an instance of the class
    },
  ],
});

With this setup, you can use the GmailService code as shown here. GmailService defines public methods such as:

- Sending emails

- Drafting emails

- Listing and viewing email details

Thus, through conversation, the LLM can now call these functions.

(async function () {
  await agent.conversate("Can you forward an email to my contact?");
  await agent.conversate("My contact's email is ABC@gmail.com."); // You can continue the conversation!
})();

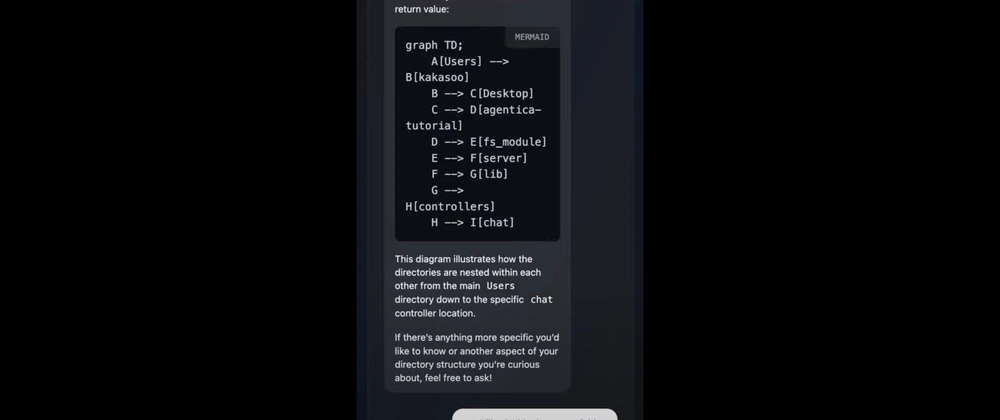

- If needed, you can even create an agent that directly manages your computer’s files by redefining the fs module as a class. See this tutorial.

- Similarly, frontend developers can define and control browser functions as classes.
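For the file-management idea, a class wrapping Node's fs module might look roughly like the sketch below. The class name `FileSystemService`, its methods, and the root-directory restriction are my own assumptions for illustration and are not taken from the tutorial. Confining the agent to one directory seems like a sensible precaution when an LLM decides which files to touch.

```typescript
import * as fs from "node:fs";
import * as os from "node:os";
import * as path from "node:path";

// Hypothetical wrapper class; the name and method set are illustrative.
// All paths are confined to `root`.
class FileSystemService {
  private readonly root: string;

  constructor(root: string) {
    this.root = path.resolve(root);
  }

  // Resolve a relative path and refuse anything that escapes the root.
  private resolve(relative: string): string {
    const full = path.resolve(this.root, relative);
    if (full !== this.root && !full.startsWith(this.root + path.sep)) {
      throw new Error(`Path escapes root: ${relative}`);
    }
    return full;
  }

  /** Write text to a file, replacing any existing content. */
  public writeFile(input: { path: string; content: string }): void {
    fs.writeFileSync(this.resolve(input.path), input.content, "utf8");
  }

  /** Read a file as UTF-8 text. */
  public readFile(input: { path: string }): string {
    return fs.readFileSync(this.resolve(input.path), "utf8");
  }

  /** List the entries of a directory. */
  public listDirectory(input: { path: string }): string[] {
    return fs.readdirSync(this.resolve(input.path));
  }
}

// Demo in a throwaway temp directory.
const sandbox = fs.mkdtempSync(path.join(os.tmpdir(), "agent-fs-"));
const files = new FileSystemService(sandbox);
files.writeFile({ path: "note.txt", content: "hello" });
console.log(files.listDirectory({ path: "." })); // [ 'note.txt' ]
console.log(files.readFile({ path: "note.txt" })); // hello
```

An instance of a class like this could then be passed as the `execute` value of a controller with `protocol: "class"`, in the same way as `GmailService` above.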

There are many pre-built controllers available, so it's worth checking them out.

Function Calling with Swagger in Agentica

npm install @samchon/openapi

import { Agentica } from "@agentica/core";
import { HttpLlm, OpenApi } from "@samchon/openapi";
import dotenv from "dotenv";
import OpenAI from "openai";

dotenv.config();

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

export const SwaggerAgent = async () =>
  new Agentica({
    model: "chatgpt",
    vendor: {
      api: openai,
      model: "gpt-4o-mini",
    },
    controllers: [
      {
        name: "PetStore", // Name of the connector (any descriptive name works)
        protocol: "http", // Indicates an HTTP-based connector
        application: HttpLlm.application({
          // Convert the Swagger JSON document to an OpenAPI model for Agentica.
          document: OpenApi.convert(
            await fetch("https://petstore.swagger.io/v2/swagger.json").then(
              (r) => r.json()
            )
          ),
          model: "chatgpt",
        }),
        connection: {
          // This is the actual API host where API requests will be sent.
          host: "https://petstore.swagger.io/v2",
        },
      },
    ],
  });

Next up is Swagger. Backend developers can now create an agent simply by using a Swagger document they have already built. Whether you load the Swagger document via fetch or from a file, once you have parsed it as JSON, you just do:

{
  ...
  application: HttpLlm.application({
    document: OpenApi.convert(swagger),
  })
  ...
}

Instead of providing a class, you provide the Swagger document. This allows the LLM to call the API without needing a dedicated class definition!
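To get a feel for what "providing the Swagger document" buys you, here is a heavily simplified sketch of the underlying idea: every path and HTTP method in the document becomes one callable function, and a chosen function plus its arguments becomes a concrete request. The types `MiniOpenApi` and `HttpFunction` and the helpers `toFunctions` and `buildUrl` are hypothetical; the real conversion in @samchon/openapi handles much more, such as parameter schemas, request bodies, and authentication.

```typescript
// Hypothetical, heavily simplified types for illustration only.
interface Operation {
  operationId: string;
  summary?: string;
}
interface MiniOpenApi {
  paths: Record<string, Partial<Record<"get" | "post" | "put" | "delete", Operation>>>;
}
interface HttpFunction {
  name: string; // what the LLM would see as the function name
  method: string;
  path: string;
  description: string;
}

// Flatten every (path, method) pair into one callable function description.
function toFunctions(doc: MiniOpenApi): HttpFunction[] {
  const result: HttpFunction[] = [];
  for (const [path, methods] of Object.entries(doc.paths)) {
    for (const [method, op] of Object.entries(methods)) {
      if (op === undefined) continue;
      result.push({
        name: op.operationId,
        method: method.toUpperCase(),
        path,
        description: op.summary ?? "",
      });
    }
  }
  return result;
}

// Turn a chosen function plus path parameters into a concrete request URL.
function buildUrl(
  host: string,
  fn: HttpFunction,
  params: Record<string, string | number>,
): string {
  const filled = fn.path.replace(/\{(\w+)\}/g, (_, key: string) => {
    if (!(key in params)) throw new Error(`Missing path parameter: ${key}`);
    return encodeURIComponent(String(params[key]));
  });
  return host + filled;
}

// A tiny hand-written document in the spirit of the PetStore example.
const miniDoc: MiniOpenApi = {
  paths: {
    "/pet/{petId}": {
      get: { operationId: "getPetById", summary: "Find pet by ID" },
      delete: { operationId: "deletePet", summary: "Deletes a pet" },
    },
  },
};
const functions = toFunctions(miniDoc);
console.log(functions.map((f) => f.name)); // [ 'getPetById', 'deletePet' ]
console.log(buildUrl("https://petstore.swagger.io/v2", functions[0], { petId: 7 }));
// https://petstore.swagger.io/v2/pet/7
```

The better your operation IDs and summaries are, the easier it is for the LLM to pick the right function, which is one reason well-documented APIs pay off here.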

How Agentica Works

AI’s Biggest Strength and Weakness, and How to Address It

AI’s greatest strength is that it can return a different result every time. If its output were always the same, AI wouldn’t have taken off the way it has. However, that is also its biggest weakness. Nondeterministic output is hard to reason about with traditional frontend or backend development paradigms. Even when you write defensive tests, the only way to gain confidence in stability is statistically, by making many calls and measuring how often they succeed. In this style of development, reaching 80% completeness in a product may take only 20% of the effort, while the remaining 20% might require 80% of the effort. That’s why truly polished AI products are still rare.
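Here is a small sketch of what testing over multiple calls can look like. The "model" is a made-up stand-in that deliberately returns a malformed answer on every 10th call, so the numbers are repeatable; with a real LLM you would call the API instead, and the 0.85 threshold is an arbitrary value chosen for illustration.

```typescript
// Stand-in for a nondeterministic LLM call. Hypothetical: it returns a
// malformed answer on every 10th call so the demo is repeatable.
function makeFlakyModel(): (prompt: string) => string {
  let calls = 0;
  return (prompt) => {
    calls += 1;
    return calls % 10 === 0
      ? "not json"
      : JSON.stringify({ answer: prompt.length });
  };
}

// Check one response; here "valid" means it parses as JSON with a numeric answer.
function isValid(response: string): boolean {
  try {
    const parsed: unknown = JSON.parse(response);
    return (
      typeof parsed === "object" &&
      parsed !== null &&
      typeof (parsed as { answer?: unknown }).answer === "number"
    );
  } catch {
    return false;
  }
}

// Run the same prompt many times and report the fraction of valid responses.
function successRate(
  model: (prompt: string) => string,
  prompt: string,
  trials: number,
): number {
  let passed = 0;
  for (let i = 0; i < trials; i++) {
    if (isValid(model(prompt))) passed += 1;
  }
  return passed / trials;
}

const rate = successRate(makeFlakyModel(), "What can you do?", 100);
console.log(rate); // 0.9

// A probabilistic test asserts a threshold rather than a single exact output.
if (rate < 0.85) throw new Error(`Success rate too low: ${rate}`);
```

The point is that the assertion is about a rate, not about one response. Closing the gap between 90% and something you would ship is where most of the effort goes.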

Agentica tackles this problem by:

- Using the TypeScript compiler (TSC) to provide a reliably compiled document that teaches the AI about functions.

- Also using the TypeScript compiler to correct the AI’s mistakes with remarkable precision.

For example, it can tell the AI something like:

“The type of the element ‘e’ inside the array located at a.b.c.d is incorrect. Fix it.”

Internally, Agentica reports the errors to the AI like so:

{
  success: false, // It failed.
  errors: [{ // And it provides hints on how to fix it.
    path: "$input.a.b.c.d[0].e",
    expected: "number",
    value: "abc"
  }],
  data: { // This represents the value received from the AI.
    a: {
      b: {
        c: {
          d: [{
            e: "abc"
          }]
        }
      }
    }
  }
}

Every time the AI calls a function, it can realize:

“The type for 'a.b.c.d[0].e' should be a number, but I passed in "abc"!”

Isn’t it amazing that AI, a field that seems so distant, can suddenly rely on compilation to fix its mistakes?
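The correction loop described in this section can be sketched as follows. Only the error shape (`path`, `expected`, `value`) comes from the example above; the hand-written `validate` function, the `fakeModel` stand-in, and `callWithCorrection` are hypothetical. In practice, a validator like this would be generated from the TypeScript type rather than written by hand, and the feedback would go to a real LLM.

```typescript
// Error shape copied from the example above; everything else is hypothetical.
interface ValidationError {
  path: string;
  expected: string;
  value: unknown;
}
type Validation =
  | { success: true; data: unknown }
  | { success: false; errors: ValidationError[]; data: unknown };

// Hand-written validator for { a: { b: { c: { d: Array<{ e: number }> } } } }.
function validate(input: any): Validation {
  const errors: ValidationError[] = [];
  const d = input?.a?.b?.c?.d;
  if (!Array.isArray(d)) {
    errors.push({ path: "$input.a.b.c.d", expected: "Array<{ e: number }>", value: d });
  } else {
    d.forEach((item, i) => {
      if (typeof item?.e !== "number") {
        errors.push({ path: `$input.a.b.c.d[${i}].e`, expected: "number", value: item?.e });
      }
    });
  }
  return errors.length === 0
    ? { success: true, data: input }
    : { success: false, errors, data: input };
}

// Stand-in for the LLM: the first attempt is wrong; given feedback, it fixes the field.
function fakeModel(feedback: ValidationError[] | null): unknown {
  return { a: { b: { c: { d: [{ e: feedback === null ? "abc" : 123 }] } } } };
}

// Ask, validate, and feed the errors back until the arguments pass or retries run out.
function callWithCorrection(maxRetries: number): { data: unknown; attempts: number } {
  let feedback: ValidationError[] | null = null;
  for (let attempt = 1; attempt <= maxRetries + 1; attempt++) {
    const result = validate(fakeModel(feedback));
    if (result.success) return { data: result.data, attempts: attempt };
    feedback = result.errors; // precise, path-level hints for the next attempt
  }
  throw new Error("Arguments still invalid after retries");
}

console.log(validate(fakeModel(null))); // the failure object, with one error
console.log(callWithCorrection(1).attempts); // 2
```

Because the error names the exact path and the expected type, the model does not have to guess what went wrong, which is what makes a retry likely to succeed.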

Implications of Agentica

This concludes our brief introduction to Agentica. Even beyond Agentica, many open-source projects will emerge, and we can start discussing what frontend and backend developers need to prepare for. In my view, here are four key points:

- As open-source projects grow, AI development will become easier and will trickle down to regular service development.

- Code that cannot be explained to the AI will eventually never be selected by it, making code readability and documentation essential.

- Similarly, to provide explanations, you must be proficient not only at the code level but also in business and domain knowledge.

- Just as with compilers, learning AI development will require a strong foundation in computer science—it will only become more important.

Agentica is not exclusively targeted at TypeScript. It is a library for web developers in general—especially frontend developers who make up a large portion of the developer community. In the coming era, as interest in AI-powered services continues to grow, I believe frontend developers should familiarize themselves with libraries like this and prepare for the AI age. It will benefit your career.

We can never predict which library will emerge next. Agentica may be just a fleeting project, or it might fail to catch on. However, wasn’t the code surprisingly simple? In the future, various features to boost development productivity may be added, or a new library might emerge to further accelerate AI development.

Conclusion

If you enjoyed reading this, why not take your own project to the next level with Agentica? Whether you already have a frontend page or a server, even just adding a chatbot by writing classes or generating Swagger documentation can make your project feel more dynamic. Thank you for reading.

Appendix

I made slack agent without langchain
