In this article we shall see the ‘xls to csv’ conversion on AWS landscape by a server-less approach.

This actually is a Demo/POC of a conversion system intended for file conversions on platforms/systems like in our case SAP,that couldn’t rely on external API’s due to security concerns and in one of the in-expensive ways.

Salient Features:

1.Secure conversion as it happens on environment that has been designed and configured by the individual.

2.In-expensive and some low load cases are covered in ‘AWS always free services’ like Lambda.

3.Event driven automated as file upload triggers Lambda and also handles multiple file conversion(Concurrency).

This approach is much feasible if the xls file has confidential or sensitive information.

Design using server less architecture

One could modify the above design accordingly once the files are converted and placed into S3.

AWS Services used for conversion are AWS Lambda ,AWS S3 , AWS Cloud watch.

We shall look into the Lambda config and code.

Python 3.8 shall be used as the runtime for the Lambda and we use XLRD,a Python Library as a layer to the Lambda because XLRD is not a default in Lambda Python runtime.

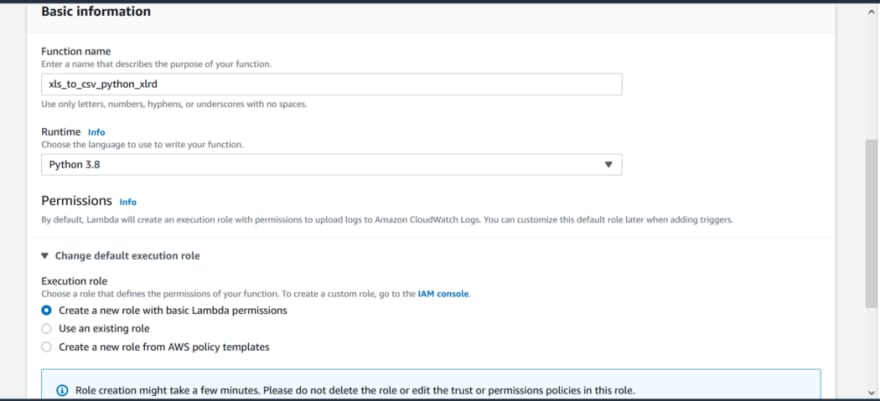

Login to AWS management console and navigate to Lambda service.

Click on create Function to create a new Lambda function.

Select runtime as Python 3.8 and choose an execution role to upload logs to cloud watch.

For additional config such as Code signing and VPC for Lambda to access ,check the Advance settings below in the page and click create function.

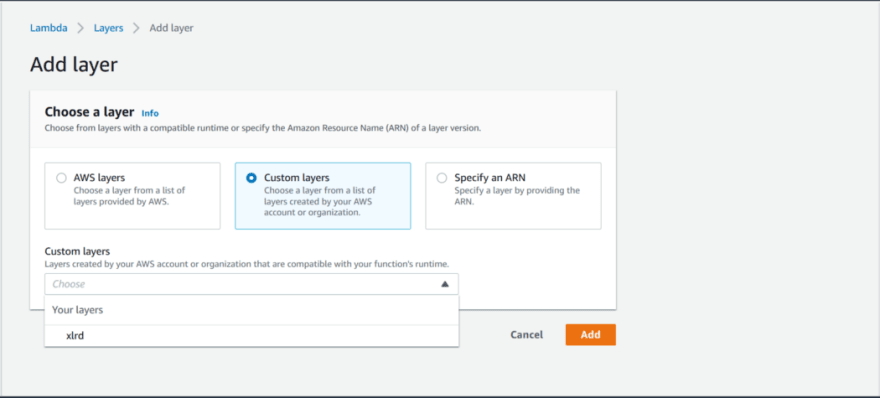

Once the Lambda function is created,Click on the Layers and it directs to the below pane.Add a layer in the layer section to add out XLRD Library.

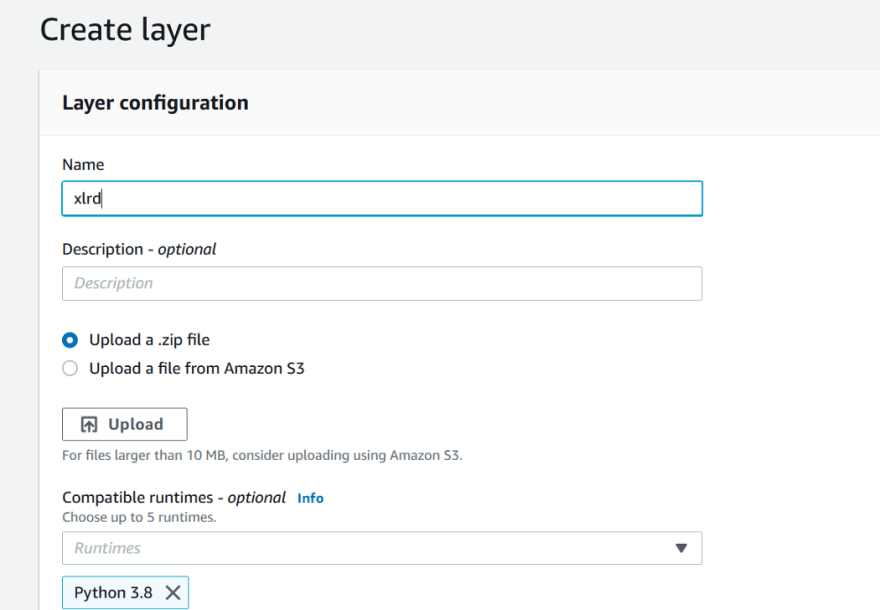

We shall create a layer and later add the layer to the Lambda function.

To create a layer navigate to layers in Lambda landing page and click on layers tab.

Once there,below screen could be seen,set them as shown and upload the a file that could be downloaded from below link and upload in the upload section of the below screen.This is the zipped layer file with xlrd library that works only for Python 3.8 runtime of Lambda.

In this link find the site-packages zip file and upload the same and select the runtime as python 3.8. https://github.com/kesavaraju-anurag/POC/

Under Custom layers section which we prepare,select the existing layer that we have previously added.In our case layer is named xlrd.

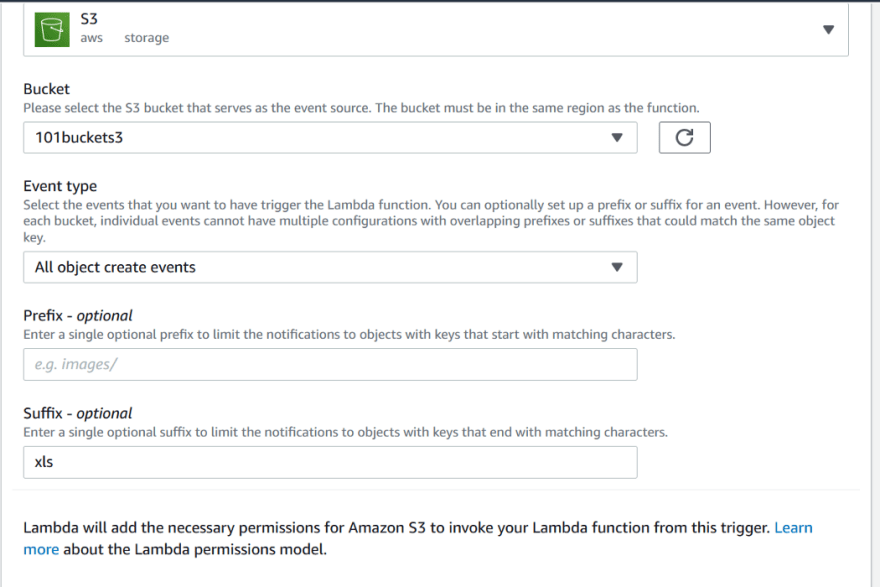

We shall add a trigger to our lambda from S3 bucket.

In the main page of Lambda function,click on Add trigger and select S3 under Trigger Configuration.

Once S3 is selected,it asks for more details.

We are triggering Lambda on S3 object upload event(All object create events). and so we select below .We can specify other event types as per the requirement.“.xls” is the file suffix and currently the files present in root directory of the S3.If files to be converted are placed inside other folders mention the same in prefix and click Add

AWS recommends to use different different S3 if Lambda writes to S3 buckets, to avoid recursive Lambda calls at times.Since this is a demo,we are using the same S3.

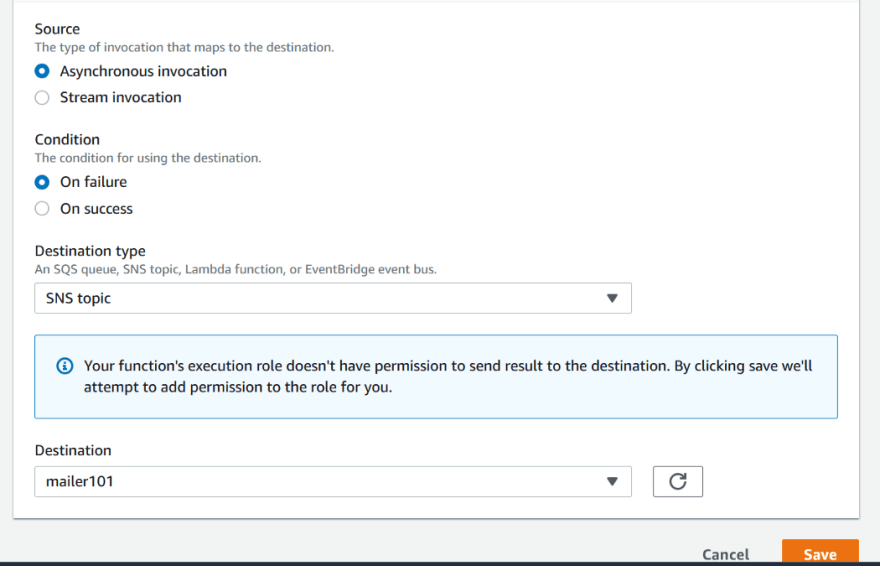

We now shall add a destination which is AWS SNS to convey us in case of Lambda failure.

On Lambda function’s landing page click on Add destination and set as below and click Save.

We need to have a subscribed SNS and in this demo it is mailer101

Once done ,landing page should look like below

Lambda Function Landing page

We also need to add policies to the role for Lambda to access Files from S3.

We have added below policies to the service role that has been auto added while we are creating Lambda function.We can find the auto created service role in AWS IAM service under roles.Alternatively we can create a new role and give Execution role along with S3 access and assign that custom role to Lambda from “Execution role” section in Permissions tab of Lambda.

We have given full S3 access since demo but advised to use restricted access

We are done with Lambda config .

Now we shall see the code.

The code we are using is a bit customized to our need we had, but the working remains same.

Code could be found from below Git book page.

https://github.com/kesavaraju-anurag/POC/

The code has few libraries like BOTO3,CSV,IO,JSON written to import along with XLRD.

Comments in code can be followed for replacing target S3 bucket name and few other details.

Enter this code in the Function code section of our Lambda and click Deploy.

Upload the file with xls format and we should see csv created few seconds later.

Look for converted file in correct locations according to prefixes specified in S3 trigger and also in code if specified any.

Known issues till now:

1.As observed in the initial testing, file name that would be uploaded to S3 shouldn’t have a non-alphanumeric character just before “.xls” in filename.

2.Few formats like date in the converted files are in raw format relative to original xls file.We have mentioned an commented if block in the code to correct it.

3.When the converted CSV file is opened ,characters if non-ASCII, are shown gibberish kind depending on encoding setting in excel application of local machine or the application which is trying to open the file.Change the encoding to verify the conversion.

Lambda concurrency could be seen at times if multiple file are uploaded and other cases, depending on the code.Advised to check for reserved concurrency if to be used in productive or test environment.

Code could be modified to handle all required known errors.

This article has been inspired from few YouTube videos ,stack over flow(code reference) and used few AWS Docs as reference.

This document will be versioned and updated after few more testings.Since this is a Demo/POC,proceed with initial deployment and proper testing at your risk in case of productive use.

Few References:

https://aws.amazon.com/iam/getting-started/?nc=sn&loc=3

https://aws.amazon.com/lambda/getting-started/

https://aws.amazon.com/s3/pricing/?nc=sn&loc=4

https://aws.amazon.com/cloudwatch/getting-started/

https://xlrd.readthedocs.io/en/latest/

Top comments (0)