ONNXRuntime

Scoring engine for ML models.

- Microsoft

- Ruby bindings for ONNXRuntime, created by Andrew Kane

YOLOv3

- ONNX Model Zoo

- YOLOv3

Download

```sh
# model
wget https://onnxzoo.blob.core.windows.net/models/opset_10/yolov3/yolov3.onnx

# coco-labels
wget https://raw.githubusercontent.com/amikelive/coco-labels/master/coco-labels-2014_2017.txt

# gems (mini_magick also needs ImageMagick installed on the system)
gem install numo-narray mini_magick onnxruntime
```
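The labels file has one class name per line. `File.readlines` keeps the trailing newline on every line, and that newline would be carried into the label text passed to ImageMagick; passing `chomp: true` (available since Ruby 2.4) strips it. A quick check, using a temporary three-line stand-in file with COCO class names rather than the real download:

```ruby
require 'tempfile'

# Three-line stand-in for coco-labels-2014_2017.txt
file = Tempfile.new('labels')
file.write("person\nbicycle\ncar\n")
file.close

raw   = File.readlines(file.path)              # each line keeps its "\n"
clean = File.readlines(file.path, chomp: true) # newlines removed

p raw.first
p clean.first
file.unlink
```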

yolov3.rb

```ruby
require 'mini_magick'
require 'numo/narray'
require 'onnxruntime'

SFloat = Numo::SFloat

input_path = ARGV[0]
output_path = ARGV[1]

model = OnnxRuntime::Model.new('yolov3.onnx')
# chomp: true removes the trailing newline from each label
labels = File.readlines('coco-labels-2014_2017.txt', chomp: true)

# preprocessing

img = MiniMagick::Image.open(input_path)
# original size; the model uses it to scale the boxes back
image_size = [[img.height, img.width]]

# letterbox to 416x416: keep the aspect ratio, pad the rest
img.combine_options do |b|
  b.resize '416x416'
  b.gravity 'center'
  b.background 'transparent'
  b.extent '416x416'
end

# [height][width][RGB] -> floats in 0.0..1.0
img_data = SFloat.cast(img.get_pixels)
img_data /= 255.0

# HWC -> CHW, then add a batch dimension: [1, 3, 416, 416]
image_data = img_data.transpose(2, 0, 1)
                     .expand_dims(0)
                     .to_a # NArray -> Array

# inference

# pass the inputs as one Hash (the braces are required on Ruby 3.0+)
output = model.predict({ input_1: image_data, image_shape: image_size })

# postprocessing

boxes, scores, indices = output.values

# each index is [batch, class, box]
results = indices.map do |idx|
  { class: idx[1],
    score: scores[idx[0]][idx[1]][idx[2]],
    box: boxes[idx[0]][idx[2]] }
end

# visualization

img = MiniMagick::Image.open(input_path)
img.colorspace 'gray'

results.each do |r|
  hue = r[:class] * 100 / 80.0
  label = labels[r[:class]]
  score = r[:score]

  # draw box (the model returns [y1, x1, y2, x2]);
  # fill/stroke settings go before -draw so they apply to it
  y1, x1, y2, x2 = r[:box].map(&:round)
  img.combine_options do |c|
    c.fill "hsla(#{hue}%, 20%, 80%, 0.25)"
    c.stroke "hsla(#{hue}%, 70%, 60%, 1.0)"
    c.strokewidth((score * 3).to_s)
    c.draw "rectangle #{x1}, #{y1}, #{x2}, #{y2}"
  end

  # draw text
  img.combine_options do |c|
    c.fill 'white'
    c.pointsize 18
    c.draw "text #{x1}, #{y1 - 5} \"#{label}\""
  end
end

img.write output_path
```
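The preprocessing step relies on two things that are easy to miss: `resize '416x416'` keeps the aspect ratio and `extent` then pads the image to a centered 416x416 canvas, while `transpose(2, 0, 1).expand_dims(0)` turns the height × width × RGB pixel array into the 1 × 3 × 416 × 416 (NCHW) layout the model takes. The sketch below reproduces both with plain Ruby arrays so the shapes can be checked without ImageMagick or Numo. The helpers `letterbox_geometry` and `to_nchw` are hypothetical, and rounding to the nearest pixel is an assumption; ImageMagick may round the scaled size slightly differently.

```ruby
# Hypothetical helper: size after "resize 416x416" (aspect ratio kept)
# and the offsets of the centered "extent 416x416" padding.
# Rounding to the nearest pixel is an assumption.
def letterbox_geometry(height, width, target = 416)
  scale = [target.fdiv(width), target.fdiv(height)].min
  new_w = (width * scale).round
  new_h = (height * scale).round
  { width: new_w, height: new_h,
    pad_x: (target - new_w) / 2, pad_y: (target - new_h) / 2 }
end

# Hypothetical helper: [height][width][channel] pixels (the layout of
# MiniMagick's get_pixels) -> [1][channel][height][width] floats in 0.0..1.0,
# the same result as dividing by 255.0, transpose(2, 0, 1), expand_dims(0).
def to_nchw(pixels)
  height = pixels.size
  width = pixels.first.size
  channels = pixels.first.first.size
  chw = Array.new(channels) do |c|
    Array.new(height) do |y|
      Array.new(width) { |x| pixels[y][x][c] / 255.0 }
    end
  end
  [chw] # add the batch dimension
end

p letterbox_geometry(480, 640)

# 2x2 RGB image: red, green / blue, white
pixels = [[[255, 0, 0], [0, 255, 0]],
          [[0, 0, 255], [255, 255, 255]]]
tensor = to_nchw(pixels)
p tensor[0][0] # red channel as a 2x2 plane
```

For a 640x480 photo the resized image fills the full width and is padded above and below, which is why the model also needs the original `image_shape` to map the boxes back onto the photo.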

Run

```sh
ruby yolov3.rb a.jpg b.jpg
```

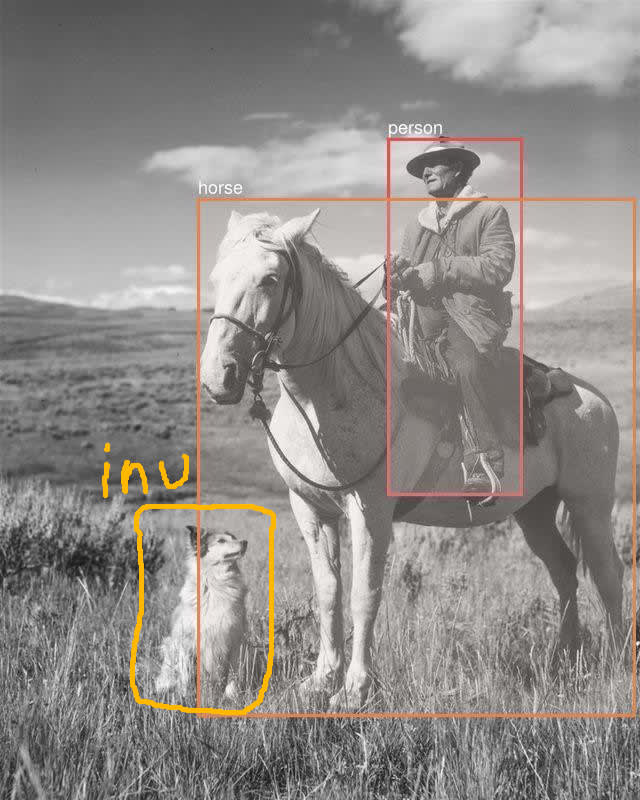

Where is a dog?

It is a pity that the dog is not recognized by my computer.

Now it's time for a human being to do something.

That's it!

(Inu means "dog" in Japanese, a dialect of human language.)

I don't want to lose the human mind even in the age of AI.

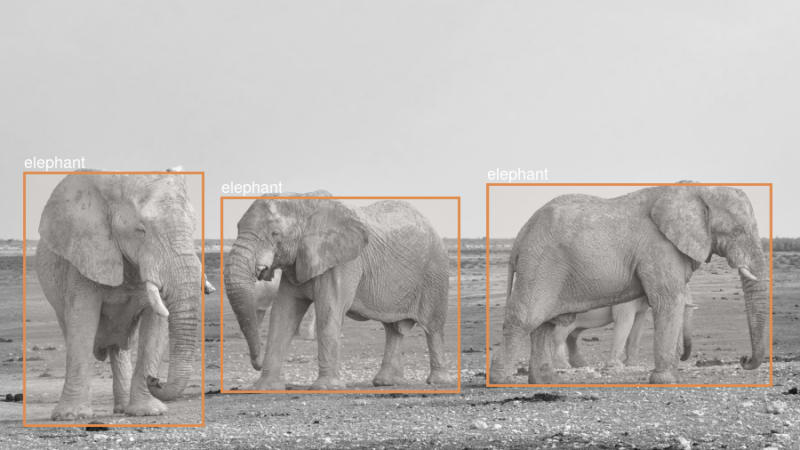

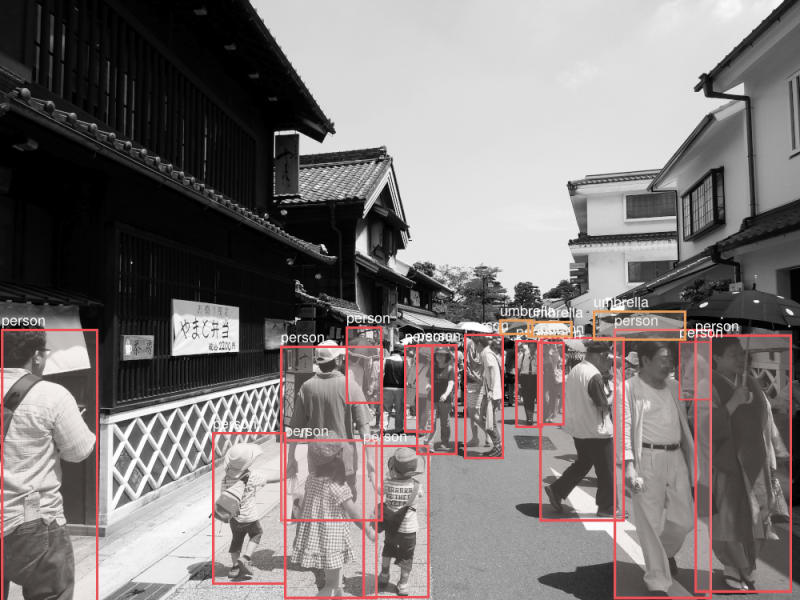
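Why was the dog missing? The script only draws what the model's `indices` output selects: each entry is a triple `[batch, class, box]`, the score is read from `scores[batch][class][box]`, and the coordinates from `boxes[batch][box]` in the order `[y1, x1, y2, x2]`. If the model does not select a box for the dog, nothing in the postprocessing can bring it back. The sketch below replays that mapping on tiny made-up arrays (not real model output) and converts each box to the `x, y` order of ImageMagick's `rectangle x0,y0 x1,y1` draw primitive. The helpers `collect_detections` and `rectangle_command` are hypothetical.

```ruby
# Hypothetical helper mirroring the postprocessing in yolov3.rb.
# boxes:   [batch][box][4] with coordinates [y1, x1, y2, x2]
# scores:  [batch][class][box]
# indices: [[batch, class, box], ...] as selected by the model
def collect_detections(boxes, scores, indices)
  indices.map do |batch, klass, box|
    { class: klass,
      score: scores[batch][klass][box],
      box: boxes[batch][box] }
  end
end

# Hypothetical helper: string for ImageMagick's -draw option.
# The model gives (y, x); ImageMagick expects (x, y).
def rectangle_command(box)
  y1, x1, y2, x2 = box.map(&:round)
  "rectangle #{x1},#{y1} #{x2},#{y2}"
end

# Made-up data: one image, 3 candidate boxes, 2 classes.
boxes  = [[[10.2, 20.7, 110.0, 220.4],
           [50.0, 60.0, 150.0, 160.0],
           [0.0, 0.0, 5.0, 5.0]]]
scores = [[[0.90, 0.10, 0.05],   # class 0
           [0.20, 0.75, 0.01]]]  # class 1
indices = [[0, 0, 0], [0, 1, 1]] # box 2 was not selected, so it is never drawn

detections = collect_detections(boxes, scores, indices)
detections.each do |d|
  puts "#{d[:class]} #{d[:score]} #{rectangle_command(d[:box])}"
end
```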


Thank you for reading.

Have a nice day!

Comments

Can this be used on medical data, particularly gallbladder images? How can we develop or improve it using Python?

Hi Ahmed.

Nice to meet you. I am curious why you commented on this article, among the many on artificial intelligence. What is even more curious is that you are dealing with gallbladder data. I'm not an expert in artificial intelligence, and this article is about taking a model created in Python or another language, converting it to the ONNX format, and calling it from Ruby.

I do not know who you are, but here are a few comments.

For gallbladder data, the imaging modalities include CT, MRCP, ERCP (endoscopic retrograde cholangiopancreatography), abdominal ultrasound, and laparoscopy. CT is perhaps the easiest to start with, whereas applying artificial intelligence to ERCP or laparoscopy would probably have the greatest social impact. Developing practical artificial intelligence to assist in treatment will be very difficult, but it is needed.

There are several diseases of the gallbladder, but the ones with the greatest social impact are probably gallstones and gallbladder cancer.

The type of algorithm you choose to use will depend on what you want to do.

But you may already know all of this. I'd like to give you some advice, but what exactly do you want to know?

Using the YOLOv3 algorithm, it can be applied to ultrasound images of the gallbladder.

But I am trying to improve the accuracy and compare the results with other algorithms.

My question is: which algorithms might give better results on ultrasound images?

Interesting.

To be honest, I haven't heard much about applying artificial intelligence to ultrasonography.

I used to work as a clinician in a hospital. I think abdominal echo (ultrasound) is a little harder for doctors to access than upper gastrointestinal endoscopy.

In hospitals, ultrasound technicians are the most skilled at ultrasound examinations; doctors are not very skilled at them. So I think research on ultrasound should be driven by ultrasound technicians. In addition, abdominal ultrasound varies greatly from person to person: it depends on the condition of the patient and on the skill of the examiner. Objective evaluation is not as easy as with CT.

I have tried applying YOLOv3 to upper gastrointestinal endoscopy images before, but I have never tried abdominal echo.

I don't know much about algorithms, so it's difficult to answer your question. However, the most difficult part of a machine learning project is creating the dataset, not choosing the algorithm. If you already have a good dataset of abdominal echo images, that's great: you can try the algorithms one by one.

I'd like to know your position. Are you a doctor? A clinical laboratory technician? A programmer or an engineer? An abdominal echo developer? Or a hobbyist? Either way, I hope you succeed.

Thank you for your good wishes.

I am a PhD student in computer science.

As you may know, a student reads and collects many ideas.

Some of them get implemented, and some are rejected by the supervisor.

So I'm trying to gather information about new algorithms and read about them.

As for the medical dataset: not long ago I started going to medical centers to collect images, but unfortunately most of them send me to government hospitals.

I live in a bureaucratic republic, so this step takes a great deal of time.

Of course, if I obtain a medical dataset, I will register it and upload it to the Internet.

Thank you again.

Hello Kojix2

I am getting this error:

```
`predict': wrong number of arguments (given 0, expected 1) (ArgumentError)
```

on this line:

```ruby
output = model.predict(input_1: image_data, image_shape: image_size)
```

Please help me out if you are available.

Very easy.

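Simply pass the inputs as a Hash instead of keyword arguments: `model.predict({ input_1: image_data, image_shape: image_size })`.

Ruby 3.0 changed how keyword arguments are handled (they are no longer converted into a positional Hash automatically), so that is probably the cause.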
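A minimal sketch of what goes wrong, assuming `predict` takes the input feed as a required positional argument followed by keyword options. `FakeModel` below is a hypothetical stand-in with a signature like `predict(input_feed, output_names: nil, **run_options)`, not the onnxruntime gem itself; check the installed gem's source for its real signature. On Ruby 3.0+, bare `key: value` pairs are keyword arguments, so they land in `**run_options` and the positional `input_feed` is missing.

```ruby
# Hypothetical stand-in for OnnxRuntime::Model#predict;
# the real gem's signature is assumed to be similar, not confirmed here.
class FakeModel
  def predict(input_feed, output_names: nil, **run_options)
    # Report which input names arrived in the positional Hash.
    input_feed.keys
  end
end

model = FakeModel.new
image_data = [[[[0.0]]]]
image_size = [[416, 416]]

# Braces make the inputs one positional Hash: works on Ruby 2.x and 3.x.
p model.predict({ input_1: image_data, image_shape: image_size })

# Without braces, Ruby 3.0+ treats the pairs as keyword arguments,
# input_feed is missing, and ArgumentError is raised.
begin
  model.predict(input_1: image_data, image_shape: image_size)
rescue ArgumentError => e
  puts e.message
end
```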

Displaying frames in real time is easy to do with Ruby/Tk or Ruby/GTK, but the question is how to connect a webcam. I haven't done this recently, so I don't know much about this area. I used to use ruby-opencv, but that library does not support OpenCV 3.

Does that work for you?

Yes, it worked.

Thank you very much.

And sorry to bother you with such a silly thing.

I just started learning Ruby, and there aren't as many resources for Ruby as for Python, so I'm finding it a little hard to learn.

I tried to install ruby-opencv, but I wasn't able to install it either. Maybe it doesn't support my current Ruby version.

Anyway, thanks a lot.

I am from New Delhi, India.

Nice talking with you.