Provisioning a Kubernetes cluster is relatively easy. However, each new cluster is the beginning of a very long journey, and every cluster you add to your Kubernetes fleet increases management complexity. In addition, many enterprises struggle to keep up with a rapidly growing number of Kubernetes clusters spread across on-prem, cloud, and edge locations, often with diverse Kubernetes configurations and different tools in each environment.

Fortunately, there are a number of K8s best practices that will help you rein in the chaos, increase your Kubernetes success, and prepare you to cope with fast-growing and dynamic Kubernetes requirements. This blog post describes six strategic best practices that will put you on the path to successfully managing a Kubernetes fleet.

Best Practice 1: Think Hybrid and Multi-Cloud

The pandemic has been a reality check for organizations of all sizes, establishing the value of cloud computing and cloud-native development and accelerating their adoption.

Because Kubernetes workloads can be highly portable, you have the option to deploy workloads in any cloud to deliver an optimal experience for your customers and employees — where optimal may mean the best performance with the lowest network latency or the ability to leverage a differentiated service native to a particular cloud.

While hybrid and multi-cloud Kubernetes cluster deployments provide advantages to your business and have become a best practice, they increase the complexity of your Kubernetes fleet. However, the right SaaS tools offer significant advantages in hybrid and multi-cloud environments, enabling you to operate across multiple public clouds and data center environments with less friction while allowing a greater level of standardization.

Best Practice 2: Emphasize Automation

Managing Kubernetes with kubectl commands and a few scripts is not too difficult when you only have a few clusters, but it simply doesn’t scale. Automating and standardizing common cluster and application operations allows you to manage more clusters with less effort while avoiding misconfigurations due to human errors. For this reason, automation is considered a best practice for gaining control of your Kubernetes fleet.
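To make the idea of standardization concrete, here is a minimal sketch of a fleet-wide drift check: it compares each cluster's settings against a single baseline and reports every deviation. The `ClusterConfig` type, the add-on names, and the version strings are hypothetical placeholders; in practice the per-cluster data would come from your clusters' APIs or your management tool rather than a hard-coded list.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterConfig:
    """Hypothetical, simplified snapshot of one cluster's settings."""
    name: str
    k8s_version: str
    addons: dict[str, str] = field(default_factory=dict)  # add-on name -> version


def find_drift(baseline: ClusterConfig, fleet: list[ClusterConfig]) -> dict[str, list[str]]:
    """Return {cluster name: [differences from baseline]} for clusters that drift.

    Add-ons present on a cluster but absent from the baseline are ignored.
    """
    report: dict[str, list[str]] = {}
    for cluster in fleet:
        issues = []
        if cluster.k8s_version != baseline.k8s_version:
            issues.append(f"k8s_version {cluster.k8s_version} != {baseline.k8s_version}")
        for addon, expected in baseline.addons.items():
            actual = cluster.addons.get(addon)
            if actual is None:
                issues.append(f"missing add-on {addon}")
            elif actual != expected:
                issues.append(f"{addon} {actual} != {expected}")
        if issues:  # compliant clusters are left out of the report
            report[cluster.name] = issues
    return report


if __name__ == "__main__":
    # Illustrative values only.
    baseline = ClusterConfig("baseline", "1.29", {"ingress-nginx": "4.10.0", "cert-manager": "1.14.4"})
    fleet = [
        ClusterConfig("prod-us", "1.29", {"ingress-nginx": "4.10.0", "cert-manager": "1.14.4"}),
        ClusterConfig("edge-01", "1.27", {"ingress-nginx": "4.8.0"}),
    ]
    for name, issues in find_drift(baseline, fleet).items():
        print(name, issues)
```

Running a check like this on a schedule turns "every cluster should look the same" from a convention into something you can verify and alert on.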

Many organizations are adopting GitOps, bringing the familiar capabilities of Git tools to infrastructure management and continuous deployment (CD). In last year’s AWS Container Security Survey, 64.5% of the respondents indicated they were using GitOps already.

With GitOps, a Git repository serves as the source of truth for the desired state of your infrastructure and applications: when changes are committed to (or reverted in) the repository, they are automatically applied to (or rolled back from) the production environment, making deployments fast and reliable. GitOps was the subject of a recent Rafay blog post, GitOps Principles and Workflows Every Team Should Know.
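The core of a GitOps agent is a reconciliation step that compares the desired state from Git with the live state of the cluster. The sketch below is a simplified, hypothetical illustration of that step, not the algorithm of any particular tool such as Argo CD or Flux: resources are keyed by strings like `Deployment/web`, and whole specs are compared with `==`, whereas real agents compare only the fields declared in Git and ignore fields the server populates.

```python
def reconcile_plan(desired: dict[str, dict], live: dict[str, dict]) -> list[tuple[str, str]]:
    """Return the (action, resource) steps that make `live` match `desired`.

    `desired` is what the Git repository declares; `live` is what the cluster
    currently runs. Keys like "Deployment/web" are a simplification.
    """
    plan: list[tuple[str, str]] = []
    for key in sorted(desired):
        if key not in live:
            plan.append(("create", key))
        elif desired[key] != live[key]:
            plan.append(("update", key))
    for key in sorted(live):
        if key not in desired:
            # Pruning: a resource removed from Git is removed from the cluster
            # (optional in many real tools).
            plan.append(("delete", key))
    return plan


if __name__ == "__main__":
    desired = {"Deployment/web": {"replicas": 3, "image": "web:1.2"}, "Service/web": {"port": 80}}
    live = {"Deployment/web": {"replicas": 2, "image": "web:1.2"}, "ConfigMap/old": {"k": "v"}}
    print(reconcile_plan(desired, live))
    # [('update', 'Deployment/web'), ('create', 'Service/web'), ('delete', 'ConfigMap/old')]
```

Because a rollback is just a revert commit in Git, the next reconciliation computes the plan that restores the previous state, so deploys and rollbacks share the same code path.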

But GitOps is not the only tool to increase Kubernetes automation. Some Kubernetes management tools utilize Open Policy Agent (OPA), a general-purpose policy engine used to enforce policies in microservices, Kubernetes, CI/CD pipelines, API gateways, etc. OPA can be used to enable policy-based management across your entire K8s fleet. See Managing Policies on Kubernetes using OPA Gatekeeper to learn more.
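Gatekeeper policies are written in Rego inside a ConstraintTemplate, but the decision logic itself is easy to show in plain Python. This hypothetical sketch evaluates two example rules against a Pod manifest represented as a dict: every Pod needs an `owner` label, and every container image must be pinned to a digest or to a tag other than `latest`. It only illustrates what an admission policy checks; it is not OPA's API.

```python
def violations(pod: dict) -> list[str]:
    """Check a Pod manifest against two example (hypothetical) admission rules."""
    msgs: list[str] = []

    # Rule 1: every Pod must declare an owner label.
    labels = pod.get("metadata", {}).get("labels", {})
    if "owner" not in labels:
        msgs.append("pod must have an 'owner' label")

    # Rule 2: images must be pinned to a specific tag or digest.
    for container in pod.get("spec", {}).get("containers", []):
        image = container.get("image", "")
        if "@" in image:
            continue  # pinned by digest, e.g. nginx@sha256:...
        last = image.rsplit("/", 1)[-1]  # drop registry host[:port] and path
        if ":" not in last or last.endswith(":latest"):
            msgs.append(f"container '{container.get('name')}' uses unpinned image '{image}'")
    return msgs


if __name__ == "__main__":
    pod = {
        "metadata": {"name": "web"},
        "spec": {"containers": [{"name": "web", "image": "nginx:latest"}]},
    }
    for msg in violations(pod):
        print("DENY:", msg)
```

An admission controller would reject the Pod if the list is non-empty; running the same rules centrally is what makes policy consistent across the fleet.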

Best Practice 3: “Zero” In on Security

Security for your Kubernetes fleet should never be an afterthought. Mission-critical clusters and applications running in production require the highest level of security and control. In addition, as your fleet grows, your enterprise may be exposed to new security risks.

Applying zero-trust principles is the best practice for securing your K8s environment. Kubernetes provides the building blocks needed for zero trust, such as role-based access control (RBAC), network policies, and admission control. Unfortunately, keeping all of these individual elements correctly configured and aligned across dozens of clusters is a big challenge.
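As one example of those building blocks, a common zero-trust starting point is a default-deny NetworkPolicy in every namespace, with traffic then allowed explicitly. This sketch generates such policies as JSON, which `kubectl apply -f` accepts, for a list of namespaces; the policy name `default-deny-all` and the namespace names are illustrative assumptions. Keep in mind that NetworkPolicies are enforced only if the cluster's network plugin supports them, and that denying all egress also blocks DNS until you add an allow rule for it.

```python
import json


def default_deny_policy(namespace: str) -> dict:
    """NetworkPolicy that denies all ingress and egress for every pod in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},  # hypothetical name
        "spec": {
            "podSelector": {},  # empty selector = all pods in the namespace
            "policyTypes": ["Ingress", "Egress"],  # no rules listed => nothing allowed
        },
    }


def fleet_manifest(namespaces: list[str]) -> str:
    """Bundle one policy per namespace into a v1 List that kubectl can apply."""
    return json.dumps(
        {
            "apiVersion": "v1",
            "kind": "List",
            "items": [default_deny_policy(ns) for ns in namespaces],
        },
        indent=2,
    )


if __name__ == "__main__":
    # Hypothetical namespaces.
    print(fleet_manifest(["payments", "frontend"]))
```

Writing the output to a file and applying it to each cluster (for example with `kubectl --context <ctx> apply -f`) keeps this baseline identical across the fleet.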

Best Practice 4: Maximize Monitoring

There are a variety of open source solutions for Kubernetes monitoring. However, as with everything else, monitoring and visibility become more challenging as the number of clusters and cloud environments increases.

The best practice is to provide centralized logging with a base level of monitoring, alerting, and visualization across your Kubernetes fleet. Many organizations implement this on their own using open source tools such as Prometheus and Grafana.
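With Prometheus, one common way to centralize metrics is federation: a central Prometheus scrapes the `/federate` endpoint of each cluster's Prometheus. This sketch builds that scrape configuration and attaches a `cluster` label to each target so dashboards and alerts can filter by cluster. The cluster names and addresses are hypothetical, and the `match[]` selectors mirror the example in the Prometheus federation documentation; adjust them to the series you actually need. The output is JSON, which YAML parsers generally accept, so it can serve as a starting point for `prometheus.yml`.

```python
import json


def federation_config(clusters: dict[str, str]) -> dict:
    """Build a central Prometheus scrape config that federates each cluster.

    `clusters` maps a (hypothetical) cluster name to the host:port of that
    cluster's Prometheus server.
    """
    return {
        "scrape_configs": [
            {
                "job_name": "federate",
                "honor_labels": True,  # keep labels as reported by the source Prometheus
                "metrics_path": "/federate",
                "params": {"match[]": ['{job="prometheus"}', '{__name__=~"job:.*"}']},
                # One target per cluster, tagged so queries can filter by cluster.
                "static_configs": [
                    {"targets": [addr], "labels": {"cluster": name}}
                    for name, addr in sorted(clusters.items())
                ],
            }
        ]
    }


if __name__ == "__main__":
    cfg = federation_config({"prod-us": "prom-us.example.internal:9090", "edge-01": "10.0.0.5:9090"})
    print(json.dumps(cfg, indent=2))
```

Pointing a single Grafana data source at the central Prometheus then lets one dashboard cover the whole fleet, for example with a `cluster` template variable.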

However, in keeping with Best Practice 5 (Opt for Software as a Service), there are SaaS offerings that will do the heavy lifting for you, providing everything you need in one place with uniform tools across diverse environments. You can learn more about visibility and monitoring in the recent blog post, Best Practices, Tools, and Approaches for Kubernetes Monitoring.

Best Practice 5: Opt for Software as a Service

A complete Kubernetes environment has a lot of moving parts. Once you add developer tools, management tools, monitoring, security services, and so on, it becomes a significant and ongoing investment of time and energy, and substantial skill may be needed to keep all of those tools up to date and operating as expected. Therefore, as your environment scales, it's best to replace software you have to install and manage yourself with services wherever possible.

Managed Kubernetes

For example, most enterprises make use of managed Kubernetes services from public cloud providers such as AWS (EKS), Azure (AKS), and Google Cloud (GKE) to simplify cluster deployment, position applications closer to customers, and provide the ability to dynamically scale up to address peak loads without requiring a lot of CapEx. A recently released study from the CNCF found that 79% of respondents use public cloud Kubernetes services. Most public clouds also offer various related services that are easy to consume, complement your Kubernetes efforts, and accelerate development.

SaaS tools

In addition, there are an increasing number of software-as-a-service (SaaS) and hosted solutions that provide Kubernetes management, monitoring, security, and other capabilities. The SaaS model, in particular, provides fast time to value, robustness and reliability, flexible pricing, and ease of use.

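Choosing SaaS tools to address business and operational needs can reduce your reliance on hard-to-find technical experts. It's also worth noting that, although many of us use the terms “hosted” and “SaaS” somewhat interchangeably, they are not the same thing. A hosted solution typically runs a copy of the software on the provider's infrastructure, but upgrades and configuration may still be your responsibility; a true SaaS offering is operated, upgraded, and scaled by the vendor. Choose wisely.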

Best Practice 6: DI-Why?

Kubernetes has a reputation for being challenging to deploy and operate. When your organization was just getting started with Kubernetes, it may have made sense to do it yourself (DIY) — building dedicated infrastructure for Kubernetes, compiling upstream code, and developing your internal tools — but as Kubernetes becomes more and more critical to your production operations, it can no longer be treated as a science project. So why make Kubernetes harder than it has to be?

An entire ecosystem of services, support, and tools has grown up around Kubernetes to help simplify everything from deployment to development to operations. The right services, tools, and partners will allow you to accomplish more with much less toil. Continuing to roll your own Kubernetes wastes developer and operations time and talent that could be spent adding more value elsewhere in your business.

Kubernetes Best Practices at Rafay

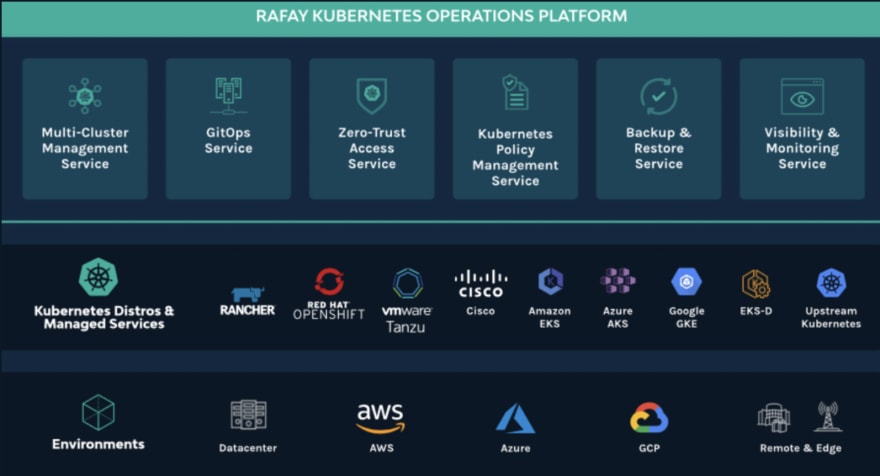

At Rafay we’ve built our entire platform around codifying Kubernetes best practices in order to streamline management of large K8s fleets. Our Kubernetes Operations Platform (KOP) unifies lifecycle management for both clusters and containerized applications, incorporating all of the best practices discussed:

Software as a Service: Rafay’s SaaS-first approach enables your organization to gain efficiencies from Kubernetes almost immediately, speeding digital transformation initiatives while keeping operating costs low.

Hybrid and multi-cloud: Rafay KOP works across cloud, data center, and edge environments, allowing you to easily deploy and operate workloads wherever needed.

Monitoring and Visibility: Rafay’s Visibility and Monitoring Service makes it simple to visualize, monitor, and manage the health of your clusters and applications.

Automation: Rafay simplifies automation with our GitOps Service. Our Multi-Cluster Management Service incorporates cluster templates, cluster blueprints, and workload templates to ensure adherence to Kubernetes deployment best practices, and our Kubernetes Policy Management Service utilizes OPA.

Security: Rafay’s Zero-Trust Access Service centralizes access control for your entire fleet with automated RBAC. It ensures that Kubernetes security best practices are applied and maintained in multi-cluster, multi-cloud deployments.

Rafay delivers the fleet management capabilities you need to ensure the success of your Kubernetes environment, helping you rationalize and standardize management across your entire fleet of K8s clusters and applications.

Ready to find out why so many enterprises and platform teams have partnered with Rafay for Kubernetes fleet management? Sign up for a free trial.
