Search results are not helpful

Searching for things on the web has steadily gotten worse[1][2]. Sites with so-called “content strategies” fill the results, and each article is a poorly researched pile of garbage, haphazardly copied from more legitimate sites.

Some of these “articles” even seem to be based on each other, rewritten in odd ways to avoid Google’s plagiarism penalty, like a game of telephone. This is also a side effect of using AI writers, which are trained on existing search results.

And there are bad, ad-filled mirrors of Stack Overflow, GitHub issues, NPM packages, and Reddit threads that somehow rank higher than the actual sources.

I’m not going to name any of these sites because I don’t want to boost their rank any further, but I’m sure you’ve seen them.

Fed up with the SEO spam, I’ve found some ways to make my searching more productive.

Filter your results

I’ve been blocking entire domains from my results. It’s tedious, and my list grows every time I search, but my searches have been a lot more productive. You can do this with a browser extension, a different search engine, or by editing your search query.

Browser extensions

Let’s Block It!

Let’s Block It! is a handy site for generating content filters for uBlock Origin. You can create an account and share your list, which others can add just like any other uBlock Origin list.

You’ll want to use their tool to make your own search results filter, which provides the option to easily add in some exhaustive filters from the uBlock-Origin-dev-filter project.

The filters support result filtering on Bing, DuckDuckGo, Google, Kagi, Searx, and Startpage.

They also provide a handy CLI utility so you can generate and self-host your lists without using their website.

Unfortunately, it doesn’t have a quick way to block sites straight from the results, and I’m a filthy Safari user, so uBlock Origin isn’t an option for me.

uBlacklist

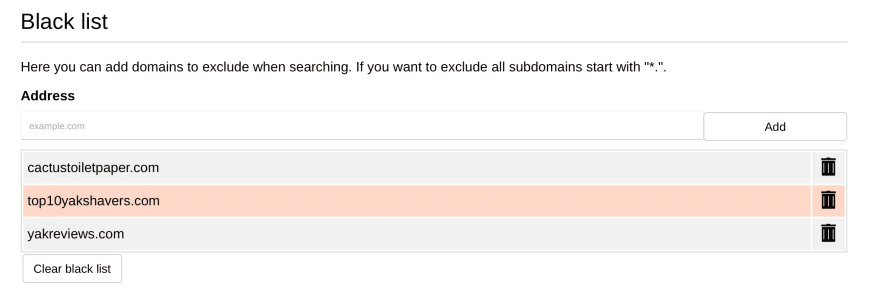

uBlacklist is what I’m currently using. It supports Chrome, Firefox, and Safari, and you can subscribe to other users’ blacklists.

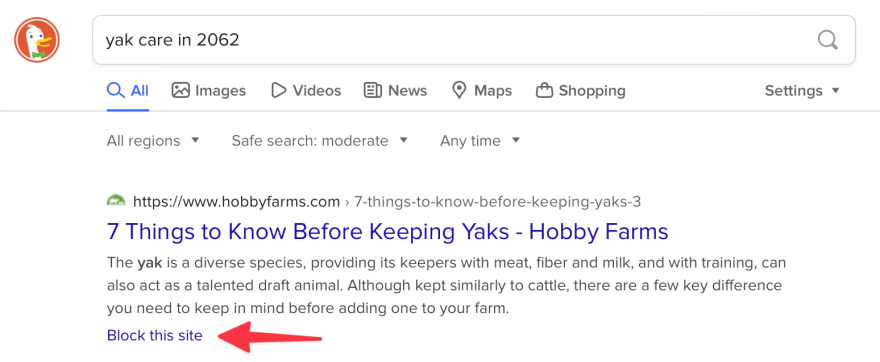

When installed, you get a “Block this site” link under each of your results, like this:

You can easily export your blacklist to a text file - here’s mine currently. And if you use Google Drive or Dropbox (I don’t), you can sync your blacklist straight from the extension.

The extension supports Bing, DuckDuckGo, Google, and Startpage, with partial support for Ecosia and Qwant.
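If you want to reuse an exported blacklist in another tool, pulling the bare domains out of it takes only a few lines. This is a minimal sketch, assuming the export has one rule per line in match-pattern form (something like `*://*.example.com/*`) and that lines starting with `#` are comments. The function name `domains_from_rules` is made up for this example, and anything that doesn't look like a simple match pattern is skipped.

```python
import re

# Assumed rule shape: "*://*.example.com/*" or "*://example.com/*".
_RULE = re.compile(r"^\*://(?:\*\.)?([a-z0-9.-]+)/\*$", re.IGNORECASE)


def domains_from_rules(text: str) -> list[str]:
    """Return the unique domains found in match-pattern rules, in file order."""
    seen: dict[str, None] = {}  # dict keeps insertion order and drops repeats
    for line in text.splitlines():
        rule = line.strip()
        if not rule or rule.startswith("#"):
            continue  # skip blank lines and (assumed) comment lines
        match = _RULE.match(rule)
        if match:  # anything else, such as a regex rule, is ignored
            seen.setdefault(match.group(1).lower(), None)
    return list(seen)
```

Calling `domains_from_rules(open("blacklist.txt").read())` (the file name is a placeholder) would give you a plain list of domains to work with.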

Edit your search query

If you can’t install extensions, and alternative search engines are blocked, bookmarking a query with a bunch of unwanted domains might be your next best option.

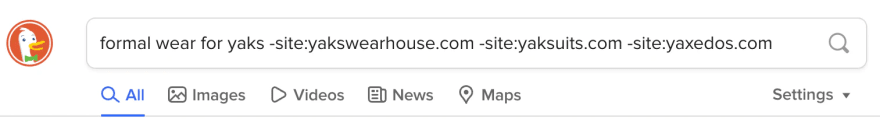

You can add -site:example.com to most search engines, and just keep adding more -site: parameters as needed. Then before you put in your search term, bookmark the page, and you’ll be able to reuse it in the future.
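Typing out a long chain of `-site:` terms by hand gets old quickly, so here is a rough sketch of generating the bookmark URL instead. The helper names are made up, the domains are placeholders, and the DuckDuckGo base URL with its `q` parameter is an assumption you would swap for your own search engine.

```python
from urllib.parse import urlencode


def build_query(terms: str, blocked: list[str]) -> str:
    """Append one -site: exclusion per blocked domain to the search terms."""
    exclusions = " ".join(f"-site:{domain}" for domain in blocked)
    return f"{terms} {exclusions}".strip()


def search_url(terms: str, blocked: list[str],
               base: str = "https://duckduckgo.com/") -> str:
    """Build a bookmarkable URL; `base` and the `q` parameter are assumptions."""
    return f"{base}?{urlencode({'q': build_query(terms, blocked)})}"


# Placeholder domains, not a real blocklist.
print(search_url("python csv", ["example.com", "spam.test"]))
```

Passing an empty string for `terms` gives a URL containing only the exclusions, which matches the "bookmark it before you type your search term" approach.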

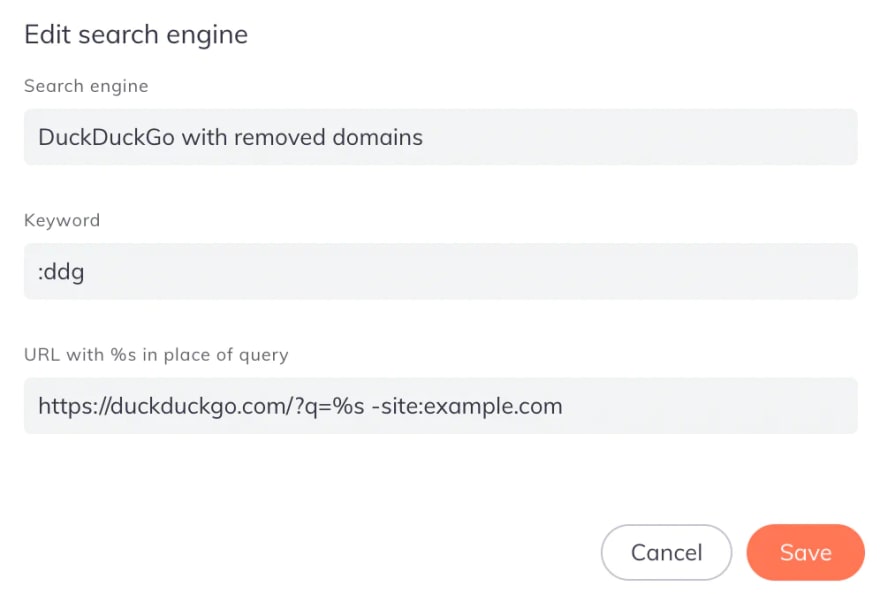

You can also edit your browser’s default search query3. Here’s an example with DuckDuckGo in Brave (note the space between %s and -site):
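In text form, such a template might look like the output of the sketch below. It assumes the browser substitutes `%s` with whatever you type, and that the DuckDuckGo URL shape is `https://duckduckgo.com/?q=...`. The function name and domains are placeholders. Note that the template is deliberately not URL-encoded, because encoding would mangle the `%s` placeholder.

```python
def search_template(blocked: list[str],
                    base: str = "https://duckduckgo.com/?q=") -> str:
    """Build a custom search engine URL with a literal %s placeholder."""
    exclusions = " ".join(f"-site:{domain}" for domain in blocked)
    # Keep a space between %s and the first -site: so the exclusions
    # are not glued onto the last word of the search terms.
    return f"{base}%s {exclusions}".rstrip()


# Placeholder domains, not a real blocklist.
print(search_template(["example.com", "spam.test"]))
```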

Alternative search engines

MetaGer

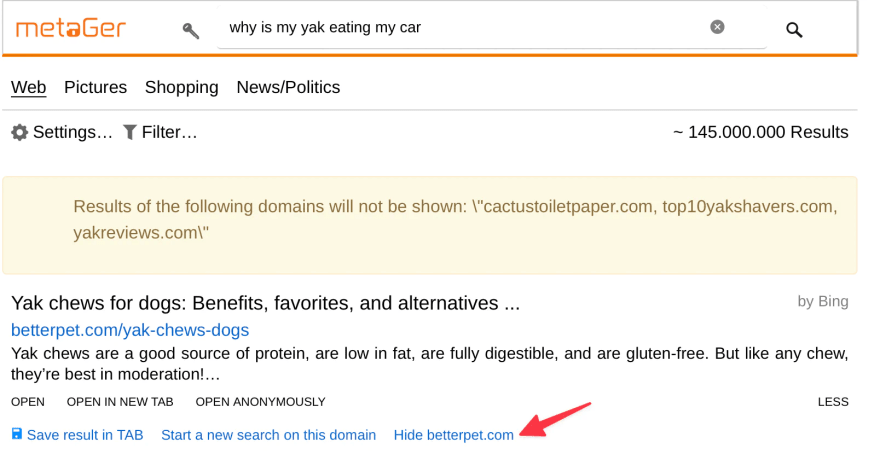

MetaGer is the only publicly available search engine I’ve found that lets you set a domain blacklist. You can filter results straight from the page, but those filters are temporary.

However, MetaGer has a configurable domain blacklist in settings, and they’ll give you a URL with all your settings in it that you can use to easily copy your settings to other browsers or devices, without an account.

Other search engines

It looks like Kagi supports domain blocking, but it’s invite-only and I haven’t been able to test it for myself. I’m also not too keen on paying for a search engine eventually, but if it makes my life easier, sure.

Self-hosted options

The only self-hosted search engine I could find that supports blacklists is YaCy, but it requires Java (ugh). The wiki page on the blacklists feature is in German.

Searx or its fork, SearxNG, might be another option, but either it doesn’t have a blacklist feature or it’s not documented. I wasn’t able to install it and find out for myself.

Use additional search terms

It’s impossible to find a legitimate review on something outside of a major media site, and I can’t always depend on those reviews either. I’ve been adding “reddit” to a lot of my search terms to try and get crowdsourced opinions. This isn’t ideal because of their slow website and dark patterns, but I do get better results.

Lim Swee Kiat recently made a site that will do this for you, called Redditle.

How can search engines fix the problem?

A better question is, what’s their incentive to fix this? If all the big search engines show the same garbage results (and they do, in my experience), why spend resources making your engine different? Especially when it makes you money because you also own the ads on the low quality sites?

The answer is, there is no incentive, at least none that I’m aware of for the current problem. It’s up to us, the users, to fix it ourselves.

That said, here are some ideas I had:

User blocklists that influence the algorithm

Google used to have a Chrome extension that allowed you to block domains directly from the search results list. While they insisted it didn’t have a direct effect on the algorithm, it did report your list of blocked domains to Google, so I like to think it at least had some effect. But like most Google products, they killed it a few years later in 2013.

Bring it back, and then weight the results to prevent abuse. I’m sure there’s a cool techie term for it, but basically, only remove domains if the number of users blocking the domain reaches a certain percentage of its clicks from results.

Alternatively, take it a step further and implement up/down votes, similar to link aggregators.
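To make the percentage idea concrete, here is a toy sketch. Everything in it is hypothetical: the 5% threshold, the minimum click count (added so a handful of people can't sink a rarely clicked site), and the function name are illustration only, not anything a search engine is known to use.

```python
def should_remove(blocks: int, clicks: int,
                  threshold: float = 0.05, min_clicks: int = 1000) -> bool:
    """Decide whether a domain has been blocked often enough to drop it.

    blocks: number of users who blocked the domain
    clicks: number of clicks the domain received from search results
    """
    if clicks < min_clicks:
        return False  # too little traffic to judge, and too easy to game
    return blocks / clicks >= threshold


print(should_remove(blocks=60, clicks=1000))  # 6% of clicks led to a block
print(should_remove(blocks=40, clicks=1000))  # 4% of clicks led to a block
```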

Expand the results review team

I’m sure both Google and Bing have a team of people whose sole job is to search for things and test the results. I think this team should be dramatically expanded, and could even be outsourced as an invite-only program to the users if you want to cut costs.

Both of these ideas will need some abuse protections put in place, so people can’t game it to downrank their competitors.

Improve the algorithm to better detect low quality content

While knowing absolutely nothing about developing algorithms, I can definitely say this would be extremely difficult to pull off and would only be subject to more exploitation once the spammers figure it out.

Most of these SEO spammers use content farms and AI writers, both of which create content Google deems “high quality”, with images, frequent use of headings, and related keywords integrated into the text. And it’s all perfectly readable, often with better grammar skills than I will ever have.

The AI writers can integrate with your existing SEO tools, and outline a basic structure based on your targeted keywords with headings and even the intro all pre-written for you. Then as you write, the AI will suggest sentences or entire paragraphs based on the preceding heading. I was amazed while testing this out on a short-lived SEO spam experiment of my own last year.

I have no idea how you would combat this with algorithm updates, because you’d need specific knowledge on the subject of the content to tell the difference between a casual researcher/AI writer and legitimate content.

Maybe it should be based on your site’s actual subject. For example, if you’re a certain purple-themed WordPress host, maybe you shouldn’t be ranking well with articles about how to switch your default search engine.

Plagiarism is a lot easier to detect though. I’m not sure why all these bad mirrors of big sites haven’t been penalized.
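As an illustration of why copied text is comparatively easy to spot, here is a sketch of one common textbook approach: split each page into overlapping word sequences ("shingles") and measure how much the two sets overlap (Jaccard similarity). This is a swapped-in example technique, not a claim about how any search engine actually detects mirrors, and the function names are made up.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences of length `size`."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a and not set_b:
        return 0.0  # both texts are too short to compare
    return len(set_a & set_b) / len(set_a | set_b)


original = "the quick brown fox jumps"
mirror = "the quick brown fox sleeps"
print(jaccard(original, mirror))  # 2 shared shingles out of 4 distinct ones
```

A straight mirror of a Stack Overflow answer would score close to 1.0 against the original, while the reworded "game of telephone" articles would score much lower, which is part of why they are harder to catch.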

Send me more ideas

If you have any ideas on additional browser extensions, search engines, or self-hosted options, please send them my way using the “Reply via email” button below and I’ll update this post.

- https://www.surgehq.ai//blog/google-search-is-falling-behind

- I thought there was a utility to do this, but I couldn’t find one. Another idea for the backlog!
