This article is a follow up of the article 'what quarks is about':

Quarks - a new mindset ... (https://dev.to/lucpattyn/quarks-a-new-approach-with-a-new-mindset-to-programming-10lk).

There was another article about architecture, but over time the concept has been simplified, hence the new writeup.

Just to recap, Quarks is a fast information write and querying system and so far we have implemented a single isolated individual node. Now it is time for data replication and making multiple nodes work together for a scalable solution.

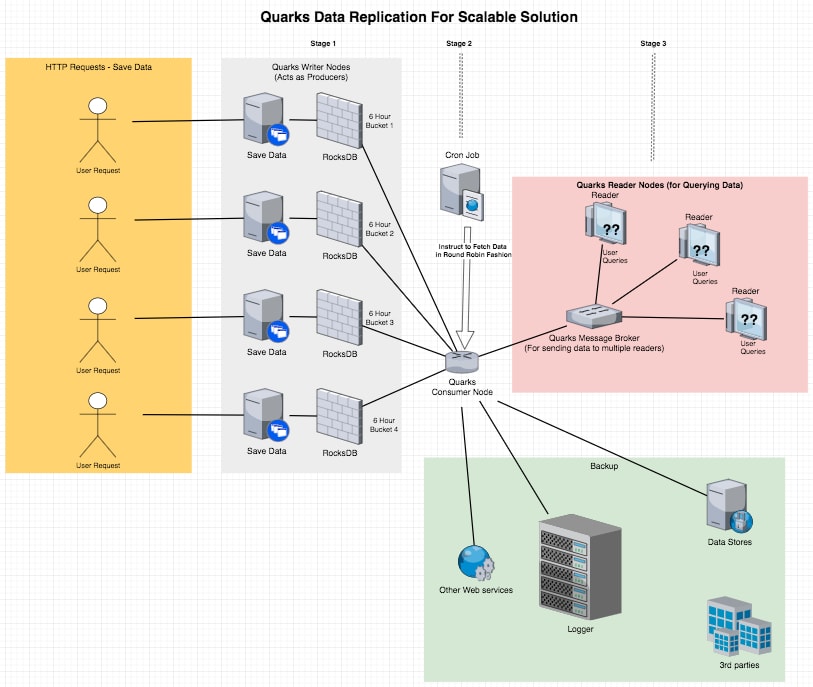

It would be easier to explain the whole system with a diagram. The diagram discusses 3 stages one after one for data saving and replication:

First and foremost we need a scalable system to save the data when there are frequent hits, which we are denoting as stage 1.

At this stage, we are using Quarks Writer nodes (we can use as many depending on the traffic) to save data.

Each node creates multiple rocksdb instances in bucket concept because later when we retrieve data from these buckets, we don't have to go over a lot of data. Once all the data of one bucket has been retrieved and replicated, we will delete the buckets, so not a lot of disk space is used. The diagram shows only one bucket per writer node for keeping the illustration clean, but actually each node will create multiple buckets. Once data saving starts, it is time for stage 2 to fetch data and replicate.

In stage 2, we run a cron job to control a Quarks Consumer node to go through all the available writer nodes in round robin fashion and fetch data in bulks. The consumer gets a bulk of data from node 1 (writer node of-course), sends it to a broker which serialises and maintain a message queue (message object is the data + instructions/hints of what to do with the data) to send to required destinations (the primary destination being the message broker, secondary being logging servers and third parties). Then the consumer picks node 2, performs same action and moves on to node 3 and so on. As data starts filling the message queue in broker node, it is time for stage 3.

In this stage, the broker picks messages from the queue, extracts data, sends to Reader Nodes which basically responds back to clients the data when requested. Quarks supports in memory data store, so if required can fetch and send date to clients really fast.

One thing to note is a single Writer Node don't have all the data. Multiple such nodes together holds the whole data.

The message broker is responsible for aggregating data and ensure individual reader nodes have all the data (since there cannot be inconsistency in data when queried).

Quarks is sharding-enabled by nature. You would be able to write logics in the broker (not implemented yet) to select the right readers for buckets of data based on sharding logic.

To re-iterate, simplicity is at the heart of Quarks, and it aims to build a scalable solutions for modern world software development in server side.

Top comments (3)

zeromq to the rescue with a very simple PUB SUB proxy :

stackoverflow.com/questions/425746...

//

// Simple message queuing broker in C++

// Same as request-reply broker but using QUEUE device

//

include "zhelpers.hpp"

int main (int argc, char *argv[])

{

zmq::context_t context(1);

}

// Broker

include

int main(int argc, char* argv[]) {

void* ctx = zmq_ctx_new();

assert(ctx);

void* frontend = zmq_socket(ctx, ZMQ_XSUB);

assert(frontend);

void* backend = zmq_socket(ctx, ZMQ_XPUB);

assert(backend);

int rc = zmq_bind(frontend, "tcp://:5570");

assert(rc==0);

rc = zmq_bind(backend, "tcp://:5571");

assert(rc==0);

zmq_proxy_steerable(frontend, backend, nullptr, nullptr);

zmq_close(frontend);

zmq_close(backend);

rc = zmq_ctx_term(ctx);

return 0;

}

// PUB

include

include

using namespace std;

using namespace chrono;

int main(int argc, char* argv[])

{

void* context = zmq_ctx_new();

assert (context);

/* Create a ZMQ_SUB socket /

void *socket = zmq_socket (context, ZMQ_PUB);

assert (socket);

/ Connect it to the host

localhost, port 5571 using a TCP transport */

int rc = zmq_connect (socket, "tcp://localhost:5570");

assert (rc == 0);

while (true)

{

int len = zmq_send(socket, "hello", 5, 0);

cout << "pub len = " << len << endl;

this_thread::sleep_for(milliseconds(1000));

}

}

// SUB

include

include

using namespace std;

int main(int argc, char* argv[])

{

void* context = zmq_ctx_new();

assert (context);

/* Create a ZMQ_SUB socket /

void *socket = zmq_socket (context, ZMQ_SUB);

assert (socket);

/ Connect it to the host localhost, port 5571 using a TCP transport */

int rc = zmq_connect (socket, "tcp://localhost:5571");

assert (rc == 0);

rc = zmq_setsockopt(socket, ZMQ_SUBSCRIBE, "", 0);

assert (rc == 0);

while (true)

{

char buffer[1024] = {0};

int len = zmq_recv(socket, buffer, sizeof(buffer), 0);

cout << "len = " << len << endl;

cout << "buffer = " << buffer << endl;

}

}