Lately, the topic of AI/ML has been growing at an absurd speed. However, what I see most are posts about chatbot agents (which, honestly, take about 20 minutes to develop) or "Vibe Coding" (...), so I thought I would share a little bit of this sensational universe that is machine learning. Today I want to offer a kind of introduction to this world full of strange terms and things that people seem to like to complicate.

Data and more data

I'm sure you've heard of neural networks. For a while that was all anyone talked about: how the computer is able to learn and adapt to a set of data. Like... how does Google know that I want to buy a hook if I searched for a fishing rod? How do the two relate?

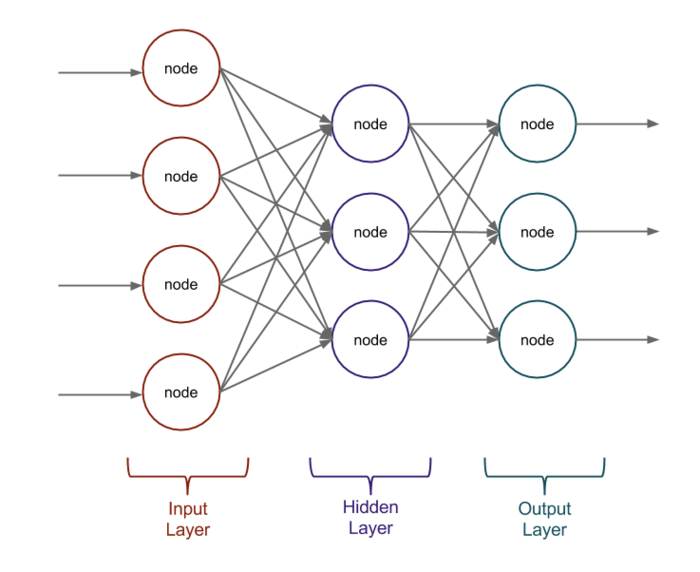

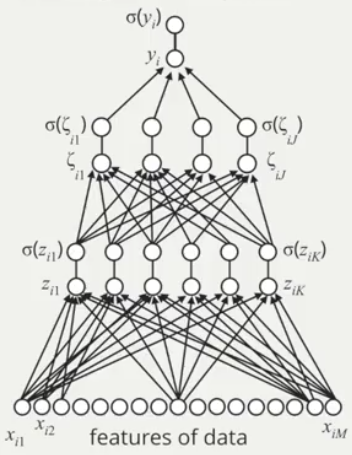

Well... that's where the X of the matter lies (literally): a neural network, this little beauty up there, learns to relate the characteristics of a dataset. Ok... what does that mean?

A dataset is literally, as the name says, a set of data. Let's say we have a streaming site and we need to recommend movies that the user will like. In this case, we would have a dataset like this:

# my_favorite_users_dataset_s2.csv

user_id,movie_id,rating

0,1034,5.0

0,1255,3.5

1,1034,4.5

...

In this example, we have users, movies, and a rating, which will be our main feature. In the field of ML, each of these characteristics is called a feature, and the process of understanding these features and how they relate to each other is called Feature Engineering.
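To make the idea concrete, here is a minimal sketch that reads the three sample rows above with Python's standard `csv` module and derives one very simple feature from them. The names `load_ratings` and `average_rating` are made up for this illustration, and the average rating per movie is just one example of a feature you could build, not necessarily what a real recommender would use.

```python
import csv
import io

# The three sample rows from the dataset above (the "..." rows are left out).
CSV_TEXT = """user_id,movie_id,rating
0,1034,5.0
0,1255,3.5
1,1034,4.5
"""

def load_ratings(text):
    """Parse the CSV text into a list of (user_id, movie_id, rating) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        (int(row["user_id"]), int(row["movie_id"]), float(row["rating"]))
        for row in reader
    ]

ratings = load_ratings(CSV_TEXT)

# A very simple engineered feature: the average rating each movie received.
ratings_by_movie = {}
for _user_id, movie_id, rating in ratings:
    ratings_by_movie.setdefault(movie_id, []).append(rating)

average_rating = {
    movie_id: sum(values) / len(values)
    for movie_id, values in ratings_by_movie.items()
}

print(ratings)
print(average_rating)
```

Notice that every value ends up as an `int` or a `float`, which is exactly the shape a model can work with.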

The more data, the better!

Do computers dream of electric sheep?

All this talk about datasets and features and whatever is fine, but... how does a neural network REALLY learn?

Now that you know how data appears in Machine Learning, let's start from the beginning with a super, super simple neural network that started to be used back in the 60s and we'll understand it from scratch.

This weird thing up there is a Logistic Regression Model. Each "level" of dots is a layer of the neural network, and each one has its role and makes a change to the original data. Let's break it down into parts:

First layer

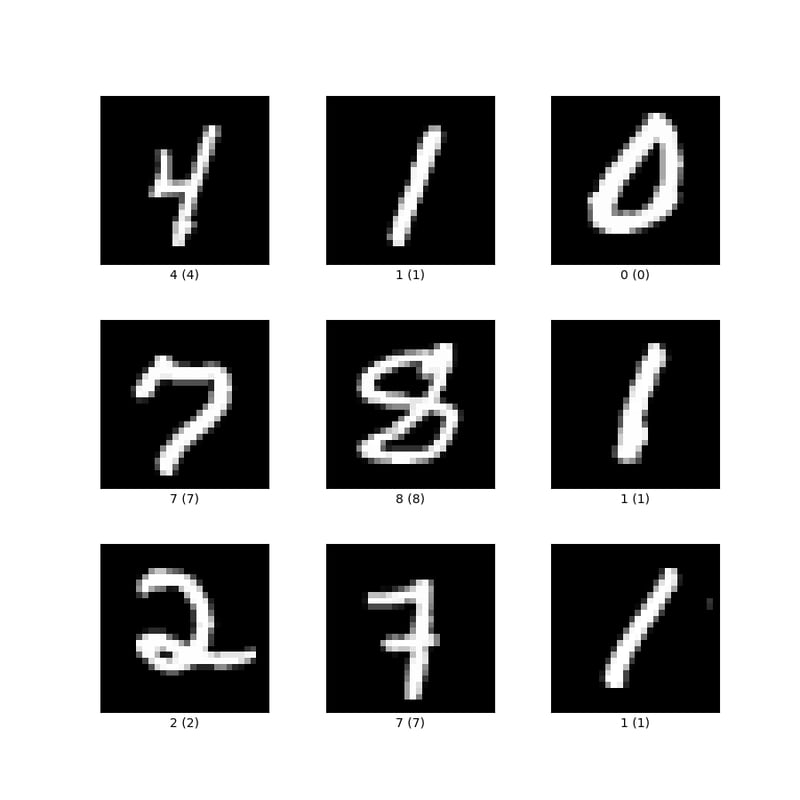

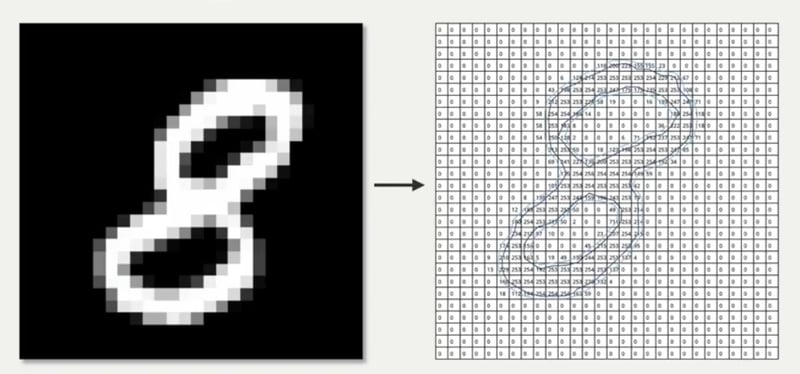

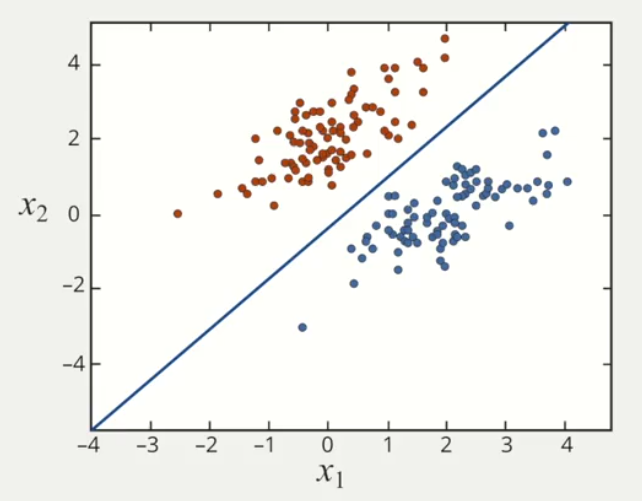

The first layer is where the data is ingested. In our mental exercise, we're going to use a super, super classic dataset in the area: MNIST, a set of images of handwritten digits. We're going to use this neural network to learn how to identify these digits, and the first layer is what receives them. Take a look at what they look like:

Hey... but in the first example it was a CSV, how am I going to ingest an image into the neural network?

This is where one of the most important and fundamental points of Machine Learning comes in: all ingested data must be numeric. You may have noticed that in the first example everything was a number. You could put that down to the fact that they were IDs and a numerical rating of the movie, but this is intentional!

Every neural network learns the relationships between the characteristics of a dataset through mathematical methods, which is why everything needs to be a number.

Okay, and how do we apply this to images?

Well, it's simpler than it seems: basically, we "pixelize" the image, that is, we transform it into a matrix of values, where each component of the matrix is a number that defines the intensity of the white color in the specific pixel:

The images will be input into the neural network in this way.
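As a rough sketch of this "pixelizing" step, the snippet below uses a hand-made 5x5 image instead of a real MNIST file (real MNIST images are 28x28, so they have 784 pixels). The helper names `normalize` and `flatten` are hypothetical, and scaling to the 0.0-1.0 range is a common convention rather than something this article prescribes.

```python
# A hypothetical 5x5 grayscale "image" of the digit 1.
# 0 is a black pixel, 255 is a fully white pixel.
IMAGE = [
    [0,   0, 255,   0, 0],
    [0, 255, 255,   0, 0],
    [0,   0, 255,   0, 0],
    [0,   0, 255,   0, 0],
    [0, 255, 255, 255, 0],
]

def normalize(image):
    """Scale every pixel from the 0-255 range to the 0.0-1.0 range."""
    return [[pixel / 255 for pixel in row] for row in image]

def flatten(image):
    """Turn the 2D matrix into one flat list, one value per input node."""
    return [pixel for row in image for pixel in row]

inputs = flatten(normalize(IMAGE))

print(len(inputs))   # 25 values, one per pixel
print(inputs[:5])    # the first row of the image
```

Each value in `inputs` would feed one of the balls in the first layer.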

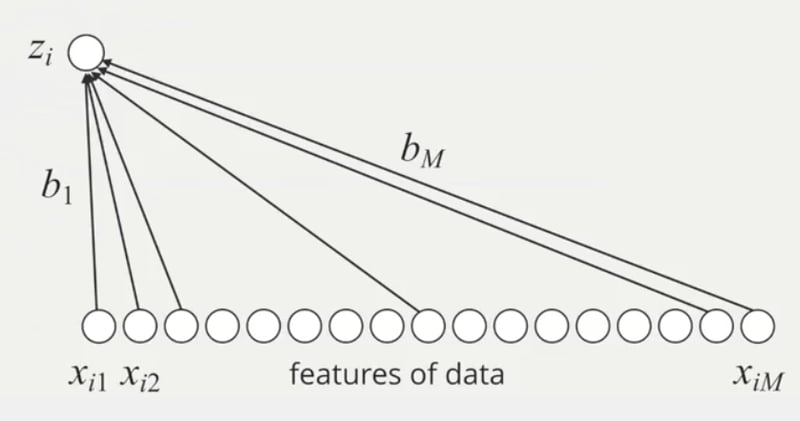

In the first layer we can see several balls. Together they hold what is mathematically represented by $X_{iM}$: a pixelated image, that is, a matrix of numerical values.

In this example, the neural network will be able to identify whether a number is a 0 or a 1. But how?

Second layer

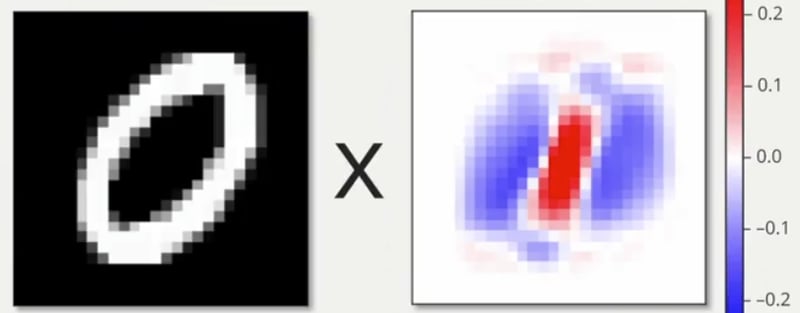

The second layer is where the magic really happens. There, a mathematical operation known as Logistic Regression takes place. It is capable of creating a filter based on the features/characteristics of the ingested data, and that filter will define whether the image sent in the neural network's "prompt" is a 0 or a 1. This will be explained soon; first, let's look at the idea behind the mathematical function.

To create a relationship between all the features of the dataset, we need to combine them and look for things in common. For example, the white pixels in images of the number 1 always seem to be in a certain region of the image, so you can apply a filter there, as in the image below:

This works for 0 too. 1 will be our positive result and 0 the negative one, so the neural network gives N positive points for each pixel of the "prompt" image that falls in the region it has learned the number 1 usually occupies, and N negative points for each pixel that falls in the regions of the number 0. Thus, we have a Binary Classification System. The filter above is how the neural network thinks.
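The point system can be sketched in a few lines. Everything here is invented for illustration: the 3x3 images, the hand-written `FILTER` and the `score` function. A trained model would learn the filter values instead of having them typed in.

```python
# Hypothetical 3x3 images: 1.0 is a white pixel, 0.0 is a black one.
ONE = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
]
ZERO = [
    [1.0, 1.0, 1.0],
    [1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
]

# Hand-written filter: +1 where a "1" usually has ink, -1 where a "0" does.
FILTER = [
    [-1.0, 1.0, -1.0],
    [-1.0, 1.0, -1.0],
    [-1.0, 1.0, -1.0],
]

def score(image, weights):
    """Add up pixel * weight over the whole image."""
    total = 0.0
    for image_row, weight_row in zip(image, weights):
        for pixel, weight in zip(image_row, weight_row):
            total += pixel * weight
    return total

print(score(ONE, FILTER))   # positive: the ink sits in the "1" region
print(score(ZERO, FILTER))  # negative: the ink sits in the "0" region
```

A positive total votes for the number 1 and a negative total votes for the number 0.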

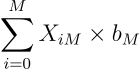

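Mathematically this can be seen here:

$$z_i = b_0 + X_{iM} \odot b_M$$

Where:

$z_i$ is the return of the Logistic Regression for image $i$;

$b_0$ is the bias of the neural network, a constant added to the result that shifts the point at which the decision flips between 0 and 1;

$X_{iM}$ is the pixelated image;

$b_M$ is the parameter that will be adjusted in the neural network. It defines the filter we saw above.

The circle-with-a-dot symbol (the inner product) defines that the following operation will be performed:

$$X_{iM} \odot b_M = \sum_{m=1}^{M} X_{im} \, b_m$$

In other words, for each of the $M$ parameters of the neural network, the pixel value $X_{im}$ is multiplied by the parameter $b_m$, and the products are added up. Basically, a relationship is created here!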
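In code, this operation is just a bias plus a sum of products. The sketch below assumes the image has already been flattened into a list of pixel values. The function name `linear_output` and the numbers are made up for the example.

```python
def linear_output(pixels, parameters, bias):
    """Return bias + sum of pixel * parameter (the inner product)."""
    if len(pixels) != len(parameters):
        raise ValueError("each pixel needs exactly one parameter")
    return bias + sum(x * b for x, b in zip(pixels, parameters))

# Hypothetical values for a tiny 4-pixel image.
pixels = [0.0, 1.0, 0.5, 1.0]
parameters = [-2.0, 1.5, 0.5, 1.0]
bias = -1.0

z = linear_output(pixels, parameters, bias)
print(z)  # -1.0 + (0.0 + 1.5 + 0.25 + 1.0) = 1.75
```

The result `z` is a single number per image, and it can be any value, positive or negative.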

Each parameter in a neural network starts with a random value and is adjusted as the epochs/generations pass during the training of the model. This means that the neural network is learning! In the end, we will have the filter that was generated by adjusting the M parameters.
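Here is a minimal sketch of that adjustment loop. It assumes plain gradient descent on the log loss, which is a common way to train a logistic regression, but the article does not say which update rule is used. The 3-pixel "images", the function names `train` and `predict`, and the values of `epochs` and `learning_rate` are all invented for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(pixels, parameters, bias):
    z = bias + sum(x * b for x, b in zip(pixels, parameters))
    return sigmoid(z)

def train(samples, labels, epochs=200, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    # Every parameter starts with a small random value.
    parameters = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in zip(samples, labels):
            # How far the current guess is from the right answer.
            error = predict(pixels, parameters, bias) - label
            # Nudge each parameter against the error, scaled by its pixel.
            parameters = [
                b - learning_rate * error * x
                for b, x in zip(parameters, pixels)
            ]
            bias -= learning_rate * error
    return parameters, bias

# Hypothetical 3-pixel "images": the middle pixel is lit for a 1,
# the outer pixels are lit for a 0.
samples = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.1, 0.9, 0.0], [0.9, 0.1, 1.0]]
labels = [1, 0, 1, 0]

parameters, bias = train(samples, labels)
print([round(b, 2) for b in parameters])
print(round(predict([0.0, 1.0, 0.0], parameters, bias), 3))
print(round(predict([1.0, 0.0, 1.0], parameters, bias), 3))
```

After training, the middle parameter ends up positive and the outer ones negative. That learned list is the "filter" for this toy problem.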

Phew... the most boring part of the math is over!

After all this, what will the neural network really return to us?

The results

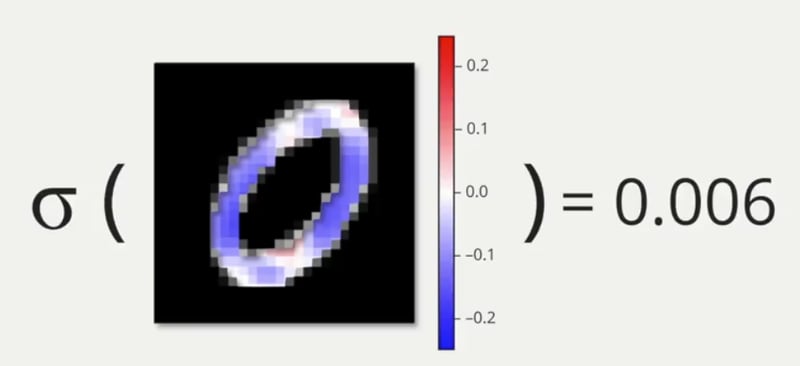

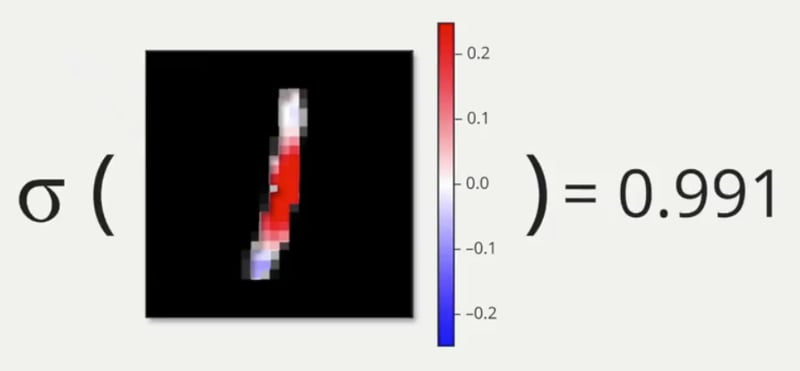

The last layer of this neural network is the final step of everything and determines what will be returned. In this specific model, it is where the last mathematical process occurs: a Sigmoid Function is applied to the data generated by the second layer. Well... what does that mean?

The Sigmoid Function squeezes the values generated by a neural network into the range between 0 and 1, so they can be read as percentages, that is, probabilistic data. This makes sense because we need to know "how many percent" of this image corresponds to the number 1. The return will always look like this: 0.95, 0.01, 0.2, etc.

This indicates the correspondence rate with the number 1: images that look like the number 1 produce a positive result in the second layer and receive values close to 1, while images of the number 0 produce a negative result and receive values close to 0. It's all math!

The last mathematical operation is super simple and is basically this:

$$p = \sigma(z_i) = \frac{1}{1 + e^{-z_i}}$$

Where $p$ is the percentage of correspondence with the number 1, $\sigma$ is the Sigmoid Function and $z_i$ is the return of the Logistic Regression.
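A small sketch of the Sigmoid Function in Python, written in a form that avoids overflow for very negative inputs. The `classify` helper and its 0.5 cut-off are assumptions for this example. The article only says the output is read as a percentage.

```python
import math

def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use an equivalent form that cannot overflow.
    e = math.exp(z)
    return e / (1.0 + e)

def classify(z, threshold=0.5):
    """Hypothetical helper: call it a 1 when p reaches the threshold."""
    return 1 if sigmoid(z) >= threshold else 0

for z in (-4.0, 0.0, 3.0):
    print(z, round(sigmoid(z), 3), classify(z))
```

A `z` of exactly 0 gives 0.5, meaning the model has no preference. The more positive `z` is, the closer `p` gets to 1, and the more negative it is, the closer `p` gets to 0.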

There you have it, we have a neural network! Now just feed it any MNIST image of a 0 or a 1 that the model has never seen before and see the result.

A Deeper Look at Logistic Regression

Why can we only see if the number is 0 or 1? Why not identify if it is 0, 1, 2, 3...

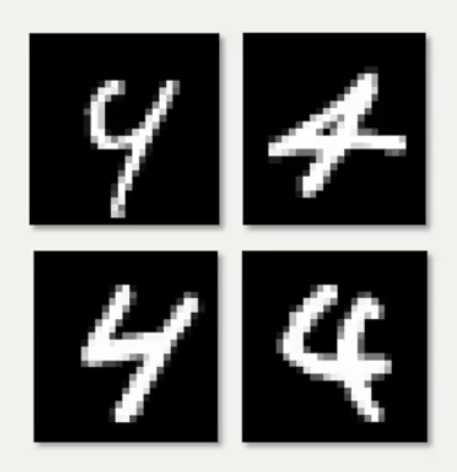

Since this is a simple example based on Logistic Regression, we can only perform binary tests with the dataset. This is due to the nature of this mathematical operation:

The graph above shows a visualization of how the model interprets the result of the logistic regression, where X1 are the images of the number 1 and X2 are the images of the number 0. It basically organizes the dataset into two classes based on the characteristics of the image. In addition to only being able to identify 2 classes, it is a linear model, that is, it can only capture simple characteristics. For more complex digits, such as the number 4, which can be written in several ways:

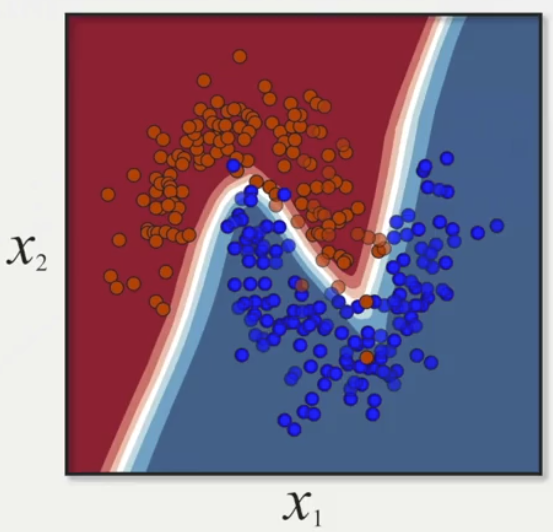

The filter gets a bit more complex, and then we need to raise the level to a Multilayer Perceptron, where the Logistic Regression operation is repeated several times, across multiple layers, to capture the complex relationships between the image features, which lets it support variants of the same pattern! It's almost clustering, but it's not!
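To give a feel for what "repeating the operation" buys us, here is a toy sketch of a forward pass through a two-layer network. It is not an MNIST model: the input has only two pixels, and the parameters are picked by hand (not trained) so that the network answers 1 when exactly one of the two pixels is lit, a pattern that a single linear filter cannot capture. The names `layer` and `mlp_forward` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """Each row of weights plus its bias is one logistic regression unit."""
    return [
        sigmoid(bias + sum(x * w for x, w in zip(inputs, row)))
        for row, bias in zip(weights, biases)
    ]

# Hand-picked parameters, for illustration only.
HIDDEN_WEIGHTS = [[20.0, 20.0], [-20.0, -20.0]]  # "any pixel lit", "not both lit"
HIDDEN_BIASES = [-10.0, 30.0]
OUTPUT_WEIGHTS = [[20.0, 20.0]]                  # both hidden units must agree
OUTPUT_BIASES = [-30.0]

def mlp_forward(pixels):
    hidden = layer(pixels, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]

for pixels in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(pixels, round(mlp_forward(pixels), 3))
```

The hidden layer builds two simple filters, and the output layer combines them into a more complex one. In a real Multilayer Perceptron these parameters would be learned during training, just like in the single-layer case.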

This is a visualization graph of a filter from a Multilayer Perceptron:

And this is a Multilayer Perceptron:

Yes! Computers dream of electric sheep!

I hope this article has helped to explain a little about this wonderful universe of Machine Learning. If you want to get in touch, I'll leave my social networks below. Until next time and thank you for reading!

- LinkedIn: https://www.linkedin.com/in/matjsilva/

- GitHub: https://github.com/matjsz

Top comments (2)

It’s rare to find such a clear, engaging breakdown of machine learning concepts. As someone who’s often baffled by jargon regarding the topic, I appreciated the use of the MNIST example to ground abstract ideas in something tangible, like pixel-to-number conversion.

Thank you for demystifying the magic, keep it up!

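So that's how turning pixels into a matrix of numbers works~ I'm curious, though: if the dataset has missing values, will the model just get completely confused? 🤔 Looking forward to more of these "demystifying" explainers from the author, to rescue those of us who struggle with math! 👍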