Problem Statement

Given dashboard images of drivers, our system classifies each image into one of 10 predefined actions (texting, reaching to the back, and so on) to detect distraction, using Machine Learning (ML) and Deep Learning (DL) models.

Application

Detecting distracted driving, and preventing it (say, by sounding an alarm to notify the driver), can go a long way toward reducing road accidents and enhancing road safety.

Link to Kaggle challenge

https://www.kaggle.com/c/state-farm-distracted-driver-detection [Last accessed: May 20, 2020]

Dataset Description

We split the 22k labelled images into 70% for training and 30% for testing. The 10 classes were balanced to avoid bias in the dataset.
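
A 70/30 split that keeps the class proportions the same in both parts can be sketched as follows. This is a minimal illustration rather than the project's actual code: the `stratified_split` helper, the fixed seed, and the toy file names are all assumptions.

```python
import random
from collections import defaultdict

def stratified_split(samples, train_frac=0.7, seed=0):
    """Split (path, label) pairs into train/test lists, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))

    train, test = [], []
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)                      # random order within the class
        cut = round(len(items) * train_frac)    # 70% of this class goes to training
        train.extend(items[:cut])
        test.extend(items[cut:])
    return train, test

# Toy data: 10 classes with 20 hypothetical image paths each.
samples = [(f"img_{c}_{i}.jpg", f"c{c}") for c in range(10) for i in range(20)]
train, test = stratified_split(samples)
print(len(train), len(test))
```

Splitting per class, instead of shuffling everything once, guarantees that no action is under-represented in the test set by chance.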

Data Exploration

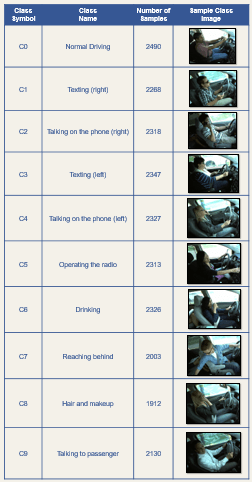

Class labels along with their description, count and sample image

Class-wise data distribution. This was balanced by pruning extra samples from each class.
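
Pruning extra samples can be sketched like this. The post does not say which target size was used, so trimming every class down to the size of the smallest one is an assumption here, as are the `prune_to_balance` helper and the toy data.

```python
import random
from collections import Counter, defaultdict

def prune_to_balance(samples, seed=0):
    """Randomly drop samples so that every class keeps the same count."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))

    # Assumption: the smallest class sets the target size for all classes.
    target = min(len(items) for items in by_class.values())
    balanced = []
    for label in sorted(by_class):
        balanced.extend(rng.sample(by_class[label], target))
    return balanced

# Toy imbalanced data: three classes with 50, 30 and 40 hypothetical images.
raw = (
    [(f"a_{i}.jpg", "c0") for i in range(50)]
    + [(f"b_{i}.jpg", "c1") for i in range(30)]
    + [(f"c_{i}.jpg", "c2") for i in range(40)]
)
balanced = prune_to_balance(raw)
counts = Counter(label for _, label in balanced)
print(counts)
```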

Activity Workflow

Pre-processing => Feature extraction => Training => Validation => Prediction

Baseline Models

As baselines, we used classical Machine Learning models, including Support Vector Machines and Logistic Regression.

Feature Engineering

We experimented with the following features:

- Intensity value

- Histogram value

- Haar wavelets

- Histogram of Oriented Gradients (HOG)

- Local Binary Pattern (LBP)

- Features derived from pre-trained AlexNet model

- Features from pre-trained VGG-16 model
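
To give a feel for two of the hand-crafted features in the list, here is a minimal NumPy sketch of an intensity histogram and a basic 3x3 Local Binary Pattern histogram. It is illustrative only: the bin count, the neighbour ordering, and the random stand-in image are assumptions, and in practice a library such as scikit-image would likely do this work.

```python
import numpy as np

def intensity_histogram(gray, bins=32):
    """Normalised histogram of pixel intensities in [0, 255]."""
    hist, _ = np.histogram(gray, bins=bins, range=(0, 256))
    return hist / hist.sum()

def lbp_histogram(gray):
    """Normalised histogram of basic 3x3 LBP codes (256 bins)."""
    g = gray.astype(np.int32)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    # Eight neighbours, clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy : h - 1 + dy, 1 + dx : w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    hist = np.bincount(codes.ravel(), minlength=256)
    return hist / hist.sum()

# Random 48x64 grayscale array standing in for a real dashboard image.
rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(48, 64), dtype=np.uint8)
features = np.concatenate([intensity_histogram(gray), lbp_histogram(gray)])
print(features.shape)
```

Each image ends up as a fixed-length vector, which is what classical classifiers such as LR or SVM expect as input.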

Classifiers used

- Gaussian Naive Bayes (GNB)

- Logistic Regression (LR)

- Support Vector Machine (SVM)

- Multi-class AdaBoost

- Bootstrap Aggregating (Bagging)

- VGG-16

- AlexNet

- Ensemble of AlexNet and VGG-16
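
The post does not describe how the two networks were combined. One common option, shown here purely as an assumption, is to average their softmax probabilities and pick the most likely class. The logits below are made up for illustration.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax, shifted for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def ensemble_predict(logits_a, logits_b, weight_a=0.5):
    """Weighted average of two models' class probabilities."""
    probs = weight_a * softmax(logits_a) + (1.0 - weight_a) * softmax(logits_b)
    return probs, probs.argmax(axis=1)

# Hypothetical outputs for 2 images and 3 classes (the real task has 10).
alexnet_logits = np.array([[2.0, 1.0, 0.0], [0.0, 0.5, 0.4]])
vgg_logits = np.array([[1.5, 1.6, 0.0], [0.0, 0.2, 1.0]])
probs, labels = ensemble_predict(alexnet_logits, vgg_logits)
print(labels)
```

Averaging probabilities lets a confident model outvote an unsure one, which is one reason ensembles often beat their individual members.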

Results

We found that among the classical ML models, an SVM with an RBF (Radial Basis Function) kernel gave the best accuracy, about 80%, using Haar wavelets as features, whereas the ensemble of AlexNet and VGG-16 reached about 98% accuracy!
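
For readers unfamiliar with the RBF kernel, it scores the similarity of two feature vectors as exp(-gamma * ||x - y||^2): 1 for identical vectors, falling toward 0 as they move apart. A minimal NumPy sketch follows. The `gamma` value and the toy vectors are assumptions, not the settings used in the project.

```python
import numpy as np

def rbf_kernel(X, Y, gamma=0.5):
    """Pairwise RBF similarities between the rows of X and the rows of Y."""
    # ||x - y||^2 = ||x||^2 + ||y||^2 - 2 x.y, computed for all pairs at once.
    sq_dists = (
        (X ** 2).sum(axis=1)[:, None]
        + (Y ** 2).sum(axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    # Clip tiny negative values caused by floating-point rounding.
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))

# Three toy 2-D feature vectors.
X = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]])
K = rbf_kernel(X, X)
print(np.round(K, 4))
```

An SVM with this kernel can draw non-linear decision boundaries, which may explain why it did better than the linear baselines on the same features.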

The evaluation metric for the challenge was log-loss, and our best-performing model was LR with HOG features (log-loss value: 2.02).
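
Multi-class log-loss is the average of -log(p) over all images, where p is the probability the model assigned to the true class. Lower is better. The sketch below is a plain-Python illustration. The clipping constant `eps` and the toy predictions are assumptions. As a reference point, guessing uniformly over 10 classes scores ln(10), roughly 2.30.

```python
import math

def multiclass_log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-probability assigned to the true class."""
    total = 0.0
    for label, row in zip(y_true, probs):
        # Clip so that a probability of exactly 0 does not give an infinite loss.
        p = min(max(row[label], eps), 1.0 - eps)
        total += -math.log(p)
    return total / len(y_true)

# Uniform guess over 10 classes for 4 images with arbitrary true labels.
uniform = [[0.1] * 10 for _ in range(4)]
baseline = multiclass_log_loss([0, 3, 5, 9], uniform)

# A confident, correct prediction scores much lower.
confident = [[0.91] + [0.01] * 9]
good = multiclass_log_loss([0], confident)
print(baseline, good)
```

Unlike accuracy, log-loss punishes confident wrong answers heavily, so a model needs well-calibrated probabilities to score well.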

Confusion Matrix for Ensemble model

For folks new to confusion matrices, think of it this way: the numbers along the principal diagonal show how many images were correctly classified. The higher, the better!
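
Here is a tiny worked example of how such a matrix is built, with rows as true classes and columns as predicted classes. The labels are made up and use 3 classes instead of 10 to keep it readable.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """matrix[t][p] counts images of true class t predicted as class p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

# Made-up labels for six images.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, 3)

# Correct predictions sit on the diagonal, so accuracy is diagonal sum / total.
correct = sum(cm[i][i] for i in range(3))
accuracy = correct / len(y_true)
for row in cm:
    print(row)
print(accuracy)
```

Off-diagonal cells are just as useful: they show which pairs of actions the model mixes up.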

ROC curves for Ensemble model

Receiver Operating Characteristic (ROC) curves like these show that the model is performing quite well.
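
A ROC curve plots the true-positive rate against the false-positive rate as the decision threshold is lowered. For a 10-class problem it is usually drawn one class at a time (that class versus the rest), which is the assumption made in this sketch. The scores below are made up.

```python
def roc_points(y_true, scores):
    """(fpr, tpr) lists for a binary problem, sweeping the threshold high to low.

    Assumes y_true contains at least one positive (1) and one negative (0).
    """
    pos = sum(y_true)
    neg = len(y_true) - pos
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    for rank, i in enumerate(order):
        if y_true[i] == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample of a group of tied scores.
        is_last = rank == len(order) - 1
        if is_last or scores[order[rank + 1]] != scores[i]:
            fpr.append(fp / neg)
            tpr.append(tp / pos)
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the curve by the trapezoidal rule."""
    return sum(
        (fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2.0
        for k in range(1, len(fpr))
    )

# One-vs-rest toy example: 1 marks images of the class of interest.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.1]
fpr, tpr = roc_points(y_true, scores)
area = auc(fpr, tpr)
print(area)
```

The closer the curve hugs the top-left corner (area near 1), the better the model separates that class from the others. A diagonal line (area 0.5) is no better than chance.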

Tools and Technologies

- Python

- Scikit-learn

- OpenCV

- PyTorch

- Scikit-image

- Seaborn

Collaborators

Wrik Bhadra, Suraj Pandey, Arjun Tyagi (IIIT Delhi)

[Note]: We haven't pushed our project to GitHub yet, but we will do so soon.

Scope for improvement

The accuracy of the system could perhaps be improved by segmenting the driver and their action from the rest of the image.

Additional Thoughts

Maybe adding more layers to the DL models can improve the performance?

[Final Note]: It was fun to dive deep into a Computer Vision project and collaborate with cool folks along the way!
