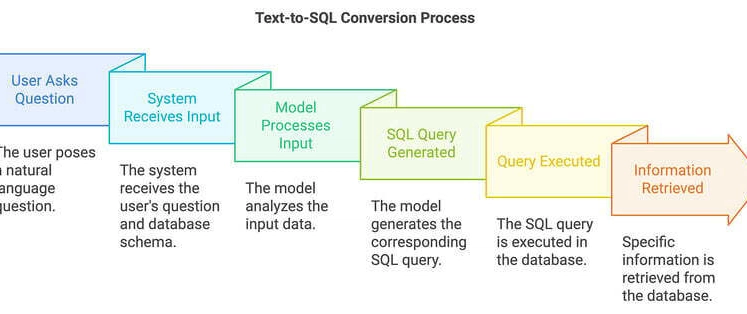

Large Language Models (LLMs) excel at understanding and generating natural language, but their non-deterministic output can be a stumbling block for applications requiring precise, structured results—like converting text into SQL queries. In this post, we’ll explore this challenge and demonstrate how Pydantic, a powerful data validation library, paired with an agent-based LLM framework, ensures reliable, structured output. We’ll walk through a practical text-to-SQL project using the provided code, explaining how Pydantic enforces structure and validates LLM responses.

The Challenge: Non-Deterministic LLM Output

LLMs, by design, are probabilistic. Given a prompt like "show me error logs from yesterday," an LLM might produce:

-

SELECT * FROM records WHERE level = 'error' AND start_timestamp > CURRENT_TIMESTAMP - INTERVAL '1 day';(correct) -

SELECT * FROM logs WHERE error = true AND date = yesterday(wrong table, vague terms) - A plain English explanation instead of SQL

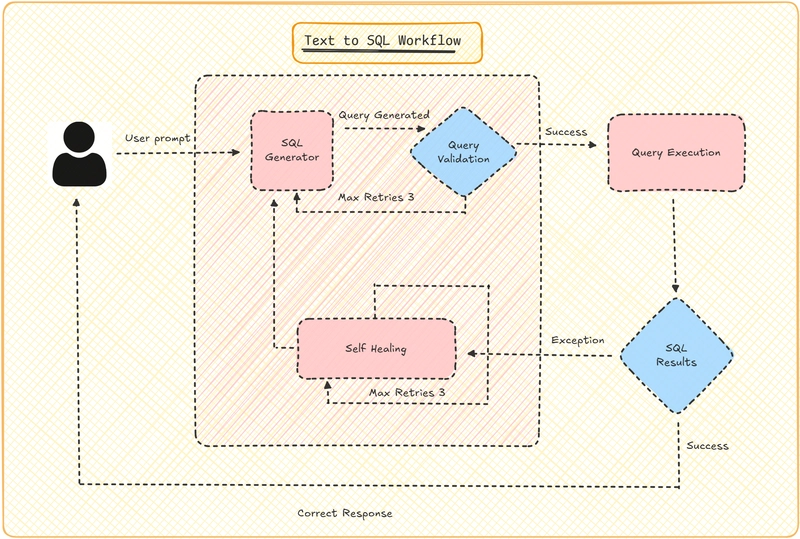

This variability stems from the model’s training and sampling methods, making it unreliable for tasks requiring consistent, executable SQL. Without intervention, integrating LLMs into production systems becomes risky. We need a way to enforce structure, validate output, and handle errors—enter Pydantic.

Pydantic: Bringing Order to Chaos

Pydantic leverages Python type hints to define data models, validate data, and catch inconsistencies. In our text-to-SQL project, Pydantic defines the expected structure of SQL responses and ensures the LLM’s output adheres to it. Paired with an agent framework and validation logic, it transforms unpredictable LLM output into reliable SQL queries.

Let’s dive into the project, built with Pydantic, an LLM agent (using Google’s Gemini 1.5 Flash), and PostgreSQL.

Project: Text-to-SQL with Pydantic and Gemini

The goal is to convert natural language requests (e.g., "show me logs from yesterday with level error") into valid PostgreSQL queries against a records table. The code uses Pydantic for structure, an agent to interface with the LLM, and async PostgreSQL for validation.

Step 1: Define the Database Schema

The project operates on a PostgreSQL table storing log records:

CREATE TABLE records (

created_at timestamptz,

start_timestamp timestamptz,

end_timestamp timestamptz,

trace_id text,

span_id text,

parent_span_id text,

level log_level,

span_name text,

message text,

attributes_json_schema text,

attributes jsonb,

tags text[],

is_exception boolean,

otel_status_message text,

service_name text

);

This schema includes a jsonb column (attributes) for key-value pairs, an array (tags), and an enum (log_level), making it rich enough to test complex SQL generation.

Step 2: Define Pydantic Models for Responses

The code defines two Pydantic models to handle LLM output:

from pydantic import BaseModel, Field

from annotated_types import MinLen

from typing import Union

from typing_extensions import TypeAlias

@dataclass

class Deps:

conn: asyncpg.Connection

class Success(BaseModel):

"""Response when SQL could be successfully generated."""

sql_query: Annotated[str, MinLen(1)]

explanation: str = Field('', description='Explanation of the SQL query, as markdown')

class InvalidRequest(BaseModel):

"""Response when the user input didn't include enough information to generate SQL."""

error_message: str

Response: TypeAlias = Union[Success, InvalidRequest]

Code Explanation:

-

Success: Represents a valid SQL response with a non-emptysql_query(enforced byMinLen(1)) and an optionalexplanation. -

InvalidRequest: Captures cases where the input lacks sufficient detail (e.g., "show me stuff"). -

Response: A type alias for the union ofSuccessandInvalidRequest, allowing the LLM to return either.

Step 3: Set Up the LLM Agent

The Agent class interfaces with Google’s Gemini 1.5 Flash model, using Pydantic to define the expected response type:

from pydantic_ai import Agent, RunContext

agent: Agent[Deps, Response] = Agent(

'google-gla:gemini-1.5-flash',

result_type=Response, # type: ignore

deps_type=Deps,

instrument=True,

)

Code Explanation:

-

Agent: A custom class (assumed frompydantic_ai) that wraps the LLM, specifying dependencies (Deps, a dataclass with a database connection) and the result type (Response). -

instrument=True: Likely enables logging or monitoring (e.g., vialogfire).

Step 4: Craft the System Prompt

The system prompt guides the LLM to generate structured output:

@agent.system_prompt

async def system_prompt() -> str:

return f"""\

Given the following PostgreSQL table of records, your job is to

write a SQL query that suits the user's request.

Database schema:

{DB_SCHEMA}

today's date = {date.today()}

{format_as_xml(SQL_EXAMPLES)}

"""

Code Explanation:

- The prompt includes the schema, current date, and XML-formatted examples (e.g., "show me records where foobar is false" →

SELECT * FROM records WHERE attributes->>'foobar' = false). - Examples help the LLM understand the desired output format, reducing non-determinism.

Step 5: Validate LLM Output with Pydantic

A custom validator ensures the LLM’s output is usable:

@agent.result_validator

async def validate_result(ctx: RunContext[Deps], result: Response) -> Response:

if isinstance(result, InvalidRequest):

return result

# Fix common LLM quirks (e.g., extra backslashes)

result.sql_query = result.sql_query.replace('\\', '')

if not result.sql_query.upper().startswith('SELECT'):

raise ModelRetry('Please create a SELECT query')

try:

await ctx.deps.conn.execute(f'EXPLAIN {result.sql_query}')

except asyncpg.exceptions.PostgresError as e:

raise ModelRetry(f'Invalid query: {e}') from e

else:

return result

Code Explanation:

-

if isinstance(result, InvalidRequest): Passes through error responses without further checks. -

replace('\\', ''): Fixes LLM quirks like escaped characters. -

startswith('SELECT'): Ensures the query is aSELECTstatement, retrying if not (viaModelRetry). -

EXPLAIN: Tests the query’s validity against the database, raisingModelRetryon errors (e.g., syntax issues).

Step 6: Run the Application

The main function ties it together:

async def main():

if len(sys.argv) == 1:

prompt = 'show me logs from yesterday, with level "error"'

else:

prompt = sys.argv[1]

async with database_connect(

'postgresql://postgres:postgres@localhost:54320', 'pydantic_ai_sql_gen'

) as conn:

deps = Deps(conn)

result = await agent.run(prompt, deps=deps)

debug(result.data)

Code Explanation:

- Accepts a command-line prompt or defaults to "show me logs from yesterday, with level 'error'".

- Uses

database_connectto set up a PostgreSQL connection and schema. - Runs the agent with the prompt and database connection, printing the result.

Step 7: Database Setup

The database_connect context manager ensures the database is ready:

@asynccontextmanager

async def database_connect(server_dsn: str, database: str) -> AsyncGenerator[Any, None]:

with logfire.span('check and create DB'):

conn = await asyncpg.connect(server_dsn)

try:

db_exists = await conn.fetchval(

'SELECT 1 FROM pg_database WHERE datname = $1', database

)

if not db_exists:

await conn.execute(f'CREATE DATABASE {database}')

finally:

await conn.close()

conn = await asyncpg.connect(f'{server_dsn}/{database}')

try:

with logfire.span('create schema'):

async with conn.transaction():

if not db_exists:

await conn.execute(

"CREATE TYPE log_level AS ENUM ('debug', 'info', 'warning', 'error', 'critical')"

)

await conn.execute(DB_SCHEMA)

yield conn

finally:

await conn.close()

Code Explanation:

- Creates the database and schema if they don’t exist, using

asyncpgfor asynchronous PostgreSQL access. - Logs operations with

logfirefor observability.

Example Output

Running python script.py "show me logs from yesterday, with level 'error'" might yield:

Success(

sql_query="SELECT * FROM records WHERE level = 'error' AND start_timestamp > CURRENT_TIMESTAMP - INTERVAL '1 day'",

explanation="Retrieves all records with error level from the past day."

)

For an invalid input like "show me stuff":

InvalidRequest(error_message="Insufficient information to generate SQL.")

How Pydantic Solves the Challenge

-

Structured Output: The

SuccessandInvalidRequestmodels enforce a clear schema, ensuring the LLM’s response is either a valid SQL query or a meaningful error. -

Validation: Pydantic’s type hints (e.g.,

MinLen) and the custom validator catch malformed output, whileModelRetryprompts the LLM to try again if needed. -

Error Handling: The union type (

Response) and database checks (EXPLAIN) provide robust error detection, preventing invalid SQL from reaching downstream systems. - Reliability: By combining schema enforcement with runtime validation, Pydantic turns non-deterministic LLM output into dependable, executable queries.

Conclusion

Non-deterministic LLM output doesn’t have to derail structured applications. In this text-to-SQL project, Pydantic’s data modeling and validation capabilities, paired with an agent framework and PostgreSQL, deliver reliable results from natural language inputs. The code is extensible—add more examples to the prompt or enhance the Success model for complex queries like JOINs or GROUP BY.

Experiment with this setup, tweak the prompt or schema, and see how Pydantic can streamline your next LLM-powered project!

Top comments (0)