This post was originally published at https://stevemerc.com

As a programmer, two of the most useful tools in my day-to-day work are the terminal and a bowl of hot soup. As the weather gets colder, I get soup almost daily from a local Hale and Hearty shop. After realizing how often I was going to their website to view the menu, I decided to do what any self-respecting programmer would do and wrote a command line tool to print the list of a specific location's daily soups right in my terminal.

I used this as an opportunity to become more familiar with the Elixir programming language. I find that building small command line applications is a great way to learn a new language while also creating something useful.

By the end of this tutorial, you'll have built your own command line web scraper in Elixir from start to finish. You'll be able to just type ./soup and be presented with a list of delicious (and sometimes nutritious) bowls of goodness.

The complete source code for this app can be found here.

Spec'ing Out Our App

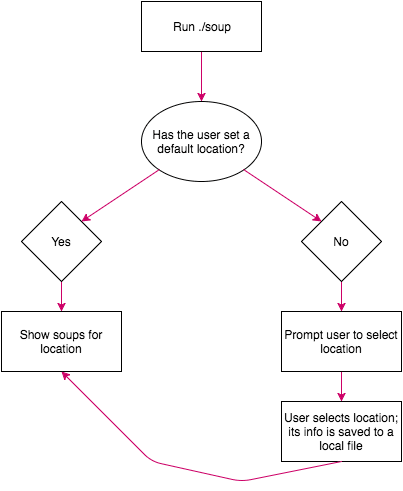

Our app just needs to print the list of soups for a given location. However, we must tell it which one we care about, and we also want to set a default, because once you have a favorite location you'll probably want to view their menu in the future. I'm not going to go to one location one day, and then another the next day - that'd be crazy.

Our app should have the following API:

- ./soup --locations: Display a list of all the locations, and ask you to save a default.

- ./soup: Display the list of soups for your default location.

If you run ./soup without having set a default location, you should be prompted to select a default.

We can break this down with the following flow chart:

Let's Start Building!

Create a new Elixir app and cd into its directory:

$ mix new soup

$ cd soup

We'll need to add two dependencies: HTTPoison to make HTTP requests and Floki to parse the HTML.

Open up mix.exs and add them:

defmodule Soup.Mixfile do

...

defp deps do

[

{:httpoison, "~> 0.10.0"},

{:floki, "~> 0.11.0"}

]

end

end

Install the dependencies:

$ mix deps.get

List httpoison as an application dependency:

defmodule Soup.Mixfile do

...

def application do

[applications: [:logger, :httpoison]]

end

end

Scraping the Data

The scraping logic is the most important part of our app, so let's start there. Our first task will be to fetch a list of the locations that we can display to the user.

Fetch the List of Locations

Create lib/scraper.ex and add the following:

defmodule Scraper do

@doc """

Fetch a list of all of the Hale and Hearty locations.

"""

def get_locations() do

case HTTPoison.get("https://www.haleandhearty.com/locations/") do

{:ok, response} ->

case response.status_code do

200 ->

locations =

response.body

|> Floki.find(".location-card")

|> Enum.map(&extract_location_name_and_id/1)

|> Enum.sort(&(&1.name < &2.name))

{:ok, locations}

_ -> :error

end

_ -> :error

end

end

end

Let's break this function down:

- We first make a GET request to https://www.haleandhearty.com/locations/ and pattern match on the response. If there was any type of error, we return an :error atom.

- Assuming we got back a valid response, we then pattern match on its status code. If it was anything other than 200, we return an :error atom.

- Assuming we got back a 200 status code, we're ready to parse the HTML and extract the names and IDs of the locations. We do this by mapping the extract_location_name_and_id/1 function (which we'll implement soon) over the .location-card elements. Each .location-card contains the name and ID of the location.

- We sort the locations alphabetically.

- The last thing we do is return a tuple of an :ok atom and the list of locations.
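As an aside, the two nested case expressions can be flattened by matching on the result tuple and the status code in a single pattern. This is only a sketch: ResponseSketch and handle/1 are hypothetical names that aren't part of the app, and plain maps stand in for the HTTPoison response (a struct is a map underneath, so the same pattern would match it).

```elixir
defmodule ResponseSketch do
  # Hypothetical helper, not part of the app: one clause for the happy path,
  # one catch-all for every failure (non-200 status or a request error).
  def handle({:ok, %{status_code: 200, body: body}}), do: {:ok, body}
  def handle(_other), do: :error
end

# Plain maps stand in for the response struct here.
IO.inspect(ResponseSketch.handle({:ok, %{status_code: 200, body: "<html></html>"}}))
IO.inspect(ResponseSketch.handle({:ok, %{status_code: 404, body: ""}}))
IO.inspect(ResponseSketch.handle({:error, :timeout}))
```

Whether this reads better than the nested version is a matter of taste; the nested case makes each step explicit, which is handy while learning.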

If you're wondering about the & character above, read up on the capture operator.
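Here's a quick illustration of the capture operator that you can paste into iex. The location data is made up for the example.

```elixir
locations = [%{id: "b", name: "Beta"}, %{id: "a", name: "Alpha"}]

# &(&1.name < &2.name) is shorthand for fn a, b -> a.name < b.name end
short = Enum.sort(locations, &(&1.name < &2.name))
long = Enum.sort(locations, fn a, b -> a.name < b.name end)

# &String.upcase/1 captures a named function so it can be passed around
names = short |> Enum.map(& &1.name) |> Enum.map(&String.upcase/1)

IO.inspect(short == long)
IO.inspect(names)
```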

Implement extract_location_name_and_id/1:

defmodule Scraper do

...

defp extract_location_name_and_id({_tag, attrs, children}) do

{_, _, [name]} =

Floki.raw_html(children)

|> Floki.find(".location-card__name")

|> hd()

attrs = Enum.into(attrs, %{})

%{id: attrs["id"], name: name}

end

end

The one argument is a destructured tuple of the tag name, attributes and child nodes. We convert the children back into HTML so we can use Floki to drill down further and grab the .location-card__name elements. hd() grabs the first matching element, and we destructure it to pull out its only child: the text of the location's name.

Next we convert attrs from a list of tuples into a map, which makes it easier to pull out attributes by their name. Finally, we return a map of the location's name and ID.
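To make the tuple shape concrete, here's a small sketch that works on a hand-written node instead of real HTML. NodeSketch, the h3 tag and the sample values are assumptions for illustration, and the sketch destructures the first child directly rather than calling Floki.

```elixir
defmodule NodeSketch do
  # Hypothetical helper: assumes the first child is the name element and that
  # it holds exactly one text node.
  def to_location({_tag, attrs, [{_, _, [name]} | _]}) do
    # A list of {key, value} tuples converts directly into a map
    attrs = Enum.into(attrs, %{})
    %{id: attrs["id"], name: name}
  end
end

# A made-up node in the {tag, attributes, children} shape
node =
  {"div", [{"class", "location-card"}, {"id", "17th-and-broadway"}],
   [{"h3", [{"class", "location-card__name"}], ["17th & Broadway"]}]}

IO.inspect(NodeSketch.to_location(node))
```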

That was a lot of typing! Let's see if it works by trying it out in an interactive Elixir session:

$ iex -S mix

iex> Scraper.get_locations()

{:ok,

[%{id: "17th-and-broadway", name: "17th & Broadway"},

%{id: "21st-and-6th", name: "21st & 6th"},

%{id: "29th-and-7th", name: "29th & 7th"},

%{id: "33rd-and-madison", name: "33rd & Madison"},

...

]}

You should see something similar to the above (I've omitted some of the results for the sake of brevity.) If not, you may have a typo in the above code.

Fetch the List of Soups

Now that we've got that working, let's fetch a list of soups for a given location. Fortunately this will be easier than fetching the list of locations. Add the following to lib/scraper.ex:

defmodule Scraper do

...

@doc """

Fetch a list of the daily soups for a given location.

"""

def get_soups(location_id) do

url = "https://www.haleandhearty.com/menu/?location=#{location_id}"

case HTTPoison.get(url) do

{:ok, response} ->

case response.status_code do

200 ->

soups =

response.body

# Floki uses the CSS descendant selector for the below find() call

|> Floki.find("div.category.soups p.menu-item__name")

|> Enum.map(fn({_, _, [soup]}) -> soup end)

{:ok, soups}

_ -> :error

end

_ -> :error

end

end

end

Once again, let's break this function down:

- Similar to get_locations/0, the first things we do are make a GET request and then do some pattern matching to make sure we got back a valid response.

- We then use Floki.find/2 to find all the p tags with a menu-item__name class. The HTML actually has a bunch of these elements, but not all of them are for soups, so we tell Floki that we only care about the p tags that are descendants of div tags that have a category and soups class.

- Using Enum.map/2, we return a list of the soup names.

- We return a tuple of an :ok atom and the list of soups.
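The mapping step can be tried without hitting the network. The sketch below feeds made-up nodes through the same pattern match; SoupNames is a hypothetical module, and the String.trim/1 step is an optional addition of mine for names that arrive with stray whitespace.

```elixir
defmodule SoupNames do
  # Hypothetical helper: expects nodes whose only child is a text node.
  def extract(nodes) do
    nodes
    # Pull the single text child out of each node
    |> Enum.map(fn {_tag, _attrs, [soup]} -> soup end)
    # Optional extra step (not in the app): drop leading/trailing whitespace
    |> Enum.map(&String.trim/1)
  end
end

# Made-up nodes in the {tag, attributes, children} shape
nodes = [
  {"p", [{"class", "menu-item__name"}], ["7 Herb Bistro Chicken "]},
  {"p", [{"class", "menu-item__name"}], ["Broccoli Cheddar"]}
]

IO.inspect(SoupNames.extract(nodes))
```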

Let's test our new function:

$ iex -S mix

iex> Scraper.get_soups("17th-and-broadway")

{:ok,

["7 Herb Bistro Chicken ", "Broccoli Cheddar", "Chicken & Sausage Jambalaya",

"Chicken And Rice", "Chicken Pot Pie",

"Chicken Vegetable With Couscous Or Noodles", "Classic Mac & Cheese",

"Crab & Corn Chowder", ...]}

We've made a lot of progress! Let's build the command line interface.

Building the Command Line Interface

Note: I've adapted this style of writing the command line interface from the great Programming Elixir book by Dave Thomas.

The first thing we need to do is create a module that'll be called from the command line and can handle arguments.

Create a new module, lib/soup/cli.ex and add the following:

defmodule Soup.CLI do

def main(argv) do

argv

|> parse_args()

|> process()

end

end

The main function is what will be triggered when we call our application from the command line. Its sole purpose is to take in an arbitrary number of arguments (like --help), parse them into something our app understands, and then perform the appropriate action.

Let's parse the arguments. Add the following to the Soup.CLI module:

defmodule Soup.CLI do

...

def parse_args(argv) do

args = OptionParser.parse(

argv,

strict: [help: :boolean, locations: :boolean],

aliases: [h: :help]

)

case args do

{[help: true], _, _} ->

:help

{[locations: true], _, _} ->

:list_locations

{[], [], []} ->

:list_soups

_ ->

:invalid_arg

end

end

end

parse_args/1 uses the built-in OptionParser module to convert the command line arguments into a tuple of parsed switches, remaining arguments, and invalid switches. We then pattern match on this tuple and return an atom that will be passed to one of the process/1 implementations defined below:

defmodule Soup.CLI do

...

def process(:help) do

IO.puts """

soup --locations # Select a default location whose soups you want to list

soup # List the soups for a default location (you'll be prompted to select a default location if you haven't already)

"""

System.halt(0)

end

def process(:list_locations) do

Soup.enter_select_location_flow()

end

def process(:list_soups) do

Soup.fetch_soup_list()

end

def process(:invalid_arg) do

IO.puts "Invalid argument(s) passed. See usage below:"

process(:help)

end

end
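If you're curious what parse_args/1 is actually matching on, you can call OptionParser.parse/2 directly. The snippet below is just an exploration aid; it uses the aliases: key, which is the option name OptionParser documents for short flags.

```elixir
opts = [strict: [help: :boolean, locations: :boolean], aliases: [h: :help]]

# Each call returns {parsed_switches, remaining_args, invalid_switches}
IO.inspect(OptionParser.parse(["--help"], opts))
IO.inspect(OptionParser.parse(["-h"], opts))
IO.inspect(OptionParser.parse(["--locations"], opts))
IO.inspect(OptionParser.parse([], opts))

# An unknown switch lands in the third element of the tuple
IO.inspect(OptionParser.parse(["--bogus"], opts))
```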

Let's test this out:

$ mix run -e 'Soup.CLI.main(["--help"])'

soup --locations # Select a default location whose soups you want to list

soup # List the soups for a default location (you'll be prompted to select a default location if you haven't already)

I know what you're thinking: typing mix run -e 'Soup.CLI.main(["--help"])' doesn't seem very user-friendly. You're right; it's not. But we'll only be using it like this while we develop our app. The final product will be much more user-friendly, I promise.

Building the Main Module

Now that we've got our command line interface in place, we can focus on our main module. The first thing we want to do is to prompt the user to select a default location. Add the following to lib/soup.ex:

defmodule Soup do

def enter_select_location_flow() do

IO.puts("One moment while I fetch the list of locations...")

case Scraper.get_locations() do

{:ok, locations} ->

{:ok, location} = ask_user_to_select_location(locations)

display_soup_list(location)

:error ->

IO.puts("An unexpected error occurred. Please try again.")

end

end

end

This function will be called if the user passes the --locations argument to the CLI, or if there's no default location set when calling the CLI without any arguments. It uses our Scraper module to fetch the list of locations and then pattern matches on the result. If get_locations/0 returned an :error, we print "An unexpected error occurred. Please try again." Otherwise we:

- Ask the user to select a default location, and then,

- Display the list of soups for the location the user just selected

Let's take a look at ask_user_to_select_location/1:

defmodule Soup do

...

@config_file "~/.soup"

@doc """

Prompt the user to select a location whose soup list they want to view.

The location's name and ID will be saved to @config_file for future lookups.

This function can only ever return a {:ok, location} tuple because an invalid

selection will result in this function being recursively called.

"""

def ask_user_to_select_location(locations) do

# Print an indexed list of the locations

locations

|> Enum.with_index(1)

|> Enum.each(fn({location, index}) -> IO.puts " #{index} - #{location.name}" end)

case IO.gets("Select a location number: ") |> Integer.parse() do

:error ->

IO.puts("Invalid selection. Try again.")

ask_user_to_select_location(locations)

{location_nb, _} ->

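# Enum.at/2 counts negative indexes from the end, so reject anything below 1

case if(location_nb > 0, do: Enum.at(locations, location_nb - 1)) do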

nil ->

IO.puts("Invalid location number. Try again.")

ask_user_to_select_location(locations)

location ->

IO.puts("You've selected the #{location.name} location.")

File.write!(Path.expand(@config_file), to_string(:erlang.term_to_binary(location)))

{:ok, location}

end

end

end

end

Notice the @config_file "~/.soup" line - this is a module attribute. It refers to the location of the local file in which we'll save our default location.

The first thing ask_user_to_select_location/1 does is take the list of locations and print them in the following format:

1 - 17th & Broadway

2 - 21st & 6th

3 - 29th & 7th

4 - 33rd & Madison

...

According to the docs for Enum.with_index/2, this function:

Returns the enumerable with each element wrapped in a tuple alongside its index.

If an offset is given, we will index from the given offset instead of from zero.

Since it returns a list of each location wrapped in a tuple with a number, we can iterate over this using Enum.each/2 to print out each location with the number we've assigned it.

We then use IO.gets/1 to ask the user to select a location. If they've entered a valid value (an integer that's in range of the list), we confirm their selection, save the location name and ID to @config_file, and return a {:ok, location} tuple. If they've made an invalid selection, we ask them to try again.
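The validation logic is easier to poke at when it's separated from the prompt. Here's a minimal, IO-free sketch of it; SelectionSketch, pick/2 and the :invalid atom are hypothetical names that don't appear in the app. The n >= 1 guard is there because Enum.at/2 counts negative indexes from the end of the list.

```elixir
defmodule SelectionSketch do
  # Hypothetical, IO-free version of the validation step: takes the raw text
  # the user typed and returns {:ok, location} or :invalid.
  def pick(locations, input) do
    case Integer.parse(input) do
      # Integer.parse/1 returns {integer, rest}; rest holds the trailing "\n"
      {n, _rest} when n >= 1 ->
        case Enum.at(locations, n - 1) do
          nil -> :invalid
          location -> {:ok, location}
        end

      # Non-numeric input (:error) or a number below 1
      _ ->
        :invalid
    end
  end
end

locations = [%{id: "a", name: "Alpha"}, %{id: "b", name: "Beta"}]

# with_index(1) pairs each location with a 1-based menu number
IO.inspect(Enum.with_index(locations, 1))

IO.inspect(SelectionSketch.pick(locations, "2\n"))
IO.inspect(SelectionSketch.pick(locations, "9\n"))
IO.inspect(SelectionSketch.pick(locations, "soup\n"))
IO.inspect(SelectionSketch.pick(locations, "0\n"))
```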

Notice the use of :erlang.term_to_binary/1. Because Elixir is a hosted language and Erlang already provides functions to serialize and unserialize data, we call the Erlang function directly.
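You can see the round trip for yourself in iex. The location below is sample data; the point is only that whatever goes through term_to_binary/1 comes back unchanged from binary_to_term/1.

```elixir
location = %{id: "29th-and-7th", name: "29th & 7th"}

# Serialize the map to a binary, as it would be written to the config file
binary = :erlang.term_to_binary(location)

# Reading it back yields an equal map
restored = :erlang.binary_to_term(binary)

IO.inspect(is_binary(binary))
IO.inspect(restored == location)
```

Keep in mind that the serialized form is not human-readable text, so the config file isn't meant to be edited by hand.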

The last step needed to complete enter_select_location_flow/0 is to implement display_soup_list/1.

defmodule Soup do

...

def display_soup_list(location) do

IO.puts("One moment while I fetch today's soup list for #{location.name}...")

case Scraper.get_soups(location.id) do

{:ok, soups} ->

Enum.each(soups, &(IO.puts " - " <> &1))

_ ->

IO.puts("Unexpected error. Try again, or select a location using `soup --locations`")

end

end

end

We pass this function a location map (which if you remember contains the location's name and ID). We fetch the list of soups for that location using Scraper.get_soups/1 and pattern match on the result. If we get back a {:ok, soups} tuple we iterate over soups and print each one. Otherwise we alert the user that an unexpected error has occurred and ask them to try again.

Let's try what we've built so far. Run this in your terminal: mix run -e 'Soup.CLI.main(["--locations"])' and you should see something like the below:

One moment while I fetch the list of locations...

1 - 17th & Broadway

2 - 21st & 6th

3 - 29th & 7th

4 - 33rd & Madison

...

Select a location number:

When it asks you to Select a location number:, enter 3 and you should then see something similar to:

Select a location number: 3

You've selected the 29th & 7th location.

One moment while I fetch today's soup list for 29th & 7th...

- Chicken Vegetable With Couscous Or Noodles

- Cream of Tomato with Chicken And Orzo

- Ten Vegetable

- Three Lentil Chili

- Tomato Basil With Rice

Success!

Let's move on to building the functionality to fetch the list of soups if a default location has been set. First we need a function to fetch the location's name and ID.

defmodule Soup do

...

@doc """

Fetch the name and ID of the location that was saved by `ask_user_to_select_location/1`

"""

def get_saved_location() do

case Path.expand(@config_file) |> File.read() do

{:ok, location} ->

try do

location = :erlang.binary_to_term(location)

case String.trim(location.id) do

# File contains empty location ID

"" -> {:empty_location_id}

_ -> {:ok, location}

end

rescue

ArgumentError -> :error

end

{:error, _} -> :error

end

end

end

We use Path.expand/1 to transform a path like ~/.soup into an absolute path such as /Users/steven/.soup. Next we read the contents of @config_file and attempt to unserialize the location's data using Erlang's binary_to_term/1 function. If the unserialization is successful, we return a tuple of an :ok atom and the unserialized location map (or {:empty_location_id} if the saved ID is blank), otherwise we return an :error atom. A failure to unserialize the data likely means that the user manually edited the file's contents.
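The decode-or-fail step is worth trying in isolation, since it's the part that protects us from a corrupted file. This is a sketch under my own naming (DecodeSketch and decode/1 are hypothetical); it relies on binary_to_term/1 raising an ArgumentError when the input isn't a valid serialized term.

```elixir
defmodule DecodeSketch do
  # Hypothetical helper: turns a decode failure into an :error atom instead
  # of letting the exception escape.
  def decode(binary) do
    {:ok, :erlang.binary_to_term(binary)}
  rescue
    ArgumentError -> :error
  end
end

good = :erlang.term_to_binary(%{id: "29th-and-7th", name: "29th & 7th"})

IO.inspect(DecodeSketch.decode(good))

# Text that was never produced by term_to_binary/1 fails to decode
IO.inspect(DecodeSketch.decode("edited by hand"))
```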

defmodule Soup do

...

def fetch_soup_list() do

case get_saved_location() do

{:ok, location} ->

display_soup_list(location)

_ ->

IO.puts("It looks like you haven't selected a default location. Select one now:")

enter_select_location_flow()

end

end

end

This is a pretty simple function. We call get_saved_location/0 and match on its return value. If we get back a location map we display the list of soups for that location, otherwise we prompt the user to select a location. This acts as a catch-all for the following error conditions:

- @config_file can't be read for whatever reason

- the location data in @config_file couldn't be unserialized for whatever reason

Time to try it out. In your terminal, type: mix run -e 'Soup.CLI.main([])' and you should see something similar to:

One moment while I fetch today's soup list for 29th & 7th...

- Chicken Vegetable With Couscous Or Noodles

- Cream of Tomato with Chicken And Orzo

- Ten Vegetable

- Three Lentil Chili

- Tomato Basil With Rice

Creating an Executable File

Remember when I told you we'd make the process of using this app more user-friendly than typing something like mix run -e 'Soup.CLI.main(["--locations"])'? That's what we'll do now.

We'll use escript to do this. From the Elixir docs:

An escript is an executable that can be invoked from the command line. An escript can run on any machine that has Erlang installed and by default does not require Elixir to be installed, as Elixir is embedded as part of the escript.

Open up your mix.exs file and add the following line to the keyword list in the project function:

escript: [main_module: Soup.CLI],

The entire function should look something like the following:

def project do

[app: :soup,

version: "1.0.0",

elixir: "~> 1.3",

build_embedded: Mix.env == :prod,

start_permanent: Mix.env == :prod,

escript: [main_module: Soup.CLI],

deps: deps()]

end

Now we just need to build the executable. Run this in your terminal:

$ mix escript.build

You should now see a soup executable in your project directory. Try it out with the following commands:

$ ./soup --help

$ ./soup -h

$ ./soup --locations

$ ./soup

And there we go, a fully functional command line web scraper written in Elixir. Now go out and eat soup until you burst!

