Today I am excited to share the release of AWS Lambda File Systems. A simple, scalable, fully managed network file system leveraging Amazon's Elastic File System (EFS) technology. And what better way to celebrate the accomplishments of our hard-working AWS friends than by building clever uses of their tools in ways they may not have intended... or outright feared 😱

💾 Creating Your First EFS

For some of us in the serverless space, EFS could be an entirely new thing. So let's create a simple file system with a little ClickOps™ to use with our "experiments" below. Log into the AWS Management Console and:

- Click on "Services" in the toolbar.

- Enter "EFS" into the find services field, select.

- Click on "Create file system".

- For "Network access", the default VPC, AZs, Subnets (public), & Security Groups should work.

- ➡️ Click "Next Step".

- Default "Lifecycle policy" of "None"

- Change "Throughput Mode" to "Provisioned".

- Change "Throughput (MiB/s)" to "1". Estimated up to $6.00/month.

- Default "Performance Mode" to "General Purpose"

- ➡️ Click "Next Step"

- For "Policy settings", leave empty.

- Click the "Add access point" button.

- Set the name, user, directory, and owner as pictured.

- ➡️ Click "Next Step"

- ✅ Click "Create File System"

It might take a few minutes for the state to become available. Once complete, we'll have a fun little sandbox 🏖 file system. Time to drop some 💩'y ideas in production for our co-workers to find during their next sprint.

🗂 Rails FileStore Cache

Rails developers love caching things. The framework makes it easy by exposing a simple Rails.cache interface with optional backends. Commonly used with distributed stores such as Memcached or Route53 (why else would it be on this page), they are easy enough to use with Lambda via a VPC Config. But is ElastiCache truly serverless and cost effective? Perhaps. But maybe Rails' built-in FileStore can be used with EFS instead?

We are going use our new and improved Lamby Quick Start to deploy a working Rails app to AWS Lambda in a few minutes! After the initial deploy, here are the changes we need to make to our new project. Adding the Faraday gem is first:

# Gemfile

gem 'faraday'

Next we are going to need some simulated API data. For this demo we can use the Fake Rest API service to get a list of books in XML (the monolith's true format). This request takes ~400ms and the response body is 100KB in size. Perfect use case as this gives us enough latency and cache usage on disk to find out if and when using EFS as a disk cache helps.

# app/controller/application_controller.rb

class ApplicationController < ActionController::Base

def index

id = (1..30).to_a.sample

key = "api/#{id}"

url = "https://fakerestapi.azurewebsites.net/api/Books"

Rails.cache.fetch(key) { Faraday.get(url).body }

end

end

Edit the AWS SAM YAML file using the example below. Information needed can be found in the EFS section of the AWS Console by clicking on your newly-created file system.

- Add this under the

Propertiessection of yourAWS::Serverless::Functionresource. - Under the

FileSystemConfigsconfig forArn, replace the following:-

123456789012with your AWS Account ID. -

fs-12345678with your subdomain. See "DNS name" in Console.

-

- Replace the

SubnetIdsandSecurityGroupIdswith your own. See "File system access" in Console.

# template.yaml

Resources:

RailsLambda:

Type: AWS::Serverless::Function

Properties:

# ...

Policies:

- AmazonElasticFileSystemClientFullAccess

- AWSLambdaVPCAccessExecutionRole

FileSystemConfigs:

- Arn: arn:aws:elasticfilesystem:us-east-1:123456789012:access-point/fs-12345678

LocalMountPath: /mnt/sandbox

VpcConfig:

SubnetIds:

- subnet-1ae4e78c3f32147b2

- subnet-029c281a81822b209

SecurityGroupIds:

- sg-1fd20e31e93f646b9

Lastly don't forget to configure Rails to use the :file_store for the cache backend. All file systems will be under the /mnt directory.

# config/environments/production.rb

config.cache_store = :file_store , "/mnt/sandbox"

The Results

Below are some apache bm metrics. For each we are making 1000 requests with a concurrency level set to 10. To avoid measuring cold starts, we run our benchmarks twice after each deploy and present the data from the second. Those familiar with AWS Lambda may remember a time when a VpcConfig incurred a substantial cold start. I'm happy to report that the VPC Networking Improvements announced last year are indeed real. General measurements of the cold starts were nearly identical with and without a VPC config.

API Latency/Size: ~400ms/100KB

56.556 seconds - No Cache Baseline

26.679 seconds - FileStore Cache w/EFS Provisioned (1 MiB/s) $6.00/month

28.726 seconds - FileStore Cache w/EFS Provisioned (4 MiB/s) $24.00/month

27.306 seconds - FileStore Cache w/EFS Provisioned (8 MiB/s) $48.00/month

API Latency/Size: ~100ms/5KB

32.796 seconds - No Cache Baseline

29.175 seconds - FileStore Cache w/EFS Provisioned (8 MiB/s) $48.00/month

29.598 seconds - FileStore Cache w/EFS Provisioned (16 MiB/s) $96.00/month

As you can see we did another test which simulated a ~100ms API response latency with 5KB of data and with more EFS throughput. In both we can see there is a disk read time tradeoff at play here. So if you do use EFS as some form of distributed cache, keep this in mind and configure your provisioned throughput as needed. Lastly, EFS can be configured with a lifecycle policy. So if you want to treat it as an LRU (least recently used) cache, maybe set it to something like "7 days since last access".

🤯 File System Deploys

Is your application so monolithic that it exceeds Lambda's 250MB unzipped deployment package size and yet somehow still qualifies as worthy of the Lambdalith title? Asked another way: could we "deploy" our application to EFS and have Lambda boot our Rails application from there? Let's find out!

If you are unfamiliar with how Lamby works, we basically load your Rack/Rails application from a single app.rb file at the root of your project/deployment package, like the one below. However, in this example, we have changed the relative requires to absolute paths pointing to our EFS mount. Will this work and if so, how well?

ENV['RAILS_SERVE_STATIC_FILES'] = '1'

require '/mnt/sandbox/config/boot'

require 'dotenv' ; Dotenv.load "/mnt/sandbox/.env.#{ENV['RAILS_ENV']}"

require 'lamby'

require '/mnt/sandbox/config/application'

require '/mnt/sandbox/config/environment'

$app = Rack::Builder.new { run Rails.application }.to_app

def lambda_handler(event:, context:)

Lamby.handler $app, event, context, rack: :http

end

Setup App & EFS

We are going to use the Lamby Quick Start guide again to generate a new Rails app to Lambda. Name the application disk_deploy and go through the entire process including the bin/deploy task. Please make sure to add the Policies from the YAML Rails FileStore Cache above.

We are going to reuse this Lambda's execution role and deploy artifacts located in the project's .aws-sam directory which is created after the deploy. The files there will be synced to a new EFS volume and used via a fresh "from scratch" Lambda whose only code is the few lines in the example above.

Sync Data with EFS

So, how do we get our application's files on EFS? Luckily AWS has yet another product aptly named AWS DataSync which can move data around to/from various location types. I decided to use DataSync's S3 to EFS' built in NFS support.

If you are new to DataSync, setting it up can be a little daunting. Thankfully fellow AWS Hero Namrata Shah made a YouTube video titled DataSync Demo: Copy data from S3 to EFS which was absolutely perfect! Assuming you have your VPC all set up from the test above, maybe start somewhere in the middle. Here are some critical points ⚠️ to follow:

- The S3 Source Location has an empty "Folder" prefix.

- The NFS Destination Location has a path matching the EFS access point. Ex:

/sandbox. - Make sure the "Copy ownership" task setting is OFF.

- Make sure the "Copy permissions" task setting is OFF.

- Make sure the "Keep deleted files" task setting is OFF.

- Make sure the "Overwrite files" task setting is ON.

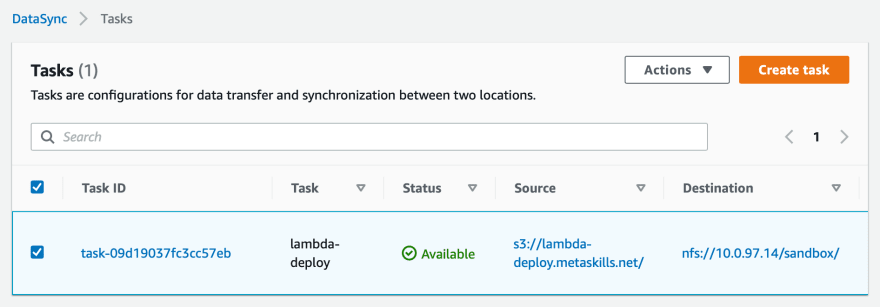

When completed, your DataSync task should look something like the image above. Starting the task to copy files from S3 to EFS is a few short CLI commands which can be run in the disk_deploy project.

$ cd .aws-sam/build/DiskDeployLambda

$ aws s3 sync . s3://lambda-deploy.metaskills.net/sandbox --delete

$ aws datasync start-task-execution \

--task-arn arn:aws:datasync:$AWS_REGION:$AWS_ACCOUNT_ID:task/task-09d19037fc3cc57eb

Please take note that we are syncing to an S3 prefix that matches our EFS access point. The ARN above is an example and you can replace the account ID and task name with your own. You could also use the aws datasync list-tasks CLI command to get your ARN too.

Lambda From Scratch

Building a Lambda from scratch is pretty straight forward. Here are the basic steps. Please remember we are reusing the execution role from the disk_deploy app which must have the AmazonElasticFileSystemClientFullAccess and AWSLambdaVPCAccessExecutionRole managed policies added prior to its deploy. Our from-scratch Lambda will not be able to use a VPC or File System without these policies in the execution role.

- Lambda Create Function -> Author from scratch

- Name:

efs-deploy-test, - Runtime:

Ruby 2.7 - Permissions -> Existing Role: Use role from

disk_deployapp.

- Name:

- Function Code Section

- Use modified

app.rbabove with absolute path require statements using/mnt/sandbox.

- Use modified

- Environment Variables Section -> Edit

- Click "Add environment variable"

- Key:

RAILS_ENVValue:production

- Basic Settings -> Edit

- Timeout:

60 seconds - Memory:

512

- Timeout:

- VPC Section -> Edit

- Custom VPC: Use Subnets & Security Group from above.

- File System Section -> Add file system

- Use EFS file system and access point from above.

- Local mount path:

/mnt/sandbox. Same path as EFS' access point path.

We do not need a true API Gateway event to test this. We can simply add this test event and trigger our Lambda from the console. If all goes well you should see a full response with headers and an HTML response for Lamby's getting started page.

The Results

First, this actually worked! Technically our Lambda is only a few bytes of code and all the application code is on the EFS volume. So does this allow everyone to break the bounds of Lambda's 250MB limit? Maybe. Maybe not. 🤷♂️

During this test I found that cold start times were drastically increased. Our application which is about 42MB uncompressed will take ~3 seconds to boot when deployed normally. However, using EFS, boot times changed to ~30 seconds!

I explored EFS provisioned throughput from 16 MiB/s to 48 MiB/s with no discernible difference. I guess at the end of the day you are going to have to benchmark EFS in different use cases to make a decision on what is right for you. I myself will certainly look forward to other's posts on EFS performance benchmarks.

Finally, here are some quickly-formed thoughts I had on DataSync while working on this experiment:

- Launching a DataSync task takes a few minutes. Why? Maybe it was the

t2.microI used? If a CI/CD pipeline used this technique, I would have to explore using events to coordinate timing issues. - It would be nice if DataSync supported using Lambda as agents vs EC2. Feels like this fits the task model better. Coming from a Lambda perspective, I did not enjoy setting up an EC2 instance. UPDATE: Michael Hart released cmda: A new command line tool for copying files to/from AWS Lambda, especially useful with EFS

- During the test I experienced out-of-sync file data and had to sync an empty source to fix it. Maybe I need to learn more about

aws s3 syncoptions besides--delete. Or maybe my DataSync file management task needed tweaking?

🎓 In All Seriousness

I welcome the addition of Lambda File Systems with EFS! We have already found use cases at Custom Ink for new workloads to move to Lambda based on this new capability. I hope these weird ideas above get your brain thinking on how Lambda File Systems can work for you. For example:

- Data processing

- Large Packages/Gems vs Layers

- Crazy EC2 File System Coordination

- Administrative Backup Tasks

Your imagination is the limit here. Explore, deliver value, and have fun!

Resources

As always, thanks for reading! Below are some links for reference. If you have any ideas or feedback, leave a comment. I'd love to hear from you. Also, please remember to delete any AWS Resources that you are not using when playing around today. No need to pay for anything you are not using!

- A Shared File System for Your Lambda Functions

- Lamby: Simple Rails & AWS Lambda Integration

- cmda: A new command line tool for copying files to/from AWS Lambda, especially useful with EFS

- Amazon Elastic File System

- Namrata Shah's DataSync Demo: Copy data from S3 to EFS

- AWS DataSync

- Sample API Gateway HTTP API Version 1 Event

With regard to caching, here are some other AWS Lambda cache posts you may find interesting.

Top comments (0)