Today’s business relies heavily on data-driven decisions. The importance of leveraging data lies in its ability to provide valuable insights into consumer behavior, market trends, and operational efficiency. Advanced technologies such as artificial intelligence, machine learning, and big data analytics enable the collection, processing, and interpretation of vast amounts of data at unprecedented speeds. This not only enhances the accuracy of decision-making but also empowers businesses to stay agile and responsive in a rapidly changing environment.

We can apply many different solutions to get more from our data. However, all of them rely on the database. To build modern observability, reporting, business intelligence, or machine learning, we need to have well-maintained data sources that hold all we need and can run queries fast. Therefore, we need to master database maintenance to make sure that our databases are in shape and can keep up with the increased load and more complex database tasks.

Database management is not easy. We need good database observability solutions that monitor database health and can save us from various issues. Also, we need to work on database optimizations, whether it’s through our database directly (like some intricate PostgreSQL tuning) or by changing the architecture of our applications.

In this article, we’re going to see how database bugs can negatively affect our business and how we can protect ourselves from dire consequences.

Recommended reading: Observability vs Monitoring: Key Differences & How They Pair

Database Bugs and Their Impact on Your Business

Slow databases affect your business in many ways. First and foremost, they can take your business down and cause an outage. Downtime disrupts regular business operations, resulting in immediate financial losses and potential damage to the organization's reputation. Additionally, lost productivity is a significant direct cost as employees may struggle to access essential information, leading to inefficiencies and delays in completing tasks.

There are also indirect costs that arise from compromised decision-making processes, as inaccurate or outdated data can mislead strategic planning and hinder the ability to make informed choices. When you base your decisions on invalid data, you get invalid decisions.

Yet another issue is around productivity. You don’t want to waste time on fixing the database. You prefer to use the time of your developers to build new features and move the business forward. Fixing the database is neither of these.

A well-maintained and optimized database serves as the backbone of organizational data management, ensuring the accuracy, reliability, and accessibility of crucial information. A healthy database not only facilitates efficient data retrieval but also enhances system performance, reducing the risk of downtime and operational disruptions. In essence, database health is a linchpin for operational excellence and a key determinant of a company's ability to outperform competitors in today's fast-paced and data-centric business environment.

Recommended reading: Troubleshooting Database Issues Like a Pro

We can face many bugs when dealing with databases. Some of them may be attributed to clients having the wrong configuration (like SQLSTATE 08006, PostgreSQL F0000, or MySQL 1087), some others may indicate problems with the database schema (like PostgreSQL 3F000or MySQL 182). No matter what the issues are, they can lead to serious business problems. GitLab faced a couple of outages because of a slow database. Sony was breached multiple times. Heroku was failing because of a data type mismatch. Many other issues could have happened just like the Halloween Problem. You simply need to keep your database in shape.

Routine Database Tasks for Optimal Performance

Let’s go through the typical routines you should follow to keep your databases healthy. This is by no means an exhaustive list. Depending on your business, you may need to do more.

Vacuuming

Defragmenting indexes

Refreshing statistics

Taking a backup

Updating your database

Checking the distribution of values

Revisiting indexes usage

Checking logs for attacks

Looking for slow queries

Partitioning your data

Verifying a backup

As mentioned above, there are other tasks you should perform depending on your business. Do not let your database break. Let’s now see the details.

Vacuuming

In normal PostgreSQL operation, tuples that are deleted or obsoleted by an update are not physically removed from their table. We need to remove them manually. This is called vacuuming.

Vacuuming is important because dead tuples slow the queries down. When there are dead tuples, the database engine needs to load more data from the drive which directly impacts the performance.

To vacuum, we need to run the command VACUUM which cleans all tables and indexes the user can access. This should be executed by the superuser.

Defragmenting Indexes

Indexes may get fragmented when you add and remove data. Since indexes need to be ordered, they can’t easily insert rows in the middle. They either need to allocate pages outside of the regular space, or they need to be reorganized. The same goes for deletion - if you delete rows, then indexes may have holes.

Fragmentation may slow down your queries and you want to avoid it.

You can use pgstattuple to get the metric around fragmentation. To fix the problem, you need to use REINDEX.

Refreshing Statistics

Outdated statistics negatively affect the queries. The database engine gets misled about the content of the tables and may not use indexes appropriately or pick inefficient execution plans.

To refresh statistics, you use the ANALYZE command.

Taking a Backup

Your database is the most precious resource of your business. If you lose data, you lose your clients and your company goes down. Unfortunately, we are never safe. People make mistakes, systems crash, and infrastructure providers fail their SLAs. We always need to take backups and be prepared to restore our systems.

Take your backups periodically. Read our guide about backups and do not lose your data.

Updating Your Database

It’s important to keep your database system up to date. New versions of database engines bring more features, fix bugs, and improve performance. Therefore, stay up to date.

There are many ways to update the database depending on how you host it. If you keep it in the cloud, then it’s probable that your cloud provider handles updates automatically. If you host it on-premise, then you need to take care of updating the operating system, the database, and everything in between. No matter how it’s done, make sure that you don’t fall behind because outdated systems slow you down and pose security risks.

Checking the Distribution of Values

It’s always a good idea to understand what is in your database. As a data expert, you should understand the business meaning of your data and be able to recognize the values that your business depends on. You should recognize and easily handle things like identifiers, abbreviations, or specific customer codes.

However, things change over time. Your database gets bigger and bigger, you have millions of rows and it’s inevitable that some of the records will be missing data. Empty values can heavily skew your queries, break your machine-learning solutions, or propagate to external systems. The worst is these things may go unnoticed for months. To avoid that, routinely check the distribution of your data. Have some understanding of the business and come up with legit rules like “most of the invoices should have the delivery date”. Next, check these rules and monitor if they significantly change over time. When you introduce a bug, you’ll quickly see the distributions changing which indicates issues with your code or data.

To ensure data integrity and avoid issues, routinely use Metis to check your data's distribution. Monitor critical metrics like empty values and adherence to business rules, such as invoice delivery dates, to quickly identify and address anomalies.

Revisiting Indexes Usage

Indexes can speed up your queries. However, not all queries can benefit from the indexes. Queries must extract proper columns or filter data in specific ways to benefit from indexing.

A typical problem with indexes is that they become unused over time. You change your application, you notice performance issues, you add an index, and then all is good. However, six months later someone else changes the application in a way that the query stops using the index. If the performance is acceptable, then you end up with an index that is not used and nobody notices the problem.

You need to review your indexes periodically and check if they are used. Unused indexes decrease the performance because every data modification needs to update both the table and the index. Drop unused indexes.

Checking Logs for Attacks

Your database may be attacked. People may try to brute force passwords or look for SQL injection issues. You need to prevent that from happening.

To keep your database safe, you need to review the logs. Go through them periodically and look for unsuccessful connections, weird queries with syntax errors, IP addresses of clients, or authentication issues. All these things may indicate that your database is under attack.

Do not think that you can be safe. Automated robots scan the networks and look for well-known ports. Your database will be attacked sooner or later.

Looking for Slow Queries

Even if your database works well, you may still have issues that go unnoticed. Slow queries may still be fast enough even though they are inefficient. Reducing the execution time decreases your costs and lets you scale better.

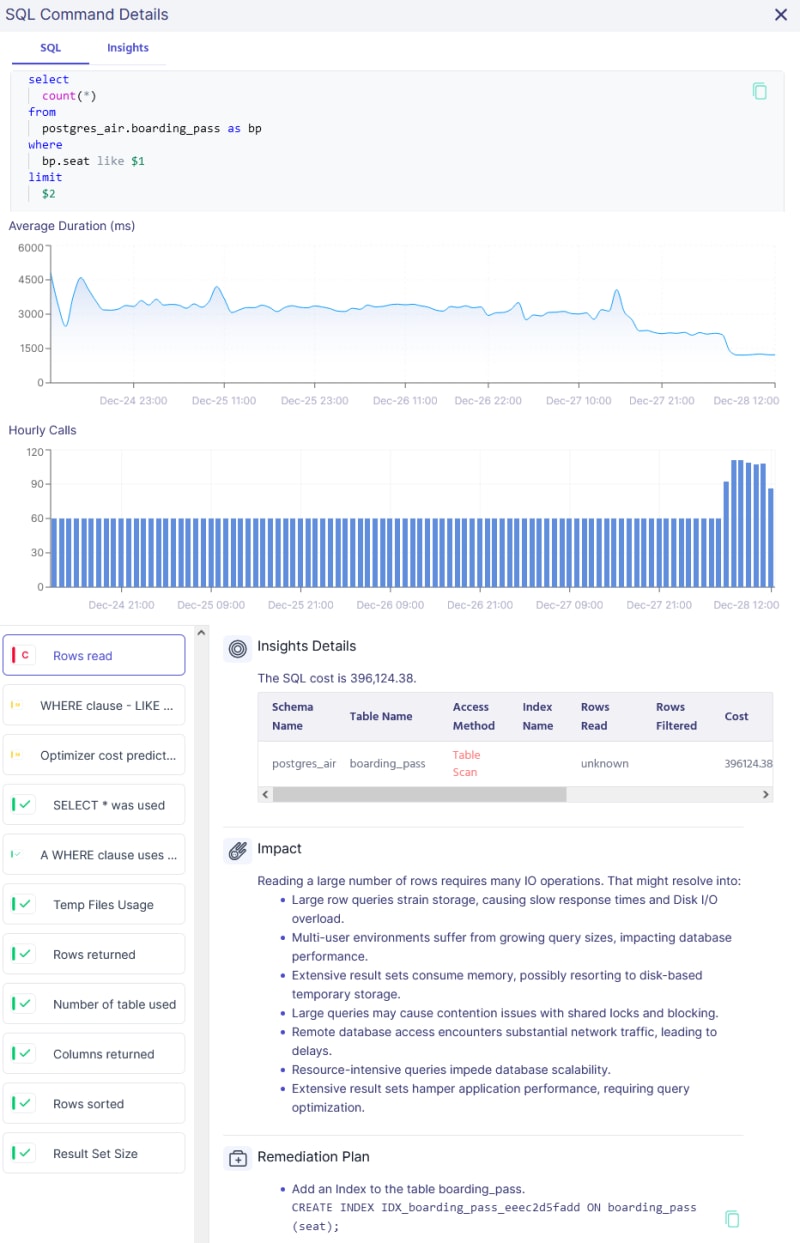

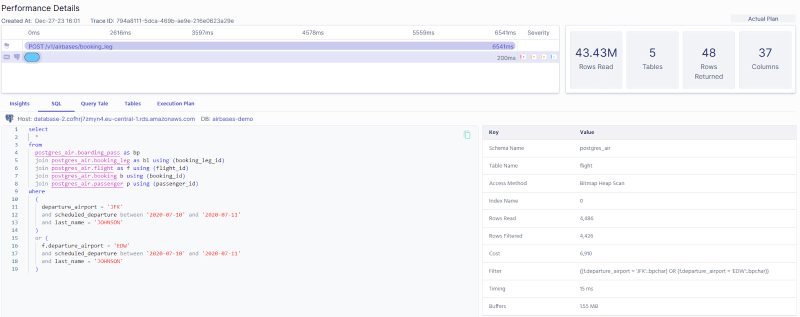

You should routinely check your logs and look for queries that execute for more than 50 milliseconds. Check their execution plans and verify if they are optimal. Fix them if needed.

Partitioning Your Data

Your business grows over time and it’s common that you don’t need historical entities to run your daily operations. However, these entities may still be scanned by your database engine which doesn’t know that they are old and irrelevant for business as usual.

Look for big tables and partition them based on time. Read our guide to learn how to partition efficiently.

Verifying a Backup

It’s not enough to take backups. You need to verify the backups as well. It’s often the case that we take backups but we don’t check them, and then they are unusable right when we need them.

Ideally, check every backup. Try recreating the database on the side and see if everything works well. This can be completely automated and protect you from catastrophic issues when the time comes.

Introducing Metis: Your Automated Solution to Database Health

We shouldn’t do the routine tasks manually. First, it takes time and resources. Second, it’s error-prone. We need to automate as much as possible. Metis can do that for you.

Metis focuses on three aspects: preventing the bad code from reaching production, providing monitoring and observability for your databases, and automatically troubleshooting the issues. For us, monitoring and observability are what we need to automate the routines that keep our database in shape.

Metis can analyze your queries and look for slow queries automatically. It can check the execution plans, detect anomalies, and suggest how to improve the performance. So don’t let a poorly-kept database ruin your business, let Metis safeguard it for you!

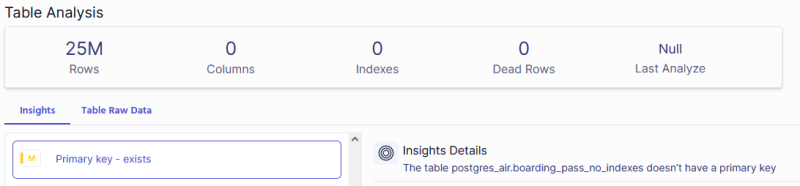

Metis can analyze your schemas, check statistics, and look for fragmentation or dead tuples. This way you don’t need to run your tasks manually. Metis alerts you when things require your attention.

Finally, Metis can track all the interactions with the database to show how they can be optimized. This streamlines the development and lets you avoid rollbacks.

Metis turns your guessing into a data-driven approach. It gives you the tools to develop your business with confidence and based on reliable data points. Metis automates tedious tasks, keeps your databases in shape, and lets you focus on your business. Thanks to database-oriented metrics and automated reasoning, Metis can provide a consistent and coherent story of what happened and why your database may be slowing down.

Making the Case for Investment in Metis

The management may be hesitant to adopt a new tool. There may be reasons to avoid that, especially in mature organizations that already have tools and processes in place. Let’s see that investing in Metis still pays off.

Your Monitoring Is Not Enough

Existing monitoring solutions are just not enough. They focus on generic metrics instead of database-specific ones. Tools like Datadog, New Relic, AppDynamics, or Dynatrace focus on raw infrastructure metrics that are easy to capture but don’t show the full picture. It’s not enough to show that the CPU spikes. We need to understand the reason and how moving pieces interoperate to cause trouble.

Similarly, monitoring tools swamp you with too many metrics and charts. You need to manually slice and dice them to find the root causes. There is no reasoning that can explain the coherent story.

Metis addresses these cases. Metis brings database-oriented metrics and can connect the dots from many places. Effectively, Metis can improve the MTTR and MTBF metrics. Developers can reliably work with the databases, and the operations team is released from manual tasks.

You Can’t Use Old Solutions For The New World

The world has changed significantly. We have many databases, multi-tenant applications, and hundreds of clusters, and we deploy changes many times a day. We learned DevOps and changed our deployment procedures significantly in the last few years.

However, our maintenance procedures didn’t change much. We still rely on monitoring tools (and we already know they are not enough), we manually debug slow queries, and we still lack understanding of the databases. Just like we changed the way we build and deploy applications, we need to change the way we own and maintain databases.

Metis does exactly that. Metis turns things around by detecting anomalies, automating troubleshooting, and alerting you when things really need your attention. There is no need to look after databases when you have Metis integrated. Metis is like a good maintenance team of DBAs. It can fix issues before they even manifest themselves.

Developers Don’t Have Tools For Maintaining Databases

Developers tend to claim that they test their solutions whereas in fact they don’t. They focus on the correctness of the data, but they ignore the performance and implications over time. This leads to long deployments, rollbacks, and reluctance to deploy changes.

Metis can help with that. Developers can own their databases and can reliably develop, maintain, and fix them. This effectively reduces the work of developers! While they own another scope (database maintenance), they have less work to be done because they can do that on their own. They don’t need to interact with other teams, they don’t need to wait for approvals or logs, and they don’t need to communicate. They can own everything end-to-end. What’s more, we can reduce the number of teams or unlock people to do more.

Conclusion

Neglecting database maintenance is a recipe for failure. You can’t let your database go down because it will bring down your whole business. There are many tasks that you should perform periodically to make sure that your database runs well.

Most of these tasks can be automated. Metis does exactly that. Metis can protect your databases from bad code modifications, slow queries, inefficient configurations, and breaking changes. You can deploy Metis in no time and get proactively notified when there are issues.

Take the next step in safeguarding your company. Use Metis and never go blind again.

FAQs

How can database optimization improve my company's bottom line?

Many companies lost their clients due to database issues. GitLab, Sony, or Heroku are just some of the examples. If your database is slow or unreliable, then your clients will move to other companies and you will get out of business.

What are the most common database bugs and how can they be prevented?

Most issues are around invalid configuration, bad data types, lack of indexes, or inefficient database schema. They can all be prevented by routinely checking how your database performs and fixing issues as you go.

What routine database tasks should be prioritized for optimal performance?

You should vacuum and defragment your databases. You should keep it up to date and install all the updates. You should refresh statistics, look for slow queries, and revisit the usage of the indexes. Finally, you should backup your database and always make sure that the backups are correct.

Top comments (0)