Introduction

Welcome to part one of our new series on Firebase Extensions. Here we explore what we can gain from using these features and how to fully optimize usage.

What is Firestore?

Firestore is a real-time, highly scalable NoSQL document database. It is most often found on the client-side web and mobile applications.

Data management within this type of database allows users to be fast-paced and flexible when editing data.

This provides developers with a flexible approach to storing data as well as easy integration when using one of the language SDKs.

Why BigQuery?

BigQuery can be considered the opposite of Firestore from a data structured point of view. Using an advanced querying engine, large complex datasets can be found and organized with minimal effort.

Using a form of SQL(structured-query-language), BigQuery allows data to be queried with minimal time between queries.

The defined relationships between tables mean data can be formed between multiple

Google Data Studio

The final piece of the puzzle. This application allows users to visualize data through a series of tools.

We have all experienced a time when simply showing data on a screen is simply not enough - charts, diagrams, and other advanced visualizations have provided an easier and more concise way to view and understand complex data.

As an advanced reporting tool, Google Data Studio is ideal for generating easy-to-present information to any audience. One of the main strengths of this tool is the range of databases that can be connected to import data and this includes BigQuery data!

What are Firebase Extensions?

Extensions allow well-tested pre-defined functions to be deployed and used in conjunction with the Firebase ecosystem like Auth, Firestore, Cloud Storage, etc.

Designed to increase productivity, a suite of features is available to improve automation through highly configurable functions.

Authentication, Database, and Cloud Storage triggers are just some of the functions used to enhance database data.

The plugins allow custom code to be built on top of Google product-based events, allowing many forms of integration (local or third party) or to even enriching Firestore data.

You can read more about triggers here:

Putting it all together, via the extension

Combining the above technologies would normally require a lot of manual setup and configuration, such as manually creating tables, and views and importing Firestore data.

The Firestore BigQuery extension does all of the heavy liftings and ensures that data is consistently synchronized between Firestore and BigQuery.

To get started, follow these steps:

- Install the extension here.

- Create some data on Firestore

- Query data via big query

- Present your data all fancy in Google Data Studio

Firestore-BigQuery-Export

Why an extension? Manually importing data to BigQuery can be a tedious task and even more so when data is required to be synchronized in real-time between two databases. This is where the extension comes in!

Using well-tested and designed cloud functions, the extension utilizes triggers to update specified BigQuery tables with data synced from Firestore!

Basic configuration

Configuration options make this easy to choose which data will be copied to BigQuery, with the use of wildcards as a way of choosing your document data, for example:

chatrooms/{chatid}/message

The above configuration will sync all chatroom sub-collections along with the document contained in the message.

Syncing to Big Query

One aspect to note is that the relevant BigQuery tables are not created on the installation of the extension. Table development is organized when the first record sync is recorded, on Firestore write the extension by default will create a table and a view specific to the configuration entered when setting up the extension.

raw_changelog

The change-log is a standard SQL table that contains all of the updates that have occurred when syncing from Firestore. Following a pre-defined schema this will provide:

document_id: The document id of the synchronized Firestore document upon creation.

event_id: The id of the document that triggered the update.

operation: What type of Firestore CRUD event triggered the update. This can be INSERT, UPDATE, DELETE, or IMPORT.

document_name: This is the Firestore path of the updated document.

data: A plain text JSON representation of the Firestore document.

timestamp: The Firestore timestamp at which the event took place.

raw_latest

This is a predefined BigQuery View that selects the latest unique changes from the changelog table.

Custom Schemas

The raw_latest view provided by the extension provides a basic view of the imported data, however, this does not mean that more advanced views can be created to show the data.

All Firestore data types are covered in the toolkit which means you can complex data structures.

{

"fields": [

{

"name": "name",

"type": "string"

},

{

"name": "favorite_numbers",

"type": "array"

},

{

"name": "last_login",

"type": "timestamp"

},

{

"name": "last_location",

"type": "geopoint"

},

{

"name": "geo_point",

"type": "stringified_map"

},

{

"fields": [

{

"name": "name",

"type": "string"

}

],

"name": "friends",

"type": "map"

}

]

}

Simply, define a test schema as above and run the following command:

$ npx @firebaseextensions/fs-bq-schema-views \

--non-interactive \

--project=${param:PROJECT_ID} \

--dataset=${param:DATASET_ID} \

--table-name-prefix=${param:TABLE_ID} \

--schema-files=./test_schema.json

An advanced view modeled on your imported data will now exist in BigQuery!

Backfilling Data

As mentioned previously, table and view creation occur on the first record change following the installation of the extension.

So this provides an obvious question, how do I import previously existing data? We have an import script for that!

npx @firebaseextensions/fs-bq-import-collection

Similar to the generate-schema tool, this can be run interactively and also in a non-interactive. The following information is required to run an import:

-

${PROJECT_ID}: the ID of the Firebase project in which you installed the extension -

${COLLECTION_PATH}: the collection path that you specified during extension installation -

${COLLECTION_GROUP_QUERY}: uses acollectionGroupquery if this value is"true". For any other value, acollectionquery is used. -

${DATASET_ID}: the ID that you specified for your dataset during extension installation

For larger datasets, this tool also has a multi-threaded option to improve performance and memory! Use the -multi-threaded flag for non-interactive or select to run multiple threads when prompted interactively.

Running Multiple Instances

A question that is asked quite often with extensions is how to handle different configurations once an extension has been installed. The answer here is easy, install another instance!

Firebase extensions will allow more than one instance of an extension to be installed, they will simply create another set of resources and operate under another ID.

If for example a wildcard query, does not cover all the required collections, install another extension and point the configuration to the required collections.

Visualizing the results

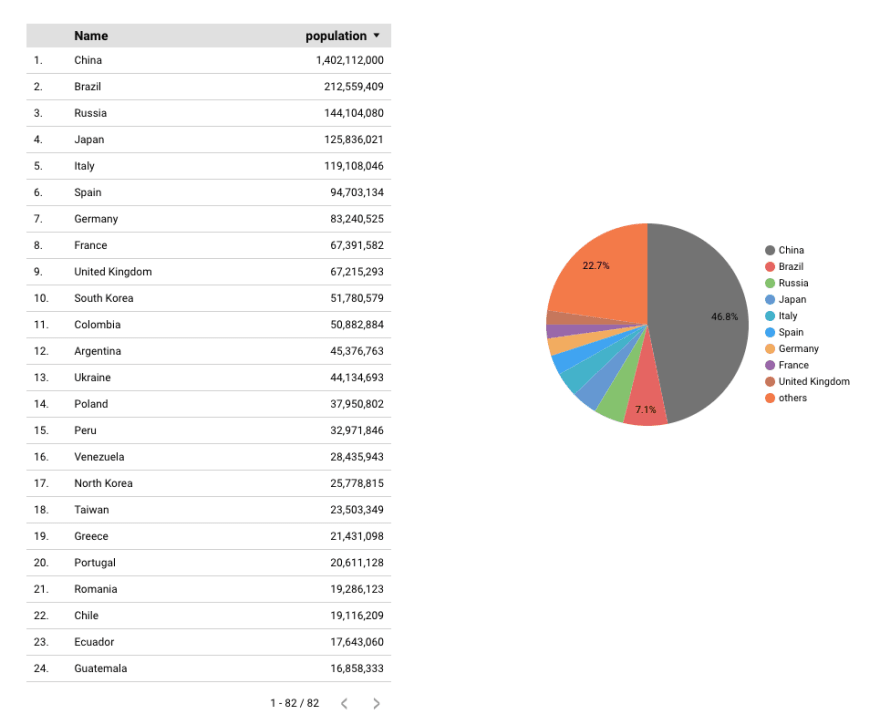

For this example, we are going to demonstrate, the wildcard path using the information on countries around the world.

To begin, with an extension instance will be required with the minimum following configuration:

Collection path: regions/{regionId}/countries

Dataset name: regions

Table name: countries

Wildcards: true

Firestore data can be added using the sample script located here.

Now we have data synced from Firestore to BigQuery, we can use reporting tools to provide a clearer view of the information.

Navigate to https://datastudio.google.com/reporting/ to create a new report.

(Sign up if prompted.)

Select BigQuery as your data source and authorize if required.

In this example, we are going to use a Custom Query to allow specific data to appear in our dataset.

Select:

CustomQuery ⇒ {Billing ProjectId} and enter the following query...

SELECT document_id as Name, cast(JSON_VALUE(c.data, "$.population") as INTEGER) as Population

FROM `{my-project-id}.regions.countries_raw_changelog` c

This will present the queried dataset that we can now use to visualise the data.

To include the relevant field, you can customize the columns. In this case, select metric and choose population:

Once the dataset is ready, we can now create a pie chart.

Choose Add a chart, select Pie Chart, and place it onto your editor canvas.

Finally, repeat the previous steps. Try updating the Metric data source on the Pie chart to show population if the chart does not automatically include the correct metric.

Tada! 🎉

We can now visualize our Firestore data through BigQuery and DataStudio.

New Features

The team behind Firebase Extensions has been hard at work ensuring that the cutting-edge features from BigQuery have now been adopted as part of the extension. From the latest release, you can now take advantage of those features when configuring your extension.

Wildcards

By default, data synchronized from Firestore is stored in a single column called “Data”. This will store all data based on a pre-configured collection path, for example…

“categories/{category}/products”

Before this feature, engineers would struggle to associate data in a query without knowing the wildcard path of how the data was synchronized.

Now you can query with the following:

SELECT * FROM Countries c WHERE c.wildcard.region = "europe".

So let's look at an example.

As a developer, I have been asked if I can provide a report on countries around the world! I have been provided a handy API for providing the data for my database https://restcountries.com/#api-endpoints-v3-all.

After scanning the API it seems reasonable that the collections and sub-collections would be divided into the following categories.

Regions (Collection)

Countries (SubCollection)

This provides me with the following wildcard path for my BigQuery installation:

regions/{regionId}/countries

Once installed. I can give the new parameters a test! Run the following query to get all countries that are currently in South America.

SELECT document_id, JSON_VALUE(c.path_params, "$.regionId")

FROM `extensions-testing.countries_example.regions_raw_changelog` c

WHERE JSON_VALUE(c.path_params, "$.regionId") = "South America"

Partitioning

As databases continue to grow, query performance and costs can often be the first to suffer when it comes to querying data. One solution to this is Table partitioning.

To improve performance BigQuery will divide table content based on the specific column data used in partitioning. Smaller and specific tables will mean faster querying and better results. Additionally, this will reduce costs as less data read will mean less cost-per-read for the owner of the project!

There are three types of partitioning offered by BigQuery

- Time-unit allows a user to partition on three different data types, specifically TIMESTAMP, DATE, and DATETIME.

- Ingestion Time based on the TIMESTAMP value of when the data was created

- Integer Range specifically partitions based on an integer value.

The BigQuery Firebase extension will automatically create the column and data type partitioning based on which configuration is used when installing the extension.

Users can then query data based on the specified partitioning field.

SELECT * FROM `extensions-testing.20220309_6.20220309_6_raw_changelog`

WHERE created = "2022-04-18"

LIMIT 1000

Clustering

Clustering is a feature that organizes table data by sorting specified columns and is an ideal solution for reducing the number of reads made on a table through a query.

When defining clustering, multiple columns can be defined allowing specific ordering of records to minimize the number of unnecessary reads on a query.

Through the extension, adding clustering is as simple as adding a comma-separated list of the columns you would like to cluster by - this is then applied to any new table.

Big Query will then take care of the rest!

Cross Project Support

Previously Firestore and Big Query databases were synchronized based on the Firestore project name.

The extension now allows support for choosing which Big Query project is used for synchronization.

A typical scenario for this would be to install multiple instances of the extension to share the data across multiple (separate) instances without having to support multiple Firestore instances.

Transform Function

As some data may require additional processing, developers are offered the opportunity to intercept the payload and modify it as needed.

When data from Firestore has been created or modified, the entire payload is copied to the BigQuery table under the data column in the following format.

{

data: [{

insertId: int;

json: {

timestamp: int;

event_id: int;

document_name: string;

document_id: int;

operation: ChangeType; //CREATE, UPDATE, DELETE or IMPORT

data: string;

},

}]

}

By providing a custom URL, users can receive the original payload and modify it as required. The returned data from the response is then saved into the BigQuery table.

You can access example functions provided by Invertase here.

You may follow us on Twitter, Linkedin, and Youtube to get our latest updates.

PS: This post was initially written by Darren Ackers, the lead developer at invertase.io. You may find original content at https://invertase.link/koCT

Top comments (0)