Note: Originally posted by my colleague on the Mux blog.

One of my first projects as an engineer at Mux was to add a bit of validation logic to stream.new to block uploads of videos with extremely long durations (1+ hours). stream.new is a simple web application the team built both to serve as an example application for users and to help us dogfood our own product. The site allows users to upload an input video file to Mux, where it is processed and encoded, then made easily streamable and shareable (you can also record directly from your camera or screen -- check it out!). Adding a client-side duration check is a commonly requested feature: imagine building an application where users upload videos up to a minute long (think TikTok). It would be a huge bummer to spend a bunch of time uploading a file just to be told at the end that your video was too long!

As an uninitiated beginner in the world of video, I did some preliminary research into the canonical method of determining a video file’s duration. After skimming through some disappointingly sparse search results, I posed the “simple” question in the team Slack channel. I then proceeded to do my best to follow a 45-minute conversation between six of my coworkers (with dozens of years of video experience between them) demonstrating just how non-trivial the problem actually is.

As it turns out, video file formats are a bit more complex than I had previously thought. Fun! Accordingly, in the rest of this post I’ll be doing my best to provide a beginner’s introduction into how video file formats work -- no prior video experience required.

How the sausage gets made

Videos are generally serialized into files with a container format: multiple streams of data are encoded and multiplexed (or “muxed”, if you will) together in separate containers within a single file. These files hold multiple streams of data because the visual, audio, captions, etc. components are all stored separately and tied together by a shared playback timeline. Let’s discuss one of the most common and well-known file formats, MP4.

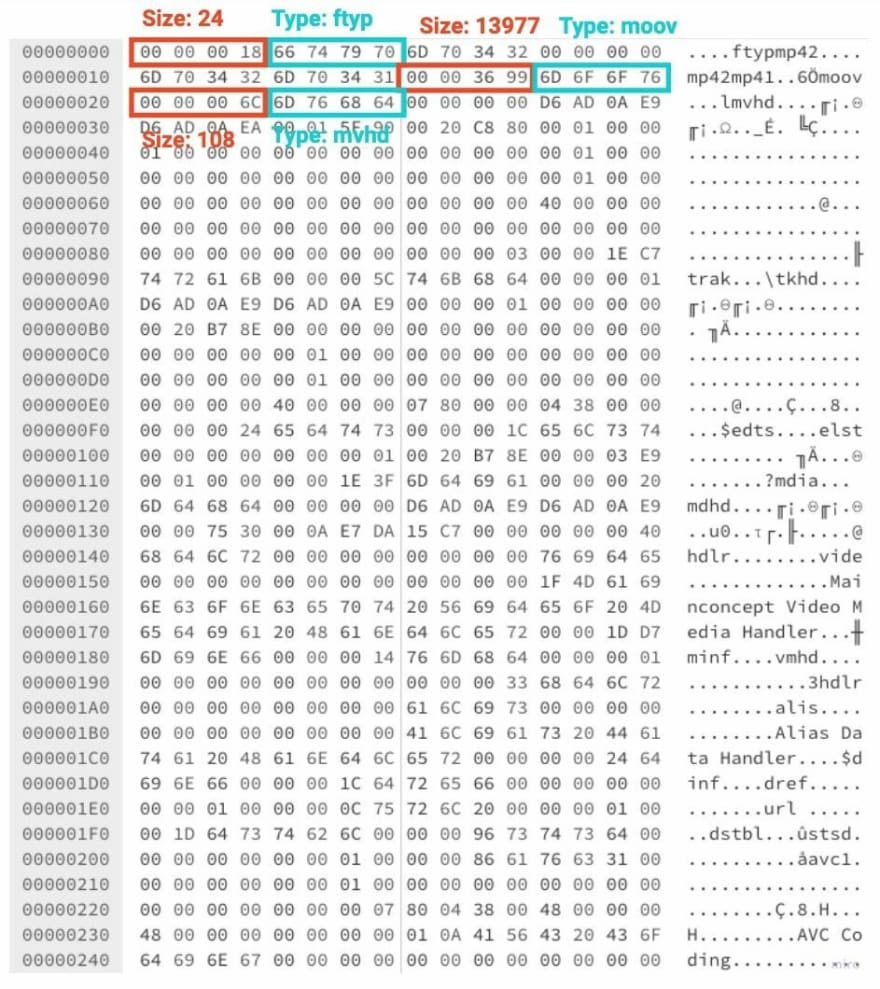

Each container is called a box (or atom) and is a serialized array of bytes formatted with a prefix that specifies (1) the box type with a canonical four character label and (2) the serialized box’s length so a parser can know how far into the box to read. Boxes are hierarchical, meaning there can be multiple boxes within a box.
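To make the box layout concrete, here is a minimal Python sketch of a box walker. It assumes the ISO base media file format header layout (a 4-byte big-endian size followed by the 4-character type, with a size of 1 signalling a 64-bit size and a size of 0 meaning "runs to the end"). The `iter_boxes` and `make_box` helpers and the toy payloads are made up for illustration; they are not taken from any real file or library.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(
    data: bytes, start: int = 0, end: Optional[int] = None
) -> Iterator[Tuple[str, int, int]]:
    """Yield (type, payload_start, box_end) for each box in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        # Header prefix: 32-bit big-endian size, then the four-character type.
        size, box_type = struct.unpack_from(">I4s", data, pos)
        header_len = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type.
            if pos + 16 > end:
                break
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header_len = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the range.
            size = end - pos
        if size < header_len or pos + size > end:
            break  # malformed or truncated box: stop rather than guess
        yield box_type.decode("latin-1"), pos + header_len, pos + size
        pos += size


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a toy box (hypothetical helper, only used for this demo)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# A tiny fake "file": the payloads are placeholders, not valid media data.
sample = (
    make_box(b"ftyp", b"isom")
    + make_box(b"moov", make_box(b"trak"))
    + make_box(b"mdat", b"\x00" * 4)
)

top_level = list(iter_boxes(sample))
top = [box_type for box_type, _, _ in top_level]

# Boxes nest: walking the payload of moov with the same function finds trak.
_, moov_start, moov_end = top_level[1]
nested = [box_type for box_type, _, _ in iter_boxes(sample, moov_start, moov_end)]

print(top, nested)
```

Because the length is part of the prefix, a parser can skip any box it doesn't care about (like a huge mdat) without reading its contents.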

So mp4s are just a collection of boxes within boxes… what do they all mean?

By convention, the first box in an mp4 file is an ftyp box. This contains some high level metadata about the file, including the types of decoders that can be used on the rest of the file. “Decoders” are code that transforms serialized data into a signal that humans can understand, while encoders do the exact opposite. (A “codec” is the pairing of the two: a coder and a decoder.)

After the ftyp box usually comes a moov box. This is where, in my opinion, things start getting more interesting. The moov box generally contains a few trak boxes within it which provide reference information for interpreting the encoded data streams. For example, in a normal MP4 there might be a trak for video (visual) and a trak for audio. In addition to describing more details about the appropriate decoders for each stream, the trak includes offset information into the rest of the file (basically serving like pointers to a video player) about where the encoded streams can be found. The actual encoded bitstreams are, in turn, contained within the following mdat (Media Data) box! So to play back a video, the player would need to first load the moov box to find the relevant offsets into the mdat box for the audio and visual streams to start decoding them for a device’s physical output.

Streaming Video

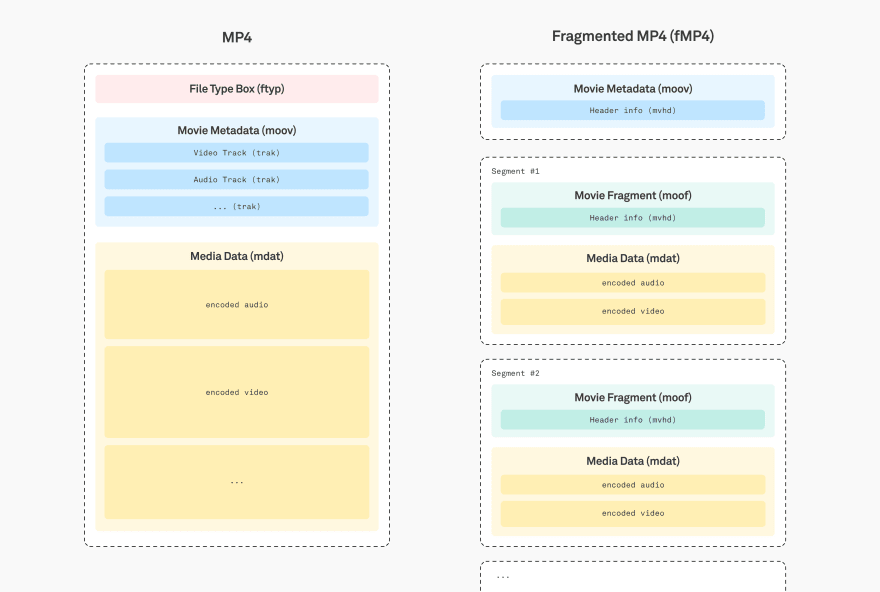

Things get fun when we start thinking about fragmented mp4s (fMP4s), which are used for streaming video. After all, if you’re serving a large video file you wouldn’t want to make viewers download the whole thing before they can start watching. Or, let’s say you want to seek to a specific location in the video (say, 11:42). If the video and audio streams were stored in two large, contiguous ranges of bytes then you would need to iterate through the whole thing to find your desired seek location. Instead, in an fMP4 file the mdat box is segmented into pieces (conceptually, think of the video being chopped up every few seconds), and a sidx box provides indexing information so a player knows which timestamps in the video correspond to which segmented mdat boxes.
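As a rough illustration of why an index helps with seeking, here is a simplified Python sketch. It assumes an index that, like a sidx box, lists each segment's duration and byte size in order. The `Segment` type, the 6-second segment length, and the byte sizes are all invented for the example. A real sidx box stores durations in timescale units and has more fields than shown here.

```python
from bisect import bisect_right
from typing import List, NamedTuple, Sequence


class Segment(NamedTuple):
    start_seconds: float
    byte_offset: int
    byte_length: int


def build_index(
    durations: Sequence[float], sizes: Sequence[int], first_offset: int
) -> List[Segment]:
    """Turn per-segment durations and sizes into cumulative start times and offsets."""
    index = []
    start, offset = 0.0, first_offset
    for duration, size in zip(durations, sizes):
        index.append(Segment(start, offset, size))
        start += duration
        offset += size
    return index


def find_segment(index: List[Segment], seek_seconds: float) -> Segment:
    """Binary-search for the segment whose time range contains seek_seconds."""
    starts = [segment.start_seconds for segment in index]
    position = bisect_right(starts, seek_seconds) - 1
    return index[max(position, 0)]


# Hypothetical stream: 200 segments, 6 seconds and 750,000 bytes each.
index = build_index([6.0] * 200, [750_000] * 200, first_offset=1_000)

# Seek to 11:42, i.e. 11 * 60 + 42 = 702 seconds.
target = find_segment(index, 11 * 60 + 42)
print(target)
```

With the byte offset and length in hand, a player can request just that range of the file instead of scanning from the beginning.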

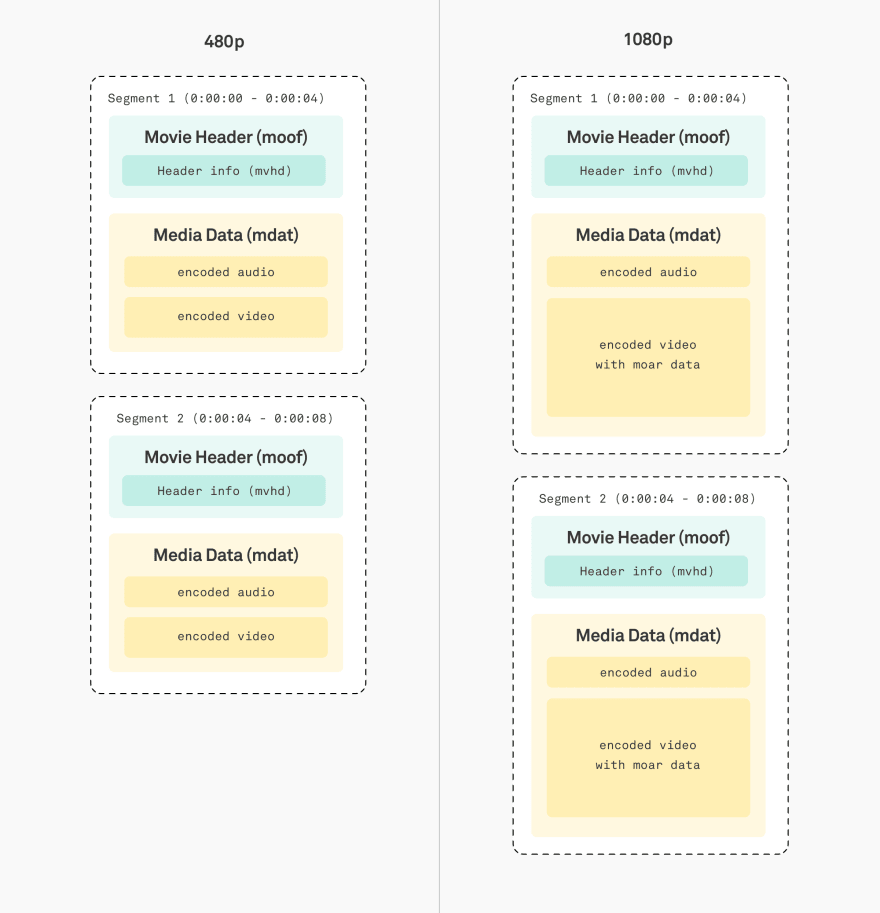

Segmentation of the mdat box also unlocks the benefit of adaptive bitrates. Since all client bandwidths and user devices are not created equal, you might want to seamlessly deliver versions of the video with a higher or lower bitrate (think 240p, 480p, 1080p…) in different situations. With segmented video files, your player can detect bandwidth changes or slowdowns and switch bitrate accordingly. The original content is encoded into multiple versions with different bitrates when it is ingested, and the player can decide which one to iteratively download during playback. For example, if your user is watching something on a mobile device and they walk out of range of wifi, then you may want to switch from 1080p to 480p rather than subject the user to a poor viewing experience when their device begins to struggle.
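The switching decision can be sketched in a few lines of Python. This is a deliberately naive heuristic under stated assumptions: the bitrate ladder, the `pick_rendition` function, and the 0.8 safety factor are all hypothetical, and real players tend to use more elaborate logic (buffer levels, smoothing of bandwidth estimates, and so on).

```python
from typing import List, NamedTuple


class Rendition(NamedTuple):
    name: str
    bitrate_bps: int


def pick_rendition(
    ladder: List[Rendition], measured_bps: float, safety: float = 0.8
) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a share of the bandwidth."""
    budget = measured_bps * safety
    affordable = [r for r in ladder if r.bitrate_bps <= budget]
    if not affordable:
        # Nothing fits: fall back to the lowest bitrate rather than stalling.
        return min(ladder, key=lambda r: r.bitrate_bps)
    return max(affordable, key=lambda r: r.bitrate_bps)


# Hypothetical ladder; the bitrates are illustrative, not recommendations.
ladder = [
    Rendition("240p", 400_000),
    Rendition("480p", 1_200_000),
    Rendition("1080p", 5_000_000),
]

on_wifi = pick_rendition(ladder, measured_bps=8_000_000)
on_cellular = pick_rendition(ladder, measured_bps=2_000_000)
print(on_wifi.name, on_cellular.name)
```

Since every rendition is cut into segments along the same timeline, the player can re-run a check like this before each segment request and switch at the next segment boundary.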

Returning to the original problem at hand… so how do we find the duration of a video file? The short answer is that (at least for MP4s) you should be able to just inspect the header values stored in the file’s moov box. However, there is no guarantee that the header metadata is even accurate! Check out my co-worker Phil Cluff’s (aka “other Phil”) talk on this topic at Demuxed -- it turns out you can pretty easily munge/delete/edit boxes within the file even while [certain players] continue to play them normally! Therefore, to derive an authoritative answer for what the duration of a video is, one would actually need to parse and iterate through the entire contents of the file. (And that’s not even taking alternative formats into account!) That being said, in general, the header information should serve as a reasonable signal of the video’s length. For our use case of a simple preliminary check, we made do in stream.new with loading the file as a video element and checking its metadata -- a simple change of just a few lines of code.
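For the curious, here is roughly what "inspecting the header values" can look like outside the browser. This Python sketch is not what stream.new does (that uses a video element). It assumes the ISO base media file format layout, where the moov box contains an mvhd (movie header) box holding a timescale and a duration. The helper names and the toy file are invented for the example, and the value it returns is only as trustworthy as the header itself.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, start: int, end: int) -> Iterator[Tuple[bytes, int, int]]:
    """Yield (type, payload_start, box_end) for each box in data[start:end]."""
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack_from(">I4s", data, pos)
        header_len = 8
        if size == 1 and pos + 16 <= end:  # 64-bit size follows the type
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header_len = 16
        elif size == 0:  # box runs to the end of the range
            size = end - pos
        if size < header_len or pos + size > end:
            break  # malformed or truncated box
        yield box_type, pos + header_len, pos + size
        pos += size


def header_duration_seconds(data: bytes) -> Optional[float]:
    """Return the duration claimed by moov/mvhd, or None if it isn't found."""
    for box_type, body, box_end in iter_boxes(data, 0, len(data)):
        if box_type != b"moov":
            continue
        for child_type, child_body, child_end in iter_boxes(data, body, box_end):
            if child_type != b"mvhd":
                continue
            version = data[child_body]  # 1 byte version, then 3 bytes of flags
            if version == 1:
                # Version 1: 64-bit creation and modification times, 64-bit duration.
                timescale, duration = struct.unpack_from(">IQ", data, child_body + 20)
            else:
                # Version 0: 32-bit creation and modification times, 32-bit duration.
                timescale, duration = struct.unpack_from(">II", data, child_body + 12)
            return duration / timescale if timescale else None
    return None


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a toy box (hypothetical helper, only used for this demo)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Toy mvhd: version 0, zeroed timestamps, timescale 1000, duration 90500 ticks.
# A real mvhd carries more fields after the duration; they are omitted here.
mvhd = make_box(b"mvhd", struct.pack(">IIIII", 0, 0, 0, 1000, 90500))
sample = make_box(b"ftyp", b"isom") + make_box(b"moov", mvhd) + make_box(b"mdat")

MAX_SECONDS = 60 * 60  # the one-hour limit mentioned at the top of the post
duration = header_duration_seconds(sample)
too_long = duration is not None and duration > MAX_SECONDS
print(duration, too_long)
```

This reads the whole file into memory for simplicity. Since box headers carry their own lengths, a more careful version could seek past mdat and read only the moov box.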

Hopefully this was a helpful introduction to the world of video formats! If you’re interested in learning more about how playback works, check out howvideo.works. If you’re itching to just get started with streaming video, go to mux.com. Thanks for reading!
