What is a Kubernetes Tenant?

The Kubernetes multi-tenancy SIG defines a tenant as a group of Kubernetes users that has access to a subset of cluster resources (compute, storage, networking, control plane and API resources), together with resource limits and quotas for the use of those resources. Resource limits and quotas lay out tenant boundaries. These boundaries extend to the control plane, allowing for grouping of the resources owned by the tenant, limited access or visibility to resources outside of the control plane domain, and tenant authentication.

What is multi-tenancy?

A multi-tenant cluster is shared by multiple users and/or workloads which are referred to as "tenants". The operators of multi-tenant clusters must isolate tenants from each other to minimize the damage that a compromised or malicious tenant can do to the cluster and other tenants. Also, cluster resources must be fairly allocated among tenants.

When you plan a multi-tenant architecture you should consider the layers of resource isolation in Kubernetes: cluster, namespace, node, pod, and container. You should also consider the security implications of sharing different types of resources among tenants. For example, scheduling pods from different tenants on the same node could reduce the number of machines needed in the cluster. On the other hand, you might need to prevent certain workloads from being colocated. For example, you might not allow untrusted code from outside of your organization to run on the same node as containers that process sensitive information.

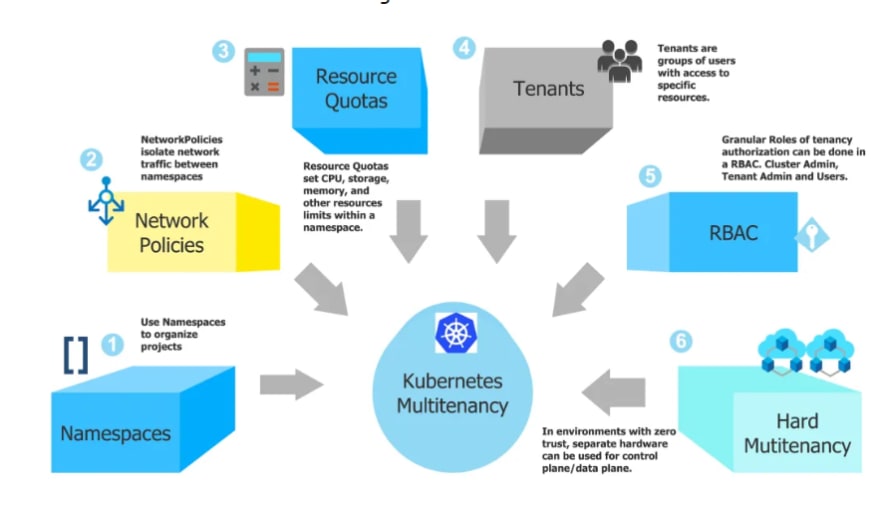
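As a rough sketch of the colocation constraint described above, a pod can be pinned to nodes reserved for one tenant by combining a node selector with a toleration. The namespace `tenant-a`, the `tenant` label and taint key, the pod name, and the image are all hypothetical names chosen for illustration, and the manifest assumes the nodes were prepared beforehand with `kubectl label nodes <node> tenant=tenant-a` and `kubectl taint nodes <node> tenant=tenant-a:NoSchedule`.

```yaml
# Illustrative only: names, labels, and image are assumptions, not from the article.
apiVersion: v1
kind: Pod
metadata:
  name: sensitive-worker
  namespace: tenant-a
spec:
  # Schedule only onto nodes labeled for this tenant.
  nodeSelector:
    tenant: tenant-a
  # Tolerate the taint that keeps other tenants' pods off those nodes.
  tolerations:
    - key: tenant
      operator: Equal
      value: tenant-a
      effect: NoSchedule
  containers:
    - name: app
      image: registry.example.com/tenant-a/app:1.0
```

The taint keeps pods without the toleration away from the reserved nodes, while the node selector keeps this pod from landing anywhere else. Both halves are needed for the separation to hold in both directions.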

Although Kubernetes cannot guarantee perfectly secure isolation between tenants, it does offer features that may be sufficient for specific use cases. You can separate each tenant and their Kubernetes resources into their own namespaces. You can then use policies to enforce tenant isolation. Policies are usually scoped by namespace and can be used to restrict API access, to constrain resource usage, and to restrict what containers are allowed to do.
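To illustrate a namespace-scoped policy that restricts API access, here is a minimal RBAC sketch. It assumes a tenant namespace called `tenant-a` and a user group called `tenant-a-developers`. Both are hypothetical, and the list of resources and verbs is only an example of what a tenant might be granted.

```yaml
# Illustrative only: namespace, group, and role names are assumptions.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tenant-developer
  namespace: tenant-a
rules:
  # Grant access to common workload resources inside this namespace only.
  - apiGroups: ["", "apps"]
    resources: ["pods", "services", "configmaps", "deployments"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tenant-developer-binding
  namespace: tenant-a
subjects:
  # Bind the role to the tenant's user group.
  - kind: Group
    name: tenant-a-developers
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tenant-developer
  apiGroup: rbac.authorization.k8s.io
```

Because a Role and a RoleBinding are namespaced, members of the group get no permissions in other tenants' namespaces or on cluster-scoped resources unless those are granted separately.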

There are two multi-tenancy models in Kubernetes: soft and hard multi-tenancy.

Soft Multi-tenancy

Soft multi-tenancy trusts tenants to be good actors and assumes that they are not malicious. It focuses on minimising accidents and managing the fallout when they occur.

Hard Multi-tenancy

Hard multi-tenancy assumes tenants to be malicious and therefore advocates zero trust between them. Clusters are configured in a way that isolates each tenant's resources and prevents access to other tenants' resources.

Why Multi-Tenancy?

When you start out with Kubernetes, what usually happens, at a very high level, is this: a user interacts with a master through a command-line tool, the API, or a UI. The master runs the API server, the scheduler, and the controller, and it is responsible for orchestrating and controlling the actual cluster. The cluster consists of multiple nodes that you schedule your pods on. These nodes might be physical machines, virtual machines, or whatever the case may be. Usually, you have one logical master that controls one single cluster. That looks relatively straightforward: one user, one cluster.

Now, what happens when you start having multiple users? Let's say your company decides to use Kubernetes for a variety of internal applications. You have one developer over here creating their Kubernetes cluster and another one over there creating theirs, and your poor administrators now have to manage both. This is starting to get a little more interesting: you have two completely separate deployments of Kubernetes, with two completely separate masters and sets of nodes. Then, before you know it, you have a sprawl of clusters, with more and more of them to work with.

Some people call this kube sprawl, and it is a pretty well-understood phenomenon at this point. The question to ask is: how does this scale? Let's first think about how this model scales financially. How much does it cost you to run these clusters? The first thing that might stand out is that you now have to run all of these masters. In general, it is best practice to run not just one master node but three or five, so that you get better high availability: if one of them fails, the others can take over. So each of these masters is normally not a single node but three. This is starting to look a little more expensive. That's number one.

Number two, something we see a lot is customers who say, "I have all of these applications, and some of them run during the day, and they take user traffic." Those applications need a lot of resources during the day but lie idle at night, and you are still paying for all of their nodes.

Then you have some batch applications, maybe processing logs or whatever the case may be, that you can run at any time you want. You could have a model where some applications run during the day and the batch applications run at night, using the same nodes. That seems reasonable. With completely separate clusters on completely separate nodes, you've just made that much harder for yourself. That's one consideration.

Another consideration that people bring up a lot is operational overhead, meaning how hard it is to operate all of these clusters. If you've been in a situation like this before, maybe not even with Kubernetes, you will have noticed that these clusters often look very similar at the beginning. They may run very different applications, but the masters are all at the same version of Kubernetes, and so forth. Over time, though, they tend to drift and become special snowflakes. The more special snowflakes you have, the harder it is to operate them. You get alerts all the time, you don't know whether a problem is tied to a specific version, and you have to do a bunch of work to find out. You end up with tens or hundreds of sets of dashboards to look at to figure out what's going on. This becomes operationally very difficult and actually ends up slowing you down.

Now, with all of that being said, having completely separate clusters is actually a very appropriate model under some circumstances. Lots of people choose it, maybe not for hundreds or thousands of clusters, because it has some advantages, such as being easier to reason about and having very tight security boundaries. But let's say you're in this situation, you have hundreds of clusters, and it's becoming a huge pain. One thing you can consider is what we call multi-tenancy in Kubernetes.

Challenges of Kubernetes multi-tenancy:

Namespace isolation

A basic best practice for handling multiple tenants is to assign each tenant a separate namespace. Kubernetes was designed for this approach. Most of the isolation features that it provides expect you to have a separate namespace for each entity that you want to isolate.

Keep in mind, too, that in some cases it may be desirable to assign multiple namespaces to the same group within your Kubernetes deployment. For example, the same team of developers might need multiple namespaces for hosting different builds of their application.

Adding namespaces is relatively easy (it takes just a simple kubectl create namespace your-namespace command), and it’s always better to have the ability to separate workloads in a granular way using namespaces than to try to cram different workloads with different needs into the same namespace.
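As an alternative to the imperative command, namespaces can also be declared in a manifest, which makes it easy to attach labels that record which tenant owns each one. The sketch below assumes a hypothetical tenant `tenant-a` with separate namespaces for two builds of its application. The label keys `tenant` and `environment` are a convention chosen for this example, not something Kubernetes requires.

```yaml
# Illustrative only: namespace names and label keys are assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a-dev
  labels:
    tenant: tenant-a
    environment: dev
---
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a-staging
  labels:
    tenant: tenant-a
    environment: staging
```

Saved as, say, `namespaces.yaml`, this could be applied with `kubectl apply -f namespaces.yaml`. Labels like these can later be matched by namespace selectors in network policies.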

Block traffic between namespaces

By default, most Kubernetes deployments allow network communication between namespaces. If you need to support multiple tenants, you’ll want to change this in order to add isolation to each namespace.
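One common way to add this isolation is a NetworkPolicy that only admits traffic from pods in the same namespace. The following is a minimal sketch, assuming a hypothetical tenant namespace `tenant-a`. It only takes effect if the cluster's network plugin enforces NetworkPolicy.

```yaml
# Illustrative only: the namespace and policy name are assumptions.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace-only
  namespace: tenant-a
spec:
  # An empty podSelector applies the policy to every pod in the namespace.
  podSelector: {}
  policyTypes:
    - Ingress
  ingress:
    # Allow ingress only from pods in this same namespace.
    - from:
        - podSelector: {}
```

Because the `from` clause contains only a `podSelector` and no `namespaceSelector`, it matches pods in the policy's own namespace, so traffic from other tenants' namespaces is dropped. Egress is left unrestricted in this sketch.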

Resource Quotas

When you want to ensure that all Kubernetes tenants have fair access to the resources that they need, Resource Quotas are the solution to use. As the name of this feature implies, it lets you set quotas on how much CPU, storage, memory, and other resources can be consumed by all pods within a namespace.
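A minimal ResourceQuota sketch for one tenant might look like the following. The namespace `tenant-a`, the quota name, and every number are placeholder assumptions to be sized for the actual workloads.

```yaml
# Illustrative only: namespace, name, and all limits are assumptions.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: tenant-a-quota
  namespace: tenant-a
spec:
  hard:
    # Total CPU and memory that pods in the namespace may request.
    requests.cpu: "4"
    requests.memory: 8Gi
    # Total CPU and memory limits across all pods in the namespace.
    limits.cpu: "8"
    limits.memory: 16Gi
    # Total storage requested by PersistentVolumeClaims.
    requests.storage: 100Gi
    # Maximum number of pods.
    pods: "20"
```

Once a quota covers CPU or memory, pods in that namespace must declare the corresponding requests and limits, or they are rejected. A LimitRange can supply defaults for pods that do not set them.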

Secure your nodes

A final multi-tenancy best practice to keep in mind is the importance of making sure that your master and worker nodes are secure at the level of the host operating system.

Node security doesn’t reinforce namespace isolation in a direct way; however, since an attacker who is able to compromise a node on the operating system level can potentially use that breach to take control of any workloads that depend on the node, node security is important to keep in mind. (It would be important in a single-tenant environment too, but it’s even more important when you have multiple workloads, which makes the security stakes higher.)

Multi-tenancy – all the way

An important aspect of multi-tenancy is having multi-tenancy at a layer above the Kubernetes cluster, so that your DevOps engineers and developers can have one or more clusters belonging to different users or teams of users within your organization. This concept isn’t built into Kubernetes itself. Platform9 supports this by adding a layer of multi-tenancy on top of Kubernetes via the concept of ‘regions’ and ‘tenants’. A region in Platform9 maps to a geographical location. A tenant can belong to multiple regions. A group of users can be given access to one or more tenants. Once in a tenant, the group of users can create one or more clusters that will be isolated and accessible only to the users within that tenant. This provides separation of concerns across different teams and departments.

Recommended best practices for Multi-tenant Kubernetes clusters:

1) Limit Tenant’s use of Shared Resources

2) Enable Built-in Admission Controllers

3) Isolate Tenant Namespaces using Network Policy

4) Enable RBAC

5) Create Cluster Personas

6) Map Kubernetes Namespaces to Tenants

7) Categorize Namespaces

8) Limit Tenant’s Access to non-namespaced Resources

9) Limit Tenant’s Access to Resources from other Tenants

10) Limit Tenant’s Access to Multi-tenancy Resources

11) Prevent use of HostPath Volumes

12) Run Multi-tenancy End-to-End Validation Tests
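
As one possible illustration of items 2 and 11, the built-in Pod Security admission controller can be switched on per namespace with labels. The sketch below assumes a hypothetical tenant namespace `tenant-a`. Enforcing the `baseline` Pod Security Standard rejects pods that use hostPath volumes, among other restrictions, while the `warn` label only surfaces warnings for pods that would fail the stricter `restricted` profile.

```yaml
# Illustrative only: the namespace name is an assumption.
apiVersion: v1
kind: Namespace
metadata:
  name: tenant-a
  labels:
    # Reject pods that violate the baseline profile (includes hostPath volumes).
    pod-security.kubernetes.io/enforce: baseline
    # Warn, but do not reject, on violations of the restricted profile.
    pod-security.kubernetes.io/warn: restricted
```

This covers only a small part of the list. The other items, such as RBAC, quotas, and network policies, need their own resources.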
