There’s a persistent belief among web security people that cross-site scripting (XSS) is a “game over” event for defence: there is no effective way to recover if an attacker can inject code into your site. Brian Campbell refers to this as “XSS Nihilism”, which is a great description. But is this bleak assessment actually true? For the most part yes, but in this post I want to talk about a faint glimmer on the horizon that might just be a ray of sunshine after all.

Why is XSS so catastrophic anyway?

A naïve view of the dangers of XSS is that the attacker primarily wants to steal your authentication tokens or cookies so that they can use them from their own machine to perform malicious actions in their own time. This used to be a very common attack pattern, but it has a lot of drawbacks for the attacker:

- It is easily defeated by simple measures such as using HttpOnly cookies, which stop the attacker’s script being able to steal your session cookie in the first place.

- If the web app in question is only accessible from a corporate network or VPN, then the attacker won’t be able to connect to it from their own machine even if they have your cookie.

- By using their own machine (or a compromised machine they have access to already) they make it easier to detect the attack and block their access. There will be clues given away by the change of IP address, geo-location, browser version, and so on. This is by no means guaranteed, but it certainly increases the risk of the attacker being spotted.
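
As a rough illustration of the first point, here is a minimal sketch of issuing a session cookie with the HttpOnly flag, using only Python's standard library. The cookie name `session` and the helper `build_session_cookie_header` are assumptions made for this example, not part of any particular framework. The flag only stops scripts from reading the cookie; it does nothing to stop a script from sending requests that carry it.

```python
import secrets
from http.cookies import SimpleCookie


def build_session_cookie_header(session_id: str) -> str:
    """Return the value of a Set-Cookie header for a hardened session cookie.

    The cookie name "session" is a hypothetical choice for this sketch.
    """
    cookie = SimpleCookie()
    cookie["session"] = session_id
    # HttpOnly: the browser will not expose the cookie to document.cookie,
    # so an injected script cannot read it and send it elsewhere.
    cookie["session"]["httponly"] = True
    # Secure: only send the cookie over HTTPS.
    cookie["session"]["secure"] = True
    # SameSite: limits cross-site sending (helps with CSRF, not with XSS).
    cookie["session"]["samesite"] = "Strict"
    cookie["session"]["path"] = "/"
    return cookie["session"].OutputString()


# Example: a fresh, unguessable session identifier.
header_value = build_session_cookie_header(secrets.token_urlsafe(32))
print("Set-Cookie:", header_value)
```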

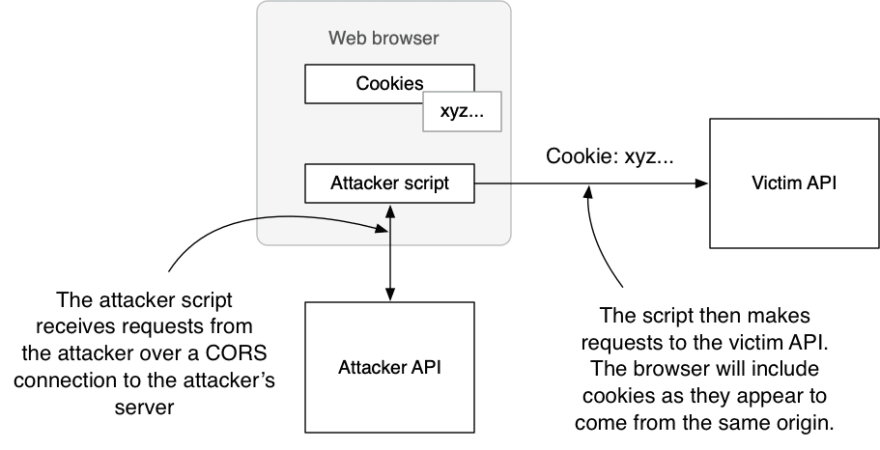

Attackers are well aware of these issues and have developed a solution to all of them. Rather than stealing your session cookie and using it from their own machine, they will instead use the XSS attack to proxy their requests through your web browser. This is similar to how a Cross-Site Request Forgery (CSRF) attack occurs, but with XSS the requests come from the same site (same origin) as legitimate requests and so almost all CSRF defences can be bypassed, as shown in the accompanying image from chapter 5 of my book. SameSite cookies do not protect against this attack, nor do typical anti-CSRF tokens, because the attacker’s script, running in the same origin as the legitimate code, can extract these from the DOM or local storage.

This technique of proxying requests through the victim’s browser can even defeat more advanced protection measures such as the in-development DPoP method for securing OAuth tokens against token theft or misuse. There are various advanced tricks you can try, such as using a Web Worker to control access to tokens and keys, but that is only a partial defence as handily summarised by Philippe De Ryck in this post to the OAuth mailing list.

This is why web security experts are often so gloomy about XSS and describe it as Game Over. This is especially distressing given that XSS is still one of the most prevalent vulnerabilities in web applications, with OWASP claiming that around two-thirds of applications have an XSS vulnerability. Although better JavaScript frameworks and technologies such as CSP and Trusted Types should reduce this over time, it seems likely that we’ll have to deal with XSS for a long time to come, so it would be nice if things weren’t quite so bleak.

One bad solution

Thankfully, there are some possible solutions. One quite poor solution would be to simply confirm each request with the user before allowing it to proceed. After all, if we can’t distinguish between legitimate actions performed by a user and malicious ones injected by the attacker, why not simply ask the user? “Do you really want to email all your photos to hacker@example.com?” This assumes that the application has access to some kind of trusted UI which it can use to confirm requests with the user, and which can’t be interfered with by the attacker. An example would be to send a push authorization request to an app on the user’s phone, asking them to confirm each request.

This kind of solution can work for occasional high-value transactions, and is often used in banking for exactly that use-case. But it’s not a general solution that could be used for every request made by your app. Users would quickly get tired of manually approving requests every time they click on a link or add an item to their shopping cart. Anyone who’s ever installed Little Snitch will know this feeling. Also, many legitimate requests made by an app are not made in direct response to a user action or are not meaningful to users, so it would be hard for them to even know whether it was something they wanted to do or not.

So this specific solution is not very practical, but it does illustrate that solutions are possible: XSS is not necessarily Game Over. But it probably is within the mental models we’re used to using to think about web security.

A ray of sunlight

If we can’t ask the user to confirm every request, is there another way that we can distinguish between legitimate and malicious requests that can’t be easily defeated? The key is to notice that proxying requests through a web browser is an example of a Confused Deputy attack, just like CSRF. The attacker instructs the web browser (or JavaScript app) to make requests of their choosing, and the browser/app happily adds the user’s authority to those requests in the form of a cookie, access token, DPoP proof, or whatever. This type of attack can only occur because an attacker is able to construct a valid request independently of having the authority to perform it, and can then trick another person/process (the “deputy”) to perform it for them.

A systematic solution to confused deputy problems is provided by capability security, which I discuss in some detail in chapter 9 of my book and also in an older post on this blog. A fundamental principle of capability security is to combine designation with authority: it shouldn’t be possible to even name a resource that you don’t have access to, much less craft a legitimate request to access it. For a web application, the idea is that rather than having a single cookie or token that provides access to everything, you would instead have lots of individual tokens that provide access to specific objects, such as one particular photo, and that you encode these individual tokens directly into the URLs that are used to access these objects. The only way to have a legitimate URL is to be given one, and it’s impossible for any user to create one from scratch. (In the book I go into more detail about how to make this secure and convenient, which I won’t repeat here.)
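
To make the idea concrete, here is a minimal sketch of one way capability URLs could be issued and checked on the server. It is an illustration under stated assumptions rather than the scheme from the book: the `CapabilityStore` class, the `cap` query parameter, the in-memory dictionary, and the `photos.example` host are all hypothetical.

```python
import secrets
from typing import Iterable
from urllib.parse import parse_qs, urlparse


class CapabilityStore:
    """Issues unguessable per-resource URLs and checks them on use.

    Hypothetical sketch: a real service would persist grants, expire them,
    and avoid leaking URLs through logs or Referer headers.
    """

    def __init__(self, base_url: str) -> None:
        self._base_url = base_url.rstrip("/")
        self._grants = {}  # token -> (resource path, allowed permissions)

    def issue(self, resource_path: str, permissions: Iterable[str]) -> str:
        # 32 random bytes: the URL cannot be guessed or built from scratch.
        token = secrets.token_urlsafe(32)
        self._grants[token] = (resource_path, frozenset(permissions))
        return f"{self._base_url}{resource_path}?cap={token}"

    def check(self, resource_path: str, token: str, permission: str) -> bool:
        grant = self._grants.get(token)
        if grant is None:
            return False  # unknown token: no authority at all
        granted_path, granted_permissions = grant
        # The token is only good for the one resource it was issued for.
        return granted_path == resource_path and permission in granted_permissions


store = CapabilityStore("https://photos.example")
photo_url = store.issue("/photos/123", {"read"})

# The server later extracts the token from the incoming request URL.
parsed = urlparse(photo_url)
cap_token = parse_qs(parsed.query)["cap"][0]

print(store.check(parsed.path, cap_token, "read"))    # True
print(store.check(parsed.path, cap_token, "delete"))  # False
print(store.check("/photos/999", cap_token, "read"))  # False
```

The point of the design is that the token both names the photo and authorises access to it, so there is no separate ambient credential for a confused deputy to attach to a request of the attacker's choosing.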

If access to a website was driven by capability URLs rather than cookies or all-powerful access tokens, then the attacker’s job after exploiting an XSS vulnerability is much harder. They cannot simply proxy requests through the victim’s web browser because, without access to any capability URLs, they cannot even begin to create those requests. Instead they must try to steal capability URLs from the app or intercept them in use, and hope that the ones they capture correspond to objects they want to access or manipulate. By storing capability URLs inside closures or using other security boundaries, an app can make it very hard for an attacker to intercept these URLs. I also believe that browser vendors could provide further protection by supporting a special URL scheme for capability URLs, but I’ll write about that another time.

I believe that such an approach can be made very secure against XSS attacks, while also being immune to CSRF attacks. But it’s very different to how most web apps are written today, and would require a fundamental change in security architecture. I have some ideas about how future versions of OAuth could incorporate some of these ideas, and with a bit of work you could retrofit it using techniques such as macaroons to create many individual tokens from a single all-powerful one (and then throw that one away). I believe that the security advantages are worth it, and I further believe that a capability-security model is the only viable long-term approach for securing the web. The same-origin policy has never been very effective, as the continued impact of XSS shows, and things like CSP and SameSite cookies are at best a poorly-fitting sticking plaster. At some point we need to rip it off and adopt a more systematic approach.
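
The macaroon-style retrofit mentioned above can be sketched roughly as follows. This is a deliberately simplified illustration of the chained-HMAC idea behind macaroons, not a real macaroon library or wire format: the `Token` class, the `mint`, `restrict`, and `verify` helpers, and the `resource = ...` caveat syntax are all assumptions made for this example.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Callable, Tuple


def _mac(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


@dataclass(frozen=True)
class Token:
    identifier: str
    caveats: Tuple[str, ...]
    signature: bytes


def mint(root_key: bytes, identifier: str) -> Token:
    """Create the broad, unrestricted token (server side only)."""
    return Token(identifier, (), _mac(root_key, identifier))


def restrict(token: Token, caveat: str) -> Token:
    """Derive a narrower token. Needs only the token, not the root key."""
    new_signature = _mac(token.signature, caveat)
    return Token(token.identifier, token.caveats + (caveat,), new_signature)


def verify(root_key: bytes, token: Token,
           is_satisfied: Callable[[str], bool]) -> bool:
    """Recompute the HMAC chain, then check every caveat against the request."""
    expected = _mac(root_key, token.identifier)
    for caveat in token.caveats:
        expected = _mac(expected, caveat)
    if not hmac.compare_digest(expected, token.signature):
        return False
    return all(is_satisfied(caveat) for caveat in token.caveats)


def request_for(path: str) -> Callable[[str], bool]:
    # Hypothetical caveat checker: the only caveat understood is a resource pin.
    return lambda caveat: caveat == f"resource = {path}"


root_key = secrets.token_bytes(32)
broad = mint(root_key, "session-42")
photo_token = restrict(broad, "resource = /photos/123")
del broad  # keep only the narrow token; the broad one is thrown away

print(verify(root_key, photo_token, request_for("/photos/123")))  # True
print(verify(root_key, photo_token, request_for("/photos/999")))  # False
```

Because each signature is keyed by the previous one, someone holding only `photo_token` cannot strip the caveat to recover the broad token, which is what makes discarding the original meaningful.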

Insert coin to continue playing.
