In this article, We are going to be covering Amazon SageMaker, and we will start this by mainly answering three questions:

What is SageMaker?

Why use SageMaker?

How does SageMaker work?

What is SageMaker?

AWS (or Amazon) SageMaker is a fully managed service that provides the ability to quickly build, train, tune, deploy, and manage large-scale machine learning (ML) models. SageMaker is a combination of two valuable tools. The first tool is a managed jupyter notebook instance(yes, the same Jupyter notebook you probably have locally installed on your system) except instead of running in a workspace or on your personal computer. Instead, it runs on a virtual machine hosted by Amazon, while the second tool is an API, which simplifies some of the more computationally difficult tasks that we might need to perform, like when we want to train and deploy a machine learning model.

Why Use SageMaker?

The simple answer is that it makes a lot of the machine learning tasks we need to perform much easier For instance, in a machine learning workflow like below:

The notebook can be used to explore and process the data in the highlighted box below:

while the API can help simplify the modeling and deploying steps as highlighted:

How does SageMaker work?

Working in a managed notebook has the added advantage of having access to the SageMaker API. The SageMaker API itself can be thought of as a collection of tools dealing with the training and inference processes. The training process is where the computational task is constructed. Generally, this task is meant to fit a machine learning model to some data, then this task is executed on a virtual machine. The resulting model, such as the tree is constructed in a random tree model or the layers in a neural network, is then saved to a file, and this saved data is called the model artifacts.

The inference process is similar to the training process. First, a computational task is constructed to perform inference, then this task is executed on a virtual machine. In this case, however, the virtual machine waits for us to send it some data. When we do, it takes that data, along with the model artifacts created during the training process, and performs inference, returning the results.

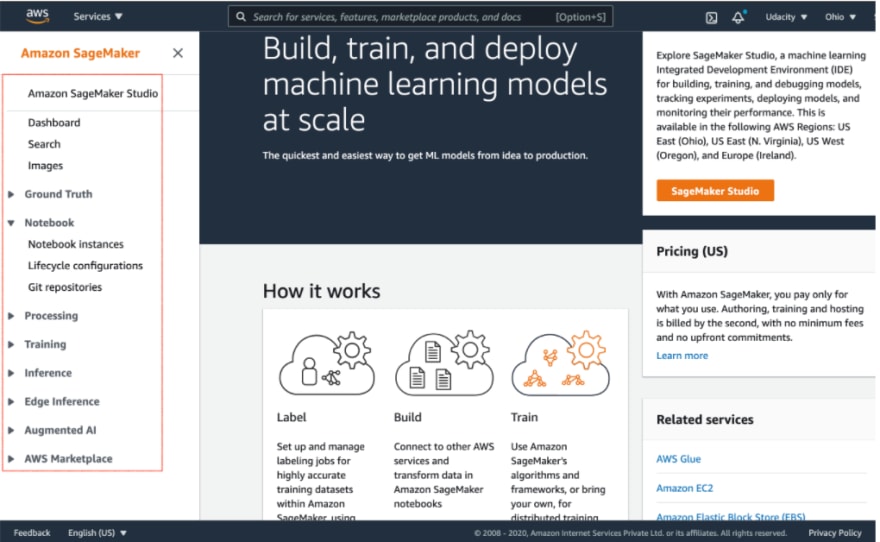

So, in essence, Amazon Sagemaker provides the following tools:

Ground Truth - To label the jobs, datasets, and workforces

Notebook - To create Jupyter notebook instances, configure the lifecycle of the notebooks, and attache Git repositories

Training - To choose an ML algorithm, define the training jobs, and tune the hyperparameter

Inference - To compile and configure the trained models, and endpoints for deployments

The snapshot of the Sagemaker Dashboard below shows the tools mentioned above.

Notice: The AWS UI shown above is subject to change regularly. We advise students to refer to AWS documentation for the above processes.

What makes Amazon SageMaker a "fully managed" service?

SageMaker helps to reduce the complexity of building, training and deploying your ML models by offering all these steps on a single platform. In addition, SageMaker supports building the ML models with modularity, which means you can reuse a model you have already built earlier in other projects.

SageMaker Instances

SageMaker instances are the dedicated VMs optimized to fit different machine learning (ML) use cases. An instance type is characterized by CPU, memory, GPU, GPU memory, and networking capacity. As a result, the supported instance types, names, and pricing in SageMaker are different than that of EC2.

This has been AWS SageMaker in a nutshell, and I hope this aided your understanding and answered any question you had about SageMaker.

Top comments (0)