Of course, there is never a single truth in software development. We usually come up with multiple options and try to determine which one best suits the requirements we have.

For example, a “client” and a “server” often need to exchange information, but the information the client needs may not be ready on the server yet.

Sample Scenario

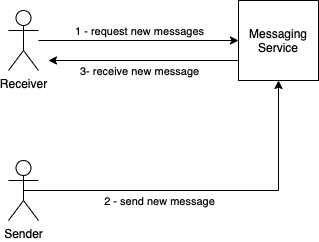

What does such a scenario look like? The simplest example I can give is a messaging application. Let’s call the person who sends a message “Jake 👨‍💼 (Client1)” and the person who receives it “Emily 👩 (Client2)”. Jake and Emily both have their phones in hand and are messaging each other. When Jake sends a message through the messaging app, Emily should receive it in near real time. Likewise, Jake should receive the messages Emily sends in near real time. Imagine that you are designing this messaging application and have rolled up your sleeves to meet this need. What solutions could we come up with?

Option 1: Jake and Emily send requests to our messaging app (server) at regular intervals (for example, every second), asking “Are there any new messages?”. Each time they ask, the server returns the new message if there is one; otherwise it responds with “no message yet”. This cycle of asking and answering repeats at every interval, whether or not a new message has arrived.
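A minimal sketch of Option 1 from the client’s side, assuming a hypothetical `fetchNewMessages()` function that performs one “Are there any new messages?” request and resolves to an array of messages (empty when there is nothing new). The name `startShortPolling` is also illustrative, not part of the original sample.

```javascript
// Option 1 (short polling) from the client's point of view.
// `fetchNewMessages` is a hypothetical async function that asks the server
// once and resolves to an array of messages (empty if there are none).
function startShortPolling(fetchNewMessages, onMessages, intervalMs = 1000) {
  let stopped = false;
  let timer = null;

  async function tick() {
    try {
      const messages = await fetchNewMessages();
      // Most ticks end with an empty array: a round trip that found nothing.
      if (!stopped && messages.length > 0) onMessages(messages);
    } catch (err) {
      // A failed request is simply retried on the next tick.
    }
    // Schedule the next question only after this one has been answered.
    if (!stopped) timer = setTimeout(tick, intervalMs);
  }

  tick();
  // The returned function stops the polling loop.
  return () => {
    stopped = true;
    clearTimeout(timer);
  };
}
```

Scheduling each request with `setTimeout` after the previous answer, rather than with `setInterval`, keeps slow responses from piling up on top of each other.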

Option 2: Jake and Emily each ask our messaging app, “Send me a response whenever there is a new message.” Our application holds both requests open. Whenever Jake or Emily sends a message, the app answers the other person’s pending request: “Hey, here is your new message.”

If we go with the first option, the server needs to keep track of which messages are pending delivery, because it can only hand a message over when the receiver next asks for it. If we want real-time messaging, clients have to ask the server for new messages very often. On top of that, we spend resources unnecessarily, since most of those requests come back without a new message.
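To make the first point concrete: with short polling, the server has to buffer each message until the receiver polls for it. Below is a minimal in-memory sketch; the `PendingInbox` class and its `push`/`drain` methods are hypothetical, and a real application would persist messages rather than keep them in a `Map`.

```javascript
// Server-side buffer for Option 1: messages wait here until the receiver polls.
class PendingInbox {
  constructor() {
    this.pending = new Map(); // receiver name -> array of undelivered messages
  }

  // Called when someone sends a message.
  push(to, from, message) {
    if (!this.pending.has(to)) this.pending.set(to, []);
    this.pending.get(to).push({ from, message });
  }

  // Called on every poll: returns everything waiting for `user` and clears it.
  drain(user) {
    const messages = this.pending.get(user) ?? [];
    this.pending.delete(user);
    return messages;
  }
}

const inbox = new PendingInbox();
console.log(inbox.drain('Emily')); // [] ("no message yet")
inbox.push('Emily', 'Jake', 'Hi Emily!');
console.log(inbox.drain('Emily')); // [ { from: 'Jake', message: 'Hi Emily!' } ]
console.log(inbox.drain('Emily')); // [] (already delivered)
```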

If we go with the second option, that is, if active users keep an open connection to the server, the server can inform clients in real time as soon as a new message arrives.
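On the server, the second option boils down to parking each request until there is something to answer it with. A minimal sketch, assuming at most one waiting request per user; the `WaitingRoom` class is hypothetical, and each promise returned by `wait()` stands in for an HTTP response that has not been sent yet.

```javascript
// Option 2: the server keeps each client's request "on hold".
class WaitingRoom {
  constructor() {
    this.waiting = new Map(); // user name -> resolve function of the held request
  }

  // A client asks "send me a response whenever there is a new message".
  wait(user) {
    return new Promise((resolve) => this.waiting.set(user, resolve));
  }

  // A message arrives: answer the receiver's held request, if there is one.
  deliver(to, from, message) {
    const resolve = this.waiting.get(to);
    if (!resolve) return false; // receiver is not currently waiting
    this.waiting.delete(to);
    resolve({ from, message });
    return true;
  }
}

const room = new WaitingRoom();
room.wait('Jake').then(({ from, message }) => console.log(`Jake got "${message}" from ${from}`));
room.deliver('Jake', 'Emily', 'Hi Jake!'); // resolves Jake's held request
```

When `deliver` returns `false`, the receiver is offline or between requests, so a real application would also need to store the message until the receiver asks again.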

Comparing the two options, the second one is clearly the better choice here, right? We call the first solution the “request-response (pull)” model (often called short polling) and the second one “long polling”.

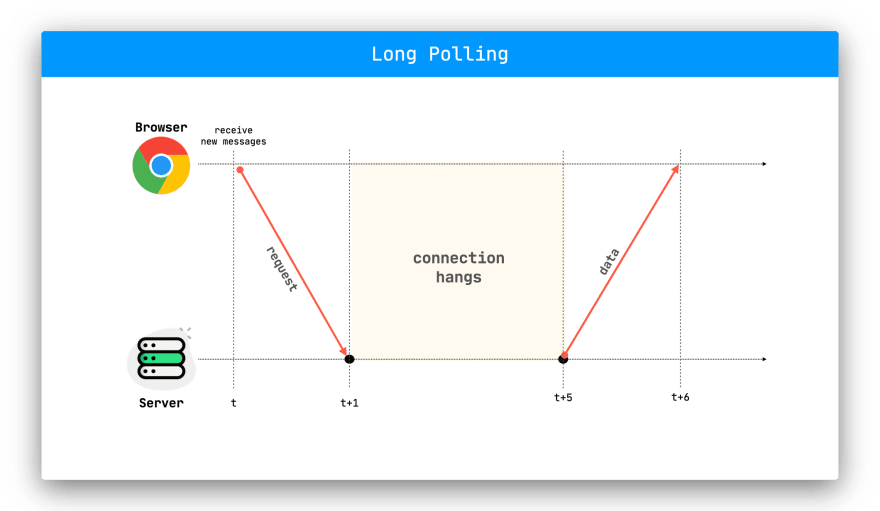

Long Polling

Long polling is the simplest way to emulate a persistent connection with the server without a dedicated protocol such as WebSocket. It is still plain HTTP; the server simply keeps the connection open until it has something to send.

Let’s walk through the diagram above. Say Jake wants to be notified when a new message arrives from Emily, and the current time is “t”. The flow is as follows:

Jake sends the “Request New Messages” request to the server.

The server receives Jake’s request but does not respond right away. Instead, the server holds the request open until Emily sends a message.

If the connection is lost for any reason, Jake’s client automatically sends the same request again.

Emily sends a message. As soon as the server learns about this message, it responds to Jake’s pending request. Jake then sends a new request so that he is notified of the next message as well.
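Jake’s side of this flow can be sketched as a loop: send the request, wait for the held response, handle it, and immediately ask again; on a lost connection, pause briefly and repeat the same request. This is a minimal sketch that assumes a hypothetical `waitForMessage()` function which performs one “Request New Messages” call and resolves only when the server answers; `startLongPolling` and `retryDelayMs` are likewise illustrative names.

```javascript
// Long polling from Jake's side. `waitForMessage` is a hypothetical async
// function that sends one request and resolves when the server answers it.
function startLongPolling(waitForMessage, onMessage, retryDelayMs = 1000) {
  let stopped = false;

  async function loop() {
    while (!stopped) {
      let message;
      try {
        // The request hangs here until the server has a message for us.
        message = await waitForMessage();
      } catch (err) {
        // Connection lost: pause briefly, then repeat the same request.
        await new Promise((resolve) => setTimeout(resolve, retryDelayMs));
        continue;
      }
      if (!stopped) onMessage(message);
      // No delay here: send the next request right away to keep listening.
    }
  }

  loop();
  // The returned function ends the loop after the current request settles.
  return () => {
    stopped = true;
  };
}
```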

Real-Life Example with NodeJS

In this section, I want to build a mini messaging application that uses long polling. To keep the sample simple, let’s assume there is only one functional requirement: clients should be able to send and receive messages.

For this, two simple endpoints are enough:

Send Message: POST /new-message

Receive Message: GET /messages

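```javascript
const express = require('express');
const events = require('events');
const moment = require('moment');
const bodyParser = require('body-parser');

const app = express();
const port = 3000;

// Every waiting GET /messages request subscribes to this emitter.
const messageEventEmitter = new events.EventEmitter();

app.use(bodyParser.urlencoded({ extended: false }));
app.use(bodyParser.json());

// Receive Message: hold the request open until a new message is emitted.
app.get('/messages', (req, res) => {
  console.log(`${moment()} - Waiting for new message...`);

  const onNewMessage = (from, message) => {
    console.log(`${moment()} - Message Received - from: ${from} - message: ${message}`);
    res.send({ ok: true, from, message });
  };
  messageEventEmitter.once('newMessage', onNewMessage);

  // If the client disconnects before a message arrives, drop its listener so it
  // does not leak and no response is written to a closed connection.
  res.on('close', () => {
    messageEventEmitter.removeListener('newMessage', onNewMessage);
  });
});

// Send Message: answer every request that is currently waiting.
app.post('/new-message', (req, res) => {
  const { from, message } = req.body;
  console.log(`${moment()} - New Message - from: ${from} - message: ${message}`);

  messageEventEmitter.emit('newMessage', from, message);
  res.send({ ok: true, description: 'Message Sent!' });
});

app.listen(port, () => {
  console.log(`Server listening at port ${port}`);
});
```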
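Because the GET handler registers its listener with `messageEventEmitter.once`, each waiting request is answered by at most one message, and a message emitted while nobody is waiting is simply dropped: the sample keeps no message history. This standalone snippet, using only Node’s built-in `events` module (the `delivered` array is illustrative), shows both effects:

```javascript
const { EventEmitter } = require('events');

const emitter = new EventEmitter();
const delivered = [];

// A "waiting request": registered with once(), like GET /messages above.
emitter.once('newMessage', (from, message) => delivered.push(`${from}: ${message}`));

emitter.emit('newMessage', 'Emily', 'first'); // answers the waiting request
emitter.emit('newMessage', 'Emily', 'second'); // no listener left, so it is lost

console.log(delivered); // [ 'Emily: first' ]
console.log(emitter.listenerCount('newMessage')); // 0
```

A production app would typically store messages per receiver so that a client can pick up anything it missed when it reconnects.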

Let’s test the code: Jake waits for new messages with GET /messages while Emily sends one with POST /new-message.
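One way to reproduce this test from the command line is a small script that plays both roles. This is a sketch that assumes the server above is running on port 3000 and Node.js 18 or later (for the built-in `fetch`); the `demo` function, the 100 ms head start, and the message text are illustrative choices.

```javascript
// Plays both Jake and Emily against the server above (assumed on port 3000).
// Requires Node.js 18+ for the built-in fetch API.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function demo(baseUrl = 'http://localhost:3000') {
  const [received, sent] = await Promise.all([
    // Jake: this request stays pending until a message arrives.
    fetch(`${baseUrl}/messages`).then((res) => res.json()),
    // Emily: wait a moment so Jake's request is already held, then send.
    sleep(100).then(() =>
      fetch(`${baseUrl}/new-message`, {
        method: 'POST',
        headers: { 'Content-Type': 'application/json' },
        body: JSON.stringify({ from: 'Emily', message: 'Hi Jake!' }),
      }).then((res) => res.json())
    ),
  ]);
  return { received, sent };
}

demo()
  .then(({ received, sent }) => {
    console.log('Emily got:', sent);
    console.log('Jake got:', received);
  })
  .catch((err) => console.error('Is the server running on port 3000?', err.message));
```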

Jake does not receive a response from the server while he is waiting for messages. As soon as Emily sends a message, Jake receives the response, so the message is delivered immediately instead of waiting for the next polling interval.
