Do you remember when computers were fun to explore? Perhaps you've always thought computers were fun to explore, but there was a time before the In...


Very good observation!

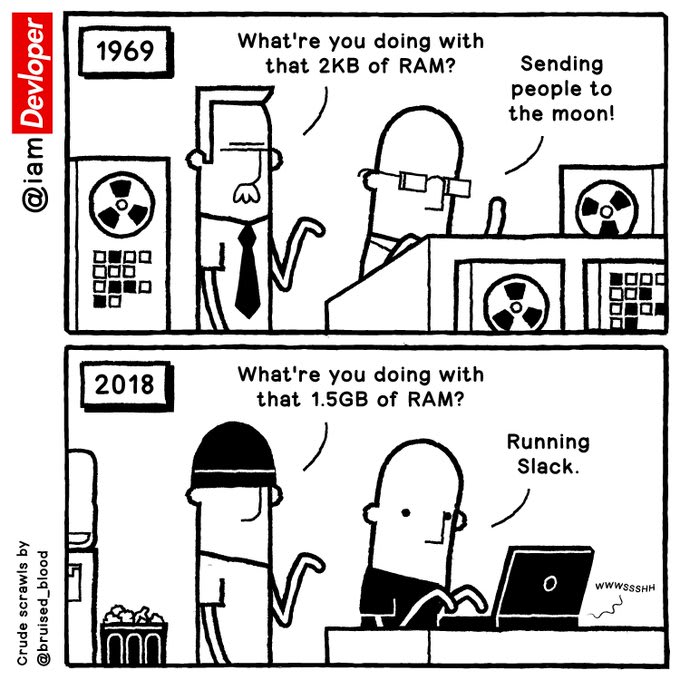

I think a large part of the problem is that we no longer care about optimization. Too often, we do less in a program that takes up 2 GB of RAM than some programs did in under 128 MB. In part, it may be our desire for whiz-bang interfaces (beauty over function); part is our irresponsible coding practices.

Shoot, some laptops and desktops surely have more processing power than the entire render farm at Industrial Light & Magic (Lucasfilm) in the late 80s. So, why do we accomplish far less with our modern machines?

Case in point, I refuse to carry a smartphone on the basis of countless technical, ethical (internet privacy), and personal (productivity) objections. A few days ago, I found my old Palm Tungsten T in a drawer...and it still works like new! It has no internet access, the interface is dated, and I can't play music on it, and yet, I love it! I haven't needed to charge it in three days of continual use, I can sync it to my Ubuntu laptop with `pilot-link` and `jpilot`, and it has half-decent handwriting recognition (anyone remember Graffiti?). It makes a great calendar, address book, note pad, voice recorder, and to-do list.

So, how is it that this dinosaur is much faster, with longer battery life, than even the fanciest modern smartphone? It does a few things very well. It was never intended to be everything to everyone ("an app for that") - it was intended to be a pocket PIM for busy business people.

What would happen if we traded the fireworks for functionality, and the modern, lazy coding shortcuts for good old fashioned optimized programming? I think we'd find our modern hardware could do far more than we ever imagined.

As someone who started computing with a C64, the optimization issue rings very true, and sometimes drives me crazy. Also, we have way too many abstractions, so doing simple things is not very easy anymore. This abstraction is a result of software reuse, a collaborative mindset, and commercialization, which demands speed (more work done in less time) and results.

Because of a single sentence ("Hardware is cheap, people are expensive"), I refused to learn Python for years. I still write all my applications in C++. Only toys are written in Python, and they are ported to C++ after they've matured.

With this latest Electron craze, we are running whole browser instances to highlight text in various colors. Atom starts up with 660 MB of RAM. Only Eclipse tops it at 720 MB, and that is a proper IDE, so it's acceptable in my book. When written correctly, a 2 MB application with 170 MB of RAM usage can perform very hard and accurate scientific calculations while bringing whole CPUs to their knees and using them effectively.

BTW, PalmOS and Palm devices were very, very powerful for their era. I used a Handspring Neo and PalmOne LifeDrive for a very long time.

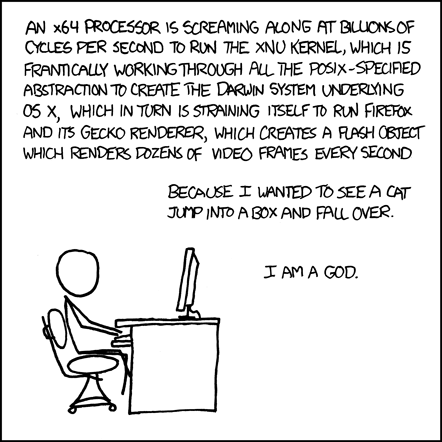

I will leave two comics here. One is an "obligatory XKCD".

Ha! Very true.

Now, with all that said, I am in fact a Python developer as well. Performance isn't entirely lost in that language, especially if you're running the PyPy interpreter (instead of the standard Python interpreter, which is much slower). There are many abstractions the language does well, including immutable objects, which make for serious performance advantages. The thing I like about Python is that you can come to understand what's going on under the hood, and make wise performance decisions as a result.

In my opinion, C, C++, and Python all have their places in responsible development, and I wouldn't regard any of them as only good for "toys". You just need to know when to use which, and how to get the most out of them.

Of course, horses for courses. I'm working on scientific, high performance computing both as a researcher and system administrator.

Python has improved a lot in the ML and DL areas and is very performant with underlying platform-native libraries and PyPy; you're right in that regard. Also, it's joyful to write Python, because it really does some tasks well in a few lines and with great flexibility. It's a very productive language.

However, I generally work on very big matrices filled with double precision floating point numbers and perform operations on these, and C++ is much faster in these cases, at least in my domain.

My relationship with Python is much better now, but if I'm coding something serious I'd use Python for prototyping. If it's a small utility or something, I'd directly implement it in Python. However, I'm very resource conscious while developing applications, so I'm still not very comfortable leaving an interpreted language running 24/7 as a service in the background.

Also, I'd like to acknowledge that modern C++ isn't the easiest tool to work with. It's like a double-edged blade with no handle. :D

P.S.: Yes, calling Python a language for "programming toys" was wrong and childish. Maybe I should have been clearer on that part, sorry.

Edit: Writing comments fast while being tired is not a good idea. Corrected all the half sentences, polished the structure. IOW refactored the post completely.

Great clarification. I think we're very much on the same page regarding C++ and Python, and their respective uses. I've been the lead on a game engine project for a while, and we use C++ for that, not Python. We need the additional control.

However, GUI is almost always a lot friendlier in Python than in C++, so I tend towards that for standalone application development.

Incidentally, C++11, C++14, and C++17 have added a number of "handles" to that double-edged blade. For example, `std::unique_ptr` and `std::shared_ptr` handle a lot of memory safety (and deallocation) challenges quite deftly.

P.S. You'd probably find some stuff interesting in PawLIB, especially the development version (v1.1). It attempts to merge performance with some sweeter syntax.
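For anyone who hasn't used them, here is a minimal sketch of how `std::unique_ptr` and `std::shared_ptr` take over deallocation. The `Texture` type, its `live_count` counter, and the two helper functions are hypothetical names made up for this illustration, not part of any library mentioned in this thread. The smart pointers themselves have been in the standard library since C++11; `std::make_unique` arrived in C++14.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical resource type (an assumption for illustration only).
// It counts live instances so that deallocation can be observed.
struct Texture {
    static int live_count;
    std::string name;

    explicit Texture(std::string n) : name(std::move(n)) { ++live_count; }
    ~Texture() { --live_count; }

    // Non-copyable, so ownership can only be transferred or shared via pointers.
    Texture(const Texture&) = delete;
    Texture& operator=(const Texture&) = delete;
};
int Texture::live_count = 0;

// Sole ownership: the Texture is destroyed automatically when the
// unique_ptr leaves its scope. No explicit delete is written anywhere.
int live_inside_unique_scope() {
    int seen = 0;
    {
        auto tex = std::make_unique<Texture>("grass");  // requires C++14
        seen = Texture::live_count;                     // one instance alive here
    }                                                   // tex destroyed here
    return seen;
}

// Shared ownership: the Texture stays alive until the last shared_ptr
// referring to it is destroyed.
long owners_after_sharing() {
    auto tex = std::make_shared<Texture>("stone");
    std::vector<std::shared_ptr<Texture>> users;
    users.push_back(tex);    // second owner
    users.push_back(tex);    // third owner
    return tex.use_count();  // tex itself plus the two copies in users
}
```

In both helpers `Texture::live_count` is back to zero once the function returns, without a single manual `delete`. Whether the reference counting in `std::shared_ptr` is acceptable in a hot path is something you'd have to measure in your own code.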

Thanks, it seems so. GUI was never a strong part of C++ programming, but I intend to learn Qt in both Python and C++. I have some small projects in mind. Also, I really want to be able to use ncurses in C++.

I have a big C++11 code base I'm working on (sorry, can't give many details about it, but it's a scientific code), and I may migrate to the new pointers, but I must do extensive performance testing first. Some parts of the code are very, very sensitive and very hot, so fiddling with these parts affects just about everything.

Thanks for the pointer to PawLIB. It really looks interesting; however, it doesn't address any of my bottlenecks for now. If I find anything limiting, I'll give its data structures a try. I'm using Eigen for matrices and algebra, Catch for testing, and Easylogging++ for logging. They have addressed what I need so far.

"...What would happen if we traded the fireworks for functionality, and the modern, lazy coding shortcuts for good old fashioned optimized programming? I think we'd find our modern hardware could do far more than we ever imagined...."

Preach it brother!

You'd be amazed what well-written (but not optimized to death) software can do on relatively recent hardware. When you optimize, things can become borderline insane.

Totally agree. I found myself thinking about my smartphone battery life the other day and how I have upgraded over the years, yet the battery life is basically the same. Even though I got the fancy new one, it still lasts only about a day. Phones have ended up being the device that does everything, instead of us using a handful of devices that each do their one thing really well.

I really agree with this sentiment.

But interestingly, I don't come from a background of ever being truly into personal computers. I never had the opportunity to hack on machines or really truly own my own computer until later in life, so my relationship with all of this is fairly specifically software. For people like me, it's been hard to embrace the magic of personal computers as you are describing it.

And then once I had the money to spend on personal computing if I cared to and the technical expertise to get good at it if I ever cared to... I found that I didn't really have the setup for it. I lived in New York City. Smallish apartments and I did my computing at work. Even though I could have gotten into this game a bit more, I felt like I was always fighting a bit of an uphill battle.

Long story short, recently I moved a bit out of the city and I finally feel like I can be a part of this world without having to make a bunch of tradeoffs in my life. And if remote work becomes more of a thing, and I think it will, I think it will lead to a big renaissance in personal computing.

I remember as a kid using a computer was this amazing experience and now as an adult a computer is a tool to get things done. It would be great to get back to that feeling of awe and wonder about the possibilities of what could be done with a computer.

I almost never actually build the app that scratches my itch, even when it feels fully formed in my mind. Somehow it just feels too difficult. Was it easier once? So much configuration and boilerplate and so many decisions to make before the first line of code is even written. The thought of getting started is usually enough to make me settle for the close-enough app I can download. I'm not sure how I feel about it.

Great post!

I strongly agree that the majority of people hardly use the potential power of the devices they own and the software they have access to. We have to take into consideration that a big part of consumer software isn't doing anything innovative as much as it is putting technology that has existed for years into the hands of people who up to now have been incapable of using it due to lack of technical knowledge.

Think about that pesky WinRAR. There's nothing that software does that we can't accomplish for free using open-source tools. What we end up paying for is the UI that wraps all the complicated (yet free) tools that do the actual work.

The fact that Apple is the "in" computer company is evidence that people would rather pay for bells and whistles over functionality. You can get a computer of equal specs for half the price if you plan on running Linux or BSD instead of Mac OSX bloatware but the Mac comes with an artist quality screen and Apple exclusive software (as in yes, please, make me pay extra to get locked in). Most people wouldn't be able to build a better computer if they tried due to lack of knowledge.

Having been born with Linux installed and not owning a Mac until I was already a man, having absolute control over my system and my tools is more important than the flashiness of my window minimizing animation.

Jeff, your article hit a home run for me. I love the comparison you used in the last sentence - "A tool that can become any tool is nothing less than an imagination compiler". I've never been much of a hardware guy but over the last year, I found myself gravitating more and more to IoT and embedded systems. This has very little to do with my day-to-day job but I just want to understand how it all works. There's been an itch I felt that over the last 10 years of programming I haven't made anything that would be useful for me or people close to me. I want to build something for myself, my family and my small business.

Your article put it into words much better than I could. And it threw me into a deep reflection.

Thank you, and have a wonderful day.

I can relate very much to the feeling that somewhere along the way something fundamentally changed in the field of computing.

I suspect the reason for this, and for my inability to really pin it down, has something to do with a fundamental problem of grasping exponential growth. And that outlook also might be a generational experience. I started out with a then (1995) outdated 80286 (for which my uncle had no use anymore). And for the next years, each successor machine I owned more than doubled its immediate predecessor in clock speed, memory, etc. But Moore's law reached saturation a few years ago, and neither the number of cores nor the clock rate has increased significantly since, and frankly I stopped bothering.

I have more than once wondered what would have happened if I had entered the field when the plateau was already reached. Would I ever have gotten into programming, if it had not been a necessity to do anything really fun with my first computer?

Commercialization, as you rightly pointed out, is a double-edged sword. Computers now are a commodity, or a utility even, more akin to electricity and water than to most physical products. It managed to lower the barrier to entry and raise it at the same time, because the industry found it to be more profitable to lure users into a permanently locked-in & vendor-dependent, albeit comfortable, position. It transformed what was a maker culture into a consumer culture.

Everybody in this community is firmly on the maker side, which also places us firmly and probably permanently in an absolute minority position. The majority is not to blame for lacking perspective, because we, as a field and an industry, have worked hard to create a silo. We are still, in the words of Bob Barton, the high-priests of a low cult. Call me naive, but I don't give up on the thought that better ways of thinking about and doing things are still to be discovered.

Came for the nice Commodore 64 images, got a great article instead.

Great article Jeff.

Hyperfiddle is an application builder built on horizontally scalable, immutable, hyper relational primitives.

It's the biggest breakthrough I've seen since meteorjs, in a web environment that feels lacking in imagination.

"Imagination Engineer" is now my new job title. Thank you.

Never

Rust seems to be going against this trend.

Most importantly, it's a joy to use, and the tradeoffs that still exist are tradeoffs for more than just performance, such as correctness.

And what's better than correctness? :)

Off-topic - is your progrium.com domain down? Link is dead for me.

Yes it is. :'(