When administering any technology stack, we've all asked the following questions, either of ourselves or of our peers:

- Is the stack optimally configured?

- Are there any issues that require my (or my team's) immediate attention?

- Are there any potential "gotchas" that need to be addressed in the coming weeks or months?

To answer these questions about Puppet, administrators have historically turned to:

- Examining the output of various HTTP API calls.

- Reviewing operational dashboards containing visual representations of various metrics.

- Parsing the log file(s) for various components.

While all of these sources provide invaluable insight into how a Puppet installation is operating, they share one thing in common: they present an overwhelming amount of information to the administrator. Making sense of that information requires prior experience of what to look for, where to look, and how to obtain it. Experience aside, grokking all of that information takes time, and that is time you likely don't have to spare in the middle of an overnight on-call shift. Even if you do find the culprit, where did that notepad go with your cryptic notes on how you resolved it the last time you encountered this scenario? Or maybe this is your first time in this scenario and your Google-Fu is failing you in your caffeine-deprived state?

Alright, enough with the hypotheticals. What if I told you there was a simpler option? Well, you're in luck: the puppetlabs/puppet_status_check module provides a proactive way to determine when a Puppet installation is not in an ideal state. The module uses pre-configured indicators to give you simplified output that shows what is wrong, and it also points you to the next steps for resolution.

"That sounds great and all, but how do I set up and use this thing?" Well, let's dive in, shall we?

Setup

First things first: we need to get the module into our codebase. If you use a control repository and a Puppetfile to manage the contents of your codebase, just add the following line to your Puppetfile. The module has no dependencies on other modules, so nothing else is needed:

mod 'puppetlabs-puppet_status_check', '0.9.3'

Be sure to deploy your code if you don't have a webhook or some other automation in place to handle this for you. For example, r10k users may deploy locally on their Puppet server(s) by running the following:

r10k deploy environment production -mv

Now the next time the Puppet agent runs on nodes in this environment, the puppet_status_check and puppet_status_check_role facts will be plugin-synced onto each node. These facts are what ultimately provide us with the information that we are after.

However, before we can use these facts, we first need to enable them. We can also optionally configure which subset of checks we'd like to take into account.

To enable the status check facts, we simply need to classify our node(s) with the puppet_status_check class. For example, you might add the following to your control repository’s manifests/site.pp:

node default {
  include 'puppet_status_check'
}

Note that the default node definition applies to every node that does not have a more specific node definition in manifests/site.pp.

By default, the puppet_status_check class sets the status check role to agent. This means that on these nodes, only the status checks related to the operation of the Puppet agent are enabled.

On your Puppet server, it is useful to know that the Puppet agent is operating correctly, but what about the other important services that run on a Puppet server? To enable the status checks for those services, all we need to do is set the role parameter of the puppet_status_check class to primary for the relevant node(s). For example, your manifests/site.pp might also contain a node definition like this:

node 'puppet-primary.corp.com' {
  class { 'puppet_status_check':
    role => 'primary',
  }
}

Once these classification changes have been deployed to your Puppet server(s) and the Puppet agent has run on your node(s), we are finally ready to check out all of our hard work.

Reviewing Output

Let's start by looking at the puppet_status_check fact directly on one of our nodes that has the status check role set to agent:

root@agent01:~# puppet facts puppet_status_check
{
  "puppet_status_check": {
    "AS001": true,
    "AS003": true,
    "AS004": true,
    "S0001": false,
    "S0003": true,
    "S0012": true,
    "S0013": true,
    "S0021": true,
    "S0030": true
  }
}

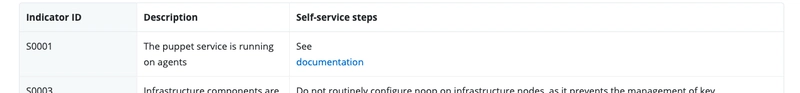

Ideally, every status check in this output returns true, which means that whatever that particular check looks for is as expected. Keys such as AS001 are indicator IDs, and you can cross-reference them with the table in the puppetlabs/puppet_status_check module's documentation.
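If you'd rather not check each value by eye, you could use a short Ruby script (Ruby being the agent's vendored runtime, which this post also uses later). The minimal sketch below lists the indicators that are not returning true and exits non-zero when there are any, so it could feed a cron job or a monitoring check. It assumes the JSON shape shown above, and the file name failing_checks.rb is hypothetical.

```ruby
require 'json'

# Returns the sorted IDs of indicators that are not reporting true.
# Assumes the `puppet facts puppet_status_check` JSON shape shown above.
def failing_indicators(facts_json)
  checks = JSON.parse(facts_json).fetch('puppet_status_check', {})
  checks.reject { |_id, passed| passed == true }.keys.sort
end

# Only runs as a script when given an input argument ("-" reads stdin),
# exiting non-zero if any indicator is failing.
if $PROGRAM_NAME == __FILE__ && !ARGV.empty?
  failing = failing_indicators(ARGF.read)
  if failing.empty?
    puts 'All puppet_status_check indicators are passing'
  else
    puts "Failing indicators: #{failing.join(', ')}"
    exit 1
  end
end
```

For example, you could run `puppet facts puppet_status_check | /opt/puppetlabs/puppet/bin/ruby failing_checks.rb -`. The trailing `-` tells the script to read standard input.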

On my example node, S0001 is returning false, which means something is not quite right with the Puppet agent on this particular node. By referencing the table in the module's documentation, I can quickly determine that the puppet service is not running on this node. The table even lists steps that tell me how to rectify the situation (in this case, a documentation link).
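To keep a little context next to the IDs while you're on call, you could pair the fact output with a small lookup table. This is only a sketch. The table is hypothetical and contains just the descriptions that appear in this post: S0001 is paraphrased from the paragraph above, and the rest are copied from the agent notices in the Indicator Exclusions section. The module's documentation remains the place to go for the full list and for the resolution steps.

```ruby
# Hypothetical lookup table built only from descriptions quoted in this post;
# the module's documentation is the complete, authoritative source.
INDICATOR_DESCRIPTIONS = {
  'S0001' => 'The puppet service is running (paraphrased from this article)',
  'S0007' => 'Checks that there is at least 20% disk space free on PostgreSQL data partition.',
  'S0010' => 'Checks that puppetdb service is running and enabled on relevant components.',
  'S0011' => 'Checks that postgres service is running and enabled on relevant components.',
  'S0027' => 'Checks that the Puppetdb JVM heap max is set to an efficient volume.',
  'S0029' => 'Checks whether the number of current connections to PostgreSQL DB is approaching 90% of the max_connections defined.'
}.freeze

# Turns a hash of indicator ID => boolean into readable lines for the
# indicators that are not passing, falling back to a pointer to the docs.
def describe_failures(checks)
  checks.reject { |_id, passed| passed == true }.keys.sort.map do |id|
    "#{id}: #{INDICATOR_DESCRIPTIONS.fetch(id, 'see the module documentation')}"
  end
end
```

For example, `describe_failures({ 'S0001' => false, 'S0003' => true })` returns a single line describing S0001.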

Let's follow the self-service steps and see whether we can resolve the issue.

root@agent01:~# puppet resource service puppet ensure=running
Notice: /Service[puppet]/ensure: ensure changed 'stopped' to 'running'
service { 'puppet':
  ensure   => 'running',
  provider => 'systemd',
}
root@agent01:~# puppet facts puppet_status_check
{
  "puppet_status_check": {
    "AS001": true,
    "AS003": true,
    "AS004": true,
    "S0001": true,
    "S0003": true,
    "S0012": true,
    "S0013": true,
    "S0021": true,
    "S0030": true
  }
}

Great! Now that all the checks are returning true on this node, the Puppet agent is in good shape again.

All right, I know what you are probably thinking: "You've only looked at the puppet_status_check fact directly on one node. I have hundreds (or thousands) of nodes; wouldn't it be tedious to check each one individually?" Yes, indeed it would! Let's take a look at our options.

Viewing status for multiple nodes - Bolt

For Bolt users, the puppetlabs/puppet_status_check module conveniently provides a puppet_status_check::summary plan that gathers this information across multiple nodes. For example:

someuser@jumpbox:~# bolt plan run puppet_status_check::summary targets=puppet-primary.corp.com,agent01.corp.com
Starting: plan puppet_status_check::summary
Finished: plan puppet_status_check::summary in 7.85 sec
{
  "nodes": {
    "details": {
      "puppet-primary.corp.com": {
        "passing_tests_count": 32,
        "failed_tests_count": 0,
        "failed_tests_details": [
        ]
      },
      "agent01.corp.com": {
        "passing_tests_count": 9,
        "failed_tests_count": 0,
        "failed_tests_details": [
        ]
      }
    },
    "passing": [
      "puppet-primary.corp.com",
      "agent01.corp.com"
    ],
    "failing": [
    ]
  },
  "errors": {
  },
  "status": "passing",
  "passing_node_count": 2,
  "failing_node_count": 0
}

In this example I explicitly target the two nodes we set up earlier. In practice, you might want to use inventory files or connect Bolt to PuppetDB to define groups, so that you don't need to list nodes individually.
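You might want to post-process the plan result, for instance to alert only on failing nodes. Here's a rough Ruby sketch for that. It assumes you capture the result as JSON (Bolt's `--format json` option should help here) and that the result has the nodes.details / nodes.failing shape shown above. The file name bolt_failures.rb is hypothetical.

```ruby
require 'json'

# Returns { certname => failed_tests_details } for every node that the
# puppet_status_check::summary result lists as failing. Assumes the result
# shape shown above (nodes.details and nodes.failing).
def failing_nodes(summary_json)
  nodes = JSON.parse(summary_json).fetch('nodes', {})
  details = nodes.fetch('details', {})
  nodes.fetch('failing', []).map do |certname|
    [certname, details.fetch(certname, {}).fetch('failed_tests_details', [])]
  end.to_h
end

# Only runs as a script when given an input argument ("-" reads stdin).
if $PROGRAM_NAME == __FILE__ && !ARGV.empty?
  failing = failing_nodes(ARGF.read)
  failing.each { |certname, failed| puts "#{certname}: #{failed.inspect}" }
  exit(failing.empty? ? 0 : 1)
end
```

For example, you could pipe `bolt plan run puppet_status_check::summary targets=... --format json` into `ruby bolt_failures.rb -`. The script exits non-zero when any node is failing.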

Viewing status for multiple nodes - PuppetDB

For PuppetDB users, you can also leverage the PuppetDB API to query the database for the most recent puppet_status_check fact output reported by each node.

An example querying for all nodes classified with the puppet_status_check class:

root@puppet-primary:~# curl -s -X GET https://$(puppet config print certname):8081/pdb/query/v4 \
  --cacert $(puppet config print localcacert) \
  --cert $(puppet config print hostcert) \
  --key $(puppet config print hostprivkey) \
  --data-urlencode 'pretty=true' \
  --data-urlencode 'query=facts[certname,value] { name = "puppet_status_check" }'
[ {
  "certname": "agent01.corp.com",
  "value": {
    "AS001": true,
    "S0003": true,
    "S0001": true,
    "S0021": true,
    "S0030": true,
    "S0013": true,
    "AS003": true,
    "S0012": true,
    "AS004": true
  }
},
{
  "certname": "puppet-primary.corp.com",
  "value": {
    "S0035": true,
    "S0039": true,
    "AS001": true,
    "S0036": true,
    "S0038": true,
    "S0003": true,
    "S0001": true,
    "S0007": true,
    "S0008": true,
    "S0021": true,
    "S0014": true,
    "S0030": true,
    "S0024": true,
    "S0010": true,
    "S0034": true,
    "S0009": true,
    "S0005": true,
    "S0019": true,
    "S0045": true,
    "S0011": true,
    "S0017": true,
    "S0004": true,
    "S0033": true,
    "S0029": true,
    "S0013": true,
    "S0027": true,
    "AS003": true,
    "S0023": true,
    "S0016": true,
    "S0012": true,
    "S0026": true,
    "AS004": true
  }
} ]
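If you'd rather not shell out to curl, you can issue the same query with Ruby's standard library (net/http and openssl). This is a sketch, not an official client. The helper names are hypothetical, and the sketch puts the PQL in the URL query string (as `curl -G` would) rather than in the request body.

```ruby
require 'json'
require 'net/http'
require 'openssl'
require 'uri'

# Builds a GET URL for PuppetDB's /pdb/query/v4 endpoint with the PQL
# query form-encoded into the query string.
def pdb_query_uri(host, pql, port: 8081)
  uri = URI::HTTPS.build(host: host, port: port, path: '/pdb/query/v4')
  uri.query = URI.encode_www_form(query: pql)
  uri
end

# Sends the query using the node's Puppet certificates. Pass the paths
# printed by `puppet config print localcacert`, `hostcert` and `hostprivkey`.
def pdb_query(host, pql, cacert:, cert:, key:)
  uri = pdb_query_uri(host, pql)
  http = Net::HTTP.new(uri.host, uri.port)
  http.use_ssl = true
  http.verify_mode = OpenSSL::SSL::VERIFY_PEER
  http.ca_file = cacert
  http.cert = OpenSSL::X509::Certificate.new(File.read(cert))
  http.key = OpenSSL::PKey.read(File.read(key))
  response = http.request(Net::HTTP::Get.new(uri))
  raise "PuppetDB returned HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end
```

For example, `pdb_query('puppet-primary.corp.com', 'facts[certname,value] { name = "puppet_status_check" }', cacert: ..., cert: ..., key: ...)` returns the same array of certname/value hashes shown above, already parsed into Ruby objects.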

An example querying for a particular puppet_status_check_role, in this case primary:

root@puppet-primary:~# curl -s -X GET https://$(puppet config print certname):8081/pdb/query/v4 \
  --cacert $(puppet config print localcacert) \
  --cert $(puppet config print hostcert) \
  --key $(puppet config print hostprivkey) \
  --data-urlencode 'pretty=true' \
  --data-urlencode 'query=facts[certname,value] { name = "puppet_status_check" and certname in facts[certname] { name = "puppet_status_check_role" and value = "primary"}}'
[ {
  "certname": "puppet-primary.corp.com",
  "value": {
    "S0035": true,
    "S0039": true,
    "AS001": true,
    "S0036": true,
    "S0038": true,
    "S0003": true,
    "S0001": true,
    "S0007": true,
    "S0008": true,
    "S0021": true,
    "S0014": true,
    "S0030": true,
    "S0024": true,
    "S0010": true,
    "S0034": true,
    "S0009": true,
    "S0005": true,
    "S0019": true,
    "S0045": true,
    "S0011": true,
    "S0017": true,
    "S0004": true,
    "S0033": true,
    "S0029": true,
    "S0013": true,
    "S0027": true,
    "AS003": true,
    "S0023": true,
    "S0016": true,
    "S0012": true,
    "S0026": true,
    "AS004": true
  }
} ]
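With many nodes, it can help to flip the response around and see which nodes share a failing indicator, which may hint at a common cause. Below is a minimal sketch that does this. It assumes the array-of-certname/value response shape shown above, and the function and file names are hypothetical.

```ruby
require 'json'

# Groups failing indicators by ID: { indicator_id => [certname, ...] }.
# Assumes the PuppetDB response shape shown above.
def failures_by_indicator(pdb_json)
  failures = Hash.new { |hash, id| hash[id] = [] }
  JSON.parse(pdb_json).each do |row|
    row.fetch('value', {}).each do |id, passed|
      failures[id] << row['certname'] unless passed == true
    end
  end
  failures.sort.to_h # plain hash, ordered by indicator ID
end

# Only runs as a script when given an input argument ("-" reads stdin).
if $PROGRAM_NAME == __FILE__ && !ARGV.empty?
  failures_by_indicator(ARGF.read).each do |id, certnames|
    puts "#{id}: #{certnames.join(', ')}"
  end
end
```

You could pipe either of the curl commands above into `/opt/puppetlabs/puppet/bin/ruby failures_by_indicator.rb -`. Each output line lists one failing indicator followed by the nodes reporting it.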

Bonus Tip

If you are like me and it irks you that the status indicators are not sorted in the output, you can always use the vendored Ruby runtime that ships with the Puppet agent, plus a little inline Ruby code:

root@puppet-primary:~# curl -s -X GET https://$(puppet config print certname):8081/pdb/query/v4 \
  --cacert $(puppet config print localcacert) \
  --cert $(puppet config print hostcert) \
  --key $(puppet config print hostprivkey) \
  --data-urlencode 'pretty=true' \
  --data-urlencode 'query=facts[certname,value] { name = "puppet_status_check" and certname in facts[certname] { name = "puppet_status_check_role" and value = "primary"}}' \
  | /opt/puppetlabs/puppet/bin/ruby -rjson -e "puts JSON.pretty_generate(JSON.parse(STDIN.read).each {|h| h['value'] = h['value'].sort.to_h })"
[
  {
    "certname": "puppet-primary.corp.com",
    "value": {
      "AS001": true,
      "AS003": true,
      "AS004": true,
      "S0001": true,
      "S0003": true,
      "S0004": true,
      "S0005": true,
      "S0007": true,
      "S0008": true,
      "S0009": true,
      "S0010": true,
      "S0011": true,
      "S0012": true,
      "S0013": true,
      "S0014": true,
      "S0016": true,
      "S0017": true,
      "S0019": true,
      "S0021": true,
      "S0023": true,
      "S0024": true,
      "S0026": true,
      "S0027": true,
      "S0029": true,
      "S0030": true,
      "S0033": true,
      "S0034": true,
      "S0035": true,
      "S0036": true,
      "S0038": true,
      "S0039": true,
      "S0045": true
    }
  }
]
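If the one-liner becomes hard to read or maintain, the same idea works as a small script. This sketch sorts each node's indicators exactly as the one-liner does and, as an extra, also orders the nodes by certname. The file name sort_checks.rb is hypothetical.

```ruby
require 'json'

# Sorts each node's indicators by ID (as the one-liner does) and also
# orders the nodes themselves by certname.
def sort_status_checks(pdb_json)
  JSON.parse(pdb_json)
      .map { |row| row.merge('value' => row.fetch('value', {}).sort.to_h) }
      .sort_by { |row| row['certname'].to_s }
end

# Only runs as a script when given an input argument ("-" reads stdin).
if $PROGRAM_NAME == __FILE__ && !ARGV.empty?
  puts JSON.pretty_generate(sort_status_checks(ARGF.read))
end
```

To use it, replace the `-e "..."` part of the pipeline with `sort_checks.rb -`.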

Indicator Exclusions

While I recommend starting by reporting on all the default indicators, there may be scenarios where your organization wants to exclude some of them.

For example, let's say you configured your primary Puppet server exactly as described above, with the role of primary. This enables indicators for the Puppet agent, Puppet server, PuppetDB, and the Certificate Authority. However, suppose PuppetDB is not installed on that node. Every time the Puppet agent runs there, you'd see output similar to:

root@puppet-primary:~# puppet agent -t
Info: Using environment 'production'
Info: Retrieving pluginfacts
Info: Retrieving plugin
Info: Loading facts
Warning: Facter: Error in fact 'puppet_status_check.S0007' when checking postgres info: statvfs() function failed: No such file or directory
Warning: Facter: Error in fact 'puppet_status_check.S0011' failed to get service name: undefined method `[]' for nil:NilClass
tail: cannot open '/var/log/puppetlabs/puppetdb/puppetdb.log' for reading: No such file or directory
Notice: Requesting catalog from puppet-primary.corp.com:8140 (172.31.112.200)
Notice: Catalog compiled by puppet-primary.corp.com
Info: Caching catalog for puppet-primary.corp.com
Info: Applying configuration version 'puppet-primary-production-f457e3d2333'
Notice: S0007 is at fault, Checks that there is at least 20% disk space free on PostgreSQL data partition. Refer to documentation for required action.
Notice: /Stage[main]/Puppet_status_check/Notify[puppet_status_check S0007]/message: defined 'message' as 'S0007 is at fault, Checks that there is at least 20% disk space free on PostgreSQL data partition. Refer to documentation for required action.'
Notice: S0010 is at fault, Checks that puppetdb service is running and enabled on relevant components. Refer to documentation for required action.
Notice: /Stage[main]/Puppet_status_check/Notify[puppet_status_check S0010]/message: defined 'message' as 'S0010 is at fault, Checks that puppetdb service is running and enabled on relevant components. Refer to documentation for required action.'
Notice: S0011 is at fault, Checks that postgres service is running and enabled on relevant components. Refer to documentation for required action.
Notice: /Stage[main]/Puppet_status_check/Notify[puppet_status_check S0011]/message: defined 'message' as 'S0011 is at fault, Checks that postgres service is running and enabled on relevant components. Refer to documentation for required action.'
Notice: S0027 is at fault, Checks that the Puppetdb JVM heap max is set to an efficient volume. Refer to documentation for required action.
Notice: /Stage[main]/Puppet_status_check/Notify[puppet_status_check S0027]/message: defined 'message' as 'S0027 is at fault, Checks that the Puppetdb JVM heap max is set to an efficient volume. Refer to documentation for required action.'
Notice: S0029 is at fault, Checks whether the number of current connections to PostgreSQL DB is approaching 90% of the max_connections defined. Refer to documentation for required action.
Notice: /Stage[main]/Puppet_status_check/Notify[puppet_status_check S0029]/message: defined 'message' as 'S0029 is at fault, Checks whether the number of current connections to PostgreSQL DB is approaching 90% of the max_connections defined. Refer to documentation for required action.'
Notice: Applied catalog in 0.02 seconds

In this scenario, all of this output is noise, since you already know that PuppetDB is not installed. Do you really want to be alerted that the puppetdb service is not running, and so on? I'd wager not, so let's silence that noise, shall we?

To disable indicators, all we need to do is set the indicator_exclusions parameter of the puppet_status_check class and tell it which indicators to exclude.

For example:

node 'puppet-primary.corp.com' {
  class { 'puppet_status_check':
    role                 => 'primary',
    indicator_exclusions => [
      'S0007', 'S0010', 'S0011',
      'S0017', 'S0027', 'S0029'
    ],
  }
}
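Rather than copying IDs out of the agent output by hand, you could extract them with a short script. The rough sketch below assumes the "Notice: <ID> is at fault" message format shown above, and the file name fault_ids.rb is hypothetical. Treat the result as a starting point only. The list above also excludes S0017, which did not report a fault in that run, so review the candidates against the module's documentation before you exclude anything.

```ruby
# Matches notices such as "Notice: S0007 is at fault, ..." in agent output,
# but not the longer "Notice: /Stage[main]/..." lines for the same Notify.
FAULT_NOTICE = /Notice: ([A-Z]+\d+) is at fault/

# Returns the unique, sorted indicator IDs reported as at fault.
def faulted_indicators(agent_output)
  agent_output.scan(FAULT_NOTICE).flatten.uniq.sort
end

# Formats IDs as a Puppet array literal for the indicator_exclusions parameter.
def puppet_array_literal(ids)
  "[#{ids.map { |id| "'#{id}'" }.join(', ')}]"
end

# Only runs as a script when given an input argument ("-" reads stdin).
if $PROGRAM_NAME == __FILE__ && !ARGV.empty?
  puts puppet_array_literal(faulted_indicators(ARGF.read))
end
```

For example: `puppet agent -t --color=false 2>&1 | /opt/puppetlabs/puppet/bin/ruby fault_ids.rb -`.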

After deploying your code, run the Puppet agent for the configuration to take effect.

root@puppet-primary:~# puppet agent -t
Info: Using environment 'production'
Info: Retrieving pluginfacts
Info: Retrieving plugin
Info: Loading facts
Warning: Facter: Error in fact 'puppet_status_check.S0007' when checking postgres info: statvfs() function failed: No such file or directory
Warning: Facter: Error in fact 'puppet_status_check.S0011' failed to get service name: undefined method `[]' for nil:NilClass
tail: cannot open '/var/log/puppetlabs/puppetdb/puppetdb.log' for reading: No such file or directory
Notice: Requesting catalog from puppet-primary.corp.com:8140 (172.31.112.200)
Notice: Catalog compiled by puppet-primary.corp.com
Info: Caching catalog for puppet-primary.corp.com
Info: Applying configuration version 'puppet-primary-5bc7859de41'
Notice: /Stage[main]/Puppet_status_check/File[/opt/puppetlabs/puppet/cache/state/status_check.json]/content:
--- /opt/puppetlabs/puppet/cache/state/status_check.json 2024-07-31 22:18:33.311548817 +0000
+++ /tmp/puppet-file20240731-23598-baocro 2024-07-31 22:29:21.437758201 +0000
@@ -2,5 +2,5 @@
   "role": "primary",
   "pg_config": "pg_config",
   "postgresql_service": "postgresql@%{pg_major_version}-main",
-  "indicator_exclusions": "[]"
+  "indicator_exclusions": "[S0007, S0010, S0011, S0017, S0027, S0029]"
 }
Notice: /Stage[main]/Puppet_status_check/File[/opt/puppetlabs/puppet/cache/state/status_check.json]/content: content changed '{sha256}e62e3a6ce0380a07dc426d2c936a74061daba893846696a19c0d91ef69f4cc6c' to '{sha256}6db3bfddabe0b2f6e78d25a229184746ca3d003caa7980c9fbc20370d5ccb265'
Notice: Applied catalog in 0.05 seconds
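Judging by the diff above, the class writes its settings, including the exclusions, to /opt/puppetlabs/puppet/cache/state/status_check.json, and the facts presumably read that file on later runs. To confirm on a node which exclusions are in place, you could read that file with a sketch like the one below. It is based only on the diff shown here, where indicator_exclusions is stored as a string such as "[S0007, S0010]". The file's format is internal to the module and may differ between versions.

```ruby
require 'json'

# State file path as shown in the agent output above.
STATE_FILE = '/opt/puppetlabs/puppet/cache/state/status_check.json'

# The diff above shows indicator_exclusions stored as a string such as
# "[S0007, S0010]" rather than a JSON array; this accepts either form.
def exclusions_from_state(json_text)
  raw = JSON.parse(json_text).fetch('indicator_exclusions', [])
  return raw if raw.is_a?(Array)

  raw.gsub(/[\[\]]/, '').split(',').map(&:strip).reject(&:empty?)
end

# Prints one excluded ID per line when the state file exists on this node.
if $PROGRAM_NAME == __FILE__ && File.readable?(STATE_FILE)
  puts exclusions_from_state(File.read(STATE_FILE))
end
```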

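Because facts are resolved before the catalog is applied, that run still evaluated the excluded indicators, which is why the Facter warnings appear. The next time the Puppet agent runs, all that noise should be gone!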

root@puppet-primary:~# puppet agent -t
Info: Using environment 'production'
Info: Retrieving pluginfacts
Info: Retrieving plugin
Info: Loading facts
Notice: Requesting catalog from puppet-primary.corp.com:8140 (172.31.112.200)
Notice: Catalog compiled by puppet-primary.corp.com
Info: Caching catalog for puppet-primary.corp.com
Info: Applying configuration version 'puppet-primary-production-5bc7859de41'
Notice: Applied catalog in 0.04 seconds

The excluded indicators should no longer appear in the fact output either:

root@puppet-primary:~# puppet facts puppet_status_check
{
  "puppet_status_check": {
    "AS001": true,
    "AS003": false,
    "AS004": true,
    "S0001": true,
    "S0003": true,
    "S0004": true,
    "S0005": true,
    "S0008": true,
    "S0009": true,
    "S0012": true,
    "S0013": true,
    "S0014": true,
    "S0016": true,
    "S0019": true,
    "S0021": true,
    "S0023": true,
    "S0024": true,
    "S0026": true,
    "S0030": true,
    "S0033": true,
    "S0034": true,
    "S0035": true,
    "S0036": true,
    "S0038": true,
    "S0039": true,
    "S0045": true
  }
}
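To double-check the result, a small sketch can compare the fact output with your exclusion list. It reports any excluded IDs that still appear, plus any indicators that are not returning true. Run against the output above, it would flag AS003, which is reporting false and is worth cross-referencing in the module's documentation. The function name is hypothetical, and the sketch assumes the same JSON shape as the `puppet facts` output shown above.

```ruby
require 'json'

# Given `puppet facts puppet_status_check` output and the excluded IDs,
# reports excluded IDs that are still present and indicators not returning true.
def verify_exclusions(facts_json, excluded)
  checks = JSON.parse(facts_json).fetch('puppet_status_check', {})
  {
    still_reported: (checks.keys & excluded).sort,
    failing: checks.reject { |_id, passed| passed == true }.keys.sort
  }
end

# Usage as a script: ruby verify_exclusions.rb FACTS_JSON_FILE ID [ID ...]
if $PROGRAM_NAME == __FILE__ && ARGV.size > 1
  result = verify_exclusions(File.read(ARGV[0]), ARGV.drop(1))
  puts JSON.pretty_generate(result)
end
```

For example: `puppet facts puppet_status_check > /tmp/psc.json`, then `ruby verify_exclusions.rb /tmp/psc.json S0007 S0010 S0011 S0017 S0027 S0029`.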
