Handling large files (for example, CSV/text files) or HTTP data streams in PHP can be challenging, especially when using traditional methods that load all data into memory. In this article, we’ll compare two approaches:

- Using an array to store all rows (memory-intensive).

- Using PHP Generators (memory-efficient).

We’ll see why generators are a game-changer for large file (or stream) processing by tracking memory consumption in both cases.

The classical approach: reading a file into an array

Let’s start with the conventional way: read the entire file and store every row in an array.

Here, data.csv is a large CSV file. The code below opens it and appends each parsed row to an array, so the bigger the file, the bigger the array.

    <?php

    function parseCsv($filePath)
    {
        $rows = [];
        $handle = fopen($filePath, 'r');
        if ($handle === false) {
            throw new RuntimeException("Unable to open $filePath");
        }

        while (($data = fgetcsv($handle)) !== false) {
            $rows[] = $data; // Storing each row in an array
        }
        fclose($handle);

        return $rows;
    }

    // Track memory usage
    $startMemory = memory_get_usage();
    $rows = parseCsv('data.csv');
    $endMemory = memory_get_usage();

    echo "Memory used: " . ($endMemory - $startMemory) . " bytes\n";

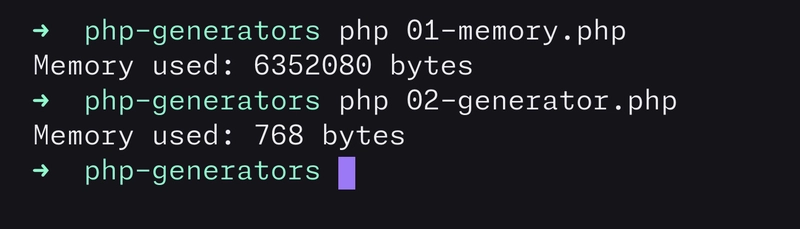

For example, for a data.csv with 10931 lines...

wc -l data.csv

10931 data.csv

...the memory used by the running script is:

php 01-memory.php

Memory used: 6352080 bytes

The issue with this approach

- The entire file is loaded into memory.

- For large files, this can lead to high memory consumption and even out-of-memory errors.

A more efficient way: using Generators

PHP generators allow us to process one row at a time without storing the entire dataset in memory.

    <?php

    function parseCsvGenerator($filePath)
    {
        $handle = fopen($filePath, 'r');
        if ($handle === false) {
            throw new RuntimeException("Unable to open $filePath");
        }

        try {
            while (($data = fgetcsv($handle)) !== false) {
                yield $data; // Yield one row at a time
            }
        } finally {
            fclose($handle); // Also runs if the caller stops iterating early
        }
    }

    // Track memory usage
    $startMemory = memory_get_usage();
    foreach (parseCsvGenerator('data.csv') as $row) {
        // Process each row without storing all in memory
    }
    $endMemory = memory_get_usage();

    echo "Memory used: " . ($endMemory - $startMemory) . " bytes\n";

Running it against the same data.csv with 10931 lines...

wc -l data.csv

10931 data.csv

...the memory used by the running script is:

php 02-generator.php

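Memory used: 768 bytes

That is a few hundred bytes instead of the roughly 6 MB used by the array version for the same file, because only one row is held in memory at a time.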
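One caveat about these measurements: comparing memory_get_usage() before and after the work shows what is still allocated at the end, not what was in use along the way. The sketch below samples memory inside the loop instead. It is only an illustration: readRows() is a hypothetical copy of the generator above, and a generated temporary file stands in for data.csv.

```php
<?php

// Hypothetical helper mirroring parseCsvGenerator(), repeated so this sketch runs on its own.
function readRows(string $filePath): Generator
{
    $handle = fopen($filePath, 'r');
    if ($handle === false) {
        throw new RuntimeException("Unable to open $filePath");
    }
    try {
        // Arguments are spelled out because PHP 8.4 deprecates relying on the default escape value.
        while (($data = fgetcsv($handle, 0, ',', '"', '\\')) !== false) {
            yield $data;
        }
    } finally {
        fclose($handle);
    }
}

// Assumption: a generated temporary file stands in for data.csv.
$path = tempnam(sys_get_temp_dir(), 'csv');
$out = fopen($path, 'w');
for ($i = 0; $i < 10000; $i++) {
    fwrite($out, "$i,name-$i,value-$i\n");
}
fclose($out);

$baseline = memory_get_usage();
$rowCount = 0;
$highestSeen = 0;

foreach (readRows($path) as $row) {
    $rowCount++;
    // Sample while a row is actually held, not only after the loop has finished.
    $highestSeen = max($highestSeen, memory_get_usage() - $baseline);
}
unlink($path);

echo "Rows read: $rowCount\n";
echo "Highest usage seen inside the loop: $highestSeen bytes\n";
echo "Peak for the whole script: " . memory_get_peak_usage() . " bytes\n";
```

The exact numbers depend on the PHP version and the row size, so treat them as indicative only. What matters is that the in-loop figure stays roughly flat as the file grows.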

Generators explained

Generators in PHP provide an efficient way to iterate over large datasets without storing everything in memory.

Unlike traditional functions that return an array, generators produce values one at a time using yield, making them ideal for handling huge files, database queries, and infinite sequences.

What is the yield keyword?

The yield keyword pauses the function execution and returns a value, but unlike return, it allows the function to resume from where it left off. This makes it perfect for on-demand, memory-efficient iteration.

So, in a function, instead of returning a full array, we can use yield to produce values one at a time, allowing us to loop over them like an iterator. Let’s see how to transform a regular function into a generator.

Traditional function using return

    <?php

    function getNumbers()
    {
        return [1, 2, 3, 4, 5]; // Returns the full array at once
    }

    // Usage:
    foreach (getNumbers() as $num) {
        echo $num . " "; // Output: 1 2 3 4 5
    }

Optimized function using yield

    <?php

    function getNumbersGenerator()
    {
        for ($i = 1; $i <= 5; $i++) {
            yield $i; // Yields one value at a time
        }
    }

    // Usage:
    foreach (getNumbersGenerator() as $num) {
        echo $num . " "; // Output: 1 2 3 4 5
    }
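Both versions print the same thing, so the difference is easy to miss. To make the pause-and-resume behaviour visible, here is a small sketch that records what happens and in which order. The tracedNumbers() function and the $log array are made up for this illustration.

```php
<?php

// Hypothetical generator that records each step in $log (passed by reference).
function tracedNumbers(array &$log): Generator
{
    for ($i = 1; $i <= 3; $i++) {
        $log[] = "producing $i";
        yield $i; // Execution pauses here until the consumer asks for the next value
    }
    $log[] = "generator finished";
}

$log = [];
foreach (tracedNumbers($log) as $num) {
    $log[] = "consuming $num";
}

echo implode("\n", $log), "\n";
// producing 1
// consuming 1
// producing 2
// consuming 2
// producing 3
// consuming 3
// generator finished
```

The producing and consuming lines alternate: the function body only runs as far as the next yield each time the loop asks for a value.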

Why generators are better

1) Lower memory usage (main benefit)

Since generators yield one item at a time instead of storing everything in memory, they help avoid out-of-memory errors and make it practical to process huge datasets.

2) Better performance (less processing overhead)

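Traditional methods require building and maintaining large arrays, which takes extra CPU time.

Generators don’t need to allocate and free large chunks of memory, which can make execution faster for large inputs. For small datasets the difference is usually negligible, since each yield carries a little overhead of its own.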

3) Lazy evaluation (process as you go)

Instead of waiting for all data to load, generators process one item at a time, which is great for streaming large datasets or handling real-time data processing.
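As a rough illustration of processing as you go, the sketch below asks a generator for up to a million squares but stops at the first one above 50. The squares() function and the $produced counter are names invented for this example.

```php
<?php

// Hypothetical generator: yields 1, 4, 9, ... and counts how many values it actually produced.
function squares(int $limit, int &$produced): Generator
{
    for ($i = 1; $i <= $limit; $i++) {
        $produced++;
        yield $i * $i;
    }
}

$produced = 0;
$firstLarge = null;

foreach (squares(1000000, $produced) as $square) {
    if ($square > 50) {
        $firstLarge = $square;
        break; // Stop early: the remaining values are never computed
    }
}

echo "First square above 50: $firstLarge (values produced: $produced)\n";
// First square above 50: 64 (values produced: 8)
```

An array-based version would have computed and stored all one million squares before the loop could even start.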

4) Improved scalability

Since you don’t store everything in memory, your script can handle millions of rows without hitting the memory limit, as long as each row is processed and then discarded.

5) Easier to work with infinite sequences

Unlike arrays, generators don’t require a predefined size, making them perfect for infinite sequences, log file streaming, and real-time event handling.

Example: Reading a never-ending stream of live stock prices or logs.
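A live feed is hard to reproduce in a snippet, so here is a minimal stand-in: a generator that never ends, consumed through SPL's LimitIterator so that only the first five values are taken. The naturalNumbers() function is a hypothetical example, not something from a library.

```php
<?php

// Hypothetical endless generator: 1, 2, 3, ... with no upper bound.
function naturalNumbers(): Generator
{
    $n = 1;
    while (true) {
        yield $n++;
    }
}

$firstFive = [];
// LimitIterator stops after 5 items, so the endless loop above is never a problem.
foreach (new LimitIterator(naturalNumbers(), 0, 5) as $n) {
    $firstFive[] = $n;
}

echo implode(' ', $firstFive), "\n"; // 1 2 3 4 5
```

The same thing is impossible with an array, because the array would have to be built in full before the loop starts.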

When should you use generators?

- Large files (CSV, JSON, XML, logs, etc.)

- Streaming data (APIs, real-time feeds, AI streaming responses)

- Processing huge datasets in a memory-efficient way

- Generating large sequences without storing them
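For the streaming case, generators can also be chained so that each line flows through every step before the next one is read. This is only a sketch: streamLines() and onlyErrors() are hypothetical helpers, and an in-memory stream stands in for a real log file or HTTP response body.

```php
<?php

// Yields lines from any readable stream resource, one at a time.
function streamLines($stream): Generator
{
    while (($line = fgets($stream)) !== false) {
        yield rtrim($line, "\r\n");
    }
}

// Hypothetical filter stage: passes through only lines containing "ERROR".
function onlyErrors(iterable $lines): Generator
{
    foreach ($lines as $line) {
        if (strpos($line, 'ERROR') !== false) {
            yield $line;
        }
    }
}

// Assumption: php://memory stands in for a real file or network stream.
$stream = fopen('php://memory', 'w+');
fwrite($stream, "INFO boot\nERROR disk full\nINFO retry\nERROR timeout\n");
rewind($stream);

$errors = [];
foreach (onlyErrors(streamLines($stream)) as $line) {
    $errors[] = $line;
}
fclose($stream);

print_r($errors); // The two ERROR lines, in their original order
```

Neither stage keeps more than the current line, so the approach works the same way whether the stream holds four lines or four million.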

When not to use generators?

- When you need random access to all elements (e.g., modifying specific rows by index).

- When you must process data multiple times (generators can’t be rewound once they have started producing values).

- When you need sorting, filtering, or advanced operations on the full dataset at once.
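The rewind limitation is easy to run into by accident. Here is a small sketch of what happens on a second pass, plus the usual workaround of materializing the values with iterator_to_array() when the full dataset really is needed (for sorting, for example). The letters() function is a made-up example.

```php
<?php

function letters(): Generator
{
    yield 'a';
    yield 'b';
    yield 'c';
}

$gen = letters();

$firstPass = [];
foreach ($gen as $letter) {
    $firstPass[] = $letter;
}

// A second loop over the same, already finished generator throws an Exception.
$secondPassError = null;
try {
    foreach ($gen as $letter) {
        // Never reached
    }
} catch (Exception $e) {
    $secondPassError = $e->getMessage();
}
echo "Second pass failed: $secondPassError\n";

// Workaround: call the function again and collect everything into an array.
// This gives up the memory savings, so it only makes sense for data that fits in memory.
$all = iterator_to_array(letters(), false);
rsort($all);
echo implode(' ', $all), "\n"; // c b a
```

If the data is too large to materialize, the alternative is to call the generator function again for each pass, accepting that the file or stream is read more than once.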

Final thoughts

Generators are a powerful tool in PHP when working with large or streamed datasets. They help improve memory efficiency, performance, and scalability, making them a great alternative to traditional array-based processing.

Do you already use generators in your projects? Let me know in the comments!
