I always thought computers were better at math than people, until I tried to add 0.1 to 0.2 and got 0.30000000000000004 😕

JavaScript is just a toy language, so I figured it was a bug and tried the same thing in Python, but it gave me the same wrong answer. Then I discovered that these languages aren't actually broken; they just do floating-point arithmetic ✨
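Here is a minimal sketch of that experiment in JavaScript. The `nearlyEqual` helper is a hypothetical name of my own, not a built-in, and the tolerance it uses is only one reasonable choice for values near 1.

```javascript
// Reproduce the surprising sum.
const sum = 0.1 + 0.2;
console.log(sum);          // 0.30000000000000004
console.log(sum === 0.3);  // false

// Hypothetical helper: compare two numbers within a small relative tolerance
// instead of using strict equality.
function nearlyEqual(a, b, tolerance = Number.EPSILON) {
  const scale = Math.max(1, Math.abs(a), Math.abs(b));
  return Math.abs(a - b) <= tolerance * scale;
}

console.log(nearlyEqual(sum, 0.3)); // true
```

Comparing with a tolerance is a common way to live with the rounding rather than fight it, though the right tolerance depends on the size of the numbers involved.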

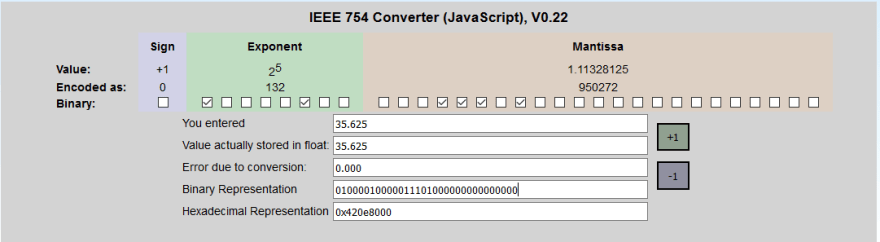

Computers have a limited amount of memory and need to make a trade-off between range and precision. In JavaScript, every `Number` is stored in 64 bits. That means integers are only exact up to 2^53 − 1 (about 16 digits), and any number carries roughly 15 to 17 significant decimal digits in total. It's called floating point because there is no fixed number of digits before or after the decimal point, which lets it represent a wide range of numbers, both big and small.
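The range-versus-precision trade-off can be poked at directly with a few built-in constants. This is just an illustrative sketch; the variable name `maxSafe` is my own.

```javascript
// Largest integer that a 64-bit float can still count up to without gaps.
const maxSafe = Number.MAX_SAFE_INTEGER; // 2^53 - 1
console.log(maxSafe);                     // 9007199254740991

// Past that point, neighbouring integers collapse into the same value.
console.log(maxSafe + 1 === maxSafe + 2);       // true
console.log(Number.isSafeInteger(maxSafe + 1)); // false

// The range, on the other hand, is enormous in both directions.
console.log(Number.MAX_VALUE); // 1.7976931348623157e+308
console.log(Number.MIN_VALUE); // 5e-324
```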

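The problem is that computers use a base-2 system (binary) while humans use base 10. A fraction like 0.1 has no finite binary representation, just as 1/3 has none in decimal, so it has to be rounded to fit the available bits. Those tiny rounding errors show up in the result, and that's why your computer sucks at math 🤕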
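To see the base-2 issue for yourself, you can print more digits than JavaScript normally shows. A small sketch, with variable names of my own choosing:

```javascript
// Ask for 20 decimal places to reveal the value actually stored for 0.1.
const storedTenth = (0.1).toFixed(20);
console.log(storedTenth); // 0.10000000000000000555

// Fractions built from powers of two fit in binary exactly...
const half = (0.5).toString(2);
const quarter = (0.25).toString(2);
console.log(half);    // 0.1
console.log(quarter); // 0.01
console.log(0.5 + 0.25 === 0.75); // true

// ...while 0.1 turns into a long repeating pattern that gets cut off.
const tenthInBinary = (0.1).toString(2);
console.log(tenthInBinary);
```

The sum of 0.5 and 0.25 is exact because both are powers of two; 0.1 and 0.2 are not, so each one is already slightly off before the addition happens.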
