1. Preface

typia challenges Agentic AI with its compiler skills.

This new challenge comes with a new open source framework, @agentica.

It specializes in LLM Function Calling and accomplishes everything through it. Simply list the functions you want to call, and you can create your own Agentic AI. If you're a TypeScript developer, you're now an AI developer. Let's enter this new era of AI development.

- @agentica: https://wrtnlabs.io/agentica
- typia: https://typia.io

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm } from "@samchon/openapi";
import typia from "typia";

const agent = new Agentica({
  controllers: [
    HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
    }),
    typia.llm.application<ShoppingCounselor, "chatgpt">(),
    typia.llm.application<ShoppingPolicy, "chatgpt">(),
    typia.llm.application<ShoppingSearchRag, "chatgpt">(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```

2. Outline

2.1. Transformer Library

```typescript
//----
// src/checkString.ts
//----
import typia, { tags } from "typia";

export const checkString = typia.createIs<string>();
```

```javascript
//----
// bin/checkString.js
//----
import typia from "typia";

export const checkString = (() => {
  return (input) => "string" === typeof input;
})();
```

typia is a transformer library that converts TypeScript types to runtime functions.

When you call a typia function, it's compiled as shown above. This is the key concept of typia: transforming TypeScript types into runtime functions. A call such as typia.createIs<T>() or typia.is<T>() is replaced by a dedicated type checker, generated by analyzing the target type T at the compilation level.

This feature enables developers to ensure type safety in their applications by leveraging TypeScript's static typing while also providing runtime validation. Instead of defining additional hand-made schemas, you can simply utilize pure TypeScript types.
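To make the idea concrete for an object type, here is a hand-written approximation of the kind of checker a transformer could emit. The `IMember` type and the `isMember` function are hypothetical names used only for illustration, and the code typia actually generates will differ in detail.

```typescript
// Hypothetical example type; it does not appear in typia itself.
interface IMember {
  id: string;
  email: string;
  age: number;
}

// Hand-written stand-in for what typia.createIs<IMember>() might compile to.
// It checks each property against the type declared in the interface.
const isMember = (input: unknown): input is IMember => {
  if (typeof input !== "object" || input === null) return false;
  const obj = input as Record<string, unknown>;
  return (
    typeof obj["id"] === "string" &&
    typeof obj["email"] === "string" &&
    typeof obj["age"] === "number"
  );
};

console.log(isMember({ id: "a1", email: "a@b.com", age: 30 })); // true
console.log(isMember({ id: "a1", email: "a@b.com", age: "30" })); // false
```

The point of the transformer is that nobody has to write or maintain this function: it is derived from the interface, so the two cannot disagree.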

Additionally, since the validation (or serialization) logic is generated by the compiler analyzing the TypeScript source code, it is both more accurate and faster than competing libraries in the benchmarks below.

Measured on AMD Ryzen 9 7940HS, ROG Flow X13

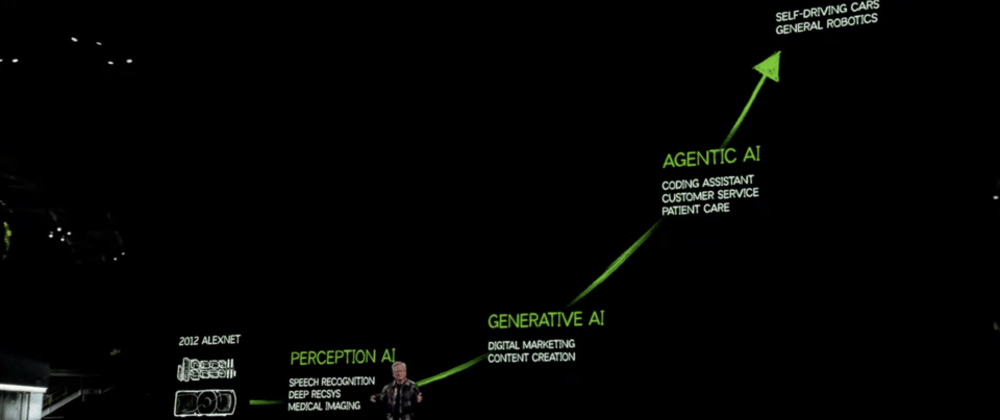

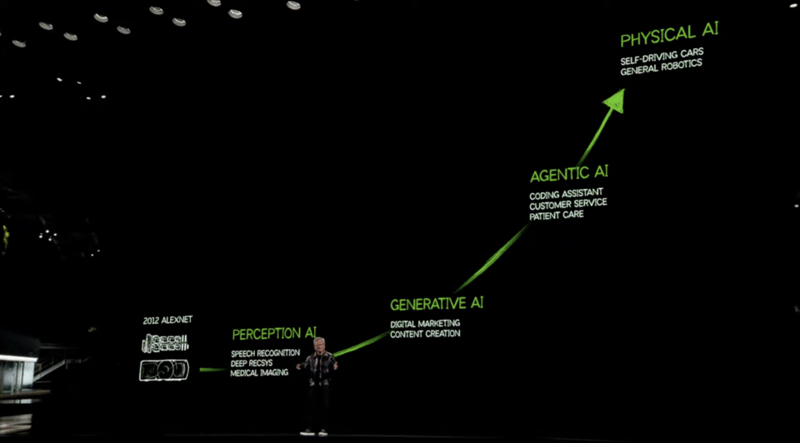

2.2. Challenge to Agentic AI

Jensen Huang Graph, and his advocacy: https://youtu.be/R0Erk6J8o70

With its TypeScript compilation capabilities, typia is challenging the Agentic AI framework landscape.

The new framework is called @agentica, and it specializes in LLM Function Calling, accomplishing everything through function calls. The function calling schema comes from the typia.llm.application<Class, Model>() function.

Here's a demonstration of a Shopping Mall chatbot composed using a Swagger/OpenAPI document from an enterprise-level shopping mall backend consisting of 289 API functions. As you can see in the video below, everything works smoothly. What's amazing is that this shopping chatbot achieves Agentic AI functionality using just a small model (gpt-4o-mini, reportedly around 8B parameters).

This is the new Agentic AI era opened by typia with its compiler skills. Simply by listing functions to call, you can achieve Agentic AI, as advocated by Jensen Huang. If you're a TypeScript developer, you are now an AI developer.

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm } from "@samchon/openapi";
import typia from "typia";

const agent = new Agentica({
  controllers: [
    HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
    }),
    typia.llm.application<ShoppingCounselor, "chatgpt">(),
    typia.llm.application<ShoppingPolicy, "chatgpt">(),
    typia.llm.application<ShoppingSearchRag, "chatgpt">(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```

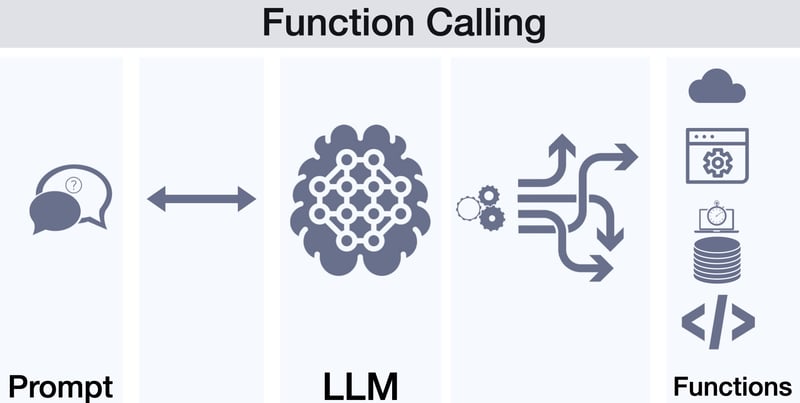

2.3. LLM Function Calling

https://platform.openai.com/docs/guides/function-calling

LLM (Large Language Model) Function Calling means that AI selects the appropriate function to call and fills in the arguments by analyzing conversation context with the user.
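The mechanics can be sketched without any AI library. In the sketch below the model is replaced by a hard-coded stub, and every name (`FunctionSchema`, `fakeModel`, `dispatch`, the two shopping functions) is hypothetical; it only illustrates the division of labor, where the model picks a function and fills in arguments and the application executes it.

```typescript
// All names are hypothetical and for illustration only.
interface FunctionSchema {
  name: string;
  description: string;
  parameters: Record<string, "string" | "number">;
}

// What the model returns when it decides to call a function.
interface FunctionCall {
  name: string;
  arguments: Record<string, unknown>;
}

// The list of functions offered to the model.
const schemas: FunctionSchema[] = [
  {
    name: "searchProducts",
    description: "Search the catalog by keyword.",
    parameters: { keyword: "string" },
  },
  {
    name: "addToCart",
    description: "Put a product into the cart.",
    parameters: { productId: "string", quantity: "number" },
  },
];

// Local implementations that the application actually executes.
const implementations: Record<
  string,
  (args: Record<string, unknown>) => string
> = {
  searchProducts: (args) => `searching for ${String(args["keyword"])}`,
  addToCart: (args) =>
    `added ${String(args["quantity"])} x ${String(args["productId"])}`,
};

// Stand-in for the LLM. A real model would read `_schemas` and the
// conversation, then return a FunctionCall; here the choice is hard-coded.
const fakeModel = (
  userMessage: string,
  _schemas: FunctionSchema[],
): FunctionCall =>
  userMessage.toLowerCase().includes("buy")
    ? { name: "searchProducts", arguments: { keyword: "MacBook Pro" } }
    : { name: "addToCart", arguments: { productId: "p-1", quantity: 1 } };

// Look up the selected function and run it with the composed arguments.
const dispatch = (call: FunctionCall): string => {
  const fn = implementations[call.name];
  if (fn === undefined) throw new Error(`Unknown function: ${call.name}`);
  return fn(call.arguments);
};

console.log(dispatch(fakeModel("I wanna buy MacBook Pro", schemas)));
// searching for MacBook Pro
```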

typia and @agentica concentrate on and specialize in this concept - using function calling to achieve Agentic AI. Just looking at the definition of LLM function calling, it's such an elegant concept that you might wonder why it isn't more widely used. Wouldn't it be possible to achieve Agentic AI simply by listing the functions needed at any given time?

In this document, we'll explore why function calling hasn't been widely adopted and see how typia and @agentica make it viable for general-purpose applications.

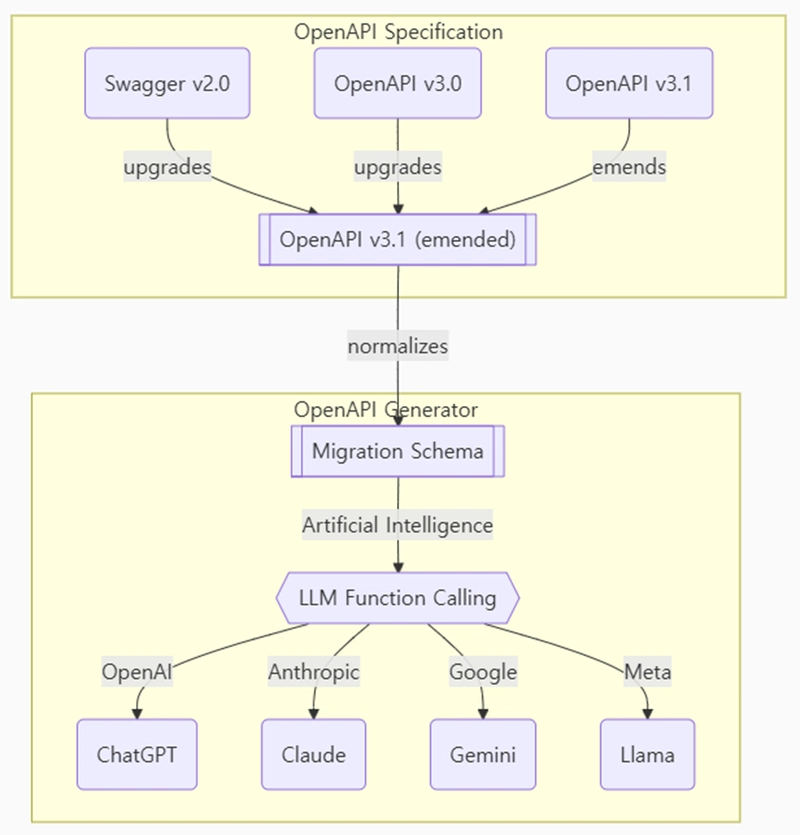

3. Concepts

3.1. Traditional AI Development

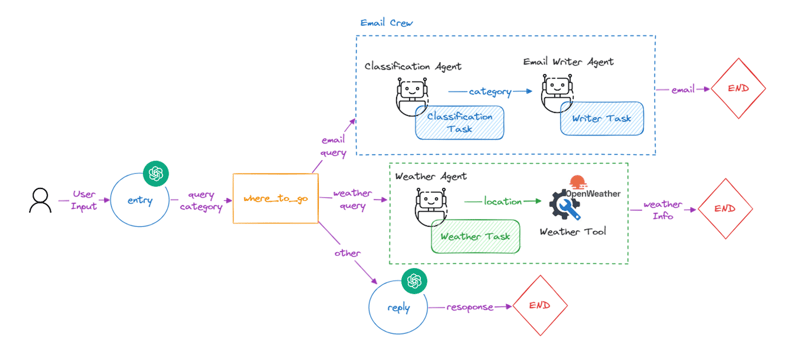

In traditional AI development, AI developers have focused on agent workflows composed of multiple graph nodes. They've concentrated on developing special-purpose AI agents rather than creating general-purpose agents.

However, this agent workflow approach has critical weaknesses in scalability and flexibility. When an agent's functionality needs to expand, AI developers must create increasingly complex agent workflows with more and more graph nodes.

Furthermore, as the number of agent graph nodes increases, the success rate decreases. This occurs because the per-node success rates multiply together, compounding like a Cartesian product. For example, if the success rate of each node is 80% and there are five sequential nodes, the overall success rate of the agent workflow becomes just 32.77% (0.8^5 ≈ 0.3277).
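The arithmetic behind that figure can be checked in a few lines. This is a minimal sketch that assumes each node succeeds independently of the others; `workflowSuccessRate` is a name chosen here for illustration.

```typescript
// Overall success rate of a sequential workflow, assuming each node
// succeeds independently: the per-node rates are multiplied together.
const workflowSuccessRate = (nodeRates: number[]): number =>
  nodeRates.reduce((acc, rate) => acc * rate, 1);

const fiveNodes = workflowSuccessRate([0.8, 0.8, 0.8, 0.8, 0.8]);
console.log((fiveNodes * 100).toFixed(2) + "%"); // 32.77%
```

Adding nodes only makes this worse: ten nodes at the same 80% rate would leave roughly an 11% chance of the whole workflow succeeding.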

To mitigate this Cartesian product disaster, AI developers often create a new supervisor workflow as an add-on to the main workflow's nodes. If functionality needs to expand further, this leads to fractal patterns of workflows. To avoid the Cartesian product disaster, AI developers must face another fractal disaster.

Using such workflow approaches, would it be possible to create a shopping chatbot agent? Is it possible to build an enterprise-level chatbot? This explains why we mostly see special-purpose chatbots or chatbots that resemble toy projects in the world today.

The problem stems from the fact that agent workflows themselves are difficult to create and have extremely poor scalability and flexibility.

3.2. Document Driven Development

Documentation for each function independently.

To escape from the disaster of Cartesian Products and Fractal Agent Workflows, I suggest a new approach: "Document Driven Development." This is similar to the "Domain Driven Development" methodology that separates complex projects into smaller domains, making development easier and more scalable. The only difference is that documentation comments are additionally required in this separation concept.

Write documentation comments for each function independently, describing the purpose of each function to the AI. Trust @agentica and LLM function calling to handle everything else. Simply by writing independent documentation comments for each function, you can make your agent scalable, flexible, and highly productive.

If there's a relationship between functions, don't create an agent workflow - just document it in the description comments. Here's a list of well-documented functions and schemas:

- Functions

- DTO schemas

```typescript
import { tags } from "typia";

// IBbsArticle and its nested ICreate / IUpdate DTO types are assumed to be
// defined elsewhere. `declare` keeps the body-less method signatures valid.
export declare class BbsArticleService {
  /**
   * Get all articles.
   *
   * Lists every article archived in the BBS DB.
   *
   * @returns List of every article
   */
  public index(): IBbsArticle[];

  /**
   * Create a new article.
   *
   * Writes a new article and archives it into the DB.
   *
   * @param props Properties of create function
   * @returns Newly created article
   */
  public create(props: {
    /**
     * Information of the article to create
     */
    input: IBbsArticle.ICreate;
  }): IBbsArticle;

  /**
   * Update an article.
   *
   * Updates an article with new content.
   *
   * @param props Properties of update function
   */
  public update(props: {
    /**
     * Target article's {@link IBbsArticle.id}.
     */
    id: string & tags.Format<"uuid">;

    /**
     * New content to update.
     */
    input: IBbsArticle.IUpdate;
  }): void;

  /**
   * Erase an article.
   *
   * Erases an article from the DB.
   *
   * @param props Properties of erase function
   */
  public erase(props: {
    /**
     * Target article's {@link IBbsArticle.id}.
     */
    id: string & tags.Format<"uuid">;
  }): void;
}
```

3.3. Compiler Driven Development

LLM function calling schemas must be written by the compiler.

@agentica is an Agentic AI framework specialized in LLM Function Calling, accomplishing everything through function calls. Therefore, one of the most important aspects is how to build LLM schemas safely and effectively.

In traditional AI development, AI developers have defined hand-made LLM function calling schemas. This results in duplicated code and is a dangerous approach to entity definition.

If there's a mistake in a hand-made schema definition, humans can intuitively work around it. However, AI never forgives such mistakes. Invalid hand-made schema definitions will break the entire agent system.

So, if creating LLM schemas is difficult and error-prone, @agentica would become difficult and error-prone as well. If building LLM schemas is dangerous, @agentica would also be dangerous to use.

```typescript
import { ILlmApplication } from "@samchon/openapi";
import typia from "typia";

import { BbsArticleService } from "./BbsArticleService";

const app: ILlmApplication<"chatgpt"> = typia.llm.application<
  BbsArticleService,
  "chatgpt"
>();
console.log(app);
```

To ensure safety and convenience for LLM function schema building, typia supports the typia.llm.application<Class, Model>() function. It analyzes the target TypeScript class type and generates a proper LLM function calling schema at the compilation level.

Since the schema is built by the compiler through source code analysis, it cannot drift out of sync with the TypeScript type it was derived from. No more hand-made schema definitions are required, and no more duplicated coding is needed.
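For readers who have never seen a function calling schema, the following is a hand-written approximation of what one entry for the `erase` method might carry. It is written by hand purely for illustration, which is exactly what the compiler is meant to spare you. The `SketchFunction` shape is a simplified assumption and not the actual `ILlmApplication` type, which has more fields.

```typescript
// Hypothetical, simplified shape; the real ILlmApplication type differs.
interface SketchFunction {
  name: string;
  description: string;
  parameters: {
    type: "object";
    properties: Record<
      string,
      { type: string; format?: string; description?: string }
    >;
    required: string[];
  };
}

// Roughly the information that the types and documentation comments of
// `erase` provide: the name, the description, and one uuid-formatted id.
const eraseSchema: SketchFunction = {
  name: "erase",
  description: "Erase an article.\n\nErases an article from the DB.",
  parameters: {
    type: "object",
    properties: {
      id: {
        type: "string",
        format: "uuid",
        description: "Target article's id.",
      },
    },
    required: ["id"],
  },
};

console.log(JSON.stringify(eraseSchema, null, 2));
```

Every field here duplicates something already stated in the class definition, which is why writing it by hand invites mistakes.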

This compiler-driven schema generation will lead you into the new Agentic AI era.

By the way, @agentica can obtain function schemas not only from TypeScript class types but also from Swagger/OpenAPI documents. So what about backend development? How can we accomplish Compiler Driven Development in the backend environment?

There is a clear solution for this, which I'll describe in a future article.

4. Principles

4.1. OpenAPI Specification

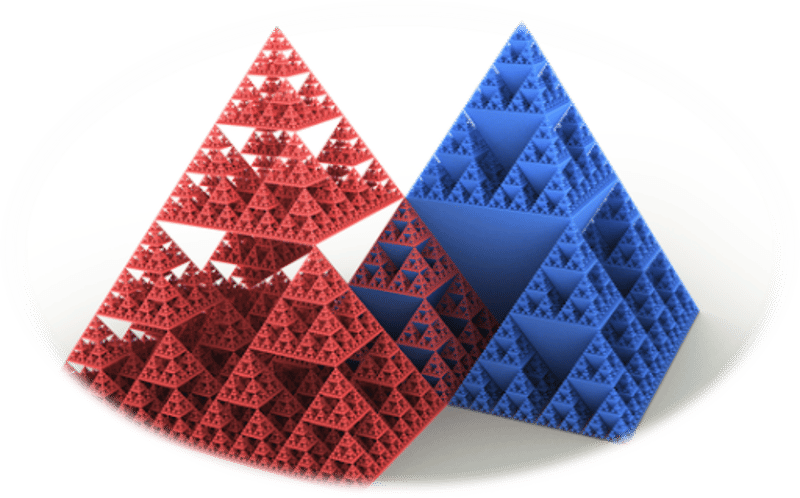

Converting OpenAPI Specifications to LLM Function Calling Schemas.

LLM function calling requires JSON schema-based function schemas. However, LLM (Large Language Model) service vendors do not use the same JSON schema specifications. "OpenAI GPT" and "Anthropic Claude" use different JSON schema specifications for LLM function calling, and Google Gemini differs from both of them.

What's even more challenging is that Swagger/OpenAPI documents also use different JSON schema specifications than LLM function calling schemas, and these specifications vary greatly between versions of Swagger/OpenAPI.

To resolve this problem, @agentica utilizes @samchon/openapi. When a Swagger/OpenAPI document is processed, it is first converted to an amended OpenAPI v3.1 specification. It is then converted, by way of the migration schema, to the LLM function calling schema of the specific service vendor.
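The step from an API operation to a function-like entry can be illustrated with a toy converter. The types and the naming rule below are hypothetical and heavily simplified; they are not the definitions or the algorithm used by @samchon/openapi, only a sketch of the general idea.

```typescript
// Hypothetical, heavily simplified types; not the @samchon/openapi ones.
interface SketchOperation {
  method: "get" | "post" | "put" | "patch" | "delete";
  path: string; // e.g. "/articles/{id}"
  summary: string;
  pathParameters: string[];
  hasBody: boolean;
}

interface SketchFunctionEntry {
  name: string;
  description: string;
  parameterNames: string[];
}

// Turn an HTTP operation into a function-like entry: the method and the
// static path segments form the name, and the path parameters plus an
// optional request body become the parameters.
const toFunctionEntry = (op: SketchOperation): SketchFunctionEntry => {
  const segments = op.path
    .split("/")
    .filter((s) => s.length > 0 && !s.startsWith("{"));
  return {
    name: [op.method, ...segments].join("_"),
    description: op.summary,
    parameterNames: [...op.pathParameters, ...(op.hasBody ? ["body"] : [])],
  };
};

const entry = toFunctionEntry({
  method: "put",
  path: "/articles/{id}",
  summary: "Update an article.",
  pathParameters: ["id"],
  hasBody: true,
});
console.log(entry.name); // put_articles
console.log(entry.parameterNames.join(", ")); // id, body
```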

For reference, the migration schema is another middleware schema that converts OpenAPI operation schemas to function-like schemas. If you want to become a proficient AI developer who can create basic libraries or frameworks, it would be beneficial to learn about each schema definition:

- Swagger/OpenAPI Documents
- LLM Function Calling Schema
- LLM DTO Schemas
  - IChatGptSchema: OpenAI ChatGPT
  - IClaudeSchema: Anthropic Claude
  - IGeminiSchema: Google Gemini
  - ILlamaSchema: Meta Llama
  - Middleware layer schemas
    - ILlmSchemaV3: middle layer based on OpenAPI v3.0 specification
    - ILlmSchemaV3_1: middle layer based on OpenAPI v3.1 specification

4.2. Validation Feedback

| Components | typia | TypeBox | ajv | io-ts | zod | C.V. |
|---|---|---|---|---|---|---|
| Easy to use | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Object (simple) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (hierarchical) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (recursive) | ✔ | ❌ | ✔ | ✔ | ✔ | ✔ |
| Object (union, implicit) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Object (union, explicit) | ✔ | ✔ | ✔ | ✔ | ✔ | ❌ |
| Object (additional tags) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Object (template literal types) | ✔ | ✔ | ✔ | ❌ | ❌ | ❌ |
| Object (dynamic properties) | ✔ | ✔ | ✔ | ❌ | ❌ | ❌ |
| Array (rest tuple) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (hierarchical) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Array (recursive) | ✔ | ✔ | ✔ | ✔ | ✔ | ❌ |
| Array (recursive, union) | ✔ | ✔ | ❌ | ✔ | ✔ | ❌ |
| Array (R+U, implicit) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (repeated) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Array (repeated, union) | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |
| Ultimate Union Type | ✅ | ❌ | ❌ | ❌ | ❌ | ❌ |

C.V. means class-validator.

Is LLM function calling perfect? No, absolutely not.

@agentica is an Agentic AI framework focusing on LLM function calling. If someone familiar with AI development asks, "Is LLM function calling safe? Are there no hallucinations during argument composition?", the answer is: "No, it is not safe. LLM function calling fails quite frequently."

Since @agentica is a framework specialized in function calling, if function calling is dangerous, it would mean that @agentica is also dangerous, which would undermine the key concept of @agentica.

To overcome these function calling challenges, Agentica corrects type errors in the following way: First, it allows the LLM to make invalid arguments. Then it checks and reports every type error in detail to the agent, enabling the agent to correct the function call in the next attempt. This is the validation feedback strategy.

For precise and detailed validation feedback, @agentica uses the typia.validate<T>() function. Since the validation logic is generated by the compiler analyzing TypeScript source code, its error tracking is more detailed and accurate than any other solution.
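The feedback loop itself can be sketched as follows. The validator is a hand-written stand-in for typia.validate<T>(), with an error shape (path, expected, value) modeled on what typia reports. The `ICartInput` type, the `fakeModel` stub, and `callWithFeedback` are hypothetical names; the stub simply returns a wrong type on the first attempt and a corrected value once it has received feedback.

```typescript
// Error and result shapes modeled on typia's validation output.
interface ValidationError {
  path: string;
  expected: string;
  value: unknown;
}
type Validation<T> =
  | { success: true; data: T }
  | { success: false; errors: ValidationError[] };

// Hypothetical argument type of the function being called.
interface ICartInput {
  productId: string;
  quantity: number;
}

// Hand-written stand-in for typia.validate<ICartInput>(): it collects
// every type error instead of stopping at the first one.
const validateCartInput = (input: unknown): Validation<ICartInput> => {
  const obj = (
    typeof input === "object" && input !== null ? input : {}
  ) as Record<string, unknown>;
  const errors: ValidationError[] = [];
  if (typeof obj["productId"] !== "string")
    errors.push({
      path: "$input.productId",
      expected: "string",
      value: obj["productId"],
    });
  if (typeof obj["quantity"] !== "number")
    errors.push({
      path: "$input.quantity",
      expected: "number",
      value: obj["quantity"],
    });
  return errors.length === 0
    ? { success: true, data: obj as unknown as ICartInput }
    : { success: false, errors };
};

// Stand-in for the LLM: wrong type at first, corrected after feedback.
const fakeModel = (feedback: ValidationError[]): unknown =>
  feedback.length === 0
    ? { productId: "p-1", quantity: "2" }
    : { productId: "p-1", quantity: 2 };

// Let the model try, validate, and feed every error back until it passes.
const callWithFeedback = (
  maxAttempts: number,
): { data: ICartInput; attempts: number } => {
  let feedback: ValidationError[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = validateCartInput(fakeModel(feedback));
    if (result.success) return { data: result.data, attempts: attempt };
    feedback = result.errors;
  }
  throw new Error("Arguments are still invalid after all retries");
};

const outcome = callWithFeedback(3);
console.log(outcome.attempts, outcome.data.quantity); // 2 2
```

The more precise the error paths and expected types are, the more likely the next attempt is to succeed, which is why the quality of the validator matters here.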

With this compiler-driven validation feedback strategy, @agentica can recover from LLM function calling failures at the argument composition level. In fact, the previously demonstrated enterprise-level shopping chatbot with 289 API functions runs on a small model (gpt-4o-mini, reportedly around 8B parameters).

A model of roughly that size means that Agentic AI can be realized even at the personal laptop level, with just an 8GB VRAM graphics card. This validation feedback strategy, powered by compiler-driven development, is why typia could successfully challenge the Agentic AI space.

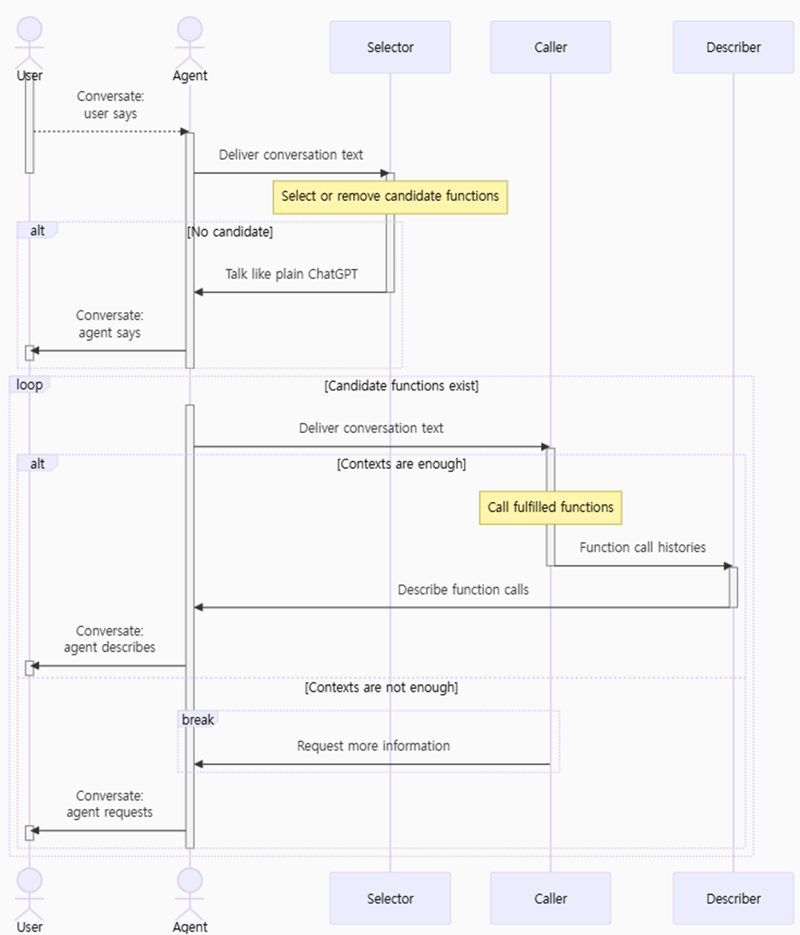

4.3. Internal Workflow

The internal agent workflow in @agentica.

The internal agent orchestration of Agentica is also simple. Its workflow has only three agents: "selector," "caller," and "describer."

The selector agent is designed to select or cancel candidate functions by analyzing the conversation context. If it fails to find any appropriate function to select, it defaults to conversing as a plain chatbot.

The caller agent tries to call the candidate functions by composing their arguments. If the context isn't sufficient, it requests the user to provide the remaining properties needed for the call. There's a loop between the selector and caller agents until no candidate functions remain.

The describer agent simply describes the results of the function calls.
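The three roles can be sketched with plain functions. This is not @agentica's implementation: every name below is hypothetical, each agent is replaced by a trivial stub, and the selector/caller loop is collapsed into a single pass for brevity.

```typescript
// All names are hypothetical; this is not @agentica's implementation.
type Fn = (args: Record<string, unknown>) => unknown;

const functions: Record<string, Fn> = {
  searchProducts: (args) => [
    `${String(args["keyword"])} 14-inch`,
    `${String(args["keyword"])} 16-inch`,
  ],
};

// Selector: pick candidate function names from the conversation (stubbed).
const selectCandidates = (message: string): string[] =>
  message.toLowerCase().includes("buy") ? ["searchProducts"] : [];

// Caller: compose arguments for a candidate and execute it (stubbed).
const callCandidate = (fnName: string, message: string): unknown => {
  const fn = functions[fnName];
  if (fn === undefined) throw new Error(`Unknown function: ${fnName}`);
  const keyword = message.replace(/^.*buy\s+/i, "");
  return fn({ keyword });
};

// Describer: turn the raw result of a call into a reply.
const describeResult = (fnName: string, result: unknown): string =>
  `${fnName} returned ${JSON.stringify(result)}`;

// One turn of the conversation: select, call, then describe.
const converse = (message: string): string[] => {
  const candidates = selectCandidates(message);
  if (candidates.length === 0) return ["(plain chat reply)"];
  return candidates.map((fnName) =>
    describeResult(fnName, callCandidate(fnName, message)),
  );
};

console.log(converse("I wanna buy MacBook Pro"));
```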

As you can see, Agentica's workflow isn't complex and may be simpler than many others. The key to achieving Agentic AI isn't based on designing complex agent workflows. The key is having a system that can safely and efficiently mass-produce LLM function calling schemas.

Function calling is everything. Let's embrace function calling.

5. Conclusion

typia is a transformer library that began as a runtime validator. Today, it has successfully accomplished Agentic AI with its compiler skills.

Agentic AI arises from LLM function calling, and typia is the best solution for it. Type-safe and convenient LLM function calling schema generation at the compilation level is the most important aspect of Agentic AI development.

In fact, the number of downloads of typia has dramatically increased since it began supporting the typia.llm.application<Class, Model>() function. typia, which was used by hundreds of thousands of people every month, is now used by millions of people monthly.

Let's participate in the new Agentic AI era with typia and @agentica. TypeScript developers, you are now AI developers.

```typescript
import { Agentica } from "@agentica/core";
import { HttpLlm } from "@samchon/openapi";
import typia from "typia";

const agent = new Agentica({
  controllers: [
    HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
    }),
    typia.llm.application<ShoppingCounselor, "chatgpt">(),
    typia.llm.application<ShoppingPolicy, "chatgpt">(),
    typia.llm.application<ShoppingSearchRag, "chatgpt">(),
  ],
});
await agent.conversate("I wanna buy MacBook Pro");
```
