Hey, my name is Santhosh Nimmala, and I work as a Principal Consultant at Luxoft, leading Cloud and DevOps in the TRM space. In this series of articles, I will discuss various solutions for FSI use cases. In this article, we will delve into implementing a hybrid cloud retail trading solution for a clearing house with AWS. We have implemented a similar solution at Luxoft.

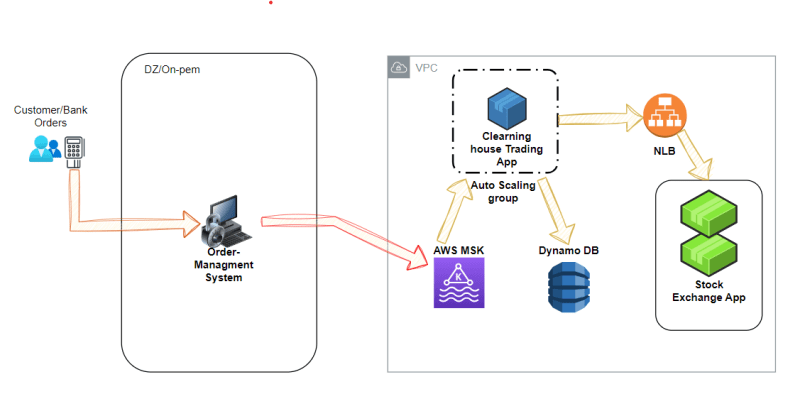

The stock trading infrastructure for a clearing house is quickly outgrowing its on-premises data center. Reserving new infrastructure capacity on-premises would take time and cost more than a public cloud. Our best current option is to keep the order management application on-premises and move the trading applications, databases, and stock exchange client to AWS, while they continue to communicate with the on-premises infrastructure.

This is a hybrid solution, with the order management systems on-premises and the trading systems and databases in the cloud.

Amazon Managed Streaming for Apache Kafka (Amazon MSK) and Amazon DynamoDB can be used to design a solution that transfers messages from an order booking system to a clearing house trading application. Here is a high-level design of this approach, along with several benefits:

Architecture:

Order Management System: Customers such as banks trade stocks and other instruments, so thousands of trades per minute are submitted to the order management system.

AWS MSK: Amazon Managed Streaming for Apache Kafka (Amazon MSK) is a fully managed service that enables you to build and run applications that use Apache Kafka to process streaming data. Amazon MSK provides the control-plane operations, such as those for creating, updating, and deleting clusters. It lets you use Apache Kafka data-plane operations, such as those for producing and consuming data.

Create an Amazon MSK cluster to serve as the message broker between the Order Booking System and the Clearing House Trading Application. For your message streaming needs, MSK offers scalability, high availability, and durability.

Create Kafka topics to represent the different kinds of messages or events.

Set up the Order Booking System's producers to publish messages to the appropriate Kafka topics.

Set up consumers in the Clearing House Trading Application to subscribe to the Kafka topics and process the messages.
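The topic, producer, and consumer steps above can be sketched roughly as follows. This is only an illustration under assumptions: the topic names, the event fields (`type`, `order_id`), and the helper functions are hypothetical, and `producer` is any object exposing a kafka-python style `send(topic, key=..., value=...)` method.

```python
import json
from typing import Any, Callable, Iterable

# Hypothetical topic names, one per event kind (not from the original design).
TOPIC_BY_EVENT = {
    "order_created": "orders.created",
    "order_cancelled": "orders.cancelled",
}


def to_record(event: dict) -> tuple[str, bytes, bytes]:
    """Map an order event to a (topic, key, value) triple for Kafka."""
    topic = TOPIC_BY_EVENT[event["type"]]
    # Keying by order ID sends all events for one order to the same partition,
    # so Kafka preserves their relative order.
    key = event["order_id"].encode("utf-8")
    value = json.dumps(event, sort_keys=True).encode("utf-8")
    return topic, key, value


def publish(producer: Any, event: dict) -> None:
    """Producer side: send one event to the topic chosen by its type."""
    topic, key, value = to_record(event)
    producer.send(topic, key=key, value=value)


def consume(records: Iterable[Any], handle: Callable[[dict], None]) -> int:
    """Consumer side: decode each record's JSON value and hand it to `handle`.

    `records` is any iterable of objects with a `.value` attribute holding
    bytes, which is how kafka-python exposes consumed records.
    Returns the number of records processed.
    """
    count = 0
    for record in records:
        handle(json.loads(record.value))
        count += 1
    return count
```

With the kafka-python client, for example, `producer` could be a `KafkaProducer` pointed at the MSK bootstrap brokers, and `records` a `KafkaConsumer` subscribed to `orders.created`. The broker addresses and security settings depend on your cluster and are left out here.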

Clearing House Trading Application: This is where messages are consumed and processed.

Amazon DynamoDB: Use DynamoDB as the data store for processed data and important metadata. For instance, you can keep track of order status, trade history, or other pertinent data in DynamoDB.

As the Clearing House Trading Application consumes and processes messages, it can write the relevant information to DynamoDB tables.

To automate data processing and communication with DynamoDB, consider using AWS Lambda functions or other components.
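As a rough sketch of the Lambda option, the code below decodes a batch delivered by an MSK event source and writes one item per message to DynamoDB. The attribute names (`order_id`, `status`, `symbol`, `price`) and the default status are assumptions for illustration. `table` is any object with a boto3-style `put_item(Item=...)` method.

```python
import base64
import json
from decimal import Decimal
from typing import Any


def decode_msk_event(event: dict) -> list[dict]:
    """Flatten an MSK-triggered Lambda event into decoded JSON messages."""
    messages = []
    # Lambda groups records under "topic-partition" keys and base64-encodes
    # each record's value.
    for batch in event.get("records", {}).values():
        for record in batch:
            raw = base64.b64decode(record["value"])
            # Parse floats as Decimal: boto3's DynamoDB resource API does not
            # accept Python floats for number attributes.
            messages.append(json.loads(raw, parse_float=Decimal))
    return messages


def to_item(trade: dict) -> dict:
    """Shape a trade message as a DynamoDB item (hypothetical attributes)."""
    return {
        "order_id": trade["order_id"],  # assumed partition key
        "status": trade.get("status", "RECEIVED"),  # assumed default status
        "symbol": trade["symbol"],
        "price": trade["price"],
    }


def process(event: dict, table: Any) -> int:
    """Write every message in the event to `table`; return how many were written."""
    items = [to_item(message) for message in decode_msk_event(event)]
    for item in items:
        table.put_item(Item=item)
    return len(items)
```

In a real function, the Lambda handler would call `process(event, table)` with `table` obtained from `boto3.resource("dynamodb").Table(...)`. Passing the table in as an argument keeps the decoding logic easy to test without AWS access.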

To deliver these streams to the stock exchange application, use a Network Load Balancer (NLB). From there, you can interact with various interfaces.

Trading application with ASG: If your Clearing House Trading Application needs to be scalable, you can deploy it in an Auto Scaling group (ASG) so that it can handle a range of workloads effectively.

Advantages of this solution:

Scalability: Because Amazon MSK and DynamoDB can scale to handle massive volumes of messages and data, your system will be able to withstand heavier loads during the busiest trading hours.

High Availability: Amazon MSK supports multi-Availability Zone (AZ) deployments, which makes your message broker fault-tolerant. DynamoDB also provides high durability and availability.

Real-time Messaging: Kafka is built for streaming, so messages published by the order system reach the trading application's consumers with low latency instead of waiting for batch transfers.

Durability: Kafka retains messages for a configurable period, which allows replay when processing fails or when records are needed for auditing.

Managed Services: Amazon MSK is fully managed and handles control-plane operations such as creating, updating, and deleting clusters. DynamoDB is fully managed as well, so the team spends less time operating infrastructure.

Flexibility: You can organise and route messages effectively with Kafka topics, while DynamoDB's flexible schema allows for versatile data storage and querying.

Integration Possibilities: Amazon MSK and DynamoDB work with the other AWS components in this design, such as AWS Lambda for processing messages and NLB for delivering streams to the stock exchange application.

Security: To safeguard the confidentiality of sensitive financial data, AWS provides security measures such as network isolation, IAM access controls, encryption, and auditing.

Cost effectiveness: With DynamoDB and Amazon MSK you pay for the resources you use, and serverless processing components like AWS Lambda help minimise costs.

Conclusion: Choosing the hybrid cloud model turned out to be the best decision because it balanced the flexibility of the cloud with the dependability of on-site infrastructure. By keeping our Order Management System on-site while moving our Trading Applications, Databases, and Stock Exchange Client to AWS, we have created a foundation for the future. This choice lets us move forward with a flexible and scalable architecture.
