Last week I started taking the fast.ai Practical Machine Learning for Coders course.

I learned how to build a state-of-the-art image classifier in about an hour, which was amazing! Then I started trying to apply what I learned to my own projects. Here’s what happened.

Project #1 - YouTube thumbnail grader

My first project attempt was to build a classifier that could judge the quality of YouTube video thumbnails.

Something like this would be so cool! Imagine being able to plug in a thumbnail for your newly created YouTube video and get back recommendations on how you could improve it.

As a first step I tried to build a classifier that could recognize good thumbnails.

I collected a bunch of YouTube thumbnails and estimated how many views per day each video was getting. I labeled each video as either ‘less than 100 views per day’ or ‘more than 100 views per day’, trained a model on the thumbnails, and checked how accurate it was.
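The labeling step can be sketched roughly like this. The function names and the sample numbers are made up for illustration; only the 100-views-per-day cutoff comes from the experiment itself.

```python
from datetime import date

VIEWS_PER_DAY_CUTOFF = 100  # the threshold used to split the two classes


def views_per_day(total_views, published, today):
    """Estimate average daily views since the video was published."""
    days_online = max((today - published).days, 1)  # avoid dividing by zero
    return total_views / days_online


def label_for(total_views, published, today):
    """Return the class label (also usable as a folder name for the thumbnail)."""
    rate = views_per_day(total_views, published, today)
    return "more_than_100" if rate > VIEWS_PER_DAY_CUTOFF else "less_than_100"


# Hypothetical videos, not real data
today = date(2020, 1, 31)
print(label_for(50_000, date(2020, 1, 1), today))
print(label_for(900, date(2020, 1, 1), today))
```

Sorting thumbnails into one folder per label is a common way to feed an image classifier, though any labeling scheme that pairs each image with its class would do.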

I had a feeling this test wouldn’t work very well… there are a lot of factors besides the thumbnail that influence view count (number of followers, fame of the YouTuber), and I wasn’t wrong. The performance was dismal.

The model thought that this thumbnail would get fewer than 100 views per day.

This makes sense though… It’s probably the case that thumbnails (while important) don’t make a HUGE difference in view counts.

Honestly, I bet I could make a really good model for predicting view counts. Just regress view counts against subscribers and you’re good. That’s why the YouTube pros always say ‘Like and subscribe’!
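For what it’s worth, here is a minimal sketch of what that regression could look like, using plain least squares. I haven’t actually run this on real channels: the subscriber and view numbers below are invented purely to show the mechanics, and `fit_line` is just a helper name I picked.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up numbers purely for illustration: subscribers and views per day
subscribers = [1_000, 5_000, 20_000, 100_000]
views = [40, 180, 900, 4_100]

slope, intercept = fit_line(subscribers, views)
predicted = slope * 50_000 + intercept
print(f"predicted views per day at 50k subscribers: {predicted:.0f}")
```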

So the project failed?

Absolutely not! The whole point of the work (at this stage) is to figure out what machine learning is capable of + go through the steps required to train a model. I definitely did all that.

This project taught me the mechanics of deep learning + I started to get a more intuitive feel for what kinds of problems a model would succeed or struggle with… in this case the model struggled because it didn’t have the information it would need to make a good judgement.

Project #2 - Chinese language detector

My second attempt involved building a model that could listen to people speaking and classify the sound as either ‘English’ or ‘Chinese’.

To do this I took audio files (which store audio as a time series of amplitudes) and converted that amplitude-over-time data into spectrograms (pictures of the frequencies present in the sound over time).

This —>

Got converted to this —>

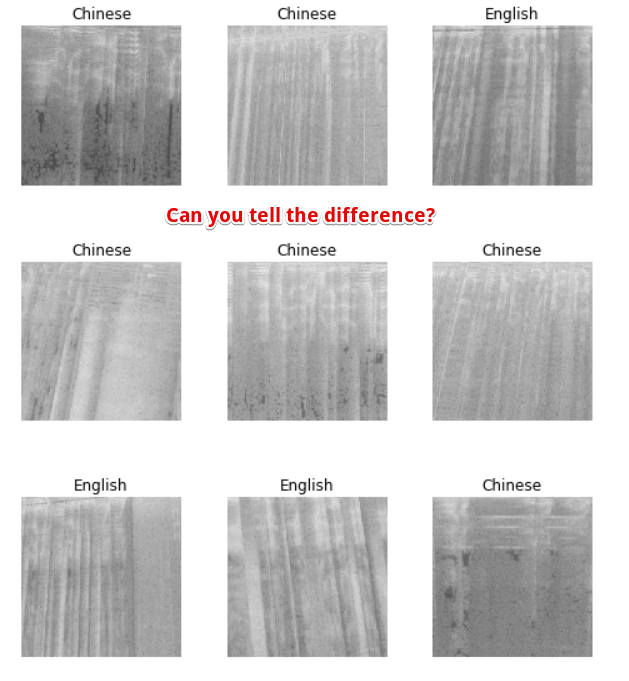
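Here is a rough sketch of that conversion using NumPy’s FFT. This is not necessarily how the spectrogram above was produced: the frame size, hop length and window are assumptions, and a synthetic 440 Hz tone stands in for a real recording.

```python
import numpy as np


def spectrogram(signal, frame_size=256, hop=128):
    """Magnitude spectrogram: rows are frequency bins, columns are time frames."""
    window = np.hanning(frame_size)  # taper each frame to reduce spectral leakage
    frames = [
        signal[start:start + frame_size] * window
        for start in range(0, len(signal) - frame_size + 1, hop)
    ]
    # rfft keeps only the non-negative frequencies of a real-valued signal
    return np.abs(np.fft.rfft(np.array(frames), axis=1)).T


# Stand-in for a real recording: one second of a 440 Hz tone sampled at 8 kHz
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
audio = np.sin(2 * np.pi * 440 * t)

spec = spectrogram(audio)
freqs = np.fft.rfftfreq(256, d=1 / sample_rate)
loudest_bin = spec.mean(axis=1).argmax()
print(spec.shape, freqs[loudest_bin])
```

Each column of `spec` is the frequency content of one short slice of audio, so plotting the array as an image gives the kind of picture a CNN can be trained on. For the pure tone, the loudest row should sit close to 440 Hz.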

The hypothesis was that the machine learning model would be able to learn the differences between the English and Chinese audio files and would be able to pick out which one was which…

The model’s performance on this project was interesting. It did fairly well at classifying files that were part of the initial training set.

But when it actually came to predicting samples of me speaking Mandarin it was pretty horrible.

Basically the training and testing of this model were… frustrating.

There are so many variables that could cause problems for a model like this.

- The original audio file encoding

- Whether or not the audio files are clean

- The processing of the audio files into spectrograms

- What kinds of features the model is picking up on (do I have specific vocal characteristics that will mean the model always picks English for me???)

The list goes on.

After doing a bit of research I learned that CNNs (convolutional neural networks) may not be the best kind of network to apply to a problem like this, since the data in the pictures is more repetitive than fixed.

I found some researchers who succeeded in solving this problem using RNNs (recurrent neural networks, where the network bases its prediction on what it saw in the previous pieces of data) instead of CNNs. These networks are good for these kinds of tasks because the network can say… ‘given that I just saw this shape, the next shape should be… blah if this were English’.
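To make the “remembers what it just saw” idea concrete, here is a bare-bones sketch of a recurrent network reading a spectrogram one column at a time. It is untrained, the weights are random, and the sizes are arbitrary assumptions, so the output is meaningless as a prediction. It is also not the researchers’ architecture, just the basic recurrence.

```python
import numpy as np

rng = np.random.default_rng(0)

n_freq_bins = 129   # height of one spectrogram column (assumed)
hidden_size = 16    # size of the network's "memory" (arbitrary)

# Untrained, randomly initialised weights
W_in = rng.normal(scale=0.1, size=(hidden_size, n_freq_bins))
W_hidden = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
w_out = rng.normal(scale=0.1, size=hidden_size)


def predict_proba(spec):
    """Read a spectrogram column by column and return a score in (0, 1)."""
    hidden = np.zeros(hidden_size)
    for column in spec.T:  # one time step per spectrogram column
        # The new state depends on the current column AND the previous state
        hidden = np.tanh(W_in @ column + W_hidden @ hidden)
    logit = w_out @ hidden
    return 1 / (1 + np.exp(-logit))  # sigmoid squashes the score into (0, 1)


fake_spec = rng.random((n_freq_bins, 61))  # random stand-in for a real spectrogram
print(predict_proba(fake_spec))
```

Because the hidden state is carried from one column to the next, feeding the same columns in a different order gives a different result, which is exactly the sensitivity to sequence that a plain image classifier lacks.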

So I think solving this problem is a bit beyond my present skill level, but I’m not giving up! I’ll put this one on the back burner and pick it up again when I know a little more.

So what are some things I learned from this project?

- A lot about signal processing and the Fast Fourier Transform (the most amazing and cool math tool out there)

- That CNNs struggle when the pattern they’re being asked to classify isn’t clear and obvious

- That I would probably learn faster if I worked with datasets and problems where I can see other people’s answers (maybe contests?)

Taking a step back, why learn about this stuff?

Honestly, after a week of working with this tech I am blown away!

I can’t yet see exactly how this stuff will change the world but I have no doubt that it will change… everything.

My bet is that with a little more experience I’ll be able to build some useful tools. We’ll see. It’s definitely interesting so far and I’ll keep you posted as I learn more.
