Companies of all sizes are looking into integrating data science and machine learning into their products, strategies, and reporting.

However, as companies start managing data science teams, they quickly realize that these teams face many challenges and inefficiencies.

Although it has been nearly a decade since the over-referenced "data scientist is the sexiest job" article, there is still plenty of friction that slows data scientists down.

Data scientists still struggle to collaborate and communicate with their peers across departments. The explosion of data sources inside companies has also made data governance harder to manage. Finally, the lack of a coherent, agreed-upon process in some companies makes it difficult for teams to get on the same page.

All of these pain points can be fixed. There are tools and best practices that can improve your data science team's efficiency. In this article, we will discuss these problems and how your team can approach them to optimize its output.

Collaboration And Communication

Collaboration and communication remain a challenge for any technical team. This is why most Agile methodologies include some form of stand-up meeting and other practices that encourage transparent communication. However, a stand-up meeting alone is rarely enough to keep a team communicating and collaborating well.

Communication

Communication is crucial when working on technical projects. Anyone who has managed back-end and front-end teams can tell you how difficult it is to ensure that all the different components get put together correctly.

The same problem occurs with data scientists. Whether they are communicating with data engineers or with stakeholders, there are many opportunities for miscommunication and misunderstanding. Many of these problems stem from a lack of transparency, as well as from general differences in perspectives and goals.

Collaboration

Collaboration is another important aspect of data science because it helps spread best practices across the company. This has been a challenge for many data scientists over the past decade, as their work was often saved in a shared team folder where it was hard for others to find, reuse, or build on.

Another struggle for some data scientists is managing version control in more collaborative environments. As more developers and data scientists work on the same code and Jupyter notebooks, it becomes difficult to track who made which changes and why.

This eventually leads to chaos when someone makes an edit they want to revert but can't remember what they changed.

Solution

In response, many companies have emerged to help data science teams better manage collaboration and communication. Two in particular stand out.

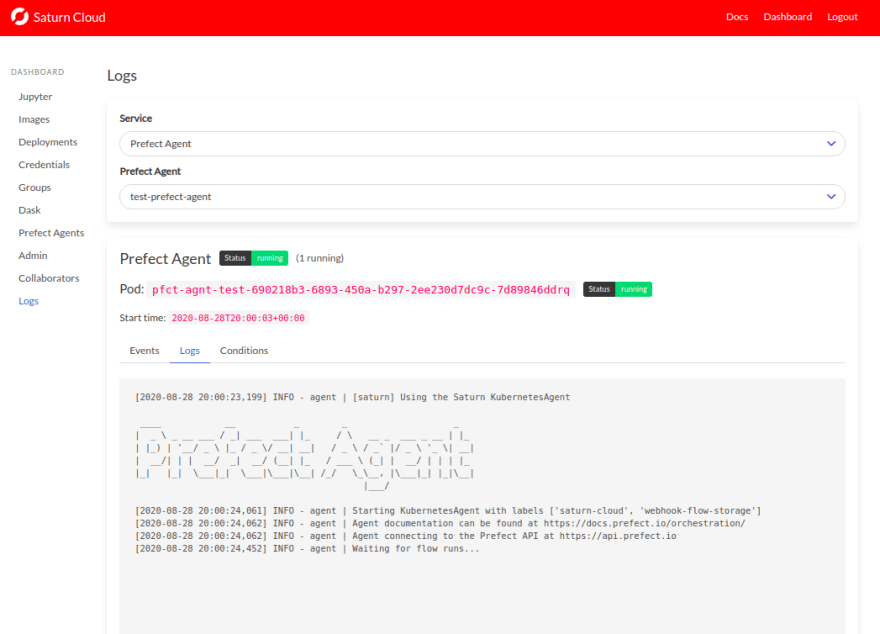

These are Domino Data Lab and SaturnCloud, which offer similar products in many ways. Of the two, Domino Data Lab is the more enterprise-focused and feature-rich product.

Beyond that difference, both started with a focus on helping data scientists better track, share, and manage their Jupyter notebooks.

Both offer automatic version control that makes it easy for data scientists to roll back any changes they make in their notebooks. This feature is especially helpful when collaborating on models and research: team members can update their notebooks and models without worrying about committing a change that breaks a workflow.
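The article doesn't describe how either product implements this, but the core idea behind notebook version control can be sketched in a few lines. The `NotebookHistory` class below is a hypothetical illustration, not the Domino Data Lab or SaturnCloud API. It stores a snapshot of a notebook's cell sources, together with the author, on every save. It can show a diff between two versions and roll back to an earlier one.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class NotebookHistory:
    """Hypothetical in-memory version store for a notebook's cell sources."""

    # Each entry is (author, list of cell sources); the index is the version number.
    versions: list[tuple[str, list[str]]] = field(default_factory=list)

    def save(self, author: str, cells: list[str]) -> int:
        # Copy the cells so later edits to the caller's list can't rewrite history.
        self.versions.append((author, list(cells)))
        return len(self.versions) - 1

    def diff(self, old: int, new: int) -> str:
        # Unified diff between two versions; each cell source is one diff line.
        old_author, old_cells = self.versions[old]
        new_author, new_cells = self.versions[new]
        return "\n".join(
            difflib.unified_diff(
                old_cells,
                new_cells,
                fromfile=f"v{old} ({old_author})",
                tofile=f"v{new} ({new_author})",
                lineterm="",
            )
        )

    def rollback(self, version: int, author: str) -> list[str]:
        # A rollback is recorded as a new version rather than deleting history,
        # so the rollback itself stays auditable and can be undone.
        cells = list(self.versions[version][1])
        self.save(author, cells)
        return cells


history = NotebookHistory()
history.save("alice", ["import pandas as pd", "df = pd.read_csv('sales.csv')"])
history.save("bob", ["import pandas as pd", "df = pd.read_csv('sales_v2.csv')"])
print(history.diff(0, 1))  # shows who changed the input file
restored = history.rollback(0, author="alice")
```

Recording the author with every snapshot is what answers the "who changed what, and why" question raised earlier. Treating a rollback as a new save means an accidental revert can be undone too.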

All of these features can drastically improve your data scientists' efficiency by making collaboration easy.

Improve Data Governance And Data Lineage

One topic that doesn't get enough attention, but is arguably one of the most important subjects in data, is data governance. The problem is that data governance is not sexy: it's all about regulating what data is available, where it comes from, and who is in charge of it.

Data governance has a lot of different aspects to it.

According to the Business Application Research Center (BARC), an organization's key data governance goals should be to increase the value of data, establish internal rules for data use, and implement compliance requirements. Another way you can look at this is that data governance is focused on managing the data's availability, usability, integrity, and security.

Data governance is important because it helps data scientists know where their data comes from and whether it is reliable. A struggle for businesses today is that every system integrates with every other system, so each one holds a little data from everywhere.

Solution

As a result, it can be hard to tell which data is the most up to date and which comes from a source of truth. This is where data governance and lineage tools come in handy, such as TreeSchema and Talend (in particular, Talend's Data Lineage tools).

Tools like TreeSchema automatically scrape your team's various data sources to track metadata such as who owns which table, what the schema of your data is, and where the data came from.
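TreeSchema's own connectors aren't covered here, so the following is only an illustration of what "scraping" a source for metadata means. It uses Python's built-in `sqlite3` module as a stand-in data source. `scrape_catalog` and the `owners` mapping are hypothetical. SQLite has no notion of table ownership, so owners are supplied by hand, and lineage is out of scope for this sketch.

```python
import sqlite3


def scrape_catalog(conn: sqlite3.Connection, owners: dict[str, str]) -> dict[str, dict]:
    """Build a minimal metadata catalog: column types plus a (hypothetical) owner per table."""
    catalog = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # PRAGMA table_info yields rows of (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        catalog[table] = {
            "owner": owners.get(table, "UNASSIGNED"),
            "columns": {name: col_type for _, name, col_type, *_ in columns},
        }
    return catalog


# Example with an in-memory database and made-up tables.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, region TEXT)")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
catalog = scrape_catalog(conn, owners={"orders": "sales-data-eng"})
print(catalog)
```

Tables without a known owner are flagged as `UNASSIGNED` rather than skipped, so gaps in ownership show up in the catalog instead of disappearing from it.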

Not only does TreeSchema help track where the data comes from, but it also helps track changes in the data and bad data practices.

For example, let's say you add a new data source or a new field to a table. In a typical company, the new data object would be created, but it might not have any documentation telling other users what that data is. If it does have documentation, that documentation might live in some shared folder or SharePoint site. With TreeSchema, instead, you centralize your data documentation and get notified when there are gaps in it. This can be seen in the picture below.
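The notifications themselves are a TreeSchema feature. The sketch below only illustrates the kind of check behind them. It assumes a hypothetical catalog that maps each table's columns to a description, or to `None` when nobody has written one. A scheduled job could run something like `find_documentation_gaps` after each metadata scrape and alert the table owners.

```python
from typing import Optional

# Hypothetical catalog shape: table -> column -> description (None or blank = undocumented).
Catalog = dict[str, dict[str, Optional[str]]]


def find_documentation_gaps(catalog: Catalog) -> list[str]:
    """Return 'table.column' for every field that lacks a usable description."""
    gaps = []
    for table, columns in sorted(catalog.items()):
        for column, description in columns.items():
            # Treat missing, empty, and whitespace-only descriptions as gaps.
            if not description or not description.strip():
                gaps.append(f"{table}.{column}")
    return gaps


# Illustrative data only.
catalog = {
    "orders": {
        "id": "Surrogate key for an order.",
        "amount": "Order total after discounts.",
        "churn_flag_v2": None,  # newly added field that nobody has documented yet
    },
    "customers": {"id": "Surrogate key for a customer.", "email": ""},
}

for gap in find_documentation_gaps(catalog):
    print(f"Missing description: {gap}")
```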

Having a centralized solution to manage your team's data governance can ensure that your data scientists don't have to spend too much time chasing down data engineers to figure out what every column means.

Define Your Data Science Process

With data science still being a relatively new field, there aren't as many specific processes and methodologies that new data scientists can follow. In software, there are concepts like Scrum and Waterfall that make it clear how a software developer goes from idea to product.

In data science, this is a little murkier. There are general ideas like exploratory data analysis (EDA), but nothing fully concrete. I experienced this lack of process in my first job, when I was handed a project with no clear guidelines on what the goal was or how we would know when my work was done.

Solution

To avoid this confusion, data science teams should establish a general process of how they will go from question to answer. I do believe that your team's data science process shouldn't be as stringent as most software development processes because data science isn't software development. There is less of a defined end when it comes to performing analysis or developing models.

Instead, most data science processes should aim to keep your team focused on the questions it is trying to answer at the moment, rather than getting dragged into too many rabbit holes.

Many data scientists seem to agree on a general outline of the process: data collection, data cleaning, EDA, consolidation and conclusion, model development, and model deployment.

Each of these steps helps data scientists better define their goals and, in turn, know when each step is complete.
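One lightweight way to make "knowing when each step is complete" concrete is to write down exit criteria for every stage. The sketch below is a hypothetical illustration, not an established framework. The stage names follow the outline above, while `Stage`, `current_stage`, and the individual criteria are placeholders a team would replace with its own.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    """One step of a team's data science process with an explicit definition of done."""

    name: str
    exit_criteria: list[str]
    met: set[str] = field(default_factory=set)

    def mark_met(self, criterion: str) -> None:
        # Reject criteria nobody agreed on, so "done" can't quietly drift.
        if criterion not in self.exit_criteria:
            raise ValueError(f"{criterion!r} is not an exit criterion of {self.name!r}")
        self.met.add(criterion)

    @property
    def complete(self) -> bool:
        return set(self.exit_criteria) <= self.met


# Stage names follow the article's outline; the criteria are illustrative placeholders.
PROCESS = [
    Stage("data collection", ["sources identified", "raw extract saved"]),
    Stage("data cleaning", ["missing values handled", "types validated"]),
    Stage("EDA", ["agreed questions answered"]),
    Stage("consolidation and conclusion", ["findings reviewed with stakeholders"]),
    Stage("model development", ["baseline beaten on holdout set"]),
    Stage("model deployment", ["model serving in production", "monitoring in place"]),
]


def current_stage(process: list[Stage]) -> str:
    """Return the first stage whose exit criteria are not all met, or 'done'."""
    for stage in process:
        if not stage.complete:
            return stage.name
    return "done"
```

Keeping the criteria as plain strings is deliberate. The point is a shared, written definition of done that the whole team can see, not a workflow engine.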

For example, EDA in theory can go on forever. An analyst could keep drilling into every specific fact and figure. This isn't conducive to driving a project forward.

That is why it is beneficial to set boundaries for EDA, whether a time limit or a set of questions you aim to answer. Either one keeps data scientists and analysts from getting too lost in the data.
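Both kinds of boundary can be combined. The sketch below assumes the team writes its EDA questions down up front. `run_bounded_eda` is a hypothetical helper, not a library function. It answers the questions in order and stops once a time budget is spent. The `clock` parameter exists only so the time box can be tested without waiting.

```python
import statistics
import time
from typing import Callable


def run_bounded_eda(
    questions: dict[str, Callable[[], object]],
    time_budget_s: float,
    clock: Callable[[], float] = time.monotonic,
) -> dict[str, object]:
    """Answer agreed EDA questions in order, stopping when the time box runs out.

    Questions not reached within the budget are left out of the result, which
    makes it explicit what was deliberately not explored.
    """
    deadline = clock() + time_budget_s
    answers = {}
    for question, analysis in questions.items():
        if clock() >= deadline:
            break  # time box exhausted: stop drilling into the data
        answers[question] = analysis()
    return answers


sales = [120.0, 95.5, 310.0, 87.25, 150.0]  # hypothetical sample data
answers = run_bounded_eda(
    {
        "What is the typical order value?": lambda: statistics.median(sales),
        "How many orders exceed 200?": lambda: sum(s > 200 for s in sales),
    },
    time_budget_s=2 * 60 * 60,  # e.g. a two-hour time box
)
for question, answer in answers.items():
    print(question, "->", answer)
```

The deadline is only checked between questions, so a single long-running analysis can still overrun the budget. For EDA, where each question is usually a quick query or plot, that tradeoff keeps the helper simple.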

Chanin Nantasenamat has a great visual example of this process in The Data Science Process: A Visual Guide to Standard Procedures in Data Science. It skips over the consolidation and conclusion step, but the visuals provide a great perspective.

Conclusion

Data science has become commonplace. That being said, data science teams, tools, and strategies are far from easy to implement.

Regardless of your company's size, your data science teams can improve their effectiveness by improving communication and collaboration, knowing and trusting their data, and having a general set of guidelines for their analysis process. Improving these three areas can reduce redundancy, unnecessary work, and untrustworthy results. Many of these improvements come from a combination of finding the right tools and/or processes and onboarding your data scientists so they can adapt to those tools and processes.

Data science doesn't have to always be hard, as long as you help provide a more defined path forward.

