Hello everyone. This is my first post, and I would like to share my experience with the Cloud Resume Challenge. Writing a post is one of the conditions of the challenge, but I have also learned a lot over the last few weeks and I am really excited about it.

After earning a couple of AWS certs during the COVID-19 pandemic, I was thinking about moving on to the professional-level certs. Meanwhile, the Cloud Resume Challenge popped up in my LinkedIn feed. The challenge was almost over, but it looked exhilarating and I still wanted to give it a try.

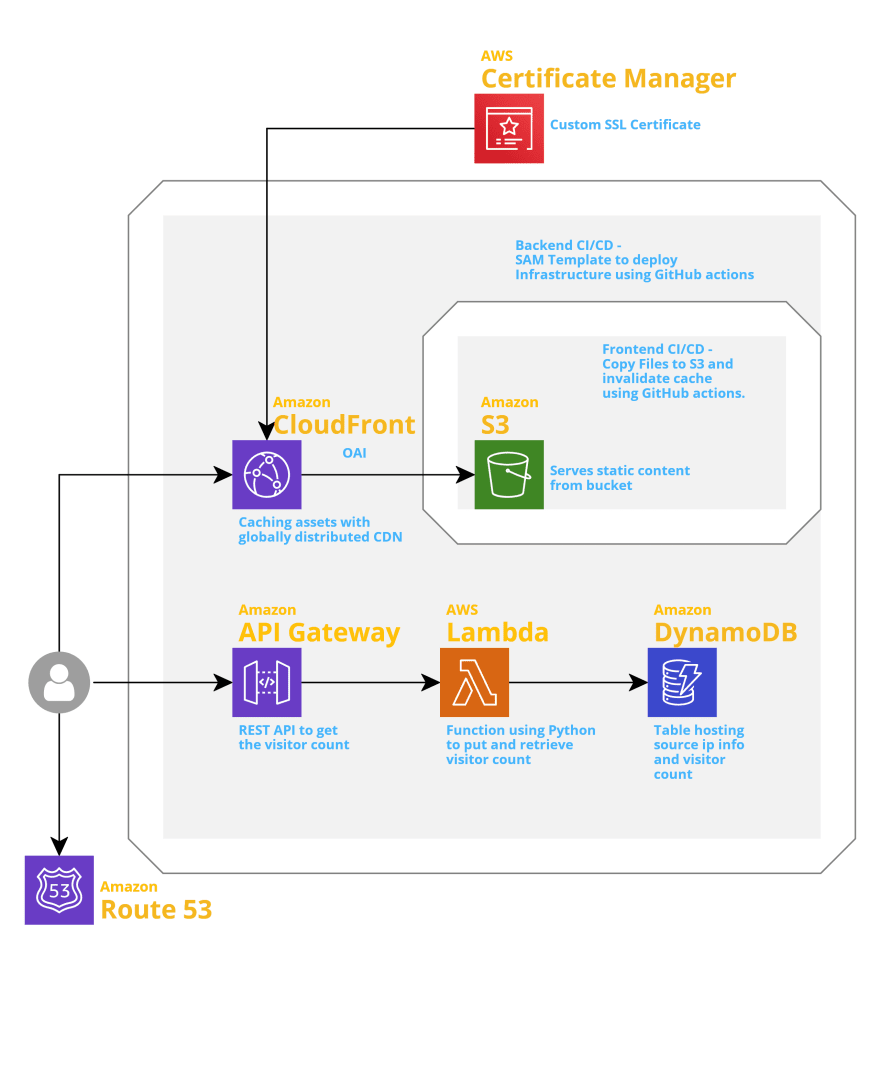

I have always liked to visualize a design, so I created a simple architecture diagram of how the end product would look.

Below are the challenge, its conditions, the steps I took, and what I learned along the way.

CHALLENGE:

Build a website to host your resume, along with a visitor count. Visit the official Cloud Resume Challenge homepage for more information.

1. Certification

I completed my SAA in 2018 and recently completed the SysOps and Security Specialty certifications, so I was good to start the project.

2. Front-End

I saw many web templates around, but I wanted to make a fresh start. I took some time and wrote the site in HTML, styled with CSS. I also used JavaScript to call the API and for a little bit of responsive design. I still have to work on the responsive design, but it seemed fine to get started. w3schools is the best; I designed the entire site by referring to it.

3. Website Hosting

I used S3 to host the website and CloudFront for secure access (HTTPS) and static asset caching. To use my own domain, I set a custom domain name on the CloudFront distribution and used Amazon Route 53 for DNS. I generated the SSL certificate for my domain using AWS Certificate Manager. There are various providers and ways to host a site like this, but I decided to stick with AWS services.

4. Back-End

For the backend, I had to use a few AWS services to retrieve the visitor count.

API Gateway: I created an API to handle the requests sent from the webpage to the backend service (AWS Lambda in this case), and I updated the API URL in the JavaScript code. I spent a lot of time here and wanted to explore more, but that is for another day of learning. I used this post, which has more information about API Gateway. It's a long one, but really worth it. I also ran into a CORS (Cross-Origin Resource Sharing) error because I had not configured CORS on the API. Once I enabled it, everything worked as expected. Here is a wonderful article on CORS that I used while setting up API Gateway.
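To make the CORS point concrete, here is a minimal sketch of the kind of response a Lambda function returns behind API Gateway. It assumes a Lambda proxy integration, where the function itself has to include the `Access-Control-Allow-Origin` header. The `build_response` helper and the `ALLOWED_ORIGIN` value are hypothetical names for illustration, not taken from my actual code.

```python
import json

# Hypothetical origin used for illustration; replace with the site's real domain.
ALLOWED_ORIGIN = "https://resume.example.com"


def build_response(status_code, payload, origin=ALLOWED_ORIGIN):
    """Build a Lambda proxy integration response that carries CORS headers."""
    return {
        "statusCode": status_code,
        "headers": {
            "Content-Type": "application/json",
            # Without this header the browser blocks the cross-origin call.
            "Access-Control-Allow-Origin": origin,
            "Access-Control-Allow-Methods": "GET,OPTIONS",
        },
        # Proxy integrations expect the body as a string, not a dict.
        "body": json.dumps(payload),
    }


if __name__ == "__main__":
    print(build_response(200, {"count": 5}))
```

Restricting the origin to the site's own domain, rather than using `*`, keeps other pages from calling the API from a browser.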

AWS Lambda: Lambda is a FaaS (Function as a Service) offering from AWS. It allows you to run a function without provisioning any servers. I decided to use Python to query the database (DynamoDB) and retrieve the visitor count. Instead of just retrieving the total count, I wanted to store the unique visitor count as well, so I decided to get the visitor's IP address and check it against the database before storing the details. I don't have much experience in Python and I am no developer, but I do use PowerShell for all of my automation. Check this site to get started on Python. I also had to write Python tests to make sure my code works, and I used moto to mock the AWS calls. That way the tests involve no interaction with AWS, yet the function can still be tested. This article helped me to test my Python code using moto.
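The counting logic described above can be sketched roughly as follows. This is not my actual function: the `InMemoryVisitors` class is a hypothetical stand-in for the DynamoDB table so the example runs without AWS, and the event shape assumes an API Gateway REST API with Lambda proxy integration, which places the caller's IP under `requestContext.identity.sourceIp`.

```python
import json


class InMemoryVisitors:
    """Hypothetical stand-in for the DynamoDB table, keyed by visitor IP."""

    def __init__(self):
        self.hits_by_ip = {}

    def record(self, ip):
        # A new IP creates a record; a known IP only bumps its hit count.
        self.hits_by_ip[ip] = self.hits_by_ip.get(ip, 0) + 1

    def total(self):
        return sum(self.hits_by_ip.values())

    def unique(self):
        return len(self.hits_by_ip)


def handle_visit(event, store):
    """Record one visit and return both the total and the unique count."""
    ip = event["requestContext"]["identity"]["sourceIp"]
    store.record(ip)
    body = {"total": store.total(), "unique": store.unique()}
    return {"statusCode": 200, "body": json.dumps(body)}


def make_event(ip):
    """Build the minimal slice of a proxy event that the handler reads."""
    return {"requestContext": {"identity": {"sourceIp": ip}}}


if __name__ == "__main__":
    store = InMemoryVisitors()
    for ip in ["203.0.113.1", "203.0.113.1", "203.0.113.2"]:
        print(handle_visit(make_event(ip), store)["body"])
```

Passing the store into the handler is what makes it easy to test: a unit test can hand in a fake, while the deployed function would hand in a real table client.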

DynamoDB: Amazon DynamoDB is another serverless offering from AWS. It's a NoSQL database, and I used it to store and update the visitor count along with metadata such as the first and last visited dates.
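As a rough illustration of the per-visitor metadata, the sketch below shows how one record could be created or updated. The attribute names (`ip`, `visits`, `first_visited`, `last_visited`) and the `touch_visitor` helper are assumptions made for this example; the real table layout may differ.

```python
from datetime import datetime, timezone


def touch_visitor(item, ip, now=None):
    """Return the record to store for this visit.

    `item` is the existing record for the IP, or None for a first visit.
    """
    now = now or datetime.now(timezone.utc)
    stamp = now.isoformat()
    if item is None:
        # First visit: both dates start out identical.
        return {"ip": ip, "visits": 1, "first_visited": stamp, "last_visited": stamp}
    updated = dict(item)  # copy so the caller's record is not mutated
    updated["visits"] = item["visits"] + 1
    updated["last_visited"] = stamp  # first_visited is left untouched
    return updated


if __name__ == "__main__":
    first = touch_visitor(None, "203.0.113.1")
    print(touch_visitor(first, "203.0.113.1"))
```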

5. IaC (Infrastructure as Code)

One of the conditions was to configure the backend services using an AWS Serverless Application Model (SAM) template. I have completed a few AWS certs but hadn't looked much into SAM. I have used CloudFormation and was thinking of using it here too, but out of curiosity I decided to look into SAM. It saved a lot of time and cut down the configuration needed in the template. In a couple of days, my template was ready to provision S3, API Gateway, Lambda, and DynamoDB. Check out Chris's blog for more details.

As SAM supports CloudFormation resources, I decided to add the CloudFront distribution to the SAM template as well, so the entire infrastructure and configuration can be provisioned with a single template.

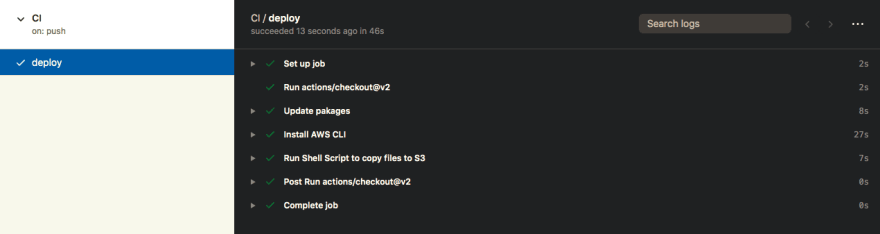

6. Front-End CI/CD

I used GitHub Actions and created a workflow that checks out the code, installs the AWS CLI, copies the files to S3, and invalidates the CloudFront cache whenever I push changes to the master branch.

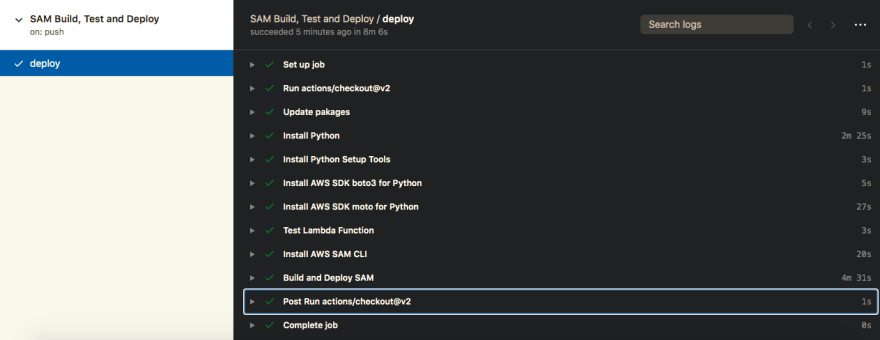
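The two deployment steps in that workflow boil down to two AWS CLI calls. The sketch below builds them in Python purely for illustration: the real workflow is a GitHub Actions file, and the directory, bucket name, and distribution ID here are hypothetical placeholders. It assumes `aws s3 sync` is used for the copy step, which is one of several ways to upload the files.

```python
import subprocess


def build_deploy_commands(site_dir, bucket, distribution_id):
    """Return the AWS CLI commands for uploading the site and clearing the cache."""
    return [
        # Upload changed files and delete objects that no longer exist locally.
        ["aws", "s3", "sync", site_dir, f"s3://{bucket}", "--delete"],
        # Invalidate every cached path so CloudFront serves the new files.
        ["aws", "cloudfront", "create-invalidation",
         "--distribution-id", distribution_id, "--paths", "/*"],
    ]


def deploy(site_dir, bucket, distribution_id, run=subprocess.run):
    """Run each command in order, stopping at the first failure."""
    for command in build_deploy_commands(site_dir, bucket, distribution_id):
        run(command, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them; all values are placeholders.
    for cmd in build_deploy_commands("./site", "my-resume-bucket", "EXAMPLEID"):
        print(" ".join(cmd))
```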

7. Back-End CI/CD

I used another repository and set up GitHub Actions for the back-end infrastructure. The workflow installs the required tools and runs the Python tests, and once the tests pass, SAM provisions or updates the infrastructure in AWS.

And finally, after working on this for a couple of weeks, I was happy to see the serverless website that I had built.
