Data, as both insight and evidence, informs business decisions and underpins discoveries. The web is the largest repository of data in existence. If you know how to look, you can find most human perspectives and rational findings there.

The modern-day problem is not a lack of information but filter failure, amplified by information overload. Information on the web is messy, cluttered, and fragmented, which makes it hard to compare and judge. Web scraping gathers the data in one place, cleans it up, structures it, and organizes it so it can be presented to users or analyzed.

How Does Web Scraping Work?

Web Scraping (Web Harvesting, Web Data Extraction) is the automated extraction of information from web-based sources.

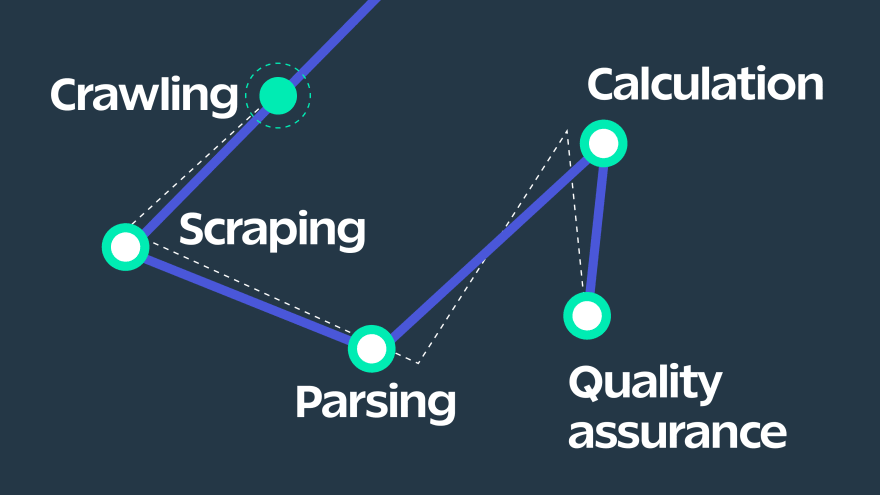

Web scrapers download the HTML, extract the relevant information, and organize it according to predefined logic. The process involves:

Crawling: private crawlers index websites by digesting their markup and other patterns and identifying types of information. They create a “map” for scrapers, much as Google's crawlers map what information is where;

Scraping: downloading pages’ HTML or files;

Parsing: cleaning and structuring the information and converting its format;

Post-processing: calculations and other operations, then loading the results into a database.

Quality assurance is conducted at every stage of the process.
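As a rough illustration of the parsing stage, the sketch below reads a small hard-coded HTML snippet with Python's standard `html.parser` module and turns it into structured records. The markup, the class names `product`, `name`, and `price`, and the helper `parse_products` are assumptions made up for this example. A real page would need its own extraction rules, and the download step is left out.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">$24.90</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">$31.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []      # one dict per product found so far
        self._field = None  # name of the field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Text outside a tracked <span> is ignored.
        if self._field and self.rows:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_products(html):
    """Extract products and clean the price string into a number."""
    parser = ProductParser()
    parser.feed(html)
    return [
        {"name": row["name"], "price": float(row["price"].lstrip("$"))}
        for row in parser.rows
    ]


products = parse_products(SAMPLE_HTML)
print(products)
```

In a real project, the resulting list of dictionaries would then be validated and written to a database.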

Is Web Scraping Legal?

The legality of web scraping depends on the jurisdiction and context. The European Union and some US states have stricter regulations than the rest of the world.

Disregarding those regulations is not uncommon for a simple reason: enforcing them is not always feasible. But breaking the rules can bring other forms of punishment. Publishing a study based on illegally obtained information would invite, at the very least, peers’ disapproval. Court cases can be used as a pressure tactic and drag on for years.

We recommend you stick to fully legal web scraping. It is not that difficult to do: there are many ways to acquire data legally, safely, and courteously.

Many websites allow scraping as long as you follow the rules in the robots.txt file, Terms of Service, and licensing agreements. Some of them even have dedicated APIs for data scrapers to use.
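One way to respect robots.txt programmatically is Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical robots.txt from a string so that it runs without network access. The rules, the `example.com` URLs, and the user agent name `example-scraper` are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real file lives at the site's /robots.txt path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # assumed name for this sketch

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def is_allowed(url):
    """Return True if the parsed rules permit USER_AGENT to fetch the URL."""
    return rules.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products/1"))
print(is_allowed("https://example.com/private/data"))
print(rules.crawl_delay(USER_AGENT))
```

Against a live site, the same parser can load the file itself with `set_url()` followed by `read()`. Passing this check does not replace reading the Terms of Service and licensing agreements.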

Information regarded as “plain facts” is generally freely available. Brand names, product descriptions, displayed prices, and availability are plain facts.

Private data and copyrighted materials are protected types. They can be made available with the owners’ permission, so do not hesitate to contact them.

Using a residential proxy service makes your web scraping project a lot more powerful, but that power calls for care. Multiple simultaneous requests can slow down or even shut down the targeted website. That may qualify as harming its business, even if there was no malicious intent. Make sure you take measures to protect the sites you are scraping.
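One simple protective measure is to space requests out. The sketch below is a minimal throttle that enforces a minimum interval between consecutive requests to a site. The class name `Throttle`, the interval value, and the example URLs are assumptions for illustration. The clock and sleep functions are injectable only so the behaviour can be checked without waiting.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests to one site."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Pause until min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
        return now


# Demo with a very short interval; a real scraper would use seconds, not milliseconds.
throttle = Throttle(min_interval=0.05)
for url in ["https://example.com/a", "https://example.com/b"]:
    throttle.wait()
    print("would fetch", url)  # the actual download is omitted here
```

If the site's robots.txt declares a crawl delay, that value is a sensible lower bound for `min_interval`.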

Main Applications and Benefits of Web Scraping for Business

Business Intelligence

Better decisions are informed by evidence and follow rational models. The number of data applications in business is immense; it is hard to list them all:

Competitor monitoring and analysis;

Product changes;

Dynamic price updates to match the market (built into marketplaces);

Monitoring suppliers, vendors, and new offers, e.g., in the travel and real estate industries;

Funding opportunities;

Scanning for potential hires;

Fads and fashion signals.

Copyright Protection

Web scraping is not the only tool used to protect copyrighted content, but it can be used to monitor the web for “suspiciously similar” images or texts.
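For texts, a first-pass similarity check can be sketched with Python's standard `difflib` module. The function names, the sample sentences, and the 0.8 threshold below are assumptions chosen for illustration. Character-level matching like this is only a crude filter, and image comparison would need a different technique.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a 0..1 similarity ratio between two texts, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_suspicious(original, candidates, threshold=0.8):
    """Return the candidate texts whose similarity to the original meets the threshold."""
    return [text for text in candidates if similarity(original, text) >= threshold]


# Hypothetical texts: our own copy and two scraped snippets.
ORIGINAL = "Our handmade ceramic mugs are fired twice for extra durability."
SCRAPED = [
    "Our handmade ceramic mugs are fired twice for extra strength.",
    "Weather forecast for the weekend: rain expected.",
]

for hit in find_suspicious(ORIGINAL, SCRAPED):
    print("possible copy:", hit)
```

Flagged matches still need human review before anyone is contacted about them.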

Trading Insight

Many of the world’s formal indexes and signals change daily. Few professions require monitoring them that frequently; trading is one exception. But many other predictions, from weather to markets to SEO, rely on tracking trends over time. Regular automated scraping can help build those trends without anyone updating databases by hand every day.
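Accumulating a trend mostly means storing each scraped value together with its date. The sketch below does this with Python's standard `sqlite3` module. The table layout, the function names, the metric name `example_index`, and the sample values are all assumptions made up for illustration.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a store holding one value per metric per day."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS observations ("
        "day TEXT NOT NULL, metric TEXT NOT NULL, value REAL NOT NULL, "
        "PRIMARY KEY (day, metric))"
    )
    return conn


def record(conn, day, metric, value):
    """Save one observation; re-running a scrape for the same day overwrites it."""
    conn.execute(
        "INSERT OR REPLACE INTO observations VALUES (?, ?, ?)",
        (day, metric, value),
    )
    conn.commit()


def trend(conn, metric):
    """Return (day, value) pairs for a metric, oldest first."""
    return conn.execute(
        "SELECT day, value FROM observations WHERE metric = ? ORDER BY day",
        (metric,),
    ).fetchall()


# Hypothetical values, as if a scheduled scraper had run on three days.
store = open_store()
record(store, "2024-01-01", "example_index", 101.5)
record(store, "2024-01-02", "example_index", 102.25)
record(store, "2024-01-03", "example_index", 100.75)
print(trend(store, "example_index"))
```

ISO-formatted dates sort correctly as plain text, which is why `ORDER BY day` is enough here.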

Sentiment Mining

Many social and economic phenomena are determined by perspectives and opinions, not rational facts or fundamental ethical values. Behavioral investment, brand loyalty, and political populism are based on understanding, exploiting (and sometimes shaping) public sentiment.

Unlike facts and ethics, irrationality is diverse and unpredictable. The only way to know it is to observe and listen attentively. On the web, users share opinions openly, but the scale is so large that even a single market can be too much to monitor manually. Web scrapers can help anyone track words associated with their brands, monitor specific terms, or scrape conversations and detect repeated phrases to evaluate the current situation. It sounds like something from CIA movies, but the main challenges are merely hardware and bandwidth.
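Detecting repeated phrases can start out very simply. The sketch below counts two-word phrases across a handful of posts using Python's standard `collections.Counter`. The sample posts, the brand name "Acme", and the function name `repeated_phrases` are assumptions for illustration. This is phrase counting only, not a full sentiment model.

```python
import re
from collections import Counter


def repeated_phrases(posts, n=2, min_count=2):
    """Return (phrase, count) pairs for n-word phrases seen at least min_count times."""
    counts = Counter()
    for post in posts:
        words = re.findall(r"[a-z']+", post.lower())
        # Slide a window of n words over the post and count each phrase.
        counts.update(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return [(phrase, c) for phrase, c in counts.most_common() if c >= min_count]


# Hypothetical scraped posts mentioning an imaginary brand.
POSTS = [
    "Battery life on the Acme phone is terrible",
    "Love the camera but battery life is short",
    "Acme support fixed my battery life issue",
]

print(repeated_phrases(POSTS))
```

On real data, filtering out common stop words before counting usually makes the recurring themes easier to spot.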

Customer Development

The Customer Development sales framework follows a few principles: focus on the customer (or segment) that might be the most advantageous to you; listen to their needs as explained in their own voice and understand them deeply; customize your offer according to the needs you have discovered, not the ideas you have envisioned on your own.

Web scraping cannot be the sole instrument for Customer Discovery, but it can help detect repeated user feedback patterns or fill your CRM with contextual information.

Pragmatically speaking, you also need the means to contact those potential customers. Web scraping can extract their publicly available emails or event attendance schedules and feed them to your CRM.

Learning and Continuous Education for Your Team

When your subject is narrowly specialized – artificial organs, neutral waters legislation, windmills, or silk production – it is easier to set up a scraper than monitor several forums and websites. Outside of business, patients with rare diseases often create new article alerts to monitor new treatments published anywhere on the web.

Slack, Zapier, Google, and a few other tools have similar features, but they are still limited to the most basic tasks. A dedicated scraping bot can monitor search engines and all relevant journals and report to your team daily or weekly.

Content Aggregators

If you are an aggregator, scraping might be essential for your business model. Aggregators scrape the rest of the web and accumulate cleaned-up content in one place, making money by selling traffic to advertisers. Users appreciate the ability to filter the content and the convenience of comparing products side-by-side.

In other cases, websites might aggregate or synchronize reviews and news, competitors’ schedules, or product availability: anything that is currently scattered and that users want to have in one database.

Product Descriptions

If your business is a marketplace, sometimes it is easier to scrape product descriptions and images. You will have to clean them up manually, but it is faster than endless copy-paste.

How to Do Web Scraping?

Small-scale, one-time projects can be done by hand. However, most business tasks are large-scale or repetitive: you need to monitor trends and update fast-changing information.

When you scrape only a few established websites like Wikipedia or PubMed, you might find it easiest to use the APIs they provide. Not all of them are free, but the information comes in a convenient form, and there is no need to maintain a complex tool stack.

The next option is specialized scraping solutions: tools designed exclusively for search engines, leading marketplaces, social media, major job search websites, or emails. Specialized apps have the right instruments and keep up with the changes in their niche market.

If your goal is to scrape smaller or private pages, and you do not expect any unusual needs, use ready-made scraper tools. They all aim at generic tasks, but you can save a lot of effort on the initial setup and upkeep.

When none of the above is a good fit, you can design and program your own web scraper using dedicated libraries and frameworks. It will take more time and effort, but you will be able to execute unique projects and non-trivial tasks.

Developing your own web scraper will require server setup, bandwidth solutions, and proxies. Using a cloud or private server can boost your capacity. If you learn how to use proxies for web scraping, your project is more likely to run uninterrupted, and your actual IP address stays protected from exposure.

Logging and investigating errors is a standard part of web scraper maintenance. A convenient infrastructure for troubleshooting and updating your scraper can save you from many headaches.
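A small amount of structure goes a long way for troubleshooting. The sketch below wraps any download function in retries with logging, using only Python's standard `logging` and `time` modules. The function name `fetch_with_retries`, the logger name `scraper`, and the retry and backoff values are assumptions for illustration. The `fetch` argument stands for whatever download function your scraper uses.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("scraper")  # assumed logger name


def fetch_with_retries(fetch, url, attempts=3, backoff=0.1):
    """Call fetch(url), retrying on any exception and logging every failure.

    The wait between tries doubles each time: backoff, 2*backoff, 4*backoff, ...
    The last exception is re-raised once all attempts are used up.
    """
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            log.warning("attempt %d/%d failed for %s: %s", attempt, attempts, url, exc)
            if attempt == attempts:
                log.error("giving up on %s", url)
                raise
            time.sleep(backoff * 2 ** (attempt - 1))
```

Because every failure is logged with the URL and the error message, the log doubles as a list of pages to investigate when a site changes its layout or starts blocking requests.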

Web scraping is instrumental whenever information is fragmented, scattered, or fast-changing and would benefit from side-by-side presentation or trend accumulation. Spreadsheets and manual scraping are unlikely to go out of fashion, but automation is a worthy investment at any larger scale.

This post was originally published on the SOAX blog.
